大家讲道理2017-04-18 09:27:14

Here is an approach that does not split the output; itertools.product lets you write it more concisely:

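    import itertools

    with open('zidian.txt', 'w') as z:
        with open('file1.txt') as f1, open('file2.txt') as f2:
            # product() pairs every line of file1 with every line of file2
            # (note: it reads both inputs fully into memory before yielding)
            for a, b in itertools.product(f1, f2):
                a, b = a.strip(), b.strip()
                print(a+b, file=z)

To split the output across several files: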

    import itertools

    with open('file2.txt') as f2:
        # group the lines of file2 five at a time, keyed by line index // 5
        for key, group in itertools.groupby(enumerate(f2), lambda t: t[0]//5):
            with open('file1.txt') as f1, open('zidian-{}.txt'.format(key), 'w') as z:
                for a, (_, b) in itertools.product(f1, group):
                    a, b = a.strip(), b.strip()
                    print(a+b, file=z)

A few remarks on problems in your original code:

f = open('zidian.txt','w'): you open the file here but never close it. It is better to use with when reading and writing files.

dict.readlines(): unless you absolutely have to, do not use readlines, remember!! See the article "Text format conversion code optimization".

In addition, the names dic and dict have a specific meaning in Python (dict is the built-in dictionary type). Even moderately experienced Python programmers will assume they refer to a Python dictionary, which easily causes misunderstanding.
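On the readlines point above, a minimal sketch of the alternative: iterating over the file object itself reads one line at a time instead of loading the whole file. The function name `combine` and the sample data are made up for illustration, and `io.StringIO` stands in for an open text file.

```python
import io

def combine(names, passwords):
    """Yield name+password for every pair.

    `names` may be an open file and is read lazily, one line at a time.
    `passwords` must be re-iterable (for example a list), because it is
    walked once per name.
    """
    for name in names:  # iterating a file object yields one line per step
        name = name.strip()
        for password in passwords:
            yield name + password.strip()

# io.StringIO stands in for open('file1.txt') in this sketch
fake_file1 = io.StringIO("zhangwei\nwangwei\n")
result = list(combine(fake_file1, ["123\n", "888\n"]))
print(result)
```

Only the password list has to fit in memory here; the name file can be arbitrarily large.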

Questions I answered: Python-QA

阿神2017-04-18 09:27:14

Uh, I misunderstood the question, so I rewrote the code. I admit that using filehandler.readlines() here is a slap in my own face~

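In fact, if the only concern is that the generated file is rather large, *nix systems ship with a small tool called split that is well suited to this; it can break a large file into as many smaller ones as you like.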
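On the command line this would be something like `split -l 5 file2.txt`. If you would rather stay in Python, the same line-count splitting can be sketched with itertools.islice. The helper name `split_lines` is my own, not part of any library, and this is only a rough equivalent that yields the chunks rather than writing files.

```python
import itertools

def split_lines(lines, lines_per_chunk):
    """Yield lists of at most `lines_per_chunk` items, in order."""
    iterator = iter(lines)
    while True:
        # islice pulls the next `lines_per_chunk` items without reading further
        chunk = list(itertools.islice(iterator, lines_per_chunk))
        if not chunk:
            return
        yield chunk

chunks = list(split_lines(["a\n", "b\n", "c\n", "d\n", "e\n"], 2))
print(chunks)
```

Because `lines` can be an open file, only one chunk is held in memory at a time.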

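If you do not need to split the results, the code below can be simplified by changing the write2file function as follows; the id_generator function and its related modules (random, string) can then be removed.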

    def write2file(item):
        with open("dict.txt", "a") as fh, open("file1.txt", "r") as f1:
            for i in f1.readlines():
                for j in item:
                    fh.write("{}{}\n".format(i.strip(), j))

The full version, which splits the results across files:

    import random
    import string
    from multiprocessing.dummy import Pool

    def id_generator(size=8, chars=string.ascii_letters + string.digits):
        # random 8-character suffix for the output file name
        return ''.join(random.choice(chars) for _ in range(size))

    def generate_index(n, step=5):
        # yield (start, stop) slice bounds; the last chunk runs to the end
        for i in range(0, n, step):
            if i + step < n:
                yield i, i+step
            else:
                yield i, None

    def write2file(item):
        ext_id = id_generator()
        with open("dict_{}.txt".format(ext_id), "w") as fh, open("file1.txt", "r") as f1:
            for i in f1.readlines():
                for j in item:
                    fh.write("{}{}\n".format(i.strip(), j))

    def multi_process(lst):
        pool = Pool()
        pool.map(write2file, lst)  # use the argument, not the global b_lst
        pool.close()
        pool.join()

    if __name__ == "__main__":
        with open("file2.txt") as f2:
            _b_lst = [_.strip() for _ in f2.readlines()]
        b_lst = (_b_lst[i: j] for i, j in generate_index(len(_b_lst), 5))
        multi_process(b_lst)
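To see how the chunk boundaries come out, here is generate_index copied from the code above and run on a small made-up list of 12 entries (the `pw0`, `pw1`, ... values are placeholders, not real data):

```python
def generate_index(n, step=5):
    # Same logic as in the answer: yield (start, stop) slice bounds
    for i in range(0, n, step):
        if i + step < n:
            yield i, i + step
        else:
            yield i, None  # None makes the last slice run to the end of the list

passwords = ["pw{}".format(k) for k in range(12)]  # 12 placeholder entries
bounds = list(generate_index(len(passwords), 5))
chunks = [passwords[i:j] for i, j in bounds]

print(bounds)                    # slice bounds for each chunk
print([len(c) for c in chunks])  # size of each chunk
```

The last pair uses None as the stop index, so the final slice simply picks up whatever is left over when the length is not a multiple of 5.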

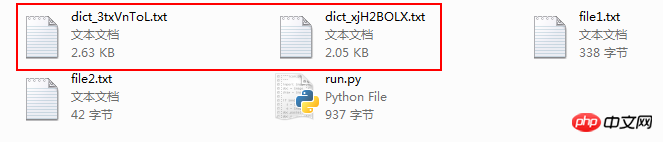

The result is shown in the figure: several text files named dict_ followed by an 8-character random string.

The contents of one of them, dict_3txVnToL.txt:

    zhangwei123
    zhangwei123456
    zhangwei@123
    zhangwei888
    zhangwei999
    wangwei123
    wangwei123456
    wangwei@123
    wangwei888
    wangwei999
    ...

Just for the fun of it, here is another version:

    with open("file1") as f1, open("file2") as f2, open("new", "w") as new:
        b = f2.readline().strip()
        while b:
            # take the next name, then pair it with the next five lines of file2
            a = f1.readline().strip()
            for i in range(5):
                if b:
                    new.write("{}{}\n".format(a, b))
                else: break
                b = f2.readline().strip()

It reads only one line at a time, so it copes with files of any size and is easy on memory. The result:

    $ head new
    zhangwei123
    zhangwei123456
    zhangwei@123
    zhangwei888
    zhangwei999
    wangwei666
    wangwei2015
    wangwei2016
    wangwei521
    wangwei123

PS: As mentioned above, try to avoid the readlines method. When memory is limited, running into a very large file will be a disaster.

阿神2017-04-18 09:27:14

Read each line of file2 into a list, then take five items from the list at a time.

I don't have Python at hand, so the code is written by hand, untested, and may contain errors. Just take it as an illustration of the idea.

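    names = []
    with open('file1.txt', 'r') as username:
        for line in username.readlines():
            names.append(line)

    passwords = []  # renamed from `list`, which shadows the built-in
    with open('file2.txt', 'r') as password_file:  # renamed from `dict`
        for line in password_file.readlines():
            passwords.append(line)

    for i in range(len(passwords) // 5):  # integer division: range() needs an int
        f = open('zidian' + str(i + 1) + '.txt', 'w')
        for j in range(5):
            for name in names:
                f.write(name.strip() + passwords[i * 5 + j].strip() + '\n')
        f.close()
    # The remainder after dividing by 5 (the last few lines) should go into one more file; that code is omitted here

PHP中文网2017-04-18 09:27:14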

@dokelung's itertools.cycle is a great idea; here is another way:

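    with open('file2') as file2_handle:
        passwords = file2_handle.readlines()

    # Of course, as said above, readlines is not ideal, but that is not absolute: as long as the file is not too big for memory, readlines can noticeably speed the program up (this claim is debatable; it assumes you are not using the file-reading I/O time to do other work)
    # As I see it, a file of a few million lines is no big deal; I routinely read files over 10 GB with Python
    # Still, try to avoid readlines; it is used here only because it makes the algorithm below easier to write

    with open('file1') as file1_handle:
        # pair each name with a password, wrapping around the password list
        name_password_dict = ['%s%s' % (line.rstrip(), passwords[i%len(passwords)]) for i, line in enumerate(file1_handle)]

    # Once you have name_password_dict you can do whatever you like with it, whether splitting it into files or anything else

高洛峰2017-04-18 09:27:14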

Simply add a counter, line: each time a pair of values is combined, do line += 1; when line reaches 5, close the file, open a new one, and reset line to 0.
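A rough sketch of that counter idea, under some assumptions of mine: the function name `write_in_groups`, the `zidian-N.txt` naming, and the sample entries are all made up for illustration, and a temporary directory is used so the demo leaves nothing behind.

```python
import os
import tempfile

def write_in_groups(lines, directory, group_size=5):
    """Write `lines` to successive files, starting a new file every `group_size` lines."""
    paths = []
    out = None
    count = 0  # the counter: lines written to the current file
    for line in lines:
        if out is None:
            # open the next output file only when there is something to write
            path = os.path.join(directory, "zidian-{}.txt".format(len(paths)))
            out = open(path, "w")
            paths.append(path)
        out.write(line + "\n")
        count += 1
        if count == group_size:
            out.close()
            out = None
            count = 0  # reset for the next file
    if out is not None:
        out.close()  # close the last, partially filled file
    return paths

with tempfile.TemporaryDirectory() as tmp:
    entries = ["pw{}".format(i) for i in range(7)]  # 7 placeholder lines
    paths = write_in_groups(entries, tmp, group_size=5)
    sizes = []
    for path in paths:
        with open(path) as f:
            sizes.append(sum(1 for _ in f))  # count lines without readlines
    print(sizes)
```

Opening the next file lazily avoids leaving an empty trailing file when the number of lines is an exact multiple of the group size.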