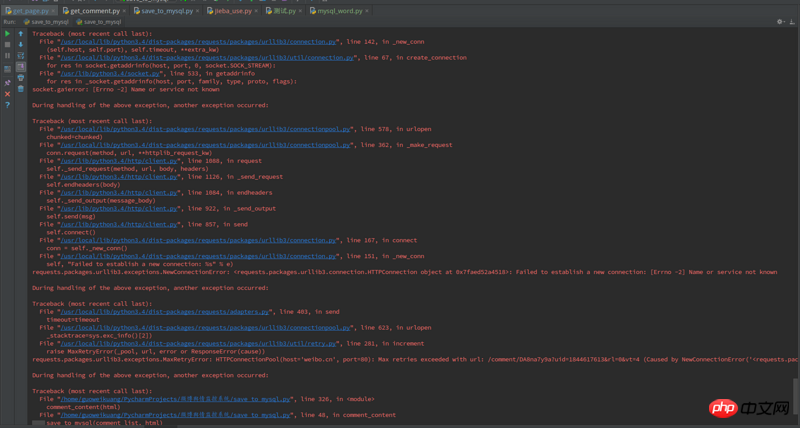

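After simulating a Weibo login with cookies, I want to scrape several pages of Weibo posts. However, an error occurs as soon as the scraper reaches the second page. This never happened before. I'm using the Requests library both for the simulated login and for fetching the content.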
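Both the question and the answer rely on a cookie copied from a logged-in browser. Here is a minimal sketch that loads such a raw `name=value; name2=value2` string into the cookie jar of a `requests.Session`. The helper name `session_from_cookie_string` and the cookie names in the test are hypothetical, and the parsing assumes that no cookie value contains a `;`.

```python
import requests


def session_from_cookie_string(cookie_string):
    """Hypothetical helper: build a Session whose cookie jar holds every
    name=value pair from a raw 'a=1; b=2' Cookie header string."""
    session = requests.Session()
    for pair in cookie_string.split(';'):
        # partition keeps any '=' inside the value (e.g. 'x=a=b' -> 'a=b')
        name, sep, value = pair.strip().partition('=')
        if sep and name:  # skip empty fragments and pairs without '='
            # No domain is given, so the jar sends this cookie to every host
            session.cookies.set(name, value)
    return session
```

One design upside over a hard-coded `'Cookie'` header is that the session's jar also picks up cookies the server sets later through `Set-Cookie`.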

Here is the code:

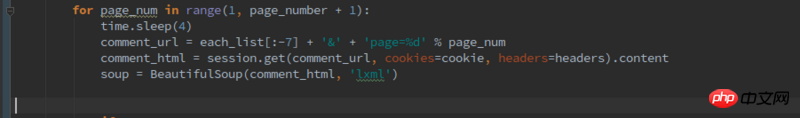

The loop that scrapes the pages is here:

The code where the error occurs is as follows:

I Googled it. Some people say the cause is that requests uses up too many connection resources when it sends HTTP requests. A detailed explanation is in:

"Encountering Failed to establish a new connection when using requests in Python" (Python使用requests時遇到Failed to establish a new connection)
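If the connection-exhaustion explanation applies, a common remedy is to send every request through one shared `requests.Session`, which keeps a pool of keep-alive connections. Failed connection attempts can also be retried with backoff. The following is a minimal sketch under those assumptions. `make_session` and its default values are illustrative choices, not settings taken from the question.

```python
import requests
from requests.adapters import HTTPAdapter
from urllib3.util.retry import Retry


def make_session(pool_size=10, retries=3, backoff=0.5):
    """Hypothetical helper: one Session that reuses pooled connections and
    retries failed requests with exponential backoff between attempts."""
    session = requests.Session()
    retry = Retry(total=retries, backoff_factor=backoff)
    adapter = HTTPAdapter(
        pool_connections=pool_size,  # number of per-host pools to cache
        pool_maxsize=pool_size,      # max connections kept open per host
        max_retries=retry,
    )
    # Route both schemes through the same pooled, retrying adapter
    session.mount('http://', adapter)
    session.mount('https://', adapter)
    return session
```

Usage would be `session = make_session()` once at startup, followed by `session.get(...)` for every page, instead of creating a new connection per request.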

阿神 (2017-04-17 17:56:35)

Here is the code. It only fetches 10 pages; fill in your own cookies.

```python
# coding=utf-8
import threading
import time

import requests
from pyquery import PyQuery as Q

# One shared Session, so its connection pool is reused by every request
session = requests.Session()
session.headers.update({
    'Cookie': '',  # paste your logged-in weibo.cn cookie string here
    'User-Agent': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_11_5) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/51.0.2704.63 Safari/537.36',
})


def get_title(titles, page):
    """Fetch one timeline page and append the text of every span.ctt to titles."""
    r = session.get('http://weibo.cn/gzyhl?page={0}'.format(page))
    html = r.text.encode('utf-8')
    for span in Q(html).find('span.ctt'):
        titles.append(Q(span).text())  # list.append is thread-safe in CPython


start = time.time()

result, threadPool = [], []
# Start one thread per page (pages 1 to 10)
for page in range(1, 11):
    th = threading.Thread(target=get_title, args=(result, page))
    th.start()
    threadPool.append(th)

# Wait for every thread to finish
for th in threadPool:
    th.join()

end = time.time()

print('times: {0}, total: {1}'.format(end - start, len(result)))
```
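The answer starts one thread per page, which is fine for 10 pages. For many more pages, capping how many requests run at once may be gentler on the connection pool. Below is a minimal Python 3 sketch using `concurrent.futures.ThreadPoolExecutor`. `fetch_pages` is hypothetical. `fetch_page` stands for any function that takes a page number and returns a list of items, such as a variant of `get_title` that returns its titles instead of appending them.

```python
from concurrent.futures import ThreadPoolExecutor


def fetch_pages(fetch_page, pages, max_workers=4):
    """Hypothetical helper: run fetch_page(page) for each page on at most
    max_workers threads and return all items flattened in page order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # map() yields results in input order, whatever order they finish in
        per_page = list(pool.map(fetch_page, pages))
    return [item for items in per_page for item in items]
```

Unlike the shared `result` list in the answer, the returned list keeps page order, because `Executor.map` yields results in the order of its inputs.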