When I send a GET request with requests, I only get part of the HTML source. Here is my code:

    import requests

    def get_url(self, url=None, proxies=None):
        header = {
            'User-Agent': 'Mozilla/5.0 (X11; Ubuntu; Linux x86_64; rv:46.0) Gecko/20100101 Firefox/46.0',
            'Content-Type': 'application/x-www-form-urlencoded',
            'Connection': 'Keep-Alive',
            'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8'
        }
        # Try each proxy in turn and return the first successful response body.
        for prox in proxies:
            try:
                r = requests.get(url, proxies=prox, headers=header)
                if r.status_code != 200:
                    continue
                print("Connected successfully via {0} >>".format(prox))
                return r.content
            except requests.RequestException:
                # Move on to the next proxy instead of giving up on the first failure.
                continue
        # No proxy produced a 200 response.
        return None

The proxies parameter is a list of proxies. This code tries each proxy in turn and returns as soon as a request succeeds.

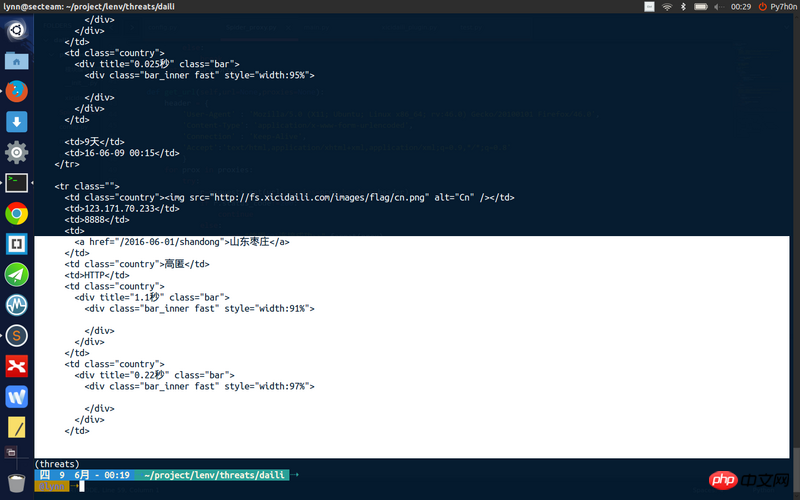

But the page source I get back is incomplete.

巴扎黑2017-04-17 17:55:46

There are a few possible reasons:

1. Some of the content may be loaded via AJAX, in which case requests.get cannot return the full page content. A tool such as Firebug can confirm whether this is the cause.

2. The content may only be available after logging in.
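One rough way to test the first theory, even before reaching for a capture tool, is to pick a few strings you can see in the browser and check whether they occur in the raw response body. The sketch below is only an illustration: `missing_snippets`, the stand-in HTML, and the sample strings are hypothetical and do not come from the question.

```python
def missing_snippets(html, snippets):
    """Return the snippets that do not appear in the raw HTML text."""
    return [s for s in snippets if s not in html]


# Stand-in for a response body; in practice this would be the text
# returned by requests.get(url, headers=header).
raw_html = "<html><body><div id='list'></div><script src='app.js'></script></body></html>"

# Hypothetical strings copied from what the browser displays.
visible_in_browser = ["id='list'", "203.0.113.7"]

print(missing_snippets(raw_html, visible_in_browser))  # prints ['203.0.113.7']
```

Anything reported as missing was most likely added by JavaScript after the page loaded, or served only to a logged-in session. Repeating the check with the browser's cookies attached helps tell the two apart.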

PHP中文网2017-04-17 17:55:46

My code gets the entire content of the page, but it does not use the proxies parameter of requests.

Can you get the full content when you do not use a proxy?

My code:

    import requests

    headers = {
        'User-Agent': 'Mozilla/5.0 (X11; Ubuntu; Linux x86_64; rv:46.0) Gecko/20100101 Firefox/46.0',
        'Content-Type': 'application/x-www-form-urlencoded',
        'Connection': 'Keep-Alive',
        'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8'
    }

    html = requests.get('http://www.xicidaili.com/nn/', headers=headers).text

    print(html)

PHP中文网2017-04-17 17:55:46

Spotted an Ubuntu user... with a theme installed, even... I'm just passing by...

怪我咯2017-04-17 17:55:46

The first answer already explains it clearly. The page is probably loading part of its content asynchronously. I recommend capturing the traffic with Fiddler to see whether there are any asynchronous requests.

ringa_lee2017-04-17 17:55:46

Here is how I would troubleshoot this. [Veterans, please go easy on me]

1. Capture the traffic with the Network panel in Chrome's developer tools (other tools work too) and compare the response with what your code fetched. If the two are the same, the missing content is rendered by JavaScript.

2. If the results in step 1 differ, consider the effect of the other request headers. In general, cookies affect access rights, and User-Agent affects the DOM structure and content, so check these two first. (Some sites also use unusual headers that need special handling.)

3. Send a test request through a proxy to check for problems such as your IP being blocked.

4. If the page does turn out to be rendered by JavaScript, there are two solutions. One is to find and call the API endpoints behind it, which takes a keen eye for patterns; capture the traffic as in step 1. The other is to run the JavaScript rendering on the server and take the final rendered page.
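Steps 1 and 3 can be partly scripted. The following is a minimal sketch, assuming you have saved the response body from the browser's Network panel as text; `diff_responses` and `body_sizes` are hypothetical helper names, and the `get` parameter exists only so the second helper can be tried with a stub instead of real network access.

```python
import difflib


def diff_responses(browser_html, fetched_html, max_lines=20):
    """Step 1: return up to max_lines changed lines between two HTML documents."""
    diff = difflib.unified_diff(
        browser_html.splitlines(),
        fetched_html.splitlines(),
        fromfile="browser",
        tofile="requests",
        lineterm="",
        n=0,  # no context lines, only the differences
    )
    # Drop the two file-header lines, then the "@@" hunk headers.
    changed = [line for line in list(diff)[2:] if not line.startswith("@@")]
    return changed[:max_lines]


def body_sizes(url, proxy_list, headers=None, get=None):
    """Step 3: return body sizes in bytes for a direct request and each proxy.

    `get` defaults to requests.get; passing a stub is an assumption made
    here for testing, not something the original code does.
    """
    if get is None:
        import requests  # third-party dependency, imported only when needed
        get = requests.get
    sizes = {"direct": len(get(url, headers=headers, timeout=10).content)}
    for index, prox in enumerate(proxy_list):
        key = "proxy %d" % index
        try:
            response = get(url, proxies=prox, headers=headers, timeout=10)
            sizes[key] = len(response.content)
        except Exception:  # a dead proxy should not stop the comparison
            sizes[key] = None
    return sizes
```

If `diff_responses` comes back empty, the browser received the same HTML and the missing parts are probably produced by JavaScript, which leads to step 4. If a proxy's size is far below the `direct` one, that proxy may be truncating or replacing the page.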