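**Requirement:**

There is a log file of about 15 GB in which every line is a string of digits, 3 to 12 digits long. I need to find the 10 numbers that occur most often in the file.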

The problem I ran into:

while(!feof(fp)){

fgets(mid,1000,fp); //读取一行内容

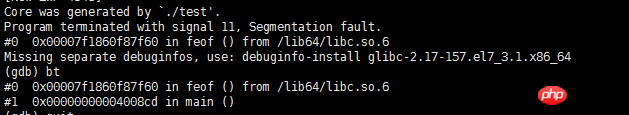

}我用这种方法读取文件,一直报 Segmentation fault (core dumped)。gbd调试情况如下:

I need a way to read large files. Any expert advice would be appreciated.

PHP中文网2017-04-17 15:27:39

Reading the file this way is very inefficient, and it is not an approach that suits any real workload. Counting and ranking word frequencies in a very large text file is the most typical problem that Hadoop solves.

Here is an article with source code for a similar task:

Write Hadoop word statistics program in C language

If you really don't want to learn Hadoop, you can get the same result without it by running a plain shell pipeline: cat numbers.txt | ./mapper | sort | ./reducer

Here mapper and reducer are the two programs from the article above: the mapper and the reducer (the summarizer).
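For anyone who does not have the article's programs at hand, here is a minimal sketch of what a reducer for this kind of pipeline could look like. It is not the code from the linked article. It assumes the input is the raw numbers, already sorted so that identical lines are adjacent (as in `sort numbers.txt | ./reducer`, with no mapper at all), one per line and shorter than 64 bytes. `struct entry`, `top_insert` and `reduce_sorted` are names made up for this illustration.

```c
#include <stdio.h>
#include <string.h>

#define TOP_N 10
#define MAX_LINE 64   /* assumption: every line fits in 63 characters */

struct entry {
    char key[MAX_LINE];
    unsigned long long count;
};

/* Insert (key, count) into an array kept sorted by descending count.
 * The array holds at most TOP_N entries; returns the new entry count. */
static size_t top_insert(struct entry *top, size_t n,
                         const char *key, unsigned long long count)
{
    size_t pos = n;
    while (pos > 0 && top[pos - 1].count < count)
        pos--;
    if (pos == TOP_N)
        return n;                  /* too small to enter a full list */
    if (n < TOP_N)
        n++;
    /* Shift smaller entries down by one; the last one falls off if full. */
    memmove(&top[pos + 1], &top[pos], (n - 1 - pos) * sizeof top[0]);
    snprintf(top[pos].key, MAX_LINE, "%s", key);
    top[pos].count = count;
    return n;
}

/* Read sorted lines from `in`, count each run of identical lines and
 * keep the TOP_N most frequent ones in `top`. Returns the entry count. */
size_t reduce_sorted(FILE *in, struct entry *top)
{
    char line[MAX_LINE], prev[MAX_LINE] = "";
    unsigned long long run = 0;
    size_t n = 0;

    while (fgets(line, sizeof line, in) != NULL) {
        line[strcspn(line, "\r\n")] = '\0';   /* strip the line ending */
        if (line[0] == '\0')
            continue;                          /* skip blank lines */
        if (run > 0 && strcmp(line, prev) == 0) {
            run++;
        } else {
            if (run > 0)
                n = top_insert(top, n, prev, run);
            strcpy(prev, line);
            run = 1;
        }
    }
    if (run > 0)
        n = top_insert(top, n, prev, run);     /* flush the final run */
    return n;
}
```

A `main` for this would declare `struct entry top[TOP_N]`, call `reduce_sorted(stdin, top)` and print the returned entries. Only one run and ten entries are held in memory at a time, so the 15 GB size matters to `sort`, not to the reducer.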

If you still want to know how to read this data straight from the file, there are two options. One is to read each value as a 64-bit integer:

long long x = 0; // a 64-bit integer variable
while (fscanf(fp, "%lld", &x) == 1) { // stops at end of file or on bad input
// .... process x here
}

Another way is to read each value as a string:

char mid[256];
while (fscanf(fp, "%255s", mid) == 1) { // the width limit keeps fscanf inside the buffer
// .... process mid here
}

In short, do not read line by line. I'm curious what you do with each line after reading it. My guess is that the crash happens during memory allocation. Are you planning to read the entire file into memory before processing it?
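To make the point about not loading the whole file concrete, here is a rough sketch of counting while streaming, using the 64-bit integer reading style. Only the distinct values and their counters stay in memory. The hash table and all of its names (`struct table`, `table_add`, `count_stream`, `table_top`) are invented for this example, and it assumes the distinct values fit in RAM. Note that parsing as integers treats `007` and `7` as the same number; if leading zeros matter in your log, count strings instead.

```c
#include <stdio.h>
#include <stdlib.h>

struct slot {
    long long key;
    unsigned long long count;      /* 0 marks an empty slot */
};

struct table {
    struct slot *slots;
    size_t cap;                    /* always a power of two */
    size_t used;
};

static size_t hash_key(long long key, size_t cap)
{
    /* Multiplicative (Fibonacci) hashing, masked down to the table size. */
    unsigned long long h = (unsigned long long)key * 0x9E3779B97F4A7C15ULL;
    return (size_t)(h >> 32) & (cap - 1);
}

/* cap must be a nonzero power of two. Returns 0 on success, -1 on failure. */
int table_init(struct table *t, size_t cap)
{
    t->slots = calloc(cap, sizeof *t->slots);
    t->cap = cap;
    t->used = 0;
    return t->slots ? 0 : -1;
}

static int table_grow(struct table *t);

/* Add n occurrences of key, using linear probing to resolve collisions. */
int table_add(struct table *t, long long key, unsigned long long n)
{
    if ((t->used + 1) * 4 > t->cap * 3 && table_grow(t) != 0)
        return -1;
    size_t i = hash_key(key, t->cap);
    while (t->slots[i].count != 0 && t->slots[i].key != key)
        i = (i + 1) & (t->cap - 1);
    if (t->slots[i].count == 0) {
        t->slots[i].key = key;
        t->used++;
    }
    t->slots[i].count += n;
    return 0;
}

/* Double the capacity and reinsert every occupied slot. */
static int table_grow(struct table *t)
{
    struct table bigger;
    if (table_init(&bigger, t->cap * 2) != 0)
        return -1;
    for (size_t i = 0; i < t->cap; i++)
        if (t->slots[i].count != 0)
            table_add(&bigger, t->slots[i].key, t->slots[i].count);
    free(t->slots);
    *t = bigger;
    return 0;
}

/* Count every integer in the stream; stops at EOF or the first bad token. */
int count_stream(FILE *in, struct table *t)
{
    long long x;
    while (fscanf(in, "%lld", &x) == 1)
        if (table_add(t, x, 1) != 0)
            return -1;
    return 0;
}

/* Copy up to k of the most frequent slots into out, most frequent first. */
size_t table_top(const struct table *t, struct slot *out, size_t k)
{
    size_t n = 0;
    for (size_t i = 0; i < t->cap; i++) {
        struct slot s = t->slots[i];
        if (s.count == 0)
            continue;
        size_t pos = n;
        while (pos > 0 && out[pos - 1].count < s.count)
            pos--;
        if (pos == k)
            continue;              /* too small to enter a full list */
        if (n < k)
            n++;
        for (size_t j = n - 1; j > pos; j--)
            out[j] = out[j - 1];
        out[pos] = s;
    }
    return n;
}
```

Typical use would be `table_init(&t, 1024)`, then `count_stream(fp, &t)`, then `table_top(&t, top, 10)`. If the number of distinct values turns out to be too large for memory, the sort-based pipeline is the safer route.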

PHP中文网2017-04-17 15:27:39

Since the question asks for Linux + C, here is one idea:

Consider memory-mapping the file (mmap): map one part of the file at a time and process the parts in sequence.
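A possible shape for the windowed mmap idea, as a sketch only. It assumes a 64-bit Linux build (so `off_t` can address a 15 GB file) and a `window` larger than one page plus the longest line; otherwise it gives up and returns -1. `for_each_line_mmap`, `line_fn`, `struct line_stats` and `count_line` are hypothetical names for this example, and the callback only counts lines to keep things short.

```c
#define _POSIX_C_SOURCE 200809L   /* expose the POSIX declarations in strict modes */
#include <fcntl.h>
#include <string.h>
#include <sys/mman.h>
#include <sys/stat.h>
#include <sys/types.h>
#include <unistd.h>

/* Called once per line; `line` is NOT NUL-terminated, `len` excludes '\n'. */
typedef void (*line_fn)(const char *line, size_t len, void *ctx);

struct line_stats {
    unsigned long long lines;
    unsigned long long bytes;
};

/* Example callback: count non-empty lines and their total length. */
void count_line(const char *line, size_t len, void *ctx)
{
    struct line_stats *s = ctx;
    (void)line;
    if (len == 0)
        return;
    s->lines++;
    s->bytes += len;
}

/* Map the file one window at a time and hand every line to fn.
 * Returns 0 on success, -1 on error or if a line does not fit the window. */
int for_each_line_mmap(const char *path, size_t window, line_fn fn, void *ctx)
{
    long page = sysconf(_SC_PAGESIZE);
    int fd = open(path, O_RDONLY);
    if (fd < 0)
        return -1;
    struct stat st;
    if (fstat(fd, &st) != 0) {
        close(fd);
        return -1;
    }
    off_t size = st.st_size;
    off_t pos = 0;                       /* start of the next unread line */

    while (pos < size) {
        off_t base = pos - pos % page;   /* mmap offsets must be page-aligned */
        size_t len = (size - base < (off_t)window) ? (size_t)(size - base)
                                                   : window;
        char *p = mmap(NULL, len, PROT_READ, MAP_PRIVATE, fd, base);
        if (p == MAP_FAILED) {
            close(fd);
            return -1;
        }
        int last = (base + (off_t)len == size);   /* window reaches EOF */
        size_t i = (size_t)(pos - base);
        off_t before = pos;

        while (i < len) {
            char *nl = memchr(p + i, '\n', len - i);
            if (nl == NULL && !last)
                break;                   /* line continues in the next window */
            size_t end = nl ? (size_t)(nl - p) : len;
            fn(p + i, end - i, ctx);
            i = nl ? end + 1 : len;
        }
        pos = base + (off_t)i;
        munmap(p, len);
        if (pos == before) {             /* no progress: window too small */
            close(fd);
            return -1;
        }
    }
    close(fd);
    return 0;
}
```

Each new window starts at the page boundary just below the first unfinished line, so a line cut off at the end of one window is seen whole in the next and nothing has to be copied. In real use the callback would feed a counter structure instead of `struct line_stats`, and a window of tens of megabytes keeps the number of mmap calls low.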

PHP中文网2017-04-17 15:27:39

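Consider using split to break the file into pieces and then sorting them:

# Split large.txt into pieces of 100 lines each; the pieces are named with the prefix prefix_
split -l 100 large.txt prefix_

# Sort the lines of one piece in descending numeric order
sort -rn prefix_ab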