The code isn't long; here it is. It crawls the content of every chapter of the novel 雪中悍刀行.

var http = require('http');
var $ = require('cheerio');
var async = require('async');
var iconv = require('iconv-lite');
var fs = require('fs');

var chapterNo = 1;
var url = 'http://www.biquku.com/0/761/',
    hrefList = {};
var curCount = 0;

// Fetch one chapter page and pass the text of its #content element to cb.
var getChapter = function(url, cb) {
    ++curCount;
    console.log('Fetching ' + url + ', ' + curCount + ' requests in flight');
    var req = http.request(url, function(res) {
        var buffer_arr = [];
        var buffer_len = 0;
        if (res.statusCode == 200) {
            res.on('data', function(chunk) {
                buffer_arr.push(chunk);
                buffer_len += chunk.length;
            });
            res.on('end', function() {
                // Decode the raw bytes as GBK before parsing the HTML.
                var $content = $(iconv.decode(Buffer.concat(buffer_arr, buffer_len), 'gbk')).find('#content').text();
                --curCount;
                cb(null, $content);
            });
        } else {
            // Any non-200 status: log it and request the same URL again.
            console.log("status: " + res.statusCode);
            getChapter(url, cb);
        }
    });
    req.on('error', function(err) {
        console.log('request-err');
        console.error(err);
    });
    req.end();
};

// Fetch the table of contents, build one task per chapter link, then run them.
var req = http.request(url, function(res) {
    var buffer_arr = [];
    var buffer_len = 0;
    res.on('data', function(chunk) {
        buffer_arr.push(chunk);
        buffer_len += chunk.length;
    });
    res.on('end', function() {
        var $html = $(iconv.decode(Buffer.concat(buffer_arr, buffer_len), 'gbk'));
        var $urls = $html.find('#list>dl>dd>a');
        var $a = '';
        for (var i = 0; i < $urls.length; i++) {
            $a = $($urls[i]);
            // Key each task by chapter title; the closure captures the chapter URL.
            hrefList[$a.text()] = (function(url) {
                return function(cb) {
                    setTimeout(function() {
                        getChapter(url, cb);
                    }, 0);
                };
            })(url.concat($a.attr('href')));
        }
        console.time('novel');
        // Run the chapter tasks with at most 20 in flight at once.
        async.parallelLimit(hrefList, 20, function(err, res) {
            if (err) {
                console.log("parallel-err:");
                console.error(err);
            } else {
                // Write each chapter to its own file, named after the chapter title.
                for (var key of Object.keys(res)) {
                    var fileName = './' + key + '.txt';
                    (function(key) {
                        fs.writeFile(fileName, res[key], function(err) {
                            if (err) {
                                console.log('writefile-err:');
                                console.error(err);
                            } else {
                                console.log(key + ': success');
                            }
                        });
                    })(key);
                }
                console.timeEnd('novel');
            }
        });
    });
});
req.on('error', function(e) {
    console.error(e);
});
req.end();

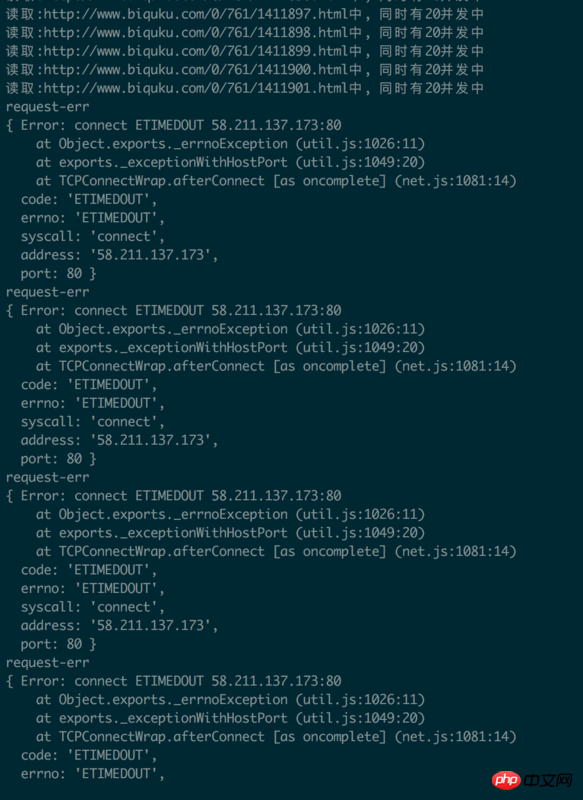

And below is where the request reports an error.

Could anyone tell me how to fix this? Also, does this count as concurrent requests, given that I issue the HTTP requests through setTimeout(func, 0)?

Later testing showed that a little over 200 chapters can be read successfully, but the error occurs once it gets to around 300.

阿神 2017-04-17 14:53:15

Yes, your requests are indeed concurrent. Isn't a limit of 20 a bit high? The server may not be able to handle it. Try lowering it, for example to 10 or 5 concurrent requests.
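To make the point about the limit concrete, here is a minimal, dependency-free sketch of what a concurrency cap does. `runLimited` and `fakeFetch` are hypothetical names made up for this illustration; `runLimited` is only a simplified stand-in for the idea behind `async.parallelLimit`, not its actual implementation, and the timers stand in for real HTTP requests. It suggests that the number of requests in flight is governed by the limit argument, and that wrapping each call in `setTimeout(func, 0)` is not what makes the requests concurrent.

```javascript
// Hypothetical helper: run callback-style tasks with at most `limit`
// of them in flight at the same time. Results keep the task order.
function runLimited(tasks, limit, done) {
  var results = new Array(tasks.length);
  var next = 0;      // index of the next task to start
  var active = 0;    // tasks currently in flight
  var finished = 0;  // tasks that completed successfully
  var failed = false;

  if (tasks.length === 0) return done(null, results);

  function launch() {
    // Start tasks until the cap is reached or nothing is left to start.
    while (!failed && active < limit && next < tasks.length) {
      (function (index) {
        active++;
        tasks[index](function (err, value) {
          active--;
          if (failed) return;
          if (err) { failed = true; return done(err); }
          results[index] = value;
          finished++;
          if (finished === tasks.length) return done(null, results);
          launch(); // a slot was freed, so start the next task
        });
      })(next++);
    }
  }
  launch();
}

// Simulated chapter fetch (an assumption for the demo): records how many
// "requests" are in flight and completes after a short timer.
var inFlight = 0, peak = 0;
function fakeFetch(id) {
  return function (cb) {
    inFlight++;
    peak = Math.max(peak, inFlight);
    setTimeout(function () {
      inFlight--;
      cb(null, 'chapter ' + id);
    }, 5);
  };
}

var tasks = [];
for (var i = 0; i < 12; i++) tasks.push(fakeFetch(i));

runLimited(tasks, 5, function (err, results) {
  console.log('peak in flight:', peak, 'results:', results.length);
});
```

With a limit of 5 the peak never goes above 5, however many tasks are queued. In the posted code the equivalent change is just the second argument of `async.parallelLimit`.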

Update: I just ran your code on my machine and the error from the question did not appear. Another possible cause is an unstable network connection on your side.
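If the network is the culprit, one common way to cope is to retry a failed request a bounded number of times and then report the failure instead of waiting forever. The sketch below is only an illustration under assumptions: `withRetry` and `flakyFetch` are hypothetical names, and the simulated task fails twice before succeeding. It also assumes the wrapped task reports failures through its callback; in the code from the question the `req.on('error')` handler only logs, so it would need to call `cb(err)` for a wrapper like this to have any effect.

```javascript
// Hypothetical helper: wrap a callback-style task so that it is retried
// up to `maxRetries` times, waiting `delayMs` between attempts.
// The final callback is always called, with either a result or the last error.
function withRetry(task, maxRetries, delayMs) {
  return function (cb) {
    var retries = 0;
    function tryOnce() {
      task(function (err, result) {
        if (!err) return cb(null, result);
        if (retries >= maxRetries) return cb(err); // give up, report the error
        retries++;
        setTimeout(tryOnce, delayMs);
      });
    }
    tryOnce();
  };
}

// Simulated fetch (an assumption for the demo): fails on the first two
// calls and succeeds on the third.
var calls = 0;
function flakyFetch(cb) {
  calls++;
  if (calls < 3) return cb(new Error('simulated network error'));
  cb(null, 'chapter text');
}

withRetry(flakyFetch, 3, 10)(function (err, text) {
  if (err) {
    console.log('gave up: ' + err.message);
  } else {
    console.log('got "' + text + '" after ' + calls + ' attempts');
  }
});
```

A wrapped task has the same `function (cb)` shape as the entries in `hrefList`, so it could be handed to `async.parallelLimit` unchanged. Bounding the retries matters: retrying without a cap can hammer a server that is already struggling.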