PHP中文网2017-04-17 11:26:05

Modify the plan

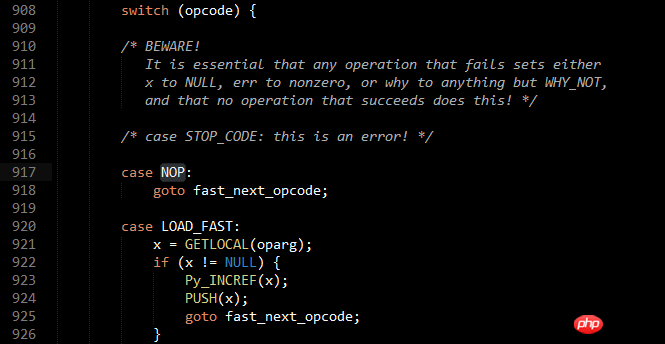

I did a test. Based on python 2.7.3, I changed all the cases in the PyEval_EvalFrameEx function to labels, and then used the labels as values feature of gcc to form an array with the 118 opcodes used in it and the corresponding labels. :

static void *label_hash[256] = {NULL};

static int initialized = 0;

if (!initialized)

{

#include "opcodes.c"

#include "labels.c"

int i, n_opcode = sizeof(opcode_list) / sizeof(*opcode_list);

for (i = 0; i < n_opcode; i++)

label_hash[opcode_list[i]] = label_list[i];

initialized = 1;

}

Then change switch (opcodes) to

void *label = label_hash[opcode];

if (label == NULL)

goto default_opcode;

goto *label;

And replace the break in each case one by one.

After compilation, it passed all tests (except test_gdb, which is the same as the unmodified version, without sys.pydebug), which means that the modification is correct.

Performance Test

The next question is performance...how to test this...I don’t have any good ideas, so I just found two pieces of code:

Direct loop 5kw times:

i = 50000000

while i > 0:

i -= 1

Run 4 times before modification: [4.858, 4.851, 4.877, 4.850], remove the largest one, average 4.853s

After modification, run 4 times: [4.558, 4.546, 4.550, 4.560], remove the largest one, average 4.551s

Performance improvement (100% - (4.551 / 4.853)) = 6.22%

Recursive Fibonacci, calculate the 38th

def fib(n):

return n if n <= 2 else fib(n - 2) + fib(n - 1)

print fib(37)

Before modification [6.227, 6.232, 6.311, 6.241], remove the largest one, average 6.233s

After modification [5.962, 5.945, 6.005, 6.037], removing the largest one, the average is 5.971s

Performance improvement (100% - (5.971 / 6.233)) = 4.20%

Conclusion

Taken together, this small change can indeed improve performance by about 5%. I don’t know how meaningful it is to you...

However, because it uses non-C standard features that are only supported by GCC, it is not convenient to transplant. According to this StackOverflow post, MSVC can support it to a certain extent, but it seems very tricky. I wonder if anyone has made such an attempt during the development of Python. Maybe the official will prefer portability? Maybe I can make a post and ask if I have time. According to @方泽图 (see the comments in his answer), Python 3.0+ introduced this optimization. See his answer for details.

reply

0