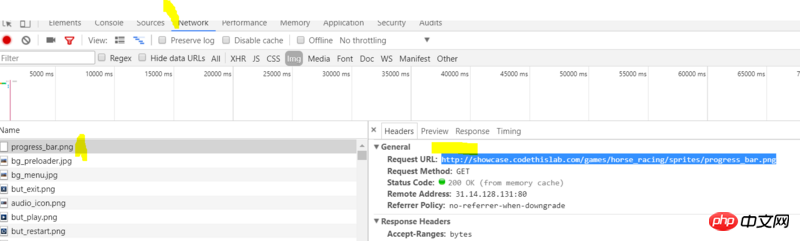

As the figure shows, these images are loaded over the network, and saving them one at a time by right-clicking is very tedious. Is there a way to write a crawler that downloads all of the images here in one batch?

仅有的幸福2017-06-28 09:27:48

If you know how to write a crawler, this is actually very simple. It takes just a few steps:

Fetch the home page (or whichever page contains the pictures) and extract the image URLs

Request each of those image URLs with the requests library or the urllib library

Write the response body to the local hard disk in binary mode

Reference code:

import re, requests

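r = requests.get("http://...page URL..") # placeholder: the page that contains the images

p = re.compile(r'regex that matches the image URLs') # placeholder: replace with a real pattern

image = p.findall(r.text)[0] # findall returns every matching image URL; this takes only the first

ir = requests.get(image) # request the image URL

sz = open('logo.jpg', 'wb').write(ir.content) # write the image bytes to a local file

print('logo.jpg', sz, 'bytes')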
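The reference code saves only the first match. As a rough sketch of the batch case, the version below loops over every match and uses only the standard library (urllib instead of requests). The `IMG_SRC` pattern, the function names, and the `images` folder are assumptions made for illustration; a real page may need a different regex.

```python
import os
import re
import urllib.request
from urllib.parse import urljoin, urlparse

# Assumed pattern: the src attribute of <img> tags. Adjust it for the real page.
IMG_SRC = re.compile(r'<img[^>]+src=["\']([^"\']+)["\']', re.IGNORECASE)


def extract_image_urls(html, base_url):
    """Return absolute image URLs found in html, in page order, without duplicates."""
    seen = set()
    urls = []
    for src in IMG_SRC.findall(html):
        url = urljoin(base_url, src)  # resolve relative paths against the page URL
        if url not in seen:
            seen.add(url)
            urls.append(url)
    return urls


def save_images(page_url, dest_dir="images"):
    """Download every image referenced by page_url into dest_dir."""
    os.makedirs(dest_dir, exist_ok=True)
    with urllib.request.urlopen(page_url) as resp:
        html = resp.read().decode("utf-8", errors="replace")
    for i, url in enumerate(extract_image_urls(html, page_url)):
        # Fall back to a numbered name when the URL path has no file name.
        name = os.path.basename(urlparse(url).path) or f"image_{i}"
        with urllib.request.urlopen(url) as img, open(os.path.join(dest_dir, name), "wb") as f:
            f.write(img.read())  # write the image bytes in binary mode
```

Calling `save_images` with the page address would then fetch the page once and write each image to the folder. Images that share a file name overwrite each other in this sketch.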

For more details, see the official requests documentation.

女神的闺蜜爱上我2017-06-28 09:27:48

Yes, you can.

A crawler has five parts:

Scheduler

URL deduplication

Downloader

Web page parsing

Data storage

The idea for downloading images is:

Fetch the content of the web page that contains the images, parse the img tags to get the image addresses, then iterate over the image URLs and download each picture. Record every downloaded image URL in a Bloom filter to avoid repeated downloads: before downloading a picture, check by its URL whether it has already been downloaded. Once a picture has been saved locally, you can store its path in a database and keep the file in a folder, or store the image itself directly in the database.
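As a minimal sketch of the deduplication step, the following is a small hand-rolled Bloom filter. The class, the `filter_new` helper, the bit-array size, and the number of hashes are all illustrative assumptions, not tuned values. A Bloom filter can report a URL as seen when it is not (a false positive), but it never forgets a URL that was added.

```python
import hashlib


class BloomFilter:
    """A small Bloom filter: false positives are possible, false negatives are not."""

    def __init__(self, size_bits=8192, num_hashes=4):
        self.size = size_bits
        self.num_hashes = num_hashes
        self.bits = bytearray(size_bits // 8 + 1)

    def _positions(self, item):
        # Derive num_hashes bit positions by salting SHA-256 with the hash index.
        for i in range(self.num_hashes):
            digest = hashlib.sha256(f"{i}:{item}".encode("utf-8")).digest()
            yield int.from_bytes(digest[:8], "big") % self.size

    def add(self, item):
        for pos in self._positions(item):
            self.bits[pos // 8] |= 1 << (pos % 8)

    def __contains__(self, item):
        return all(self.bits[pos // 8] & (1 << (pos % 8)) for pos in self._positions(item))


def filter_new(urls, seen):
    """Return the URLs not yet recorded in seen, recording each one as it is returned."""
    fresh = []
    for url in urls:
        if url not in seen:
            seen.add(url)
            fresh.append(url)
    return fresh
```

The downloader would call `filter_new` on each batch of image URLs and fetch only what it returns. For a small crawl a plain Python `set` does the same job exactly; the Bloom filter mainly pays off when the URL count is too large to hold in memory.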

In Python, use requests + beautifulsoup4

In Java, use jsoup
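To illustrate the "parse the img tag" step without extra dependencies, here is a sketch that uses Python's built-in `html.parser` as a stand-in for beautifulsoup4. The `ImgCollector` class and `image_sources` function are hypothetical names. With beautifulsoup4 installed, the equivalent step would be iterating over `soup.find_all("img")`.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class ImgCollector(HTMLParser):
    """Collect the src attribute of every <img> tag fed to the parser."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.sources = []

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag and attribute names before calling this.
        if tag != "img":
            return
        src = dict(attrs).get("src")
        if src:
            # Resolve relative addresses against the page URL.
            self.sources.append(urljoin(self.base_url, src))


def image_sources(html, base_url):
    """Return the absolute URL of every <img src=...> in html, in page order."""
    parser = ImgCollector(base_url)
    parser.feed(html)
    parser.close()
    return parser.sources
```

Compared with a regular expression, a parser copes better with attribute order, quoting styles, and self-closing tags.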

女神的闺蜜爱上我2017-06-28 09:27:48

If you need to crawl multiple websites, or crawl a single website very deeply, you can apply the method above recursively, as a depth-first traversal of the links.
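The recursive traversal might look roughly like the sketch below. `crawl`, `fetch_links`, and `max_depth` are hypothetical names: `fetch_links` stands for whatever function downloads a page and returns the URLs it links to, and the depth limit is an added assumption to keep the recursion bounded.

```python
def crawl(start_url, fetch_links, max_depth):
    """Depth-first traversal from start_url, following links at most max_depth hops.

    fetch_links is a caller-supplied function: URL in, list of linked URLs out.
    Returns the URLs in the order they were visited.
    """
    visited = set()
    order = []

    def visit(url, depth):
        if depth > max_depth or url in visited:
            return  # too deep, or already handled (this also breaks link cycles)
        visited.add(url)
        order.append(url)
        for link in fetch_links(url):
            visit(link, depth + 1)

    visit(start_url, 0)
    return order


# Usage with an in-memory "site" instead of real network requests.
site = {"a": ["b", "c"], "b": ["a", "d"], "c": [], "d": ["e"], "e": []}
pages = crawl("a", lambda url: site.get(url, []), max_depth=2)
```

In a real crawler each visited page would also be passed to the image-download step. For very deep sites an explicit stack or queue avoids Python's recursion limit.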