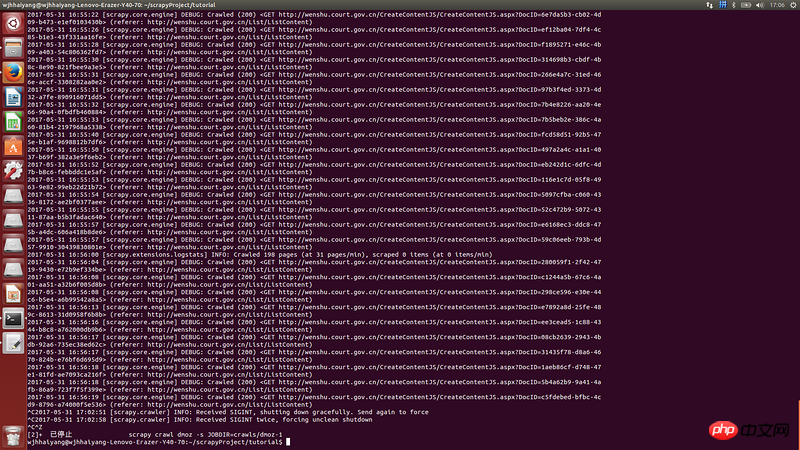

Every time it runs for about half an hour, it simply freezes. There are no errors in the log, and while it is frozen the CPU usage is very high.

I set a download timeout in setting.py, so a timeout is not the cause.
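For reference, this assumes the crawler is a Scrapy project, where the settings module is normally named `settings.py`. In that case the download timeout is the `DOWNLOAD_TIMEOUT` setting, measured in seconds. The value 30 below is only an example.

```python
# settings.py (Scrapy project settings module; assumption: this is a Scrapy crawler)
# DOWNLOAD_TIMEOUT: seconds the downloader waits before giving up on a request.
# Scrapy's default is 180; 30 is an arbitrary example value.
DOWNLOAD_TIMEOUT = 30
```

This setting only limits how long a single download may take. It would not interrupt a loop that keeps the CPU busy inside the spider's own code.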

Ctrl-C does not stop it normally. After getting out with Ctrl-Z and continuing to run it, the problem persists: it freezes again after about half an hour.

高洛峰 2017-06-12 09:29:08

First use `top` to check whether memory or CPU usage is too high, then find out which processes are using it.
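`top` shows a snapshot of usage. As a complement, the crawler can log its own CPU and memory usage, so the log shows what happened in the minutes before a freeze. Below is a minimal sketch that assumes a Unix system, because it uses the standard `resource` module. `UsageSampler` and `start_usage_logger` are hypothetical names and are not part of Scrapy or any other library.

```python
import logging
import resource
import sys
import threading
import time


class UsageSampler:
    """Hypothetical helper: CPU % and peak memory of this process between calls to sample()."""

    def __init__(self):
        self._wall = time.monotonic()
        self._cpu = time.process_time()  # user + system CPU time of all threads

    def sample(self):
        wall, cpu = time.monotonic(), time.process_time()
        elapsed = wall - self._wall
        # Share of wall-clock time spent on the CPU; can exceed 100 with several busy threads.
        cpu_pct = 100.0 * (cpu - self._cpu) / elapsed if elapsed > 0 else 0.0
        self._wall, self._cpu = wall, cpu
        peak = resource.getrusage(resource.RUSAGE_SELF).ru_maxrss
        # ru_maxrss is the PEAK resident size: kilobytes on Linux, bytes on macOS.
        peak_mb = peak / (1024 * 1024) if sys.platform == "darwin" else peak / 1024
        return cpu_pct, peak_mb


def start_usage_logger(interval=60.0):
    """Hypothetical helper: log usage every `interval` seconds from a daemon thread.
    Assumes logging is configured at INFO level or lower."""
    logger = logging.getLogger("usage")
    sampler = UsageSampler()

    def loop():
        while True:
            time.sleep(interval)
            cpu_pct, peak_mb = sampler.sample()
            logger.info("cpu=%.0f%% peak_rss=%.1f MiB", cpu_pct, peak_mb)

    threading.Thread(target=loop, daemon=True).start()
```

Calling `start_usage_logger()` once at startup would show whether CPU usage climbs gradually or jumps suddenly right before the freeze. Because `ru_maxrss` only records the peak, it reveals growth but not later drops.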

If they are all your crawler processes, check your code for anything that is never released.

In short, investigate from every angle.