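```javascript
// Method 1: split an array of length l into n chunks and append n times
$('.btn1').click(function (event) {
  var arr = ['中国', '美国', '法国', '英国', '俄罗斯', '朝鲜', '瑞典', '挪威', '德国', '意大利', '南非', '埃及', '巴基斯坦', '哈萨克斯坦', '印度', '越南', '加拿大', '澳大利亚'];
  var result = [];

  // Slice the array into groups of 3
  for (var i = 0; i < arr.length; i += 3) {
    result.push(arr.slice(i, i + 3));
  }

  // Build one HTML string per group and append each group separately
  for (var j = 0; j < result.length; j++) {
    var html = '';
    for (var k = 0; k < result[j].length; k++) {
      html += '<li>' + result[j][k] + '</li>';
    }
    console.log('html--' + html);
    $('.list').append(html);
  }
});
```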

//方法二:长度为l的数组直接追加到dom中,循环l次

$('.btn2').click(function(event) {

var arr=['中国','美国','法国','英国','俄罗斯','朝鲜','瑞典','挪威','德国','意大利','南非','埃及','巴基斯坦','哈萨克斯坦','印度','越南','加拿大','澳大利亚'];

var html='';

for(i=0;i<arr.length;i++){

html+='<li>'+arr[i]+'</li>';

}

$('.list').append(html);

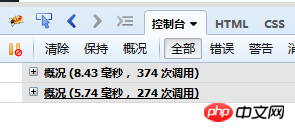

});This is the time measured by firebug: 8.3 milliseconds are added in groups (method one), 5.74 milliseconds are added directly (method two)

I expected grouping to reduce DOM rendering work and avoid lag, but the Firebug measurements show the opposite. Is it because the data set is too small?
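To check the reasoning without a browser, here is a DOM-free sketch in which the hypothetical helpers chunk, buildChunkedHtml and buildHtml mimic the string building in methods one and two. It suggests why grouping cannot win here: the chunked version yields exactly the same markup, only split into more pieces (and therefore more append calls), and everything still runs in one synchronous pass, so the browser gets no chance to repaint between groups.

```javascript
// Hypothetical helper: split an array into chunks of `size` items,
// the same slicing that method one performs.
function chunk(arr, size) {
  const result = [];
  for (let i = 0; i < arr.length; i += size) {
    result.push(arr.slice(i, i + size));
  }
  return result;
}

// Method one without the DOM: one HTML string per chunk (one append each).
function buildChunkedHtml(arr, size) {
  return chunk(arr, size).map(group =>
    group.map(item => '<li>' + item + '</li>').join('')
  );
}

// Method two without the DOM: a single HTML string (one append in total).
function buildHtml(arr) {
  return arr.map(item => '<li>' + item + '</li>').join('');
}

const countries = ['中国', '美国', '法国', '英国', '俄罗斯', '朝鲜', '瑞典'];
const pieces = buildChunkedHtml(countries, 3);
console.log(pieces.length);                            // 3 (three appends instead of one)
console.log(pieces.join('') === buildHtml(countries)); // true (identical markup)
```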

阿神 2017-05-19 10:34:30

In fact, your grouping does not really spread out the work: every group is still appended in the same synchronous pass. To spread it out, you need a timer, i.e. a time-slicing (chunked) function.

Take building the QQ friend list in WebQQ as an example. A list usually holds hundreds or thousands of friends; if each friend is represented by one node, rendering the list may mean creating hundreds or thousands of nodes on the page at once.

Adding that many DOM nodes in a short time overwhelms the browser, and the result is often stuttering or even a frozen, unresponsive page. The naive code looks like this:

```javascript
var ary = [];
for (var i = 1; i <= 1000; i++) {
  ary.push(i); // assume ary holds the data of 1,000 friends
}

// Naive version: create and append all 1,000 nodes in one synchronous pass
var renderFriendList = function (data) {
  for (var i = 0, l = data.length; i < l; i++) {
    var p = document.createElement('p');
    p.innerHTML = data[i];
    document.body.appendChild(p);
  }
};

renderFriendList(ary);
```

```javascript
// data: the items to insert; func: the insert operation;
// interval: time between batches (ms); count: number of items inserted per batch
var timeChunk = function (data, func, interval, count) {
  var timer;

  var start = function () {
    // Fix the batch size first: data.length shrinks as shift() runs
    var len = Math.min(count || 1, data.length);
    for (var i = 0; i < len; i++) {
      func(data.shift());
    }
  };

  return function () {
    timer = setInterval(function () {
      if (data.length === 0) {
        // Every item has been inserted: stop the timer
        return clearInterval(timer);
      }
      start();
    }, interval);
  };
};

var data = [];
for (var i = 1; i <= 1000; i++) {
  data.push(i);
}

// Insert 10 friends every 200 ms instead of all 1,000 at once
var renderFriendList = timeChunk(data, function (n) {
  var p = document.createElement('p');
  p.innerHTML = n;
  document.body.appendChild(p);
}, 200, 10);

renderFriendList();
```

The above is excerpted from the book Design Patterns.
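For comparison, here is a minimal sketch of the same time-slicing technique with a few assumptions that differ from the book's version: the name timeChunkSafe is hypothetical, it copies the input instead of emptying the caller's array with shift(), it chains setTimeout calls so that at least `interval` ms of free time follow the end of each batch (setInterval fires on a fixed schedule regardless of how long a batch took), and the scheduler is an optional parameter so the logic can be exercised outside a browser.

```javascript
// Hypothetical variant of timeChunk: process `items` in batches of `count`,
// waiting `interval` ms after each batch so the browser can repaint in between.
function timeChunkSafe(items, func, interval, count, schedule = setTimeout) {
  const queue = items.slice();          // copy, so the caller's array stays intact
  const batchSize = Math.max(1, count || 1);

  function runBatch() {
    // Compute the batch size once, before shift() shrinks the queue
    const n = Math.min(batchSize, queue.length);
    for (let i = 0; i < n; i++) {
      func(queue.shift());
    }
    if (queue.length > 0) {
      schedule(runBatch, interval);     // yield to the browser, then continue
    }
  }

  // Calling the returned function starts the first batch after `interval` ms
  return function start() {
    if (queue.length > 0) {
      schedule(runBatch, interval);
    }
  };
}
```

In a browser, the equivalent of the book's call would be timeChunkSafe(data, fn, 200, 10)(), where fn creates and appends the `<p>` element exactly as above.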

高洛峰 2017-05-19 10:34:30

Your first solution is slow because of this line:

```javascript
$('.list').append(html);
```

It runs on every pass of the loop, so jQuery has to locate the element again each time: $('.list') searches the document for .list, which means looping N times locates it N times. That is of course inefficient. Your second option locates it only once and appends all the elements in one go, so it is clearly faster. As for splitting the array, it brings no benefit in JS: you still loop over every item, so nothing gets more efficient, and the splitting adds an extra step. JS is single-threaded, so however many pieces you split the work into, it takes the same time.
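A browser-free way to see the difference in call counts: the sketch below uses a hypothetical fake $ (built by makeFakeJQuery) that only counts how often the selector is resolved and how often append() runs. It does not measure real DOM cost. It only shows that method one resolves .list and appends once per chunk, method two does each once in total, and caching the lookup in a variable removes the repeated lookups but still leaves one append per chunk.

```javascript
// Hypothetical stand-in for jQuery's $(selector): counts lookups and appends
// so the approaches can be compared without a browser or jQuery.
function makeFakeJQuery() {
  const stats = { lookups: 0, appends: 0, html: '' };
  function $(selector) {
    stats.lookups++; // the real $('.list') searches the document on every call
    return {
      append(markup) {
        stats.appends++;
        stats.html += markup;
        return this;
      },
    };
  }
  return { $, stats };
}

const items = ['中国', '美国', '法国', '英国', '俄罗斯', '朝鲜'];
const toLi = item => '<li>' + item + '</li>';

// Method one: look up .list and append once per chunk of 3
const one = makeFakeJQuery();
for (let i = 0; i < items.length; i += 3) {
  one.$('.list').append(items.slice(i, i + 3).map(toLi).join(''));
}

// Method two: look up .list once and append everything once
const two = makeFakeJQuery();
two.$('.list').append(items.map(toLi).join(''));

// Variation: cache the lookup, but still append once per chunk
const cached = makeFakeJQuery();
const $list = cached.$('.list');
for (let i = 0; i < items.length; i += 3) {
  $list.append(items.slice(i, i + 3).map(toLi).join(''));
}

console.log(one.stats.lookups, one.stats.appends);       // 2 2
console.log(two.stats.lookups, two.stats.appends);       // 1 1
console.log(cached.stats.lookups, cached.stats.appends); // 1 2
console.log(one.stats.html === two.stats.html);          // true
```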

阿神 2017-05-19 10:34:30

Fetching thousands of records in one request suggests something is wrong with your API design.
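One way to avoid returning thousands of records in a single response is to page them. A minimal sketch, assuming a hypothetical server-side helper paginate and 1-based page numbers; the response shape (items, page, total, hasMore) is only an illustration, not any particular API.

```javascript
// Hypothetical server-side helper: return one page of records instead of all of them.
function paginate(records, page, pageSize) {
  const size = Math.max(1, pageSize);
  const current = Math.max(1, page);      // pages are 1-based
  const start = (current - 1) * size;
  return {
    items: records.slice(start, start + size),
    page: current,
    total: records.length,
    hasMore: start + size < records.length,
  };
}

const friends = Array.from({ length: 1000 }, (_, i) => i + 1);
const first = paginate(friends, 1, 50);
console.log(first.items.length, first.hasMore); // 50 true
const last = paginate(friends, 20, 50);
console.log(last.items[0], last.hasMore);       // 951 false
```

The client then requests the next page only when it needs more rows, for example when the user scrolls near the end of the list.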

習慣沉默 2017-05-19 10:34:30

Personally, I think the first method costs more memory and time (at least when the data set is small). First, it executes more statements in total. Second, appending several times is bound to be slower than appending once.

淡淡烟草味 2017-05-19 10:34:30

Even if you fetch thousands of records at once, you should not append them all at once.

A sensible approach is to load by display priority: render what the user can see first, then insert the rest into the page in batches while the browser is idle.
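The "visible first, the rest when idle" approach could look roughly like this. It is a sketch under assumptions: renderPrioritized and its parameters are hypothetical, the caller decides how many items count as visible, and requestIdleCallback is used only when the environment provides it (not every browser does), with setTimeout as the fallback.

```javascript
// Hypothetical sketch: render the first `visibleCount` items immediately,
// then insert the rest in batches of `batchSize` during idle periods.
function renderPrioritized(items, render, visibleCount, batchSize, scheduleIdle) {
  const idle = scheduleIdle ||
    (typeof requestIdleCallback === 'function'
      ? fn => requestIdleCallback(fn)
      : fn => setTimeout(fn, 0));
  const size = Math.max(1, batchSize);

  // 1. What the user can see right now: render synchronously
  let next = Math.min(visibleCount, items.length);
  items.slice(0, next).forEach(item => render(item));

  // 2. Everything else: one small batch per idle period
  function renderBatch() {
    const end = Math.min(next + size, items.length);
    for (; next < end; next++) {
      render(items[next]);
    }
    if (next < items.length) {
      idle(renderBatch);
    }
  }

  if (next < items.length) {
    idle(renderBatch);
  }
}
```

A fuller version could read the deadline object that requestIdleCallback passes to its callback (deadline.timeRemaining()) to size each batch dynamically; the fixed batchSize keeps the sketch simple.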