I want to use Python for quantitative stock trading, and the first step is to obtain historical stock data. I visited the http://data.eastmoney.com/sto... web page, but when I view the page source I cannot see the data that appears in the table. Apparently it is loaded with AJAX. I read online that Selenium and PhantomJS can be used to fetch this kind of dynamic content, but I don't know how to get the complete page source. Any guidance would be appreciated.

我想大声告诉你 2017-05-18 11:03:14

Actually, I just tried it. The data is not loaded via XHR. It is already in the page source, and a class is then called to load it into the table.

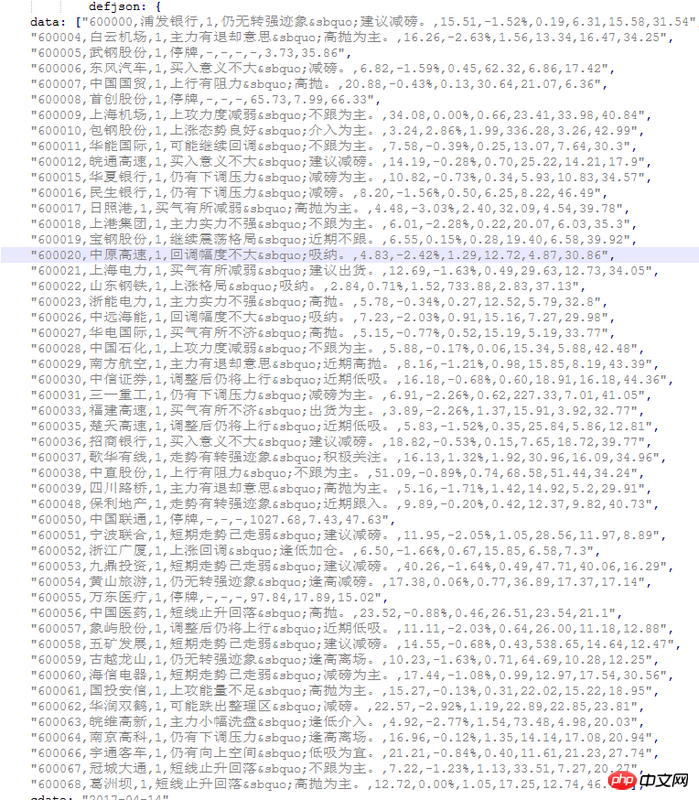

For example, the data on the home page:

So you can simply extract it with a regular expression (the re module). Once you have the text, parse it as JSON.

I'll stop here for now.
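The regex-then-JSON approach could look roughly like the sketch below. It runs against a made-up HTML snippet: the variable name `defjson` is the one mentioned later in this thread, but the data layout, the sample rows, and the helper `extract_embedded_json` are assumptions for illustration, not the page's actual structure.

```python
import json
import re

# Hypothetical page source; the real page's data layout may differ.
SAMPLE_HTML = """
<script>
var defjson = {"pages": 3, "data": ["600000,Bank A,12.5", "000001,Bank B,9.8"]};
</script>
"""


def extract_embedded_json(html, var_name):
    """Return the JSON object assigned to `var_name` in an inline script."""
    # Non-greedy match from the opening brace to the first "}" followed by ";".
    pattern = re.compile(
        r"var\s+" + re.escape(var_name) + r"\s*=\s*(\{.*?\})\s*;",
        re.DOTALL,
    )
    match = pattern.search(html)
    if match is None:
        raise ValueError("variable %r not found in page source" % var_name)
    return json.loads(match.group(1))


data = extract_embedded_json(SAMPLE_HTML, "defjson")
# Each record in this sample is a comma-separated string, so split it into fields.
rows = [item.split(",") for item in data["data"]]
print(rows)
```

The non-greedy pattern is only good enough for simple cases: it would cut the match short if a string inside the data contained `};`. It also assumes the embedded value is strict JSON, which `json.loads` requires.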

++++++++++++++++++++++++++++++++++++++++

To continue: this site does not load its data through XHR. Instead it uses JS to load JSON data and parses it dynamically for display. Analyzing the details requires some knowledge of JS. If you are comfortable with it, you can try working through it yourself.

I gave it a try:

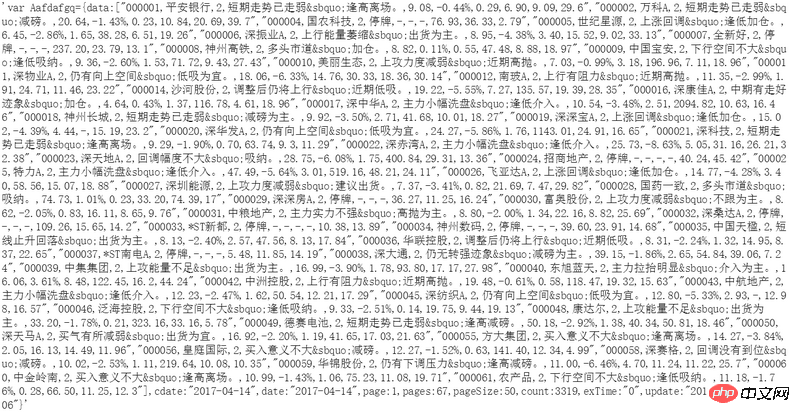

from urllib.parse import quote
import time

import requests

# URL template; the placeholders are filled in from params below.
url = (
    "http://datainterface.eastmoney.com/EM_DataCenter/JS.aspx?type=FD&sty=TSTC&st={sortType}"
    "&sr={sortRule}&p={page}&ps={pageSize}&js=var {jsname}=(x){param}"
)

params = {
    "sortType": 1,
    "sortRule": 1,
    "page": 2,
    "pageSize": 50,
    "jsname": "Aafdafgq",  # a random 8-character string is used here
    "param": "&mkt=0&rt=",
}

params["param"] += str(int(time.time() / 30))  # current time, in 30-second steps
url = url.format(**params)
# Percent-encode the URL (e.g. the space in "var ...") while keeping its delimiters.
url = quote(url, safe=":=/?&()")

req = requests.get(url)
print(req.text)
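The two dynamic parts of the request above (the random 8-character `jsname` and the time-based `rt` value) and the handling of the returned `var NAME=...` text could be sketched as follows. The helper names and the sample response are hypothetical, and the sketch assumes the payload after `=` is strict JSON, which the real endpoint may not guarantee.

```python
import json
import random
import string
import time


def random_js_name(length=8):
    """Build a random variable name made of ASCII letters."""
    return "".join(random.choices(string.ascii_letters, k=length))


def cache_buster(now=None):
    """Mirror the rt value above: the Unix time counted in 30-second steps."""
    if now is None:
        now = time.time()
    return int(now / 30)


def strip_js_wrapper(text):
    """Parse the payload of a 'var NAME=<payload>' response as JSON."""
    _, sep, payload = text.partition("=")
    if not sep:
        raise ValueError("response is not of the form 'var NAME=...'")
    return json.loads(payload.strip().rstrip(";"))


# Hypothetical response body; the real records will look different.
SAMPLE_RESPONSE = 'var Aafdafgq=["600000,Bank A,12.5","000001,Bank B,9.8"]'
records = strip_js_wrapper(SAMPLE_RESPONSE)
print(records)
```

If the real payload uses unquoted keys or other non-JSON syntax, `json.loads` will raise an error and the text would need to be cleaned up or parsed another way.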

给我你的怀抱 2017-05-18 11:03:14

The advantage of the Selenium plus PhantomJS combination is that it is simple and brute-force. The disadvantage is that it is less efficient.

It amounts to opening an invisible (headless) browser, letting it load the page, and then reading the rendered result.
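A minimal sketch of that headless-browser approach is below. Note that it swaps in headless Chrome for PhantomJS, since as far as I know recent Selenium releases no longer support PhantomJS. The function name, the CSS selector used to detect that the table has rendered, and the timeout are assumptions, and it needs Selenium plus a local Chrome installation to actually run.

```python
def fetch_rendered_html(url, wait_selector="table tbody tr", timeout=10):
    """Load `url` in headless Chrome and return the HTML after JS has run."""
    # Imported here so the function can be defined without Selenium installed.
    from selenium import webdriver
    from selenium.webdriver.chrome.options import Options
    from selenium.webdriver.common.by import By
    from selenium.webdriver.support import expected_conditions as EC
    from selenium.webdriver.support.ui import WebDriverWait

    options = Options()
    options.add_argument("--headless")  # run the browser without a window
    driver = webdriver.Chrome(options=options)
    try:
        driver.get(url)
        # Wait until at least one element matching the (assumed) selector exists.
        WebDriverWait(driver, timeout).until(
            EC.presence_of_element_located((By.CSS_SELECTOR, wait_selector))
        )
        return driver.page_source
    finally:
        driver.quit()  # always close the browser, even if the wait times out
```

Calling `fetch_rendered_html(page_url)` with the address of the page in question would return the full rendered source, which can then be parsed like any static HTML.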

If you are new to web crawlers, I recommend the O'Reilly animal book Python Network Data Collection (the Chinese edition of Web Scraping with Python).

What you need is covered in the chapter on collecting dynamic pages.

The book is thin and very practical.

巴扎黑 2017-05-18 11:03:14

I don't know much about JS or JSON, and I have only just started with crawling. After your hint I checked the source again and found that the data is indeed there, in defjson. What I don't understand is how the JSON data gets rendered into the tbody. I can capture the table with pandas' read_html, but the last two columns are lost. It seems I need to study JS and JSON first.
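One way around the missing columns might be to build the table from the JSON records themselves rather than from the rendered HTML. This is a minimal sketch assuming the records are comma-separated strings. The sample rows and the column names are made up for illustration and do not reflect the site's real fields.

```python
import pandas as pd

# Hypothetical records, as they might look after parsing the embedded JSON.
records = [
    "600000,Bank A,12.5,0.8",
    "000001,Bank B,9.8,-1.2",
]
# Hypothetical column names; the real meaning of each field must be checked.
columns = ["code", "name", "price", "change_pct"]

# Split every record into its fields and load them into a DataFrame.
df = pd.DataFrame([record.split(",") for record in records], columns=columns)

# The fields arrive as strings, so convert the numeric ones explicitly.
df["price"] = df["price"].astype(float)
df["change_pct"] = df["change_pct"].astype(float)
print(df)
```

Because every field of every record is kept, no column can be dropped the way it can when the table is read back from HTML.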