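Lately I've run out of novels to read and got bored, so I decided to write a crawler in Node to download books. I've been at it for several days and have run into some problems.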

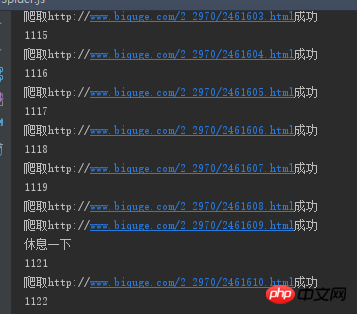

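With asynchronous requests the chapters don't come back in order, so I used sync-request to make the requests synchronously. I'm crawling books from the Biquge (笔趣阁) site, and since I've only just started learning, it only handles a single book for now. I found that while crawling it hangs: it just stops and sits there, and it gets through a different number of chapters each time. So I added a 10-second timeout, but it still hangs. Then I searched on Baidu and read that the site has some kind of anti-crawler protection (I don't really understand the details ==). So I thought, fine, I'll space the requests out: after every 10 requests it rests for 20 seconds and then continues. Result!!! It still hangs, lol. So now I'm a bit lost as to why, and I'd be grateful for some advice or ideas. Thanks, everyone~~

Below is my request code. I crawled the chapter list with a separate JS script and saved it as JSON; here I request each link directly: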

```javascript
var fs = require('fs')
var cheerio = require('cheerio')
var iconv = require('iconv-lite')
var request = require('sync-request')

// Chapter list crawled earlier by another script: [{ link: ... }, ...]
var urlList = JSON.parse(fs.readFileSync('list.json', 'utf-8'))
// Book info; the encoding belongs to readFileSync, not JSON.parse
var header = JSON.parse(fs.readFileSync('header.json', 'utf-8'))

// Fetch one chapter's text and append it to the book's txt file
function getContent(list, title) {
  // Catch errors so one failed chapter doesn't abort the whole run
  try {
    var res = request('GET', list.link, {
      timeout: 10000,
      retry: true,
      retryDelay: 10000
    })
    var html = iconv.decode(res.body, 'utf8')
    var $ = cheerio.load(html, { decodeEntities: false })
    var contentTitle = $('.bookname h1').text()
    // Strip the site's injected script call and spaces from the chapter body
    var contentText = $('#content').text().trim().replace('readx();', '').replace(/\ /g, '')
    // Newlines keep each title from running into the chapter text
    fs.appendFileSync(title + '.txt', contentTitle + '\n')
    fs.appendFileSync(title + '.txt', contentText + '\n')
    console.log('Fetched ' + list.link)
  } catch (err) {
    console.log('Failed to fetch ' + list.link + ': ' + err.message)
  }
}

// Recursive call to space out requests: rest 20 s after every 10 chapters
function getUrl(index) {
  for (let i = index; i < urlList.length; i++) {
    console.log(i)
    getContent(urlList[i], header.title)
    if (i > 0 && i % 10 === 0) {
      console.log('Taking a break')
      setTimeout(() => getUrl(i + 1), 20000)
      return
    }
  }
}

getUrl(0)
```
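One alternative worth sketching: keeping chapters in order doesn't actually require synchronous requests. If each result is stored at its chapter's index, requests can run concurrently in small batches (keeping the same "rest after every 10" idea) and still be written out in order. This is a sketch under assumptions, not a drop-in replacement: `crawlInOrder` and the `fetchChapter` callback are hypothetical names, and you would supply `fetchChapter` yourself (for example, something that downloads a link asynchronously and runs the same cheerio parsing as `getContent`).

```javascript
// Hypothetical helper: fetch items concurrently in batches, pause between
// batches, and return results in the original list order.
// fetchChapter(item, index) is a function you supply; it must return a Promise.
const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

async function crawlInOrder(items, fetchChapter, { batchSize = 10, pauseMs = 20000 } = {}) {
  const results = new Array(items.length);
  for (let start = 0; start < items.length; start += batchSize) {
    const batch = items.slice(start, start + batchSize);
    // Requests inside a batch run concurrently; each result is stored at
    // its original index, so the order in which they finish doesn't matter.
    await Promise.all(
      batch.map(async (item, offset) => {
        const index = start + offset;
        try {
          results[index] = await fetchChapter(item, index);
        } catch (err) {
          results[index] = null; // mark the failure so it can be retried later
          console.log(`Failed to fetch chapter ${index}: ${err.message}`);
        }
      })
    );
    // Rest between batches (skipped after the last batch).
    if (start + batchSize < items.length) await sleep(pauseMs);
  }
  return results;
}
```

Once it resolves, the whole book can be written in one go, e.g. `fs.writeFileSync(header.title + '.txt', results.filter(Boolean).join('\n'))`; `null` entries mark chapters that failed and could be retried.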

This is what happens: after crawling for a while, it just freezes. No matter how long I wait, nothing happens, and the timeout I set never fires.
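One plausible reason the timeout and the 20-second pause seem to do nothing: sync-request blocks Node's event loop, so no `setTimeout` callback in the script can run while a synchronous request is stuck. With asynchronous requests, a timeout can be enforced by racing the request against a timer. `withTimeout` is a hypothetical helper name; this is a generic Promise pattern, not an API of sync-request or any crawler library.

```javascript
// Hypothetical helper: settle with the request's result, or reject once
// `ms` milliseconds pass, whichever happens first.
function withTimeout(promise, ms, label = 'request') {
  let timer;
  const timeout = new Promise((_, reject) => {
    timer = setTimeout(() => reject(new Error(`${label} timed out after ${ms} ms`)), ms);
  });
  // Clear the timer once either side settles so it doesn't keep the process alive.
  return Promise.race([promise, timeout]).finally(() => clearTimeout(timer));
}
```

This only stops waiting; it does not cancel the underlying request. On Node 18+, the built-in `fetch` also accepts `signal: AbortSignal.timeout(ms)`, which aborts the request itself.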

漂亮男人 · 2017-05-16 13:40:49

I've been dealing with this problem for the past two days too. At first I thought it was a sync-request problem, but after switching to a different library the behavior stayed the same. I guessed that the site might be blocking my IP. Later, over lunch, I asked my colleagues for advice, and they said that was probably the cause. So I collected some free proxy IPs, and whenever a request times out or throws an error, I immediately switch to another proxy IP and retry. With this approach I crawled a large novel yesterday; when I came to work today, the whole thing had been downloaded without any problems, haha. However, many free proxy IPs don't work, so part of the time is wasted on them. Next I'll look at crawling multiple books ↖(^ω^)↗
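The proxy-switching approach described above can be sketched as a small retry loop. Assumptions: `fetchWithProxyRotation` is a hypothetical name, and `fetchViaProxy(url, proxy)` is a function you supply that makes one request through the given proxy (how you route traffic through a proxy depends on the HTTP client you use, so it is left abstract here). Proxies that time out or error are dropped from the pool, which fits the observation that many free proxies don't work.

```javascript
// Hypothetical helper: try proxies in order until one succeeds.
// fetchViaProxy(url, proxy) is supplied by you and must return a Promise.
// NOTE: mutates `proxies`, removing any proxy that fails or times out.
async function fetchWithProxyRotation(url, proxies, fetchViaProxy, timeoutMs = 10000) {
  while (proxies.length > 0) {
    const proxy = proxies[0];
    let timer;
    const timeout = new Promise((_, reject) => {
      timer = setTimeout(() => reject(new Error('timeout')), timeoutMs);
    });
    try {
      const body = await Promise.race([fetchViaProxy(url, proxy), timeout]);
      return { body, proxy };
    } catch (err) {
      console.log(`Proxy ${proxy} failed for ${url} (${err.message}), switching`);
      proxies.shift(); // drop the dead proxy and try the next one
    } finally {
      clearTimeout(timer);
    }
  }
  throw new Error(`All proxies failed for ${url}`);
}
```

Because a working proxy stays at the front of the array, it keeps being reused for later chapters until it fails.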