A newbie has just learned PHP. Currently, the development needs to use phpexcel to insert 10,000 records into the database (1 record with 43 columns). Before inserting each piece of data, check whether it exists in the database. If it does not exist, continue to insert and update the original data. Set the flag, otherwise skip the record.

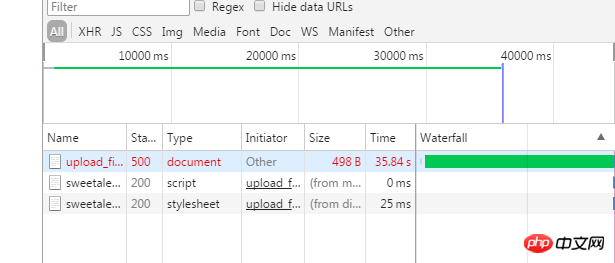

The current situation is that it takes more than 20 seconds to import 2,000 pieces of data. When importing 10,000 pieces of data, a 500 error is reported. The import fails. The file is not found in the directory where the uploaded file is stored. `$result=move_uploaded_file($_FILES'inputExcel',$uploadfile);

if(!$result)

{

die('no file!');

}

$objReader = PHPExcel_IOFactory::createReader('CSV')

->setDelimiter(',')

->setInputEncoding('GBK')

->setEnclosure('"')

->setLineEnding("\r\n")

->setSheetIndex(0);

$objPHPExcel = $objReader->load($uploadfile);

$sheet = $objPHPExcel->getSheet(0);

$highestRowNum = $sheet->getHighestRow();

$highestColumn = $sheet->getHighestColumn();

$highestColumnNum = PHPExcel_Cell::columnIndexFromString($highestColumn);

echo $highestRowNum.'+'.$highestThe number of rows and columns cannot be obtained here. Set_time_limit=0 has been added to the code, and the memory has been changed to 128M. Asking for guidance

伊谢尔伦2017-05-16 13:01:12

It should be that the memory is full. You can use yield iteration to limit the number of items inserted each time. In fact, it is a coroutine.

大家讲道理2017-05-16 13:01:12

A single import of 10,000 pieces of data will easily time out and place a heavy load on the server. You can consider splitting the 1,000 pieces of data into multiple imports. This kind of big data import can be completed in an asynchronous manner. Do not let users wait. You can also consider using coroutines instead.

漂亮男人2017-05-16 13:01:12

Add the uploaded file name to the queue. Write a script to read the file name from the queue and open the file for import. Add the script to a crontab scheduled task or write it as a service

为情所困2017-05-16 13:01:12

Since it is csv, there is no need to use PHPExcel. This open source library consumes too much memory. I used this library before, https://packagist.org/package... (it seems to only support PHP7), I won’t write the demo. I’ll look for it myself in the documentation. I can import hundreds of thousands of items without any pressure, thank you.

Introducing another one, https://packagist.org/package..., there is no pressure. I suggest you first understand what csv is. We can even write a csv import class ourselves, which feels most efficient and light. magnitude.