pembangunan bahagian belakang

pembangunan bahagian belakang Tutorial Python

Tutorial Python IRIS-RAG-Gen: Memperibadikan Aplikasi ChatGPT RAG Dikuasakan oleh Carian Vektor IRIS

IRIS-RAG-Gen: Memperibadikan Aplikasi ChatGPT RAG Dikuasakan oleh Carian Vektor IRISIRIS-RAG-Gen: Memperibadikan Aplikasi ChatGPT RAG Dikuasakan oleh Carian Vektor IRIS

Hai Komuniti,

Dalam artikel ini, saya akan memperkenalkan aplikasi saya iris-RAG-Gen .

Iris-RAG-Gen ialah aplikasi Generatif Retrieval-Augmented Generatif (RAG) AI yang memanfaatkan kefungsian Carian Vektor IRIS untuk memperibadikan ChatGPT dengan bantuan rangka kerja web Streamlit, LangChain dan OpenAI. Aplikasi ini menggunakan IRIS sebagai kedai vektor.

Ciri Aplikasi

- Terapkan Dokumen (PDF atau TXT) ke dalam IRIS

- Bersembang dengan dokumen Termakan yang dipilih

- Padamkan Dokumen yang Dimakan

- OpenAI ChatGPT

Terapkan Dokumen (PDF atau TXT) ke dalam IRIS

Ikuti Langkah Di Bawah untuk Mengambil dokumen:

- Masukkan Kunci OpenAI

- Pilih Dokumen (PDF atau TXT)

- Masukkan Penerangan Dokumen

- Klik pada Butang Dokumen Ingest

Kefungsian Ingest Document memasukkan butiran dokumen ke dalam jadual rag_documents dan mencipta jadual 'rag_document id' (id of the rag_documents) untuk menyimpan data vektor.

Kod Python di bawah akan menyimpan dokumen yang dipilih ke dalam vektor:

from langchain.text_splitter import RecursiveCharacterTextSplitter

from langchain.document_loaders import PyPDFLoader, TextLoader

from langchain_iris import IRISVector

from langchain_openai import OpenAIEmbeddings

from sqlalchemy import create_engine,text

<span>class RagOpr:</span>

#Ingest document. Parametres contains file path, description and file type

<span>def ingestDoc(self,filePath,fileDesc,fileType):</span>

embeddings = OpenAIEmbeddings()

#Load the document based on the file type

if fileType == "text/plain":

loader = TextLoader(filePath)

elif fileType == "application/pdf":

loader = PyPDFLoader(filePath)

#load data into documents

documents = loader.load()

text_splitter = RecursiveCharacterTextSplitter(chunk_size=400, chunk_overlap=0)

#Split text into chunks

texts = text_splitter.split_documents(documents)

#Get collection Name from rag_doucments table.

COLLECTION_NAME = self.get_collection_name(fileDesc,fileType)

# function to create collection_name table and store vector data in it.

db = IRISVector.from_documents(

embedding=embeddings,

documents=texts,

collection_name = COLLECTION_NAME,

connection_string=self.CONNECTION_STRING,

)

#Get collection name

<span>def get_collection_name(self,fileDesc,fileType):</span>

# check if rag_documents table exists, if not then create it

with self.engine.connect() as conn:

with conn.begin():

sql = text("""

SELECT *

FROM INFORMATION_SCHEMA.TABLES

WHERE TABLE_SCHEMA = 'SQLUser'

AND TABLE_NAME = 'rag_documents';

""")

result = []

try:

result = conn.execute(sql).fetchall()

except Exception as err:

print("An exception occurred:", err)

return ''

#if table is not created, then create rag_documents table first

if len(result) == 0:

sql = text("""

CREATE TABLE rag_documents (

description VARCHAR(255),

docType VARCHAR(50) )

""")

try:

result = conn.execute(sql)

except Exception as err:

print("An exception occurred:", err)

return ''

#Insert description value

with self.engine.connect() as conn:

with conn.begin():

sql = text("""

INSERT INTO rag_documents

(description,docType)

VALUES (:desc,:ftype)

""")

try:

result = conn.execute(sql, {'desc':fileDesc,'ftype':fileType})

except Exception as err:

print("An exception occurred:", err)

return ''

#select ID of last inserted record

sql = text("""

SELECT LAST_IDENTITY()

""")

try:

result = conn.execute(sql).fetchall()

except Exception as err:

print("An exception occurred:", err)

return ''

return "rag_document"+str(result[0][0])

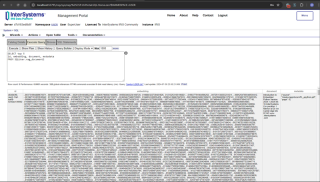

Taip perintah SQL di bawah dalam portal pengurusan untuk mendapatkan semula data vektor

SELECT top 5 id, embedding, document, metadata FROM SQLUser.rag_document2

Bersembang dengan dokumen Termakan yang dipilih

Pilih Dokumen daripada bahagian pilihan sembang pilih dan taip soalan. Aplikasi akan membaca data vektor dan mengembalikan jawapan yang berkaitan

Kod Python di bawah akan menyimpan dokumen yang dipilih ke dalam vektor:

from langchain_iris import IRISVector

from langchain_openai import OpenAIEmbeddings,ChatOpenAI

from langchain.chains import ConversationChain

from langchain.chains.conversation.memory import ConversationSummaryMemory

from langchain.chat_models import ChatOpenAI

<span>class RagOpr:</span>

<span>def ragSearch(self,prompt,id):</span>

#Concat document id with rag_doucment to get the collection name

COLLECTION_NAME = "rag_document"+str(id)

embeddings = OpenAIEmbeddings()

#Get vector store reference

db2 = IRISVector (

embedding_function=embeddings,

collection_name=COLLECTION_NAME,

connection_string=self.CONNECTION_STRING,

)

#Similarity search

docs_with_score = db2.similarity_search_with_score(prompt)

#Prepair the retrieved documents to pass to LLM

relevant_docs = ["".join(str(doc.page_content)) + " " for doc, _ in docs_with_score]

#init LLM

llm = ChatOpenAI(

temperature=0,

model_name="gpt-3.5-turbo"

)

#manage and handle LangChain multi-turn conversations

conversation_sum = ConversationChain(

llm=llm,

memory= ConversationSummaryMemory(llm=llm),

verbose=False

)

#Create prompt

template = f"""

Prompt: <span>{prompt}

Relevant Docuemnts: {relevant_docs}

"""</span>

#Return the answer

resp = conversation_sum(template)

return resp['response']

Untuk butiran lanjut, sila lawati iris-RAG-Gen halaman aplikasi pertukaran terbuka.

Terima kasih

Atas ialah kandungan terperinci IRIS-RAG-Gen: Memperibadikan Aplikasi ChatGPT RAG Dikuasakan oleh Carian Vektor IRIS. Untuk maklumat lanjut, sila ikut artikel berkaitan lain di laman web China PHP!

Menyenaraikan senarai di Python: Memilih kaedah yang betulMay 14, 2025 am 12:11 AM

Menyenaraikan senarai di Python: Memilih kaedah yang betulMay 14, 2025 am 12:11 AMTomergelistsinpython, operator youCanusethe, extendmethod, listcomprehension, oritertools.chain, eachwithspecificadvantages: 1) operatorSimpleButlessefficientficorlargelists;

Bagaimana untuk menggabungkan dua senarai dalam Python 3?May 14, 2025 am 12:09 AM

Bagaimana untuk menggabungkan dua senarai dalam Python 3?May 14, 2025 am 12:09 AMDalam Python 3, dua senarai boleh disambungkan melalui pelbagai kaedah: 1) Pengendali penggunaan, yang sesuai untuk senarai kecil, tetapi tidak cekap untuk senarai besar; 2) Gunakan kaedah Extend, yang sesuai untuk senarai besar, dengan kecekapan memori yang tinggi, tetapi akan mengubah suai senarai asal; 3) menggunakan * pengendali, yang sesuai untuk menggabungkan pelbagai senarai, tanpa mengubah suai senarai asal; 4) Gunakan itertools.chain, yang sesuai untuk set data yang besar, dengan kecekapan memori yang tinggi.

Rentetan senarai concatenate pythonMay 14, 2025 am 12:08 AM

Rentetan senarai concatenate pythonMay 14, 2025 am 12:08 AMMenggunakan kaedah Join () adalah cara yang paling berkesan untuk menyambungkan rentetan dari senarai di Python. 1) Gunakan kaedah Join () untuk menjadi cekap dan mudah dibaca. 2) Kitaran menggunakan pengendali tidak cekap untuk senarai besar. 3) Gabungan pemahaman senarai dan menyertai () sesuai untuk senario yang memerlukan penukaran. 4) Kaedah mengurangkan () sesuai untuk jenis pengurangan lain, tetapi tidak cekap untuk penyambungan rentetan. Kalimat lengkap berakhir.

Pelaksanaan Python, apa itu?May 14, 2025 am 12:06 AM

Pelaksanaan Python, apa itu?May 14, 2025 am 12:06 AMPythonexecutionistheprocessoftransformingpythoncodeIntoExecutableInstructions.1) TheinterpreterreadsTheCode, convertingIntoByteCode, yang mana -mana

Python: Apakah ciri -ciri utamaMay 14, 2025 am 12:02 AM

Python: Apakah ciri -ciri utamaMay 14, 2025 am 12:02 AMCiri -ciri utama Python termasuk: 1. Sintaks adalah ringkas dan mudah difahami, sesuai untuk pemula; 2. Sistem jenis dinamik, meningkatkan kelajuan pembangunan; 3. Perpustakaan standard yang kaya, menyokong pelbagai tugas; 4. Komuniti dan ekosistem yang kuat, memberikan sokongan yang luas; 5. Tafsiran, sesuai untuk skrip dan prototaip cepat; 6. Sokongan multi-paradigma, sesuai untuk pelbagai gaya pengaturcaraan.

Python: pengkompil atau penterjemah?May 13, 2025 am 12:10 AM

Python: pengkompil atau penterjemah?May 13, 2025 am 12:10 AMPython adalah bahasa yang ditafsirkan, tetapi ia juga termasuk proses penyusunan. 1) Kod python pertama kali disusun ke dalam bytecode. 2) Bytecode ditafsirkan dan dilaksanakan oleh mesin maya Python. 3) Mekanisme hibrid ini menjadikan python fleksibel dan cekap, tetapi tidak secepat bahasa yang disusun sepenuhnya.

Python untuk gelung vs semasa gelung: Bila menggunakan yang mana?May 13, 2025 am 12:07 AM

Python untuk gelung vs semasa gelung: Bila menggunakan yang mana?May 13, 2025 am 12:07 AMUseAforLoopWheniteratingOvereForforpecificNumbimes; Useaphileloopwhencontinuinguntilaconditionismet.forloopsareidealforknownownsequences, sementara yang tidak digunakan.

Gelung Python: Kesalahan yang paling biasaMay 13, 2025 am 12:07 AM

Gelung Python: Kesalahan yang paling biasaMay 13, 2025 am 12:07 AMPythonloopscanleadtoerrorslikeinfiniteloops, pengubahsuaianListsduringiteration, off-by-oneerrors, sifar-indexingissues, andnestedloopinefficies.toavoidthese: 1) use'i

Alat AI Hot

Undresser.AI Undress

Apl berkuasa AI untuk mencipta foto bogel yang realistik

AI Clothes Remover

Alat AI dalam talian untuk mengeluarkan pakaian daripada foto.

Undress AI Tool

Gambar buka pakaian secara percuma

Clothoff.io

Penyingkiran pakaian AI

Video Face Swap

Tukar muka dalam mana-mana video dengan mudah menggunakan alat tukar muka AI percuma kami!

Artikel Panas

Alat panas

MinGW - GNU Minimalis untuk Windows

Projek ini dalam proses untuk dipindahkan ke osdn.net/projects/mingw, anda boleh terus mengikuti kami di sana. MinGW: Port Windows asli bagi GNU Compiler Collection (GCC), perpustakaan import yang boleh diedarkan secara bebas dan fail pengepala untuk membina aplikasi Windows asli termasuk sambungan kepada masa jalan MSVC untuk menyokong fungsi C99. Semua perisian MinGW boleh dijalankan pada platform Windows 64-bit.

Hantar Studio 13.0.1

Persekitaran pembangunan bersepadu PHP yang berkuasa

Dreamweaver Mac版

Alat pembangunan web visual

DVWA

Damn Vulnerable Web App (DVWA) ialah aplikasi web PHP/MySQL yang sangat terdedah. Matlamat utamanya adalah untuk menjadi bantuan bagi profesional keselamatan untuk menguji kemahiran dan alatan mereka dalam persekitaran undang-undang, untuk membantu pembangun web lebih memahami proses mengamankan aplikasi web, dan untuk membantu guru/pelajar mengajar/belajar dalam persekitaran bilik darjah Aplikasi web keselamatan. Matlamat DVWA adalah untuk mempraktikkan beberapa kelemahan web yang paling biasa melalui antara muka yang mudah dan mudah, dengan pelbagai tahap kesukaran. Sila ambil perhatian bahawa perisian ini

Dreamweaver CS6

Alat pembangunan web visual