Peranti teknologi

Peranti teknologi AI

AI Kecekapan RNN adalah setanding dengan Transformer, seni bina baharu Google mempunyai dua keluaran berturut-turut: ia lebih kuat daripada Mamba pada skala yang sama

Kecekapan RNN adalah setanding dengan Transformer, seni bina baharu Google mempunyai dua keluaran berturut-turut: ia lebih kuat daripada Mamba pada skala yang samaKecekapan RNN adalah setanding dengan Transformer, seni bina baharu Google mempunyai dua keluaran berturut-turut: ia lebih kuat daripada Mamba pada skala yang sama

Pada Disember tahun lalu, seni bina baharu Mamba meletupkan bulatan AI dan melancarkan cabaran kepada Transformer yang sentiasa ada. Hari ini, pelancaran Google DeepMind “Hawk” dan “Griffin” menyediakan pilihan baharu untuk kalangan AI.

Tajuk kertas: Griffin: Mencampurkan Ulangan Linear Berpagar dengan Perhatian Tempatan untuk Model Bahasa Cekap -

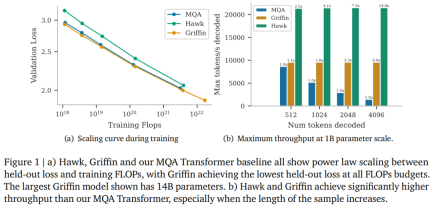

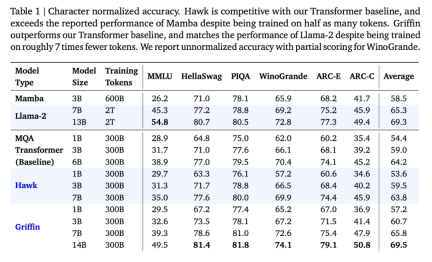

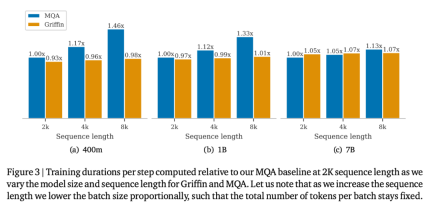

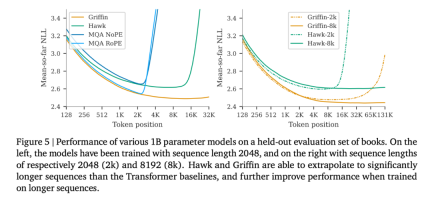

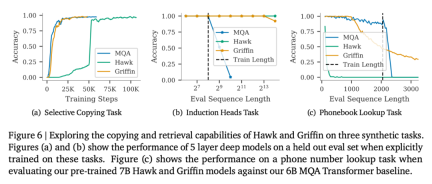

Pautan kertas: https://arxiv.org/pdf/24702.1942.194 Para penyelidik mengatakan bahawa Hawk dan Griffin mempamerkan penskalaan undang-undang kuasa antara kehilangan yang ditahan dan FLOP latihan, sehingga parameter 7B, seperti yang diperhatikan sebelum ini dalam Transformers. Antaranya, Griffin mencapai kerugian tertahan sedikit lebih rendah daripada garis dasar Transformer yang berkuasa pada semua saiz model.

Atas ialah kandungan terperinci Kecekapan RNN adalah setanding dengan Transformer, seni bina baharu Google mempunyai dua keluaran berturut-turut: ia lebih kuat daripada Mamba pada skala yang sama. Untuk maklumat lanjut, sila ikut artikel berkaitan lain di laman web China PHP!

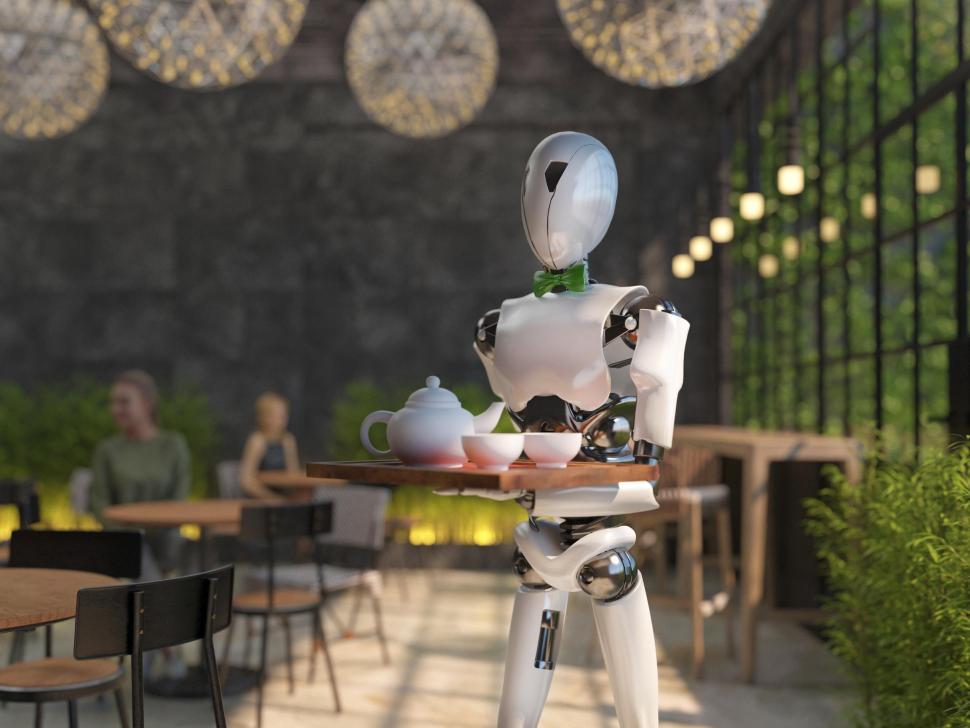

Memasak Inovasi: Bagaimana Kecerdasan Buatan Mengubah Perkhidmatan MakananApr 12, 2025 pm 12:09 PM

Memasak Inovasi: Bagaimana Kecerdasan Buatan Mengubah Perkhidmatan MakananApr 12, 2025 pm 12:09 PMAI Menambah Penyediaan Makanan Walaupun masih dalam penggunaan baru, sistem AI semakin digunakan dalam penyediaan makanan. Robot yang didorong oleh AI digunakan di dapur untuk mengautomasikan tugas penyediaan makanan, seperti membuang burger, membuat pizza, atau memasang SA

Panduan Komprehensif mengenai Python Namespaces & Variable ScopesApr 12, 2025 pm 12:00 PM

Panduan Komprehensif mengenai Python Namespaces & Variable ScopesApr 12, 2025 pm 12:00 PMPengenalan Memahami ruang nama, skop, dan tingkah laku pembolehubah dalam fungsi Python adalah penting untuk menulis dengan cekap dan mengelakkan kesilapan runtime atau pengecualian. Dalam artikel ini, kami akan menyelidiki pelbagai ASP

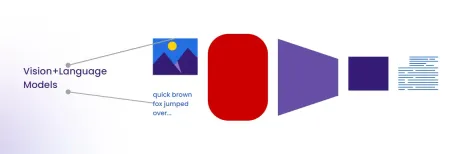

Panduan Komprehensif untuk Model Bahasa Visi (VLMS)Apr 12, 2025 am 11:58 AM

Panduan Komprehensif untuk Model Bahasa Visi (VLMS)Apr 12, 2025 am 11:58 AMPengenalan Bayangkan berjalan melalui galeri seni, dikelilingi oleh lukisan dan patung yang terang. Sekarang, bagaimana jika anda boleh bertanya setiap soalan dan mendapatkan jawapan yang bermakna? Anda mungkin bertanya, "Kisah apa yang anda ceritakan?

MediaTek meningkatkan barisan premium dengan Kompanio Ultra dan Dimensity 9400Apr 12, 2025 am 11:52 AM

MediaTek meningkatkan barisan premium dengan Kompanio Ultra dan Dimensity 9400Apr 12, 2025 am 11:52 AMMeneruskan irama produk, bulan ini MediaTek telah membuat satu siri pengumuman, termasuk Kompanio Ultra dan Dimensity 9400 yang baru. Produk ini mengisi bahagian perniagaan MediaTek yang lebih tradisional, termasuk cip untuk telefon pintar

Minggu ini di AI: Walmart menetapkan trend fesyen sebelum mereka pernah berlakuApr 12, 2025 am 11:51 AM

Minggu ini di AI: Walmart menetapkan trend fesyen sebelum mereka pernah berlakuApr 12, 2025 am 11:51 AM#1 Google melancarkan Agent2Agent Cerita: Ia Isnin pagi. Sebagai perekrut berkuasa AI, anda bekerja lebih pintar, tidak lebih sukar. Anda log masuk ke papan pemuka syarikat anda di telefon anda. Ia memberitahu anda tiga peranan kritikal telah diperolehi, dijadualkan, dan dijadualkan untuk

AI Generatif Bertemu PsychobabbleApr 12, 2025 am 11:50 AM

AI Generatif Bertemu PsychobabbleApr 12, 2025 am 11:50 AMSaya akan meneka bahawa anda mesti. Kita semua seolah -olah tahu bahawa psychobabble terdiri daripada pelbagai perbualan yang menggabungkan pelbagai terminologi psikologi dan sering akhirnya menjadi tidak dapat difahami atau sepenuhnya tidak masuk akal. Semua yang anda perlu lakukan untuk memuntahkan fo

Prototaip: saintis menjadikan kertas menjadi plastikApr 12, 2025 am 11:49 AM

Prototaip: saintis menjadikan kertas menjadi plastikApr 12, 2025 am 11:49 AMHanya 9.5% plastik yang dihasilkan pada tahun 2022 dibuat daripada bahan kitar semula, menurut satu kajian baru yang diterbitkan minggu ini. Sementara itu, plastik terus menumpuk di tapak pelupusan sampah -dan ekosistem -sekitar dunia. Tetapi bantuan sedang dalam perjalanan. Pasukan Engin

Kebangkitan Penganalisis AI: Mengapa ini boleh menjadi pekerjaan yang paling penting dalam Revolusi AIApr 12, 2025 am 11:41 AM

Kebangkitan Penganalisis AI: Mengapa ini boleh menjadi pekerjaan yang paling penting dalam Revolusi AIApr 12, 2025 am 11:41 AMPerbualan baru -baru ini dengan Andy Macmillan, Ketua Pegawai Eksekutif Platform Analytics Enterprise terkemuka Alteryx, menonjolkan peranan kritikal namun kurang dihargai ini dalam revolusi AI. Seperti yang dijelaskan oleh Macmillan, jurang antara data perniagaan mentah dan maklumat siap sedia

Alat AI Hot

Undresser.AI Undress

Apl berkuasa AI untuk mencipta foto bogel yang realistik

AI Clothes Remover

Alat AI dalam talian untuk mengeluarkan pakaian daripada foto.

Undress AI Tool

Gambar buka pakaian secara percuma

Clothoff.io

Penyingkiran pakaian AI

AI Hentai Generator

Menjana ai hentai secara percuma.

Artikel Panas

Alat panas

MinGW - GNU Minimalis untuk Windows

Projek ini dalam proses untuk dipindahkan ke osdn.net/projects/mingw, anda boleh terus mengikuti kami di sana. MinGW: Port Windows asli bagi GNU Compiler Collection (GCC), perpustakaan import yang boleh diedarkan secara bebas dan fail pengepala untuk membina aplikasi Windows asli termasuk sambungan kepada masa jalan MSVC untuk menyokong fungsi C99. Semua perisian MinGW boleh dijalankan pada platform Windows 64-bit.

SublimeText3 Linux versi baharu

SublimeText3 Linux versi terkini

DVWA

Damn Vulnerable Web App (DVWA) ialah aplikasi web PHP/MySQL yang sangat terdedah. Matlamat utamanya adalah untuk menjadi bantuan bagi profesional keselamatan untuk menguji kemahiran dan alatan mereka dalam persekitaran undang-undang, untuk membantu pembangun web lebih memahami proses mengamankan aplikasi web, dan untuk membantu guru/pelajar mengajar/belajar dalam persekitaran bilik darjah Aplikasi web keselamatan. Matlamat DVWA adalah untuk mempraktikkan beberapa kelemahan web yang paling biasa melalui antara muka yang mudah dan mudah, dengan pelbagai tahap kesukaran. Sila ambil perhatian bahawa perisian ini

Muat turun versi mac editor Atom

Editor sumber terbuka yang paling popular

Pelayar Peperiksaan Selamat

Pelayar Peperiksaan Selamat ialah persekitaran pelayar selamat untuk mengambil peperiksaan dalam talian dengan selamat. Perisian ini menukar mana-mana komputer menjadi stesen kerja yang selamat. Ia mengawal akses kepada mana-mana utiliti dan menghalang pelajar daripada menggunakan sumber yang tidak dibenarkan.