
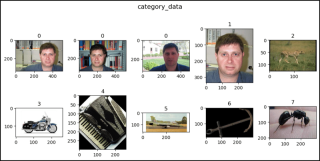

*My post explains Caltech 101.

Caltech101() can use the Caltech 101 dataset, as shown below:

*Memos:

- The 1st argument is root(Required-Type:str or pathlib.Path). *An absolute or relative path is possible.

- The 2nd argument is target_type(Optional-Default:"category"-Type:str, or tuple or list of str). *"category" and/or "annotation" can be set.

- The 3rd argument is transform(Optional-Default:None-Type:callable).

- The 4th argument is target_transform(Optional-Default:None-Type:callable).

- The 5th argument is download(Optional-Default:False-Type:bool).

  *Memos:

  - If it's True, the dataset is downloaded from the internet and extracted (unzipped) to root.

  - If it's True and the dataset is already downloaded, the dataset is extracted.

  - If it's True and the dataset is already downloaded and extracted, nothing happens.

  - It should be False if the dataset is already downloaded and extracted, because that is faster.

  - You can manually download and extract the dataset (101_ObjectCategories.tar.gz and Annotations.tar) from here to data/caltech101/.

- About the category of image indices: Faces(0) is 0~434, Faces_easy(1) is 435~869, Leopards(2) is 870~1069, Motorbikes(3) is 1070~1867, accordion(4) is 1868~1922, airplanes(5) is 1923~2722, anchor(6) is 2723~2764, ant(7) is 2765~2806, barrel(8) is 2807~2853, bass(9) is 2854~2907, etc.
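
The notes above recommend download=False once the files are already in place. A minimal sketch of choosing the flag automatically follows. It assumes the extracted layout is ROOT/caltech101/101_ObjectCategories plus ROOT/caltech101/Annotations (consistent with data/caltech101/ above); should_download is a hypothetical helper, not part of torchvision.

```python
from __future__ import annotations

from pathlib import Path


def should_download(root: str | Path) -> bool:
    """Return True if the extracted Caltech 101 folders are missing under root.

    Assumption: the dataset lives in ROOT/caltech101/ with the two extracted
    folders 101_ObjectCategories and Annotations. This is a hypothetical
    helper, not a torchvision function.
    """
    base = Path(root) / "caltech101"
    required = ("101_ObjectCategories", "Annotations")
    # Ask for a download only when at least one required folder is absent.
    return not all((base / name).is_dir() for name in required)
```

For example, Caltech101(root="data", download=should_download("data")) would only request a download while those folders are missing.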

```python
from torchvision.datasets import Caltech101

category_data = Caltech101(
    root="data"
)

category_data = Caltech101(
    root="data",
    target_type="category",
    transform=None,
    target_transform=None,
    download=False
)

annotation_data = Caltech101(
    root="data",
    target_type="annotation"
)

all_data = Caltech101(
    root="data",
    target_type=["category", "annotation"]
)

len(category_data), len(annotation_data), len(all_data)
# (8677, 8677, 8677)
```
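
The three datasets differ only in the shape of the target. As a sketch, assuming the behaviour visible in the outputs of this post (a plain str becomes a one-item list, one type gives a bare target, several types give a tuple in target_type order), the shaping can be modelled with the hypothetical helpers below. They are illustrations, not torchvision's code.

```python
from __future__ import annotations

from typing import Any, Sequence

VALID_TYPES = ("category", "annotation")


def normalize_target_type(target_type: str | Sequence[str]) -> list[str]:
    """Turn "category" into ["category"] and validate every entry (hypothetical)."""
    types = [target_type] if isinstance(target_type, str) else list(target_type)
    for t in types:
        if t not in VALID_TYPES:
            raise ValueError(f"unknown target_type: {t!r}")
    return types


def shape_target(target_type: str | Sequence[str], label: int, contour: Any) -> Any:
    """Return a bare target for one type, or a tuple ordered like target_type."""
    parts = {"category": label, "annotation": contour}
    values = [parts[t] for t in normalize_target_type(target_type)]
    return values[0] if len(values) == 1 else tuple(values)
```

Under this model, target_type=["category", "annotation"] explains why all_data[0] returns (image, (0, array(...))) rather than a flat three-item tuple.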

```python
category_data
# Dataset Caltech101
#     Number of datapoints: 8677
#     Root location: data\caltech101
#     Target type: ['category']

category_data.root
# 'data/caltech101'

category_data.target_type
# ['category']

print(category_data.transform)
# None

print(category_data.target_transform)
# None

category_data.download
# <bound method Caltech101.download of Dataset Caltech101
#     Number of datapoints: 8677
#     Root location: data\caltech101
#     Target type: ['category']>

len(category_data.categories)
# 101

category_data.categories
# ['Faces', 'Faces_easy', 'Leopards', 'Motorbikes', 'accordion',
#  'airplanes', 'anchor', 'ant', 'barrel', 'bass', 'beaver',
#  'binocular', 'bonsai', 'brain', 'brontosaurus', 'buddha',
#  'butterfly', 'camera', 'cannon', 'car_side', 'ceiling_fan',
#  'cellphone', 'chair', 'chandelier', 'cougar_body', 'cougar_face', ...]

len(category_data.annotation_categories)
# 101

category_data.annotation_categories
# ['Faces_2', 'Faces_3', 'Leopards', 'Motorbikes_16', 'accordion',
#  'Airplanes_Side_2', 'anchor', 'ant', 'barrel', 'bass',
#  'beaver', 'binocular', 'bonsai', 'brain', 'brontosaurus',
#  'buddha', 'butterfly', 'camera', 'cannon', 'car_side',
#  'ceiling_fan', 'cellphone', 'chair', 'chandelier', 'cougar_body', ...]
```
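
Most entries of categories and annotation_categories are identical, but some differ (for example Faces vs Faces_2). Because the two lists are index-aligned, a small sketch can recover the renamed pairs. The sample lists are the first ten entries copied from the outputs above; on a loaded dataset you would pass category_data.categories and category_data.annotation_categories instead. renamed_pairs is a hypothetical helper.

```python
def renamed_pairs(categories, annotation_categories):
    """Map each image-folder name to its annotation-folder name where they differ."""
    if len(categories) != len(annotation_categories):
        raise ValueError("lists must be index-aligned and equally long")
    return {
        img_name: ann_name
        for img_name, ann_name in zip(categories, annotation_categories)
        if img_name != ann_name
    }


# First ten entries of both lists, copied from the outputs above.
categories = ["Faces", "Faces_easy", "Leopards", "Motorbikes", "accordion",
              "airplanes", "anchor", "ant", "barrel", "bass"]
annotation_categories = ["Faces_2", "Faces_3", "Leopards", "Motorbikes_16", "accordion",
                         "Airplanes_Side_2", "anchor", "ant", "barrel", "bass"]

print(renamed_pairs(categories, annotation_categories))
# {'Faces': 'Faces_2', 'Faces_easy': 'Faces_3', 'Motorbikes': 'Motorbikes_16', 'airplanes': 'Airplanes_Side_2'}
```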

```python
category_data[0]
# (<PIL.JpegImagePlugin.JpegImageFile image mode=RGB size=510x337>, 0)

category_data[1]
# (<PIL.JpegImagePlugin.JpegImageFile image mode=RGB size=519x343>, 0)

category_data[2]
# (<PIL.JpegImagePlugin.JpegImageFile image mode=RGB size=492x325>, 0)

category_data[435]
# (<PIL.JpegImagePlugin.JpegImageFile image mode=RGB size=290x334>, 1)

category_data[870]
# (<PIL.JpegImagePlugin.JpegImageFile image mode=RGB size=192x128>, 2)
```
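
The index ranges listed at the top of this post follow from the number of images per category, since the categories are stored back to back. A minimal sketch, using per-category counts derived from those ranges (Faces covers 0~434, so it holds 435 images, and so on), computes each range with a running total and finds the label of an index with bisect. COUNTS, STARTS, index_range and label_of are hypothetical names, and only the first ten labels are covered.

```python
from __future__ import annotations

from bisect import bisect_right
from itertools import accumulate

# Images per category for labels 0..9, derived from the listed index ranges.
COUNTS = [435, 435, 200, 798, 55, 800, 42, 42, 47, 54]

# STARTS[k] is the first dataset index of label k: [0, 435, 870, ...].
STARTS = [0, *accumulate(COUNTS)][:-1]


def index_range(label: int) -> tuple[int, int]:
    """Return the inclusive (first, last) dataset index for a label."""
    first = STARTS[label]
    return first, first + COUNTS[label] - 1


def label_of(index: int) -> int:
    """Return the label whose range contains index (labels 0..9 only)."""
    if not 0 <= index < sum(COUNTS):
        raise IndexError(index)
    # The last start that is <= index marks the owning category.
    return bisect_right(STARTS, index) - 1


print(index_range(3))  # (1070, 1867)
print(label_of(435))   # 1
print(label_of(870))   # 2
```

On the loaded dataset itself, category_data[435] returns label 1, and category_data.categories[1] is 'Faces_easy'.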

```python
annotation_data[0]
# (<PIL.JpegImagePlugin.JpegImageFile image mode=RGB size=510x337>,
#  array([[10.00958466, 8.18210863, 8.18210863, 10.92332268, ...],
#         [132.30670927, 120.42811502, 103.52396166, 90.73162939, ...]]))

annotation_data[1]
# (<PIL.JpegImagePlugin.JpegImageFile image mode=RGB size=519x343>,
#  array([[15.19298246, 13.71929825, 15.19298246, 19.61403509, ...],
#         [121.5877193, 103.90350877, 80.81578947, 64.11403509, ...]]))

annotation_data[2]
# (<PIL.JpegImagePlugin.JpegImageFile image mode=RGB size=492x325>,
#  array([[10.40789474, 7.17807018, 5.79385965, 9.02368421, ...],
#         [131.30789474, 120.69561404, 102.23947368, 86.09035088, ...]]))

annotation_data[435]
# (<PIL.JpegImagePlugin.JpegImageFile image mode=RGB size=290x334>,
#  array([[64.52631579, 95.31578947, 123.26315789, 149.31578947, ...],
#         [15.42105263, 8.31578947, 10.21052632, 28.21052632, ...]]))

annotation_data[870]
# (<PIL.JpegImagePlugin.JpegImageFile image mode=RGB size=192x128>,
#  array([[2.96536524, 7.55604534, 19.45780856, 33.73992443, ...],
#         [23.63413098, 32.13539043, 33.83564232, 8.84193955, ...]]))
```
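
Each annotation is a two-row array. Assuming, as show_images in this post does when it unpacks px, py, that the first row holds x coordinates and the second holds y coordinates, a small sketch can turn it into (x, y) points and report its extent. The helper names are hypothetical, and the sample is the first four columns of annotation_data[0] shown above; the same code accepts a 2xN NumPy array because unpacking it yields its two rows.

```python
from __future__ import annotations

from typing import Sequence


def contour_points(contour: Sequence[Sequence[float]]) -> list[tuple[float, float]]:
    """Pair row 0 (assumed x) with row 1 (assumed y) into (x, y) points."""
    xs, ys = contour  # assumption: exactly two rows, x first, y second
    return [(float(x), float(y)) for x, y in zip(xs, ys)]


def contour_extent(contour: Sequence[Sequence[float]]) -> tuple[float, float, float, float]:
    """Return (min_x, min_y, max_x, max_y) of the contour points."""
    pts = contour_points(contour)
    xs = [x for x, _ in pts]
    ys = [y for _, y in pts]
    return min(xs), min(ys), max(xs), max(ys)


# First four columns of annotation_data[0], copied from the output above.
sample = [[10.00958466, 8.18210863, 8.18210863, 10.92332268],
          [132.30670927, 120.42811502, 103.52396166, 90.73162939]]

print(contour_points(sample)[0])  # (10.00958466, 132.30670927)
print(contour_extent(sample))     # (8.18210863, 90.73162939, 10.92332268, 132.30670927)
```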

```python
all_data[0]
# (<PIL.JpegImagePlugin.JpegImageFile image mode=RGB size=510x337>,
#  (0, array([[10.00958466, 8.18210863, 8.18210863, 10.92332268, ...],
#             [132.30670927, 120.42811502, 103.52396166, 90.73162939, ...]]))

all_data[1]
# (<PIL.JpegImagePlugin.JpegImageFile image mode=RGB size=519x343>,
#  (0, array([[15.19298246, 13.71929825, 15.19298246, 19.61403509, ...],
#             [121.5877193, 103.90350877, 80.81578947, 64.11403509, ...]]))

all_data[2]
# (<PIL.JpegImagePlugin.JpegImageFile image mode=RGB size=492x325>,
#  (0, array([[10.40789474, 7.17807018, 5.79385965, 9.02368421, ...],
#             [131.30789474, 120.69561404, 102.23947368, 86.09035088, ...]]))

all_data[3]
# (<PIL.JpegImagePlugin.JpegImageFile image mode=RGB size=538x355>,
#  (0, array([[19.54035088, 18.57894737, 26.27017544, 38.2877193, ...],
#             [131.49122807, 100.24561404, 74.2877193, 49.29122807, ...]]))

all_data[4]
# (<PIL.JpegImagePlugin.JpegImageFile image mode=RGB size=528x349>,
#  (0, array([[11.87982456, 11.87982456, 13.86578947, 15.35526316, ...],
#             [128.34649123, 105.50789474, 91.60614035, 76.71140351, ...]]))
```
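
Since all_data returns its target as a (label, contour) tuple, the target_transform argument can reshape it into something easier to read. A minimal sketch, assuming target_type=["category", "annotation"] in that order; to_named_target is a hypothetical helper, and a nested list stands in for the contour array so the example runs without the dataset.

```python
from __future__ import annotations

from typing import Any


def to_named_target(target: tuple[int, Any]) -> dict[str, Any]:
    """Turn a (label, contour) target into a dict.

    Assumes target_type=["category", "annotation"]; with the reverse order the
    tuple would be (contour, label) and the unpacking must be swapped.
    """
    label, contour = target
    return {"label": label, "contour": contour}


# Stand-in for the (0, array([[...], [...]])) target of all_data[0].
fake_target = (0, [[10.0, 8.2], [132.3, 120.4]])
print(to_named_target(fake_target)["label"])  # 0
```

It would be passed as Caltech101(root="data", target_type=["category", "annotation"], target_transform=to_named_target), after which each item's target should be a dict with "label" and "contour" keys.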

```python
import matplotlib.pyplot as plt

def show_images(data, main_title=None):
    plt.figure(figsize=(10, 5))
    plt.suptitle(t=main_title, y=1.0, fontsize=14)
    # Three Faces images, then the first image of each following category.
    ims = (0, 1, 2, 435, 870, 1070, 1868, 1923, 2723, 2765, 2807, 2854)
    for i, j in enumerate(ims, start=1):
        plt.subplot(2, 5, i)
        if len(data.target_type) == 1:
            if data.target_type[0] == "category":
                im, lab = data[j]
                plt.title(label=lab)
            elif data.target_type[0] == "annotation":
                # The contour array is unpacked into an x row and a y row.
                im, (px, py) = data[j]
                plt.scatter(x=px, y=py)
            plt.imshow(X=im)
        elif len(data.target_type) == 2:
            # The target tuple follows the order of target_type.
            if data.target_type[0] == "category":
                im, (lab, (px, py)) = data[j]
            elif data.target_type[0] == "annotation":
                im, ((px, py), lab) = data[j]
            plt.title(label=lab)
            plt.imshow(X=im)
            plt.scatter(x=px, y=py)
        # The 2x5 grid holds only 10 images, so stop there.
        if i == 10:
            break
    plt.tight_layout()
    plt.show()

show_images(data=category_data, main_title="category_data")
show_images(data=annotation_data, main_title="annotation_data")
show_images(data=all_data, main_title="all_data")
```
