Buy Me a Coffee☕

*Memos:

- My post explains CocoDetection() using train2014 with captions_train2014.json, instances_train2014.json and person_keypoints_train2014.json, val2014 with captions_val2014.json, instances_val2014.json and person_keypoints_val2014.json, test2014 with image_info_test2014.json, and test2015 with image_info_test2015.json and image_info_test-dev2015.json.

- My post explains CocoDetection() using train2017 with captions_train2017.json, instances_train2017.json and person_keypoints_train2017.json, val2017 with captions_val2017.json, instances_val2017.json and person_keypoints_val2017.json, and test2017 with image_info_test2017.json and image_info_test-dev2017.json.

- My post explains CocoDetection() using train2017 with stuff_train2017.json, val2017 with stuff_val2017.json, stuff_train2017_pixelmaps with stuff_train2017.json, stuff_val2017_pixelmaps with stuff_val2017.json, panoptic_train2017 with panoptic_train2017.json, panoptic_val2017 with panoptic_val2017.json, and unlabeled2017 with image_info_unlabeled2017.json.

- My post explains MS COCO.

CocoCaptions() can use the MS COCO dataset as shown below. *This is for train2014 with captions_train2014.json, instances_train2014.json and person_keypoints_train2014.json, val2014 with captions_val2014.json, instances_val2014.json and person_keypoints_val2014.json, test2014 with image_info_test2014.json, and test2015 with image_info_test2015.json and image_info_test-dev2015.json:

*Memos:

- The 1st argument is root(Required-Type:str or pathlib.Path):
  *Memos:
  - It's the path to the images.
  - An absolute or relative path is possible.

- The 2nd argument is annFile(Required-Type:str or pathlib.Path):
  *Memos:
  - It's the path to the annotations.
  - An absolute or relative path is possible.

- The 3rd argument is transform(Optional-Default:None-Type:callable).

- The 4th argument is target_transform(Optional-Default:None-Type:callable).

- The 5th argument is transforms(Optional-Default:None-Type:callable).
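As a rough illustration of the callable arguments: for CocoCaptions, the target handed to target_transform should be the list of caption strings of one image. The sketch below shows one possible callable and tries it on a hand-written list instead of a real dataset. The name `clean_captions` is hypothetical and not part of torchvision.

```python
def clean_captions(captions):
    """Hypothetical target_transform: strip whitespace and lowercase each caption."""
    return [caption.strip().lower() for caption in captions]


# Stand-in for the caption list that CocoCaptions returns for one image.
sample = ['The person is in the clearing of a wooded area. ',
          'a person throwing a frisbee at many trees ']
cleaned = clean_captions(sample)
print(cleaned)
# ['the person is in the clearing of a wooded area.',
#  'a person throwing a frisbee at many trees']
```

Such a function could then be passed as `target_transform=clean_captions` next to `root` and `annFile`. As far as I know, torchvision accepts either `transforms` or the `transform`/`target_transform` pair, but not both in the same call.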

from torchvision.datasets import CocoCaptions

cap_train2014_data = CocoCaptions(
    root="data/coco/imgs/train2014",
    annFile="data/coco/anns/trainval2014/captions_train2014.json"
)

# Same call with every optional argument written out at its default value.
cap_train2014_data = CocoCaptions(
    root="data/coco/imgs/train2014",
    annFile="data/coco/anns/trainval2014/captions_train2014.json",
    transform=None,
    target_transform=None,
    transforms=None
)

ins_train2014_data = CocoCaptions(
    root="data/coco/imgs/train2014",
    annFile="data/coco/anns/trainval2014/instances_train2014.json"
)

pk_train2014_data = CocoCaptions(
    root="data/coco/imgs/train2014",
    annFile="data/coco/anns/trainval2014/person_keypoints_train2014.json"
)

len(cap_train2014_data), len(ins_train2014_data), len(pk_train2014_data)
# (82783, 82783, 82783)

cap_val2014_data = CocoCaptions(
    root="data/coco/imgs/val2014",
    annFile="data/coco/anns/trainval2014/captions_val2014.json"
)

ins_val2014_data = CocoCaptions(
    root="data/coco/imgs/val2014",
    annFile="data/coco/anns/trainval2014/instances_val2014.json"
)

pk_val2014_data = CocoCaptions(
    root="data/coco/imgs/val2014",
    annFile="data/coco/anns/trainval2014/person_keypoints_val2014.json"
)

len(cap_val2014_data), len(ins_val2014_data), len(pk_val2014_data)
# (40504, 40504, 40504)

test2014_data = CocoCaptions(
    root="data/coco/imgs/test2014",
    annFile="data/coco/anns/test2014/image_info_test2014.json"
)

test2015_data = CocoCaptions(
    root="data/coco/imgs/test2015",
    annFile="data/coco/anns/test2015/image_info_test2015.json"
)

testdev2015_data = CocoCaptions(
    root="data/coco/imgs/test2015",
    annFile="data/coco/anns/test2015/image_info_test-dev2015.json"
)

len(test2014_data), len(test2015_data), len(testdev2015_data)
# (40775, 81434, 20288)
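The captions, instances and keypoints datasets of the same split report the same length, which suggests that len() counts the images listed in the annotation file rather than the annotations themselves. The sketch below reproduces that idea on a hand-made, COCO-style dictionary. The file names and captions are invented for illustration and are not real MS COCO data.

```python
# Hand-made miniature of a COCO caption file; all values are invented.
tiny_coco = {
    "images": [
        {"id": 1, "file_name": "a.jpg"},
        {"id": 2, "file_name": "b.jpg"},
        {"id": 3, "file_name": "c.jpg"},
    ],
    "annotations": [
        {"id": 10, "image_id": 1, "caption": "A zebra in a field."},
        {"id": 11, "image_id": 1, "caption": "A zebra grazing."},
        {"id": 12, "image_id": 2, "caption": "A man playing tennis."},
        {"id": 13, "image_id": 2, "caption": "A tennis player on a court."},
    ],
}

# Group captions by image id; an image without annotations keeps an empty list.
captions_by_image = {img["id"]: [] for img in tiny_coco["images"]}
for ann in tiny_coco["annotations"]:
    captions_by_image[ann["image_id"]].append(ann["caption"])

num_images = len(tiny_coco["images"])
num_captions = len(tiny_coco["annotations"])
print(num_images, num_captions)  # 3 4
print(captions_by_image[3])      # []
```

Under this reading, a dataset built from an annotation file with no captions still has one entry per image, each with an empty target.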

cap_train2014_data
# Dataset CocoCaptions
#     Number of datapoints: 82783
#     Root location: data/coco/imgs/train2014

cap_train2014_data.root
# 'data/coco/imgs/train2014'

print(cap_train2014_data.transform)
# None

print(cap_train2014_data.target_transform)
# None

print(cap_train2014_data.transforms)
# None

cap_train2014_data.coco
# <pycocotools.coco.COCO at 0x...>

cap_train2014_data[26]
# (<PIL.Image.Image image mode=RGB size=427x640>,
#  ['three zeebras standing in a grassy field walking',
#   'Three zebras are standing in an open field.',
#   'Three zebra are walking through the grass of a field.',
#   'Three zebras standing on a grassy dirt field.',
#   'Three zebras grazing in green grass field area.'])

cap_train2014_data[179]
# (<PIL.Image.Image image mode=RGB size=480x640>,
#  ['a young guy walking in a forrest holding an object in his hand',
#   'A partially black and white photo of a man throwing ... the woods.',
#   'A disc golfer releases a throw from a dirt tee ... wooded course.',
#   'The person is in the clearing of a wooded area. ',
#   'a person throwing a frisbee at many trees '])

cap_train2014_data[194]
# (<PIL.Image.Image image mode=RGB size=428x640>,
#  ['A person on a court with a tennis racket.',
#   'A man that is holding a racquet standing in the grass.',
#   'A tennis player hits the ball during a match.',
#   'The tennis player is poised to serve a ball.',
#   'Man in white playing tennis on a court.'])

ins_train2014_data[26] # Error

ins_train2014_data[179] # Error

ins_train2014_data[194] # Error

pk_train2014_data[26]
# (<PIL.Image.Image image mode=RGB size=427x640>, [])

pk_train2014_data[179] # Error

pk_train2014_data[194] # Error
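The errors above are most likely KeyErrors: CocoCaptions appears to build its target by reading the caption field of every annotation, and instance or keypoint annotations do not carry one. An image with no annotations at all would instead give an empty list, which would explain the [] result for pk_train2014_data[26]. The sketch below mimics that behavior with hand-written dictionaries. `extract_captions` is a hypothetical stand-in, not torchvision code, and the annotation values are invented.

```python
def extract_captions(annotations):
    """Hypothetical mimic: read the "caption" field of every annotation."""
    return [ann["caption"] for ann in annotations]


caption_anns = [{"image_id": 1, "caption": "Three zebras in a field."}]
instance_anns = [{"image_id": 1, "category_id": 24, "bbox": [0, 0, 10, 10]}]

print(extract_captions(caption_anns))  # ['Three zebras in a field.']
print(extract_captions([]))            # []

try:
    extract_captions(instance_anns)
except KeyError as err:
    error_name = type(err).__name__
    print(error_name, err)  # KeyError 'caption'
```

For instance and keypoint annotation files, CocoDetection() is the class meant to return the full annotation dictionaries.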

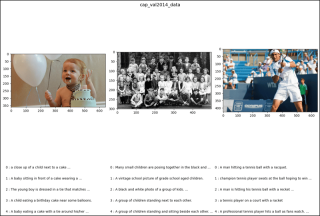

cap_val2014_data[26]
# (<PIL.Image.Image image mode=RGB size=640x360>,
#  ['a close up of a child next to a cake with balloons',
#   'A baby sitting in front of a cake wearing a tie.',
#   'The young boy is dressed in a tie that matches his cake. ',
#   'A child eating a birthday cake near some balloons.',
#   'A baby eating a cake with a tie around ... the background.'])

cap_val2014_data[179]
# (<PIL.Image.Image image mode=RGB size=500x302>,
#  ['Many small children are posing together in the ... white photo. ',
#   'A vintage school picture of grade school aged children.',
#   'A black and white photo of a group of kids.',
#   'A group of children standing next to each other.',
#   'A group of children standing and sitting beside each other. '])

cap_val2014_data[194]
# (<PIL.Image.Image image mode=RGB size=640x427>,
#  ['A man hitting a tennis ball with a racquet.',
#   'champion tennis player swats at the ball hoping to win',
#   'A man is hitting his tennis ball with a recket on the court.',
#   'a tennis player on a court with a racket',
#   'A professional tennis player hits a ball as fans watch.'])

ins_val2014_data[26] # Error

ins_val2014_data[179] # Error

ins_val2014_data[194] # Error

pk_val2014_data[26] # Error

pk_val2014_data[179] # Error

pk_val2014_data[194] # Error

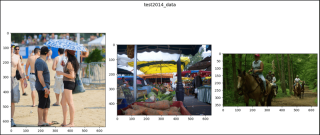

test2014_data[26]
# (<PIL.Image.Image image mode=RGB size=640x640>, [])

test2014_data[179]
# (<PIL.Image.Image image mode=RGB size=640x480>, [])

test2014_data[194]
# (<PIL.Image.Image image mode=RGB size=640x360>, [])

test2015_data[26]
# (<PIL.Image.Image image mode=RGB size=640x480>, [])

test2015_data[179]
# (<PIL.Image.Image image mode=RGB size=640x426>, [])

test2015_data[194]
# (<PIL.Image.Image image mode=RGB size=640x480>, [])

testdev2015_data[26]
# (<PIL.Image.Image image mode=RGB size=640x360>, [])

testdev2015_data[179]
# (<PIL.Image.Image image mode=RGB size=640x480>, [])

testdev2015_data[194]
# (<PIL.Image.Image image mode=RGB size=640x480>, [])

import matplotlib.pyplot as plt
from matplotlib.patches import Polygon, Rectangle
import numpy as np
from pycocotools import mask

def show_images(data, ims, main_title=None):
    # Name of the image folder, e.g. "train2014" (assumes root is a str).
    file = data.root.split('/')[-1]
    fig, axes = plt.subplots(nrows=1, ncols=3, figsize=(14, 8))
    fig.suptitle(t=main_title, y=0.9, fontsize=14)
    x_crd = 0.02
    for i, axis in zip(ims, axes.ravel()):
        if data[i][1]:
            # The sample has captions: show the image and list them below it.
            im, anns = data[i]
            axis.imshow(X=im)
            y_crd = 0.0
            for j, ann in enumerate(iterable=anns):
                text_list = ann.split()
                # Shorten only captions longer than 10 words.
                if len(text_list) > 10:
                    text = " ".join(text_list[0:10]) + " ..."
                else:
                    text = " ".join(text_list)
                plt.figtext(x=x_crd, y=y_crd, fontsize=10,
                            s=f'{j} : {text}')
                y_crd -= 0.06
            x_crd += 0.325
            # Extra offset for index 2 of val2017; not triggered with the
            # indices and folders used in this post.
            if i == 2 and file == "val2017":
                x_crd += 0.06
        elif not data[i][1]:
            # The sample has no captions (test sets): show the image only.
            im, _ = data[i]
            axis.imshow(X=im)
    fig.tight_layout()
    plt.show()

ims = (26, 179, 194)

show_images(data=cap_train2014_data, ims=ims,
            main_title="cap_train2014_data")
show_images(data=cap_val2014_data, ims=ims,
            main_title="cap_val2014_data")
show_images(data=test2014_data, ims=ims,
            main_title="test2014_data")
show_images(data=test2015_data, ims=ims,
            main_title="test2015_data")
show_images(data=testdev2015_data, ims=ims,
            main_title="testdev2015_data")
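show_images() shortens long captions before drawing them under each image. The same step can be tried on its own, without matplotlib or the dataset, as in the minimal sketch below. `shorten_caption` and `max_words` are hypothetical names introduced here for illustration, and the two sample strings are captions copied from the outputs shown earlier.

```python
def shorten_caption(caption, max_words=10):
    """Keep at most max_words words and mark any cut with ' ...'."""
    words = caption.split()
    if len(words) > max_words:
        return " ".join(words[:max_words]) + " ..."
    return " ".join(words)


short_text = shorten_caption("Three zebras standing on a grassy dirt field.")
long_text = shorten_caption(
    "a young guy walking in a forrest holding an object in his hand")
print(short_text)  # Three zebras standing on a grassy dirt field.
print(long_text)   # a young guy walking in a forrest holding an object ...
```

Splitting and rejoining on whitespace also drops the trailing spaces that some captions contain.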
