Today we work through alsotang's crawler tutorial and follow along by writing a simple crawler for CNode.

Create project crawler-demo

We first create an Express project and delete everything in app.js, because we do not need to serve any content on the Web for now. Alternatively, we can run npm install express in an empty folder to get just the Express functionality we need.

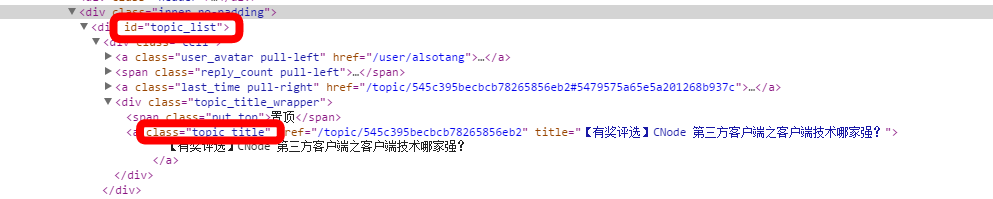

Target website analysis

As shown in the picture, this is part of a div on the CNode homepage. We use its ids and classes to locate the information we need.

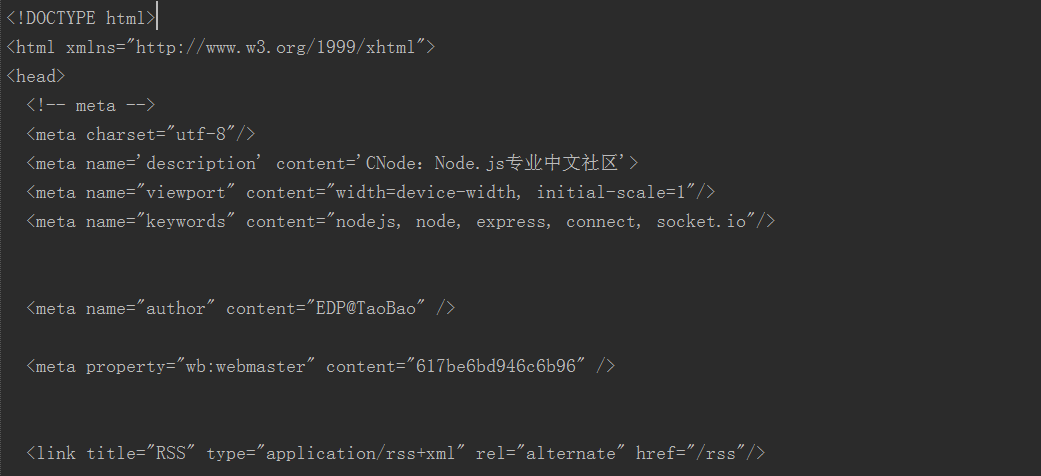

Use superagent to obtain source data

superagent is an HTTP library with an ajax-style API, and its usage resembles jQuery's. We use it to send a GET request and print the result in the callback function.

var express = require('express');

var url = require('url'); //Parse operation url

var superagent = require('superagent'); //Don't forget to npm install these three external dependencies

var cheerio = require('cheerio');

var eventproxy = require('eventproxy');

var targetUrl = 'https://cnodejs.org/';

superagent.get(targetUrl)

.end(function (err, res) {

console.log(res);

});

The res result is an object describing the response from the target URL. The page content itself is in its text property (a string).

Use cheerio to parse

cheerio works like a server-side jQuery. We first load the HTML with .load(), and then filter elements with CSS selectors.

var $ = cheerio.load(res.text);

//Filter data through CSS selector

$('#topic_list .topic_title').each(function (idx, element) {

console.log(element);

});

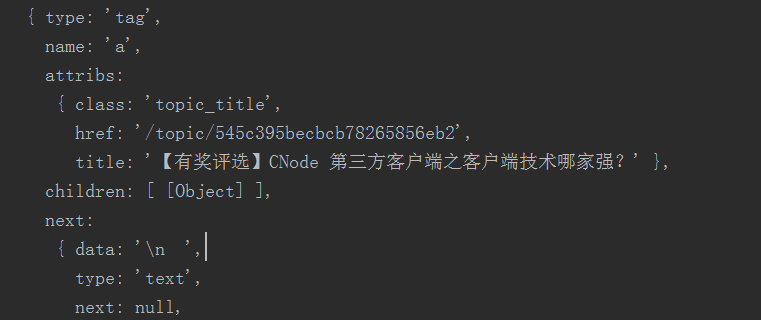

The result is an object. Calling .each(function (index, element)) iterates over the matched items and passes each underlying DOM element to the callback.

With var $element = $(element), console.log($element.attr('title')); outputs a title such as 广州 2014年12月06日 NodeParty 之 UC 场

Likewise, console.log($element.attr('href')); outputs a relative URL such as /topic/545c395becbcb78265856eb2. We then use the url.resolve() function of Node.js to build the complete URL.
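As a quick check of how the relative link is completed, here is a minimal sketch that uses only Node.js built-ins. The base URL and topic path are the ones quoted above. The second form, the WHATWG URL constructor, is not used in the tutorial and is shown only as an alternative, since the Node.js documentation marks url.resolve() as a legacy API.

```javascript
const url = require('url');

const base = 'https://cnodejs.org/';
const relative = '/topic/545c395becbcb78265856eb2';

// Legacy API, as used in this article
const viaResolve = url.resolve(base, relative);

// WHATWG URL API (alternative, not part of the original tutorial)
const viaURL = new URL(relative, base).href;

console.log(viaResolve);
console.log(viaURL);
```

Both calls should print the same absolute URL for this input.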

superagent.get(tUrl)

.end(function (err, res) {

if (err) {

return console.error(err);

}

var topicUrls = [];

var $ = cheerio.load(res.text);

//Get all links on the homepage

$('#topic_list .topic_title').each(function (idx, element) {

var $element = $(element);

var href = url.resolve(tUrl, $element.attr('href'));

console.log(href);

//topicUrls.push(href);

});

});

Use eventproxy to concurrently crawl the content of each topic

The tutorial shows two other ways to wait for several requests: deeply nested callbacks (serial) and a manual counter. eventproxy solves the same problem with events (parallel): once all the pages have been fetched, eventproxy has received every event message and automatically calls the handler function for you.
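To make the counter idea concrete, here is a minimal sketch of a "call me after N events" helper. createAfter is a hypothetical name invented for this illustration; it is a simplified stand-in for the idea, not eventproxy's actual implementation, and the URLs and HTML strings are placeholders.

```javascript
// Hypothetical helper: collects data until `times` events have arrived,
// then calls `callback` once with everything collected.
function createAfter(times, callback) {
  const results = [];
  return function emit(data) {
    results.push(data);
    if (results.length === times) {
      callback(results);
    }
  };
}

const sampleUrls = ['/topic/a', '/topic/b', '/topic/c']; // placeholder URLs
let collected = null;

const emit = createAfter(sampleUrls.length, function (pairs) {
  collected = pairs;
});

// In the real crawler each emit would happen inside a superagent callback;
// here it is called directly so the sketch runs without network access.
sampleUrls.forEach(function (topicUrl) {
  emit([topicUrl, '<html>placeholder body for ' + topicUrl + '</html>']);
});

console.log(collected.length);
```

The handler runs exactly once, after the third emit, which is the behaviour the crawler relies on when it waits for every topic page.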

//Step 1: Get an instance of eventproxy

var ep = new eventproxy();

//Step 2: Define the callback function that listens for the event.

//The after method waits until the event has been emitted a given number of times

//params: eventname (String) event name, times (Number) number of times to listen, callback callback function

ep.after('topic_html', topicUrls.length, function(topics){

// topics is an array containing the 40 pairs from the 40 calls to ep.emit('topic_html', pair)

// Use .map to turn each pair into a result object

topics = topics.map(function(topicPair){

//use cheerio

var topicUrl = topicPair[0];

var topicHtml = topicPair[1];

var $ = cheerio.load(topicHtml);

return ({

title: $('.topic_full_title').text().trim(),

href: topicUrl,

comment1: $('.reply_content').eq(0).text().trim()

});

});

//outcome

console.log('outcome:');

console.log(topics);

});

//Step 3: Emit the event message once each topic page has been fetched

topicUrls.forEach(function (topicUrl) {

superagent.get(topicUrl)

.end(function (err, res) {

console.log('fetch ' + topicUrl + ' successful');

ep.emit('topic_html', [topicUrl, res.text]);

});

});

The results are as follows

Extended Exercise (Challenge)

Get the commenter's username and points

In the source of the article page, find the class name on the commenting user's link: it is reply_author. Logging the first element with console.log($('.reply_author').get(0)) shows that everything we need is there.

First, let’s crawl an article and get everything we need at once.

var userHref = url.resolve(tUrl, $('.reply_author').get(0).attribs.href);

console.log(userHref);

console.log($('.reply_author').get(0).children[0].data);
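The property accesses in the snippet above (attribs.href and children[0].data) are easier to follow with the node shape in front of you. The sketch below uses a hand-written object as a stand-in for what $('.reply_author').get(0) returns; the username someuser, the exact set of fields, and the helper name readAuthor are assumptions made for illustration, not output captured from CNode.

```javascript
const url = require('url');

// Hand-written stand-in for the element node; 'someuser' is a made-up username.
const node = {
  type: 'tag',
  name: 'a',
  attribs: { class: 'reply_author', href: '/user/someuser' },
  children: [{ type: 'text', data: 'someuser' }]
};

// Hypothetical helper: reads the username and the absolute profile URL.
function readAuthor(authorNode, baseUrl) {
  return {
    name: authorNode.children[0].data,
    profileUrl: url.resolve(baseUrl, authorNode.attribs.href)
  };
}

const author = readAuthor(node, 'https://cnodejs.org/');
console.log(author);
```

With a real cheerio node the same two reads should apply, as long as the link's first child is its text node.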

We can fetch the points information from https://cnodejs.org/user/username, where username is the commenter's name.

$('.reply_author').each(function (idx, element) {

var $element = $(element);

console.log($element.attr('href'));

});

On the user information page, $('.big').text().trim() gives the points.

Use cheerio’s function .get(0) to get the first element.

var userHref = url.resolve(tUrl, $('.reply_author').get(0).attribs.href);

console.log(userHref);

This only captures a single article; the code still needs to be adapted to handle all 40.
