LidaRF: Delving into Lidar for Neural Radiance Field on Street Scenes (CVPR'24)

Photorealistic simulation plays a key role in applications such as autonomous driving, where advances in neural radiance fields (NeRFs) may enable better scalability by automatically creating digital 3D assets. However, reconstruction quality suffers on street scenes because camera motion along the road is largely collinear and images are sparsely sampled at high speed. At the same time, the application often requires rendering from camera views that deviate from the input views in order to accurately simulate behaviors such as lane changes. LidaRF presents several insights that make better use of lidar data to improve NeRF quality on street scenes. First, the framework learns a geometric scene representation from lidar data and fuses it with an implicit grid-based representation for radiance decoding, thereby supplying the stronger geometric information offered by an explicit point cloud. Second, a robust occlusion-aware depth supervision scheme is proposed, which allows lidar points densified by accumulation to be used for supervision. Third, augmented training views are generated from the lidar points for further improvement. Together, these insights yield substantially improved novel view synthesis in real driving scenes.

The contribution of LidaRF is mainly reflected in three aspects:

(i) Fusing lidar encoding with grid features to enhance the scene representation. Although lidar has been used as a natural source of depth supervision, incorporating lidar into the NeRF input offers great potential as a geometric inductive bias but is not straightforward to implement. To this end, a grid-based representation is adopted, and features learned from the point cloud are fused into the grid to inherit the advantages of an explicit point cloud representation. Motivated by the success of 3D perception frameworks, a 3D sparse convolutional network is used as an effective and efficient architecture for extracting geometric features from the local and global context of the lidar point cloud.

(ii) Robust occlusion-aware depth supervision. As in existing work, lidar is also used here as a source of depth supervision, but it is exploited more thoroughly. Because the sparsity of lidar points limits their effectiveness, especially in low-texture regions, denser depth maps are generated by aggregating lidar points across neighboring frames. However, depth maps obtained this way do not account for occlusion, which leads to erroneous depth supervision. A robust depth supervision scheme is therefore proposed in the manner of curriculum learning: depth is supervised gradually from the near field to the far field, and erroneous depth is progressively filtered out during NeRF training, so that depth is learned from lidar more effectively.

(iii) Lidar-based view augmentation. Furthermore, given the view sparsity and limited coverage in driving scenarios, lidar is used to densify the training views. That is, the accumulated lidar points are projected into new training views; note that these views may deviate somewhat from the driving trajectory. The views projected from lidar are added to the training dataset even though they do not account for occlusion. Applying the supervision scheme described above addresses the occlusion problem and thus improves performance. Although the method is also applicable to general scenes, this work focuses on evaluating street scenes and achieves significant improvements over existing techniques, both quantitatively and qualitatively.

LidaRF has also shown advantages in interesting applications that require greater deviation from the input view, significantly improving the quality of NeRF in challenging street scene applications.

Overview of the LidaRF framework

LidaRF takes a sampled 3D position and ray direction as input and outputs the corresponding density and color. It uses a sparse UNet to fuse hash encoding with lidar encoding. Furthermore, augmented training data are generated via lidar projection, and the geometry prediction is trained with the proposed robust depth supervision scheme.

Hybrid representation with lidar encoding

Lidar point clouds have strong potential for geometric guidance, which is extremely valuable for NeRF (neural radiance field). However, relying solely on lidar features for scene representation results in low-resolution rendering because lidar points are sparse, even after temporal accumulation. In addition, because lidar has a limited field of view (for example, it cannot capture building surfaces above a certain height), blank renderings occur in those regions. In contrast, our framework fuses lidar features with high-resolution spatial grid features, exploiting the advantages of both and learning them jointly to achieve high-quality, complete scene rendering.

Lidar feature extraction. This step extracts a geometric feature for each lidar point. Referring to Figure 2, the lidar point clouds of all frames in the sequence are first aggregated into a denser point cloud. The point cloud is then voxelized into a voxel grid, where the spatial positions of the points within each voxel are averaged to produce a 3-dimensional feature for that voxel. Inspired by the widespread success of 3D perception frameworks, scene geometry features are encoded with a 3D sparse UNet on the voxel grid, which allows learning from the global context of the scene geometry. The 3D sparse UNet takes the voxel grid and its 3-dimensional features as input and outputs neural volumetric features, with each occupied voxel carrying an n-dimensional feature.
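The voxelization step described above can be sketched in a few lines. This is a minimal illustration in plain Python, not the authors' implementation: the function name `voxelize_mean`, the voxel size, and the toy points are all assumptions, and the sparse UNet that consumes the result is omitted.

```python
from collections import defaultdict
from math import floor


def voxelize_mean(points, voxel_size):
    """Group 3D points into voxels and average the positions in each voxel.

    Returns a dict mapping an integer voxel index (i, j, k) to the mean
    (x, y, z) of the points that fall inside it. Only occupied voxels appear.
    """
    sums = defaultdict(lambda: [0.0, 0.0, 0.0, 0])
    for x, y, z in points:
        key = (floor(x / voxel_size), floor(y / voxel_size), floor(z / voxel_size))
        acc = sums[key]
        acc[0] += x
        acc[1] += y
        acc[2] += z
        acc[3] += 1  # number of points in this voxel
    return {k: (sx / n, sy / n, sz / n) for k, (sx, sy, sz, n) in sums.items()}


# Toy stand-in for points accumulated over several lidar frames.
points = [(0.1, 0.1, 0.0), (0.3, 0.1, 0.0), (1.2, 0.0, 0.0)]
voxels = voxelize_mean(points, voxel_size=0.5)
```

The averaged position per occupied voxel plays the role of the 3-dimensional input feature; a dictionary keeps the grid sparse, which mirrors why a sparse network is a natural fit for this data.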

Lidar feature query. For each sample point x along a ray to be rendered, its lidar features are queried if at least K lidar points lie within the search radius R; otherwise, its lidar features are set to null (i.e. all zeros). Specifically, the Fixed Radius Nearest Neighbor (FRNN) method is used to find the index set of the K nearest lidar points of x. Unlike the method in [9], which predetermines the ray sample points before training starts, our method performs the FRNN search on the fly, because the sample point distribution from the proposal network dynamically concentrates on surfaces as NeRF training converges. Following the Point-NeRF approach, a multilayer perceptron (MLP) F maps the lidar feature of each point into a neural scene description: for the i-th neighboring point of x, F takes the lidar feature of that point and its position relative to x as input and outputs the neural scene description. The lidar encoding ϕL of x is then obtained by aggregating the neural scene descriptions of its K neighboring points with standard inverse distance weighting.
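The query and aggregation logic can be illustrated with a brute-force sketch. Everything here is an assumption made for illustration: the function name `query_lidar_encoding`, the `describe` callable standing in for the MLP F, the explicit `out_dim` argument, and the linear scan used in place of a real FRNN implementation.

```python
from math import dist


def query_lidar_encoding(x, points, features, describe, out_dim, k, radius):
    """Return the lidar encoding of sample point x.

    `describe(feature, offset)` is a hypothetical stand-in for the MLP F.
    If fewer than k lidar points lie within `radius`, the encoding is all zeros.
    """
    candidates = []
    for i, p in enumerate(points):
        d = dist(x, p)
        if d <= radius:
            candidates.append((d, i))
    if len(candidates) < k:
        return [0.0] * out_dim

    nearest = sorted(candidates)[:k]  # k closest points inside the radius
    weights = [1.0 / (d + 1e-8) for d, _ in nearest]  # inverse distance weights
    descriptions = [
        describe(features[i], [a - b for a, b in zip(points[i], x)])
        for _, i in nearest
    ]
    total = sum(weights)
    return [
        sum(w * desc[c] for w, desc in zip(weights, descriptions)) / total
        for c in range(out_dim)
    ]


# Toy data: three lidar points with 1-dimensional features.
points = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (5.0, 0.0, 0.0)]
features = [[1.0], [3.0], [9.0]]
identity = lambda feature, offset: list(feature)  # ignores the offset

near = query_lidar_encoding((0.25, 0.0, 0.0), points, features, identity, 1, k=2, radius=1.0)
far = query_lidar_encoding((10.0, 0.0, 0.0), points, features, identity, 1, k=2, radius=1.0)
```

Because the search runs inside the function rather than being precomputed, it can be called with whatever sample points the current training step produces, which is the property the text contrasts with [9].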

Feature fusion for radiance decoding. The lidar encoding ϕL is concatenated with the hash encoding ϕh, and a multilayer perceptron Fα is applied to predict the density α and the density embedding h of each sample. Finally, another multilayer perceptron Fc predicts the corresponding color c from the spherical harmonics encoding SH of the viewing direction d and the density embedding h.

Robust occlusion-aware depth supervision. A curriculum training strategy is designed so that the model is initially trained with closer, more reliable depth data that is less susceptible to occlusion. As training progresses, the model gradually incorporates more distant depth data. At the same time, the model is able to discard depth supervision that is unusually far from its own predictions.
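One way to picture this curriculum is as a per-sample mask that decides which lidar depths contribute to the depth loss at a given training step. The sketch below is only an assumption about how such a schedule could look: the linear schedules, the function name `depth_supervision_mask`, and all threshold values are hypothetical and are not taken from the paper.

```python
def depth_supervision_mask(lidar_depths, predicted_depths, step, total_steps,
                           near=10.0, far=80.0, loose_tol=20.0, tight_tol=2.0):
    """Select which lidar depths supervise the model at this training step.

    Hypothetical schedule: the maximum supervised depth grows linearly from
    `near` to `far`, while the allowed gap between lidar depth and predicted
    depth shrinks linearly from `loose_tol` to `tight_tol`.
    """
    progress = min(max(step / total_steps, 0.0), 1.0)
    max_depth = near + (far - near) * progress        # near field first, then far
    tolerance = loose_tol + (tight_tol - loose_tol) * progress  # stricter over time
    return [
        d <= max_depth and abs(d - p) <= tolerance
        for d, p in zip(lidar_depths, predicted_depths)
    ]


lidar_depths = [5.0, 40.0, 60.0]       # the 40 m value mimics an occluded point
predicted_depths = [5.5, 20.0, 61.0]

early = depth_supervision_mask(lidar_depths, predicted_depths, step=0, total_steps=100)
late = depth_supervision_mask(lidar_depths, predicted_depths, step=100, total_steps=100)
```

In this toy run only the nearest sample is supervised at the start, while at the end the far sample is included and the sample that disagrees strongly with the prediction is dropped as a likely occlusion error.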

Lidar-based view augmentation. Recall that, because of the forward motion of the vehicle camera, the training images are sparse and have limited field-of-view coverage, which poses challenges for NeRF reconstruction, especially when the novel view deviates from the vehicle trajectory. Here, we propose to leverage lidar to augment the training data. First, the point cloud of each lidar frame is colored by projecting it onto its synchronized camera and interpolating the RGB values. The colored point clouds are then accumulated and projected onto a set of synthetic augmented views, producing the synthetic images and depth maps shown in Figure 2.
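The projection of a colored point cloud into a synthetic view can be sketched with a simple pinhole camera. This is an illustrative simplification under stated assumptions: the camera looks along +z with no rotation, the principal point is the image center, the nearest point wins when several fall on the same pixel, and the name `project_points` and all numbers are hypothetical.

```python
from math import floor


def project_points(points, colors, cam_center, focal, width, height):
    """Project colored 3D points into a pinhole camera looking along +z.

    Returns (image, depth): sparse dicts mapping a pixel (u, v) to an RGB
    tuple and to a depth value. Pixels hit by no point are simply absent.
    """
    image, depth = {}, {}
    cx, cy = width / 2.0, height / 2.0
    for (x, y, z), rgb in zip(points, colors):
        # Express the point relative to the (possibly shifted) camera center.
        xc, yc, zc = x - cam_center[0], y - cam_center[1], z - cam_center[2]
        if zc <= 0.0:
            continue  # point is behind the camera
        u = floor(focal * xc / zc + cx)
        v = floor(focal * yc / zc + cy)
        if not (0 <= u < width and 0 <= v < height):
            continue  # point falls outside the image
        if (u, v) not in depth or zc < depth[(u, v)]:
            depth[(u, v)] = zc  # keep the nearest point per pixel
            image[(u, v)] = rgb
    return image, depth


points = [(1.0, 0.0, 2.0), (1.0, 0.0, 4.0), (1.0, 0.0, -1.0), (10.0, 0.0, 1.0)]
colors = [(255, 0, 0), (0, 0, 255), (0, 255, 0), (255, 255, 0)]

# A camera shifted 1 m sideways, as a view off the driving trajectory might be.
image, depth = project_points(points, colors, cam_center=(1.0, 0.0, 0.0),
                              focal=2.0, width=4, height=4)
```

Keeping the nearest point per pixel does not make the result occlusion-free: because the point cloud is sparse, points on hidden surfaces can still land on pixels that no foreground point covers, which is why the robust depth supervision is applied to these views as well.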

Experimental comparative analysis


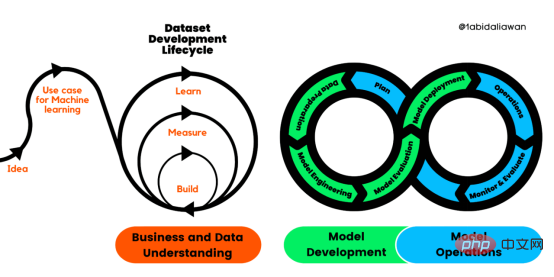

解读CRISP-ML(Q):机器学习生命周期流程Apr 08, 2023 pm 01:21 PM

解读CRISP-ML(Q):机器学习生命周期流程Apr 08, 2023 pm 01:21 PM译者 | 布加迪审校 | 孙淑娟目前,没有用于构建和管理机器学习(ML)应用程序的标准实践。机器学习项目组织得不好,缺乏可重复性,而且从长远来看容易彻底失败。因此,我们需要一套流程来帮助自己在整个机器学习生命周期中保持质量、可持续性、稳健性和成本管理。图1. 机器学习开发生命周期流程使用质量保证方法开发机器学习应用程序的跨行业标准流程(CRISP-ML(Q))是CRISP-DM的升级版,以确保机器学习产品的质量。CRISP-ML(Q)有六个单独的阶段:1. 业务和数据理解2. 数据准备3. 模型

人工智能的环境成本和承诺Apr 08, 2023 pm 04:31 PM

人工智能的环境成本和承诺Apr 08, 2023 pm 04:31 PM人工智能(AI)在流行文化和政治分析中经常以两种极端的形式出现。它要么代表着人类智慧与科技实力相结合的未来主义乌托邦的关键,要么是迈向反乌托邦式机器崛起的第一步。学者、企业家、甚至活动家在应用人工智能应对气候变化时都采用了同样的二元思维。科技行业对人工智能在创建一个新的技术乌托邦中所扮演的角色的单一关注,掩盖了人工智能可能加剧环境退化的方式,通常是直接伤害边缘人群的方式。为了在应对气候变化的过程中充分利用人工智能技术,同时承认其大量消耗能源,引领人工智能潮流的科技公司需要探索人工智能对环境影响的

找不到中文语音预训练模型?中文版 Wav2vec 2.0和HuBERT来了Apr 08, 2023 pm 06:21 PM

找不到中文语音预训练模型?中文版 Wav2vec 2.0和HuBERT来了Apr 08, 2023 pm 06:21 PMWav2vec 2.0 [1],HuBERT [2] 和 WavLM [3] 等语音预训练模型,通过在多达上万小时的无标注语音数据(如 Libri-light )上的自监督学习,显著提升了自动语音识别(Automatic Speech Recognition, ASR),语音合成(Text-to-speech, TTS)和语音转换(Voice Conversation,VC)等语音下游任务的性能。然而这些模型都没有公开的中文版本,不便于应用在中文语音研究场景。 WenetSpeech [4] 是

条形统计图用什么呈现数据Jan 20, 2021 pm 03:31 PM

条形统计图用什么呈现数据Jan 20, 2021 pm 03:31 PM条形统计图用“直条”呈现数据。条形统计图是用一个单位长度表示一定的数量,根据数量的多少画成长短不同的直条,然后把这些直条按一定的顺序排列起来;从条形统计图中很容易看出各种数量的多少。条形统计图分为:单式条形统计图和复式条形统计图,前者只表示1个项目的数据,后者可以同时表示多个项目的数据。

自动驾驶车道线检测分类的虚拟-真实域适应方法Apr 08, 2023 pm 02:31 PM

自动驾驶车道线检测分类的虚拟-真实域适应方法Apr 08, 2023 pm 02:31 PMarXiv论文“Sim-to-Real Domain Adaptation for Lane Detection and Classification in Autonomous Driving“,2022年5月,加拿大滑铁卢大学的工作。虽然自主驾驶的监督检测和分类框架需要大型标注数据集,但光照真实模拟环境生成的合成数据推动的无监督域适应(UDA,Unsupervised Domain Adaptation)方法则是低成本、耗时更少的解决方案。本文提出对抗性鉴别和生成(adversarial d

数据通信中的信道传输速率单位是bps,它表示什么Jan 18, 2021 pm 02:58 PM

数据通信中的信道传输速率单位是bps,它表示什么Jan 18, 2021 pm 02:58 PM数据通信中的信道传输速率单位是bps,它表示“位/秒”或“比特/秒”,即数据传输速率在数值上等于每秒钟传输构成数据代码的二进制比特数,也称“比特率”。比特率表示单位时间内传送比特的数目,用于衡量数字信息的传送速度;根据每帧图像存储时所占的比特数和传输比特率,可以计算数字图像信息传输的速度。

数据分析方法有哪几种Dec 15, 2020 am 09:48 AM

数据分析方法有哪几种Dec 15, 2020 am 09:48 AM数据分析方法有4种,分别是:1、趋势分析,趋势分析一般用于核心指标的长期跟踪;2、象限分析,可依据数据的不同,将各个比较主体划分到四个象限中;3、对比分析,分为横向对比和纵向对比;4、交叉分析,主要作用就是从多个维度细分数据。

15年软件架构师经验总结:在ML领域,初学者踩过的五个坑Apr 11, 2023 pm 07:31 PM

15年软件架构师经验总结:在ML领域,初学者踩过的五个坑Apr 11, 2023 pm 07:31 PM数据科学和机器学习正变得越来越流行,这个领域的人数每天都在增长。这意味着有很多数据科学家在构建他们的第一个机器学习模型时没有丰富的经验,而这也是错误可能会发生的地方。近日,软件架构师、数据科学家、Kaggle 大师 Agnis Liukis 撰写了一篇文章,他在文中谈了谈在机器学习中最常见的一些初学者错误的解决方案,以确保初学者了解并避免它们。Agnis Liukis 拥有超过 15 年的软件架构和开发经验,他熟练掌握 Java、JavaScript、Spring Boot、React.JS

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

SAP NetWeaver Server Adapter for Eclipse

Integrate Eclipse with SAP NetWeaver application server.

EditPlus Chinese cracked version

Small size, syntax highlighting, does not support code prompt function

Dreamweaver Mac version

Visual web development tools

Notepad++7.3.1

Easy-to-use and free code editor

VSCode Windows 64-bit Download

A free and powerful IDE editor launched by Microsoft