
Alibaba Cloud launches its self-developed EMO model on the Tongyi App, turning a photo plus audio into a singing video

- Reposted by 王林

- 2024-04-26 08:00:38

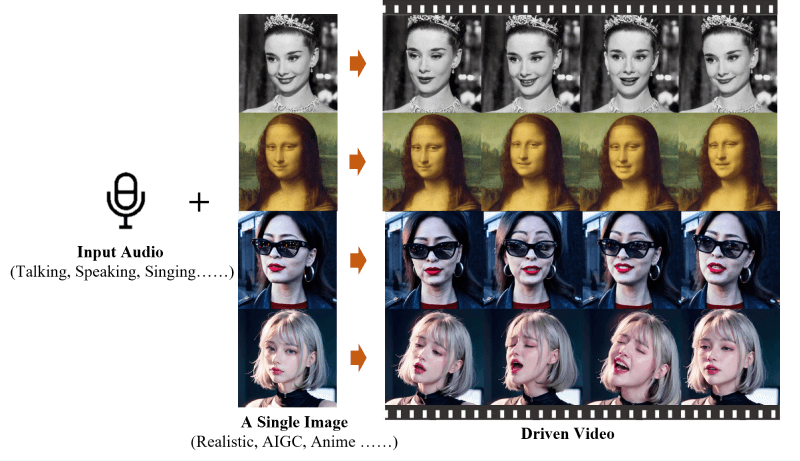

As this site reported on April 25, EMO (Emote Portrait Alive) is a framework developed by the Institute for Intelligent Computing at Alibaba Group. It is an audio-driven AI portrait video generation system: given a single reference image and a voice audio clip, it generates videos with expressive facial expressions and a variety of head poses.

Alibaba Cloud announced today that EMO, the AI model developed by its lab, is now officially available in the Tongyi App and is free for all users. With this feature, users pick a template from categories such as songs, trending memes, and reaction stickers, then upload a portrait photo, and EMO synthesizes a singing video from it.

According to Alibaba Cloud, the first batch in the Tongyi App includes more than 80 EMO templates, covering popular songs such as "Up Spring Mountain" and "Wild Wolf Disco" as well as popular Internet memes such as "Bobo Chicken" and "Back Hand Digging". Custom audio is not supported yet.

EMO official links:

Official project homepage: https://humanaigc.github.io/emote-portrait-alive/

arXiv Research Paper: https://arxiv.org/abs/2402.17485

GitHub: https://github.com/HumanAIGC/EMO (the model and source code have not been released yet; open-sourcing is planned)

- Audio-driven video generation: EMO generates videos directly from input audio (such as speech or songs) without relying on pre-recorded video clips or 3D facial models.

- Highly expressive and realistic: EMO-generated videos capture and reproduce the nuances of human facial expressions, including subtle micro-expressions, along with head movements that follow the rhythm of the audio.

- Seamless frame transitions: EMO keeps transitions between video frames natural and smooth, avoiding facial distortion or jitter between frames and improving the overall quality of the video.

- Identity preservation: Through the FrameEncoding module, EMO keeps the character's identity consistent throughout video generation, so the character's appearance matches the input reference image.

- Stable control mechanisms: EMO uses stability controls such as a speed controller and a face region controller to keep generation stable and prevent the output video from breaking down.

- Flexible video duration: EMO can generate videos of any length, matching the duration of the input audio, which gives users room for creative flexibility.

- Cross-language and cross-style: EMO's training dataset covers a variety of languages and styles, including Chinese and English as well as realistic, anime, and 3D styles, which lets EMO adapt to different cultures and artistic styles.
