
How to use Transformers to effectively correlate lidar, millimeter-wave radar, and visual features?

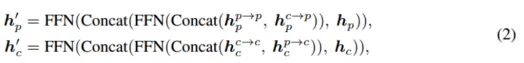

- PHPz (reposted)

- 2024-04-19 16:01:24

The author’s personal understanding

One of the basic tasks of autonomous driving is 3D object detection, and many current methods are based on multi-sensor fusion. Why is multi-sensor fusion needed? Whether it is lidar-camera fusion or millimeter-wave radar-camera fusion, the main purpose is to exploit the complementarity between point clouds and images to improve detection accuracy. As the Transformer architecture has been applied more and more widely in computer vision, attention-based methods have improved the accuracy of fusion across sensors. The two papers shared here build on this architecture and propose novel fusion methods that make better use of the useful information in each modality and achieve better fusion.

TransFusion:

Main Contributions

Lidar and cameras are two important sensors for 3D object detection in autonomous driving, but lidar-camera fusion suffers from low detection accuracy under poor image conditions. Point-wise fusion methods combine lidar and camera through hard association, which causes two problems: a) simply concatenating point cloud and image features severely degrades detection performance when the image features are of low quality; b) finding hard correspondences between sparse point clouds and images wastes high-quality image features and is difficult to align. To solve this problem, a soft association method is proposed. It treats the lidar and the camera as two independent detectors that cooperate with each other, taking full advantage of both. First, a conventional object detector detects objects and generates bounding boxes; the bounding boxes are then matched against the point cloud to score which bounding box each point is associated with. Finally, the image features corresponding to the bounding boxes are fused with the features generated from the point cloud. This effectively avoids the drop in detection accuracy caused by poor image conditions.
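To make the hard-association problem concrete, the sketch below shows point-wise fusion of the kind criticized above: each lidar point is projected into the image with a 3x4 projection matrix and picks up exactly one pixel feature, which is concatenated to its lidar feature. It is a minimal illustration in plain Python, not code from the paper; the function names, the nearest-pixel lookup, and the zero padding for points that miss the image are assumptions.

```python
from typing import List, Optional, Sequence, Tuple

Matrix = List[List[float]]


def project_point(P: Matrix, xyz: Sequence[float]) -> Optional[Tuple[float, float]]:
    """Project a lidar point with a 3x4 projection matrix P (intrinsics @ lidar-to-camera).

    Returns pixel coordinates (u, v), or None if the point is not in front of the camera.
    """
    hom = [xyz[0], xyz[1], xyz[2], 1.0]
    u, v, w = (sum(P[r][c] * hom[c] for c in range(4)) for r in range(3))
    if w <= 0:
        return None
    return u / w, v / w


def hard_associate(points, point_feats, image_feats, P):
    """Point-wise 'hard' fusion (hypothetical helper): each point takes exactly one image
    feature (nearest pixel) and concatenates it to its lidar feature; misses get zeros."""
    height, width = len(image_feats), len(image_feats[0])
    channels = len(image_feats[0][0])
    fused = []
    for xyz, lidar_feat in zip(points, point_feats):
        uv = project_point(P, xyz)
        img_feat = [0.0] * channels
        if uv is not None:
            col, row = int(round(uv[0])), int(round(uv[1]))
            if 0 <= row < height and 0 <= col < width:
                img_feat = list(image_feats[row][col])
        fused.append(list(lidar_feat) + img_feat)
    return fused


# Toy example: identity-like projection, 2x2 image with 1-channel features.
P = [[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0], [0.0, 0.0, 1.0, 0.0]]
image_feats = [[[0.0], [1.0]], [[2.0], [3.0]]]
points = [(1.0, 1.0, 1.0), (0.0, 0.0, -1.0)]
print(hard_associate(points, [[9.0], [8.0]], image_feats, P))  # [[9.0, 3.0], [8.0, 0.0]]
```

Because each point fetches a single pixel, an object hit by only a few points receives only a few image features, and any calibration error shifts every lookup; these are the weaknesses the soft association is meant to avoid.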

This paper introduces TransFusion, a lidar-camera fusion framework, to solve the association problem between the two sensors. The main contributions are as follows:

- Proposes a Transformer-based lidar-camera fusion model for 3D detection that shows excellent robustness to poor image quality and sensor misalignment;

- Introduces several simple yet effective adjustments to the object queries that improve the quality of the initial bounding box predictions used for image fusion, and designs an image-guided query initialization module to handle objects that are hard to detect in point clouds;

- Achieves state-of-the-art 3D detection performance on nuScenes, and extends the model to 3D tracking with good results.

Detailed module explanation

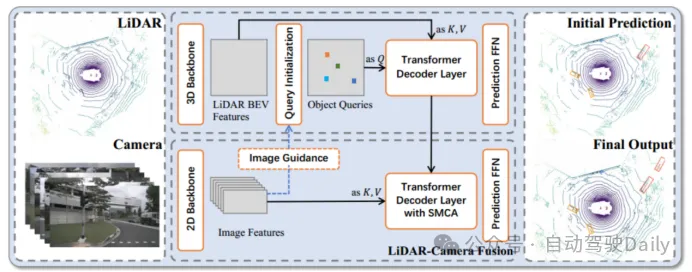

Figure 1 The overall framework of TransFusion

To address the image-condition problem above and the differences and association between sensors, a Transformer-based fusion framework, TransFusion, is proposed. The model uses standard 3D and 2D backbone networks to extract LiDAR BEV features and image features, followed by two Transformer decoder layers: the first decoder layer uses the sparse point cloud to generate initial bounding boxes; the second adaptively fuses the object queries from the first layer with image features to obtain better detection results. A spatially modulated cross-attention (SMCA) mechanism and an image-guided query initialization strategy are also introduced to improve detection accuracy. With this design, the model makes better use of image features and achieves higher detection accuracy.
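The two-stage decoder flow can be sketched with plain single-head cross-attention: object queries first attend to lidar BEV features, and the refined queries then attend to image features. This is a heavily simplified, hypothetical sketch (no multi-head attention, FFN, normalization, positional encoding, or box heads), meant only to show the order of information flow, not the paper's implementation.

```python
import math
from typing import List

Feats = List[List[float]]


def cross_attention(queries: Feats, keys: Feats, values: Feats) -> Feats:
    """Single-head scaled dot-product cross-attention: each query attends to all keys."""
    d = len(keys[0])
    out = []
    for q in queries:
        logits = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in keys]
        m = max(logits)  # subtract the max for numerical stability
        weights = [math.exp(x - m) for x in logits]
        total = sum(weights)
        out.append([sum(w * v[c] for w, v in zip(weights, values)) / total
                    for c in range(len(values[0]))])
    return out


def residual(x: Feats, delta: Feats) -> Feats:
    return [[a + b for a, b in zip(rx, rd)] for rx, rd in zip(x, delta)]


def two_stage_decoder(queries: Feats, lidar_bev: Feats, image: Feats):
    """Stage 1: queries read lidar BEV features (initial boxes would be predicted here).
    Stage 2: the refined queries read image features (lidar-camera fusion)."""
    stage1 = residual(queries, cross_attention(queries, lidar_bev, lidar_bev))
    stage2 = residual(stage1, cross_attention(stage1, image, image))
    return stage1, stage2


s1, s2 = two_stage_decoder([[0.0, 0.0]], lidar_bev=[[1.0, 2.0]], image=[[3.0, 4.0]])
print(s1, s2)  # [[1.0, 2.0]] [[4.0, 6.0]]
```

Keeping the image step in a separate, later stage means a poor image can only refine queries that the lidar stage has already placed, rather than corrupt the initial proposals.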

Query Initialization

LiDAR-Camera Fusion

If an object contains only a few lidar points, hard association can retrieve only the same small number of image features, wasting high-quality image semantics. This paper therefore keeps all image features and fuses them adaptively with the cross-attention mechanism of the Transformer, so that the network can decide where and what information to take from the image. To alleviate the spatial misalignment between LiDAR BEV features and image features, which come from different sensors, a spatially modulated cross-attention module (SMCA) is designed: it weights the cross-attention with a 2D circular Gaussian mask centered on the 2D projection of each query's center.

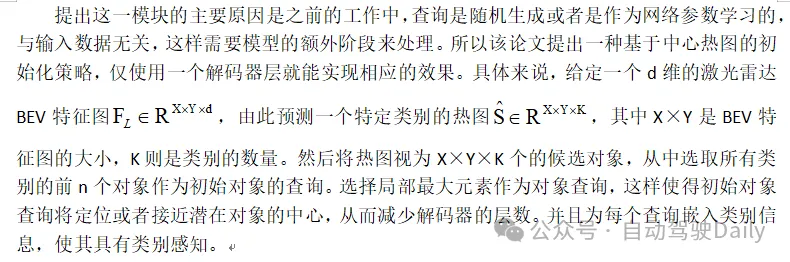
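The Gaussian weighting in SMCA can be sketched as follows. This sketch assumes the mask takes the form exp(-d²/(σr²)), where d is the distance from a feature-map cell to the query's projected 2D center and r is a radius derived from the projected box size; the function names, and the choice to renormalize the masked weights, are illustrative assumptions rather than the paper's exact code.

```python
import math
from typing import List, Tuple


def gaussian_mask(center: Tuple[float, float], radius: float, sigma: float,
                  height: int, width: int) -> List[List[float]]:
    """Circular 2D Gaussian around a query's projected 2D center (cx, cy), in feature-map cells."""
    cx, cy = center
    return [[math.exp(-((col - cx) ** 2 + (row - cy) ** 2) / (sigma * radius ** 2))
             for col in range(width)] for row in range(height)]


def smca_attend(query, image_feats, center, radius, sigma=1.0):
    """Cross-attention of one query over an H x W x C image feature map, modulated by the mask.

    Softmax weights are multiplied by the mask and renormalized (equivalent to adding
    log(mask) to the logits). Image features serve as both keys and values here.
    """
    height, width = len(image_feats), len(image_feats[0])
    channels = len(query)
    mask = gaussian_mask(center, radius, sigma, height, width)
    cells = [(r, c) for r in range(height) for c in range(width)]
    logits = [sum(q * k for q, k in zip(query, image_feats[r][c])) / math.sqrt(channels)
              for r, c in cells]
    m = max(logits)
    weights = [math.exp(x - m) * mask[r][c] for x, (r, c) in zip(logits, cells)]
    total = sum(weights)
    weights = [w / total for w in weights]
    out = [sum(w * image_feats[r][c][k] for w, (r, c) in zip(weights, cells))
           for k in range(channels)]
    return out, weights


# 5x5 map with identical features: attention then depends only on the Gaussian mask.
feats = [[[1.0] for _ in range(5)] for _ in range(5)]
out, weights = smca_attend([1.0], feats, center=(2.0, 2.0), radius=1.0)
print(weights.index(max(weights)))  # 12, i.e. cell (row 2, col 2)
```

The mask keeps each query looking near where its object should appear in the image, which limits how far a misaligned or irrelevant region can pull the attention.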

Image-Guided Query Initialization

This module uses both lidar and image information to initialize the object queries. Image features and lidar BEV features are fed into a cross-attention network, projected onto the BEV plane, and turned into fused BEV features. As shown in Figure 2, the multi-view image features are first collapsed along the height axis and used as the keys and values of the cross-attention network, while the lidar BEV features serve as queries. The resulting fused BEV features are used for heatmap prediction, and this heatmap is averaged with the lidar-only heatmap to obtain the final heatmap Ŝ, which is used to select and initialize the object queries. This enables the model to detect objects that are hard to detect in lidar point clouds.
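A minimal sketch of the last steps of image-guided query initialization is shown below: collapsing image features along the height axis, averaging the lidar-only and fused heatmaps, and picking query positions from the final heatmap. The cross-attention that produces the fused BEV heatmap is omitted (the fused heatmap is given as input), a single class is used, and both the max-pooling along height and the 3x3 local-maximum selection are assumptions made for illustration.

```python
from typing import List, Tuple

Grid = List[List[float]]


def collapse_height(feat: List[List[List[float]]]) -> List[List[float]]:
    """Collapse an H x W x C image feature map along the height axis (max over rows) -> W x C."""
    height, width, channels = len(feat), len(feat[0]), len(feat[0][0])
    return [[max(feat[r][c][k] for r in range(height)) for k in range(channels)]
            for c in range(width)]


def average_heatmaps(lidar_hm: Grid, fused_hm: Grid) -> Grid:
    """Final heatmap = mean of the lidar-only heatmap and the image-fused heatmap."""
    return [[(a + b) / 2 for a, b in zip(ra, rb)] for ra, rb in zip(lidar_hm, fused_hm)]


def select_queries(heatmap: Grid, k: int) -> List[Tuple[int, int, float]]:
    """Return the top-k local maxima (>= all 8 neighbours) as (row, col, score) query seeds."""
    h, w = len(heatmap), len(heatmap[0])
    peaks = []
    for r in range(h):
        for c in range(w):
            v = heatmap[r][c]
            neighbours = [heatmap[rr][cc]
                          for rr in range(max(0, r - 1), min(h, r + 2))
                          for cc in range(max(0, c - 1), min(w, c + 2))
                          if (rr, cc) != (r, c)]
            if all(v >= n for n in neighbours):
                peaks.append((r, c, v))
    peaks.sort(key=lambda p: p[2], reverse=True)
    return peaks[:k]


lidar_hm = [[0.9, 0.1, 0.0], [0.1, 0.0, 0.0], [0.0, 0.0, 0.0]]
fused_hm = [[0.9, 0.1, 0.0], [0.1, 0.0, 0.1], [0.0, 0.1, 0.8]]  # camera sees an object at (2, 2)
print(select_queries(average_heatmaps(lidar_hm, fused_hm), k=2))  # [(0, 0, 0.9), (2, 2, 0.4)]
```

In the toy example the object at (2, 2) is invisible in the lidar-only heatmap but still becomes a query seed after averaging, which is the behavior the module is designed for.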

Experiments

Datasets and Metrics

The nuScenes dataset is a large-scale autonomous driving dataset for 3D detection and tracking, containing 700, 150, and 150 scenes for training, validation, and testing, respectively. Each frame contains one lidar point cloud and six calibrated images covering a 360-degree horizontal field of view. For 3D detection, the main metrics are mean average precision (mAP) and the nuScenes detection score (NDS). mAP is defined using BEV center distance rather than 3D IoU, and the final mAP is obtained by averaging over distance thresholds of 0.5 m, 1 m, 2 m, and 4 m across 10 categories. NDS is a composite of mAP and other true-positive metrics covering translation, scale, orientation, velocity, and attribute errors.
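The way the two nuScenes scores combine can be written out as a short sketch. The NDS formula used here is the standard nuScenes definition (five true-positive error metrics, each clipped at 1, combined with mAP weighted by 5); computing AP itself, i.e. matching predictions to ground truth by BEV center distance, is omitted, and the function names and input format are assumptions.

```python
from typing import Dict, Sequence

DIST_THRESHOLDS_M = (0.5, 1.0, 2.0, 4.0)  # BEV center-distance matching thresholds
TP_METRICS = ("mATE", "mASE", "mAOE", "mAVE", "mAAE")  # translation, scale, orientation, velocity, attribute


def nuscenes_map(ap: Dict[str, Sequence[float]]) -> float:
    """mAP = mean of AP over all classes and all four distance thresholds."""
    for cls, values in ap.items():
        if len(values) != len(DIST_THRESHOLDS_M):
            raise ValueError(f"{cls}: expected one AP per distance threshold")
    flat = [v for values in ap.values() for v in values]
    return sum(flat) / len(flat)


def nds(mean_ap: float, tp_errors: Dict[str, float]) -> float:
    """NDS = (5 * mAP + sum over the five TP errors of (1 - min(1, error))) / 10."""
    tp_score = sum(1.0 - min(1.0, tp_errors[name]) for name in TP_METRICS)
    return (5.0 * mean_ap + tp_score) / 10.0


print(nds(0.5, {name: 0.0 for name in TP_METRICS}))  # 0.75
```

Because mAP carries half of the total weight, a method can raise NDS either by detecting more objects or by estimating box attributes such as velocity and orientation more accurately.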

The Waymo dataset includes 798 scenes for training and 202 scenes for validation. The official metrics are mAP and mAPH (mAP weighted by heading accuracy), both defined with 3D IoU thresholds of 0.7 for vehicles and 0.5 for pedestrians and cyclists. The metrics are further split into two difficulty levels: LEVEL1 for bounding boxes with more than 5 lidar points and LEVEL2 for bounding boxes with at least one lidar point. Unlike the 360-degree camera coverage of nuScenes, Waymo's cameras cover only about 250 degrees horizontally.
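The Waymo difficulty split and the heading weighting behind mAPH can be sketched as below. The level assignment follows the definition given in the paragraph above; the heading-accuracy weight, 1 - min(|Δθ|, 2π - |Δθ|)/π, is the weight the Waymo metric applies to each true positive. The function names are assumptions, and AP computation itself is omitted.

```python
import math
from typing import Set

IOU_THRESHOLD = {"vehicle": 0.7, "pedestrian": 0.5, "cyclist": 0.5}


def evaluated_levels(num_lidar_points: int) -> Set[int]:
    """Difficulty levels a ground-truth box counts toward, per the definition above:
    LEVEL1 needs more than 5 lidar points, LEVEL2 needs at least one."""
    levels = set()
    if num_lidar_points >= 1:
        levels.add(2)
    if num_lidar_points > 5:
        levels.add(1)
    return levels


def heading_accuracy(pred_yaw: float, gt_yaw: float) -> float:
    """mAPH weight for a true positive: 1 - (wrapped heading error) / pi, in [0, 1]."""
    diff = abs(pred_yaw - gt_yaw) % (2 * math.pi)
    return 1.0 - min(diff, 2 * math.pi - diff) / math.pi


print(evaluated_levels(3), evaluated_levels(10))  # {2} {1, 2}
print(heading_accuracy(0.0, math.pi))             # 0.0
```

A box predicted facing exactly backwards still counts toward mAP but contributes nothing to mAPH, which is why the two numbers can differ noticeably.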

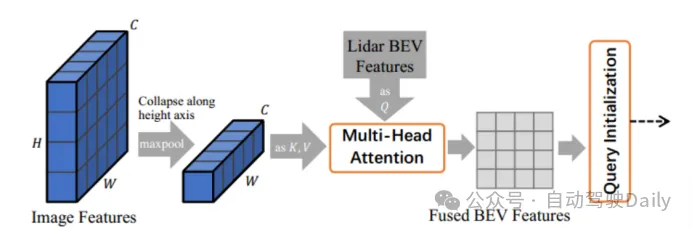

Training. On the nuScenes dataset, DLA34 is used as the 2D image backbone with its weights frozen, and the image size is set to 448×800; VoxelNet is used as the 3D lidar backbone. Training has two stages: the first stage uses only LiDAR data as input and trains the 3D backbone together with the first decoder layer and the FFN (feed-forward network) for 20 epochs to produce initial 3D bounding box predictions; the second stage trains the LiDAR-camera fusion and image-guided query initialization modules for 6 epochs. In Figure 3, the left image shows the transformer decoder layer used for initial bounding box prediction, and the right image shows the transformer decoder layer used for LiDAR-camera fusion.

Figure 3 Decoder layer design

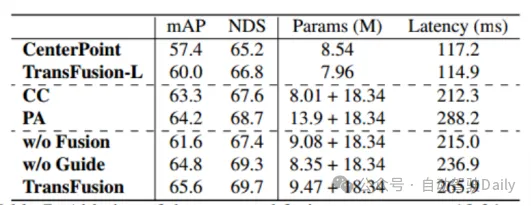

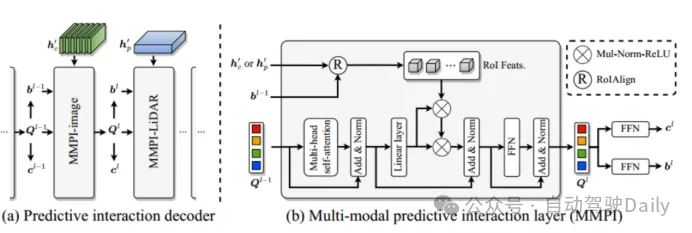

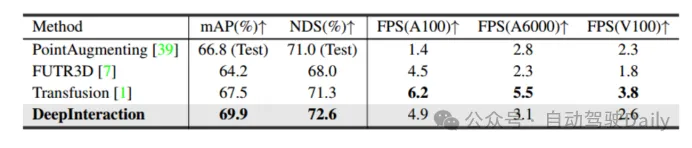
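The two-stage nuScenes training recipe described above can be summarized as a small configuration sketch. The module names are hypothetical labels for the components in the text, not identifiers from the TransFusion code base; only the epoch counts, the frozen DLA34 image backbone, and the 448×800 input size come from the paragraph.

```python
from dataclasses import dataclass
from typing import Tuple

IMAGE_SIZE = (448, 800)  # input resolution reported for nuScenes


@dataclass(frozen=True)
class Stage:
    name: str
    epochs: int
    inputs: Tuple[str, ...]
    trained_modules: Tuple[str, ...]


# Module names are hypothetical labels for the components described in the text.
SCHEDULE = (
    Stage("lidar_only", 20, ("lidar",),
          ("voxelnet_3d_backbone", "decoder_layer_1", "ffn")),
    Stage("lidar_camera_fusion", 6, ("lidar", "camera"),
          ("lidar_camera_fusion", "image_guided_query_init")),
)
FROZEN_MODULES = ("dla34_2d_backbone",)  # image backbone weights stay frozen


def total_epochs(schedule: Tuple[Stage, ...]) -> int:
    return sum(stage.epochs for stage in schedule)


# Sanity check: no stage trains a module that is supposed to stay frozen.
for stage in SCHEDULE:
    assert not set(stage.trained_modules) & set(FROZEN_MODULES)
print(total_epochs(SCHEDULE))  # 26
```

Splitting training this way lets the lidar branch converge on its own before the image modules are added, so the fusion stage starts from reasonable initial boxes.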

Comparison with state-of-the-art methods

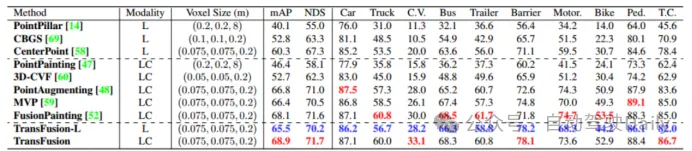

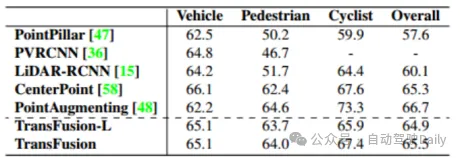

First, TransFusion is compared with other SOTA methods on 3D object detection. Table 1 shows the results on the nuScenes test set: the method achieved the best performance at the time (68.9% mAP, 71.7% NDS). TransFusion-L, which uses only lidar, clearly outperforms previous single-modality detectors and even surpasses some multi-modal methods, mainly thanks to the new association mechanism and query initialization strategy. Table 2 shows the LEVEL 2 mAPH results on the Waymo validation set.

Table 1 Comparison with SOTA methods on the nuScenes test set

Table 2 LEVEL 2 mAPH on the Waymo validation set

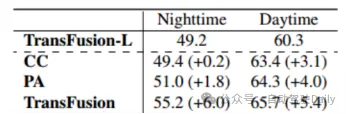

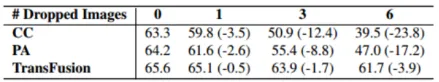

Robustness against harsh image conditions

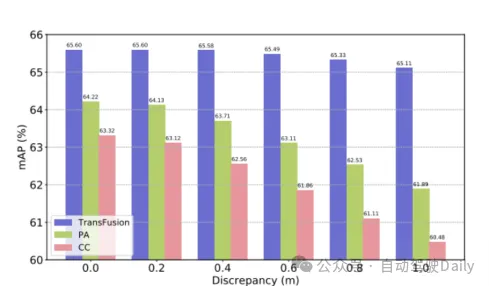

Using TransFusion-L as the baseline, different fusion frameworks are built to verify robustness: point-wise concatenation of lidar and image features (CC), the point augmentation fusion strategy (PA), and TransFusion. As shown in Table 3, when the nuScenes dataset is split into daytime and nighttime scenes, TransFusion brings a larger improvement at night. To simulate unavailable cameras, the features of several randomly chosen images in each frame are set to zero during inference. As Table 4 shows, detection performance then drops significantly: the mAP of CC and PA falls by 23.8% and 17.2%, respectively, while TransFusion maintains 61.7% mAP. Uncalibrated sensors also strongly affect 3D detection. In this experiment, a random translation offset is added to the camera-to-lidar transformation matrix; as shown in Figure 4, with a 1 m offset between the two sensors, the mAP of TransFusion drops by only 0.49%, while that of PA and CC drops by 2.33% and 2.85%, respectively.

Table 3 mAP during the day and night

Table 4 mAP under different numbers of images

Figure 4 mAP under sensor misalignment
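The two robustness tests, dropping camera images at inference and perturbing the camera-to-lidar extrinsics, can be simulated roughly as below. This is an illustrative sketch: the text only says that image features are zeroed and that a random translation offset is added, so drawing the offset direction uniformly at random in 3D, and the function names, are assumptions.

```python
import math
import random
from typing import List, Set, Tuple

Matrix4 = List[List[float]]


def drop_cameras(view_feats: List[List[float]], num_drop: int,
                 rng: random.Random) -> Tuple[List[List[float]], Set[int]]:
    """Simulate missing cameras by zeroing the features of `num_drop` random views."""
    dropped = set(rng.sample(range(len(view_feats)), num_drop))
    out = [[0.0] * len(f) if i in dropped else list(f) for i, f in enumerate(view_feats)]
    return out, dropped


def misalign_extrinsic(cam_to_lidar: Matrix4, offset_m: float,
                       rng: random.Random) -> Matrix4:
    """Add a translation of length `offset_m` in a random 3D direction to a 4x4 transform."""
    direction = [rng.gauss(0.0, 1.0) for _ in range(3)]
    norm = math.sqrt(sum(d * d for d in direction)) or 1.0
    out = [list(row) for row in cam_to_lidar]
    for i in range(3):
        out[i][3] += offset_m * direction[i] / norm
    return out


rng = random.Random(0)
views, dropped = drop_cameras([[1.0, 1.0] for _ in range(6)], num_drop=2, rng=rng)
identity = [[1.0 if r == c else 0.0 for c in range(4)] for r in range(4)]
shifted = misalign_extrinsic(identity, offset_m=1.0, rng=rng)
# Prints 2, then a value within rounding error of 1.0.
print(len(dropped), math.sqrt(sum(shifted[i][3] ** 2 for i in range(3))))
```

Only the translation column changes, so every lidar point still projects into the image, just at the wrong pixel; hard-association methods read the wrong feature directly, whereas attention-based fusion can still find the right region.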

Ablation experiments

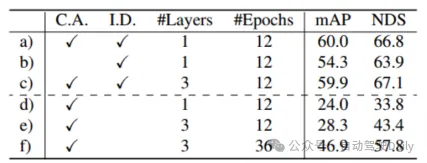

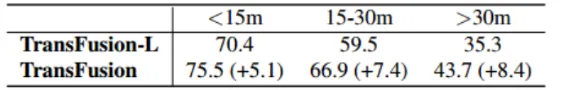

The results in rows d)-f) of Table 5 show that without query initialization, detection performance drops considerably. Although more training epochs and more decoder layers improve performance, they still fall short of the ideal result, which indirectly shows that the proposed query initialization strategy reduces the number of decoder layers needed. As shown in Table 6, image feature fusion and image-guided query initialization bring mAP gains of 4.8% and 1.6%, respectively. Table 7 compares accuracy over different distance ranges: compared with lidar-only detection, TransFusion improves performance on hard-to-detect objects and in distant regions.

Table 5 Ablation experiment of query initialization module

Table 6 Ablation experiment of fusion part

Table 7 Results by distance between the object center and the ego vehicle (in meters)

Conclusion

TransFusion is an effective and robust Transformer-based lidar-camera 3D detection framework with a soft association mechanism that adaptively determines where and what information should be taken from the image. TransFusion achieves state-of-the-art results on the nuScenes detection and tracking leaderboards and competitive results on the Waymo detection benchmark. Extensive ablation experiments demonstrate its robustness to poor image conditions.

DeepInteraction:

Main contribution:

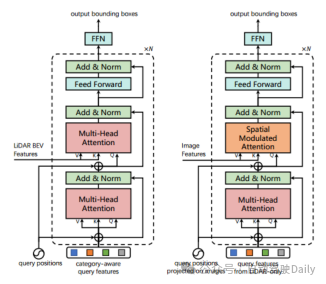

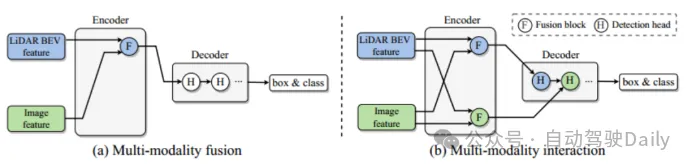

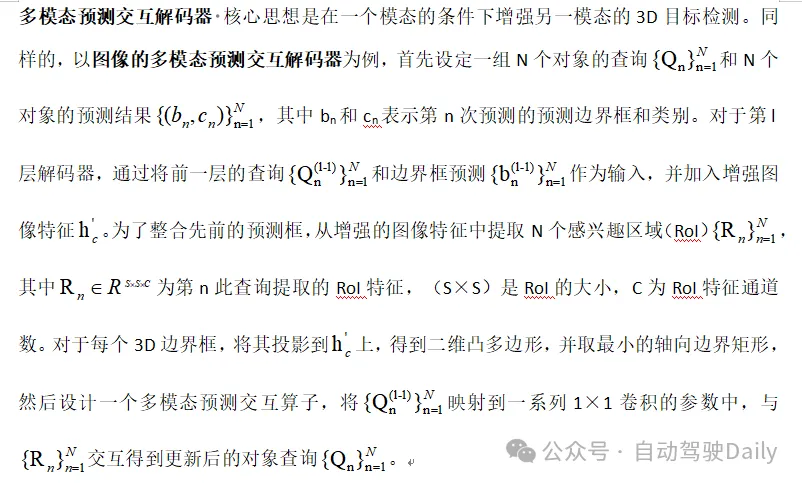

The main problem addressed is that existing multi-modal fusion strategies ignore modality-specific useful information, which ultimately limits model performance. Point clouds provide essential localization and geometric information at low resolution, while images provide rich appearance information at high resolution, so cross-modal information fusion is particularly important for improving 3D object detection. Existing fusion modules, as shown in Figure 1(a), merge the information of the two modalities into a single unified representation space. However, some information cannot be integrated into such a unified representation, which weakens the representational advantages specific to each modality. To overcome this limitation, the paper proposes a new modality interaction module (Figure 1(b)). The key idea is to learn and maintain two modality-specific representations and let them interact. The main contributions are as follows:

- Proposes a new modality interaction strategy for multi-modal 3D object detection, addressing the fundamental limitation of previous fusion strategies, which lose useful information from each modality;

- Designs the DeepInteraction architecture, consisting of a multi-modal representation interaction encoder and a multi-modal predictive interaction decoder.

Figure 1 Different fusion strategies

Module details

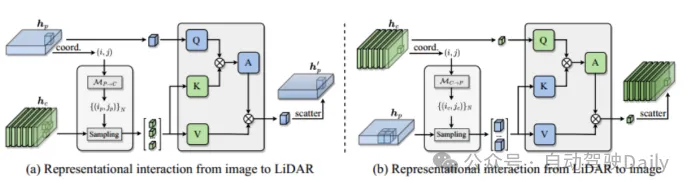

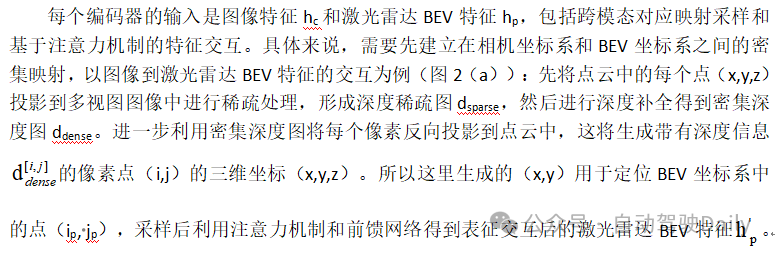

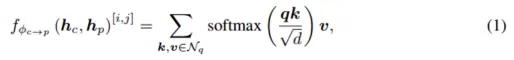

Multi-modal representation interaction encoder

The encoder is customized into a multiple-input multiple-output (MIMO) structure: it takes as input the two modality-specific scene representations extracted independently by the lidar and camera backbones, and outputs two enhanced representations. Each encoder layer consists of: i) multi-modal representation interaction (MMRI); ii) intra-modal representation learning (IML); iii) representation integration.

Figure 2 Multimodal representation interaction module
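The MIMO encoder layer described above can be sketched as two representations in and two out. This toy version is only meant to show the data flow: dense single-head attention over all tokens stands in for the paper's MMRI and IML operators, both modalities are assumed to share the same channel width, and the plain average used for representation integration is a placeholder rather than the paper's integration step. The default of two stacked layers mirrors the 2 encoder layers used in the experiments.

```python
import math
from typing import List, Tuple

Feats = List[List[float]]


def attend(queries: Feats, keys: Feats) -> Feats:
    """Single-head dot-product attention; keys double as values."""
    d = len(keys[0])
    out = []
    for q in queries:
        logits = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in keys]
        m = max(logits)
        w = [math.exp(x - m) for x in logits]
        total = sum(w)
        out.append([sum(wi * k[c] for wi, k in zip(w, keys)) / total for c in range(d)])
    return out


def add(a: Feats, b: Feats) -> Feats:
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def mean(a: Feats, b: Feats) -> Feats:
    return [[(x + y) / 2 for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def encoder_layer(lidar: Feats, image: Feats) -> Tuple[Feats, Feats]:
    """One MIMO layer: two modality-specific representations in, two enhanced ones out."""
    # i) MMRI stand-in: each modality queries the other one (cross-modal exchange)
    lidar_cross = add(lidar, attend(lidar, image))
    image_cross = add(image, attend(image, lidar))
    # ii) IML stand-in: each modality attends only to itself
    lidar_intra = add(lidar, attend(lidar, lidar))
    image_intra = add(image, attend(image, image))
    # iii) integration placeholder: plain average of the two paths, per modality
    return mean(lidar_cross, lidar_intra), mean(image_cross, image_intra)


def encode(lidar: Feats, image: Feats, num_layers: int = 2) -> Tuple[Feats, Feats]:
    for _ in range(num_layers):
        lidar, image = encoder_layer(lidar, image)
    return lidar, image


print(encoder_layer([[1.0, 0.0]], [[0.0, 1.0]]))  # ([[1.5, 0.5]], [[0.5, 1.5]])
```

The point of the structure is that neither output is a merged feature: the lidar tokens stay lidar tokens and the image tokens stay image tokens, each enriched by the other.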

Experiment

The datasets and metrics are the same as in the nuScenes part of TransFusion.

Experimental details

The image backbone is ResNet50. To save computation, input images are rescaled to 1/2 of their original size before entering the network, and the weights of the image branch are frozen during training. The voxel size is set to (0.075m, 0.075m, 0.2m), and the detection range is [-54m, 54m] on the X and Y axes and [-5m, 3m] on the Z axis. The model uses 2 encoder layers and 5 cascaded decoder layers. In addition, two settings are used for online test-server submissions, one with test-time augmentation (TTA) and one with model ensembling, called DeepInteraction-large and DeepInteraction-e, respectively. DeepInteraction-large uses Swin-Tiny as the image backbone, doubles the number of channels of the convolution blocks in the lidar backbone, sets the voxel size to [0.5m, 0.5m, 0.2m], and uses bidirectional flipping and yaw rotations of [0°, ±6.25°, ±12.5°] for test-time augmentation. DeepInteraction-e ensembles multiple DeepInteraction-large models with input lidar BEV grid sizes of [0.5m, 0.5m] and [1.5m, 1.5m].
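As a quick check on the base configuration, the voxel grid implied by the detection range and voxel size can be computed directly: 108 m / 0.075 m = 1440 voxels along X and Y, and 8 m / 0.2 m = 40 along Z, i.e. 1440 × 1440 × 40 = 82,944,000 voxels. The helper below is a hypothetical convenience function, not part of any specific library.

```python
from typing import Sequence, Tuple


def grid_shape(point_range: Sequence[float], voxel_size: Sequence[float]) -> Tuple[int, ...]:
    """Voxels per axis for point_range = (x_min, y_min, z_min, x_max, y_max, z_max)."""
    mins, maxs = point_range[:3], point_range[3:]
    # round() absorbs floating-point noise in the division (e.g. 0.075 is not exact in binary)
    return tuple(round((hi - lo) / size) for lo, hi, size in zip(mins, maxs, voxel_size))


print(grid_shape((-54.0, -54.0, -5.0, 54.0, 54.0, 3.0), (0.075, 0.075, 0.2)))  # (1440, 1440, 40)
```

A grid this fine is mostly empty, which is why sparse voxel backbones such as VoxelNet are used rather than dense 3D convolution.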

Data augmentation follows the TransFusion configuration: random rotation in [-π/4, π/4], random scaling with a factor in [0.9, 1.1], random translation along all three axes with a standard deviation of 0.5, and random horizontal flipping; CBGS class-balanced resampling is also used to balance the class distribution of nuScenes. The same two-stage training as TransFusion is adopted, with TransFusion-L as the lidar-only baseline. Training uses the Adam optimizer with a one-cycle learning-rate policy, a maximum learning rate of 1×10⁻³, weight decay of 0.01, and momentum from 0.85 to 0.95. The lidar baseline is trained for 20 epochs and the lidar-image fusion for 6 epochs, with a batch size of 16 on 8 NVIDIA V100 GPUs.

Comparison with state-of-the-art methods

Ablation experiment

Ablation experiment of decoder

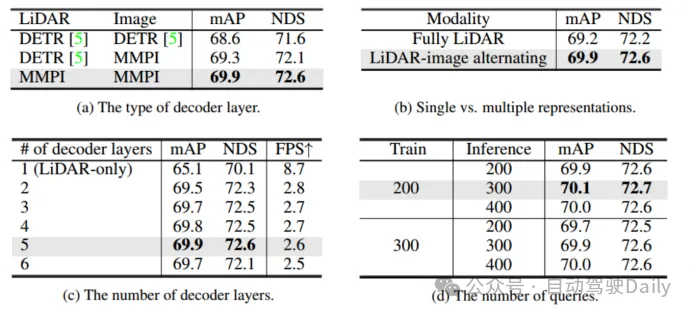

Table 3(a) compares the multi-modal predictive interaction decoder (MMPI) with DETR decoder layers, including a hybrid design (second row) that uses ordinary DETR decoder layers to aggregate features from the lidar representation and MMPI to aggregate features from the image representation. MMPI clearly outperforms DETR, improving mAP by 1.3% and NDS by 1.0%, while allowing flexible design combinations. Table 3(c) further explores how the number of decoder layers affects detection performance: performance keeps improving as layers are added, up to 5. Finally, different combinations of query numbers for training and testing are compared; performance is stable across choices, and 200/300 (training/testing) is used as the best setting.

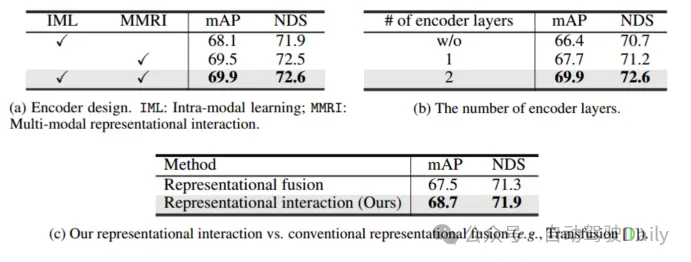

Ablation experiment of encoder

Table 4(a) shows that: (1) compared with intra-modal representation learning (IML), multi-modal representation interaction (MMRI) significantly improves performance; (2) MMRI and IML work well together and further improve performance. Table 4(b) shows that stacking encoder layers for iterative MMRI is beneficial.

Table 4 Ablation experiment of encoder

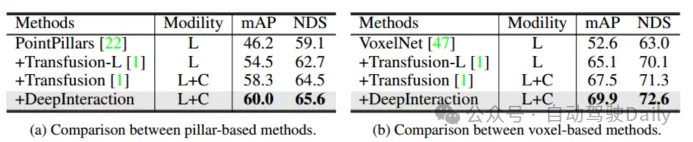

Ablation experiment of lidar backbone network

Two different lidar backbones, PointPillars and VoxelNet, are used to check the generality of the framework. For PointPillars, the voxel size is set to (0.2m, 0.2m), while the remaining settings are the same as in DeepInteraction-base. Thanks to the proposed multi-modal interaction strategy, DeepInteraction consistently improves over the lidar-only baseline with either backbone (5.5% mAP for the voxel-based backbone and 4.4% mAP for the pillar-based backbone). This shows that DeepInteraction generalizes across different point cloud encoders.

Table 5 Evaluation of different lidar backbones

Conclusion

This work presents DeepInteraction, a new 3D object detection method that explores the inherent complementarity between modalities. The key idea is to maintain two modality-specific representations and establish interaction between them for both representation learning and predictive decoding. This strategy is designed to address the fundamental limitation of existing one-sided fusion methods, namely that the image representation is underutilized because it is treated only as an auxiliary source.

Summary of the two papers:

Both papers address 3D object detection based on lidar-camera fusion, and DeepInteraction can be seen as a further development of TransFusion. Together they suggest that one direction for multi-sensor fusion is to explore more efficient dynamic fusion methods that focus on the most useful information in each modality. Of course, all of this relies on high-quality information from both modalities. Multi-modal fusion will have very important applications in fields such as autonomous driving and intelligent robotics. As the information extracted from different modalities becomes richer, more and more data will be available, so how to use this data more efficiently is also a question worth thinking about.
