Tsinghua team launches new platform: using decentralized AI to break computing power shortage

- WBOY

- 2024-04-17 18:16:14

A recent estimate highlights how dramatically demand for computing power is growing in the AI field.

According to industry experts, training OpenAI's Sora requires roughly 4,200 to 10,500 NVIDIA H100 GPUs running for about one month, and once the model moves into the inference stage, its computing costs will quickly exceed those of training.
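To put the quoted range in perspective, the snippet below converts it into GPU-hours. It is only a back-of-envelope sketch that assumes "one month" means 30 days of round-the-clock use; the helper name `gpu_hours` is illustrative.

```python
# Back-of-envelope estimate of Sora's training compute in GPU-hours,
# using the expert range quoted above (4,200-10,500 H100s for ~1 month).
# Assumption: "1 month" is taken as 30 days of continuous use.
HOURS_PER_MONTH = 30 * 24  # 720 hours


def gpu_hours(num_gpus: int, hours: int = HOURS_PER_MONTH) -> int:
    """Total GPU-hours for num_gpus GPUs running for `hours` hours."""
    return num_gpus * hours


low = gpu_hours(4_200)
high = gpu_hours(10_500)
print(f"{low:,} to {high:,} H100 GPU-hours")  # 3,024,000 to 7,560,000 H100 GPU-hours
```

Under that assumption, even the low end comes to roughly three million H100 GPU-hours before any inference load is counted.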

If this trend continues, GPU supply may struggle to keep up with the ongoing demand from large models.

However, a new trend has recently emerged overseas that may offer a way out of the looming "computing power shortage": decentralized AI.

Three weeks ago, on March 23, Stability AI unexpectedly announced the resignation of its CEO, Emad Mostaque, who revealed that his next move would be to pursue the “dream of decentralized AI.”

However, because technical pain points such as the uncertainty and instability of decentralized networks are hard to solve, the previous wave of decentralized AI has struggled to gain real traction in the era of large models.

Qubits recently learned of NetMind.AI, a company founded overseas by a Tsinghua team and focused on decentralized AI. In 2023, NetMind released a white paper detailing its decentralized computing-power-sharing platform, NetMind Power, which aims to solve the pain points of decentralized AI in the era of large models.

1. Making GPUs affordable for every developer

In September 2021, NetMind.AI launched a decentralized computing platform project called NetMind Power.

The world holds a great deal of computing power that has already been purchased: capacity in traditional data centers, underused capacity owned by small and medium-sized enterprises, and scattered GPUs owned by individuals. Much of it either sits idle or is used only for gaming and video rendering. Meanwhile, AI computing power is becoming increasingly scarce. AI researchers, small and medium-sized enterprises (especially AI startups), and traditional companies taking on AI projects are all held back by its high cost and high barrier to entry.

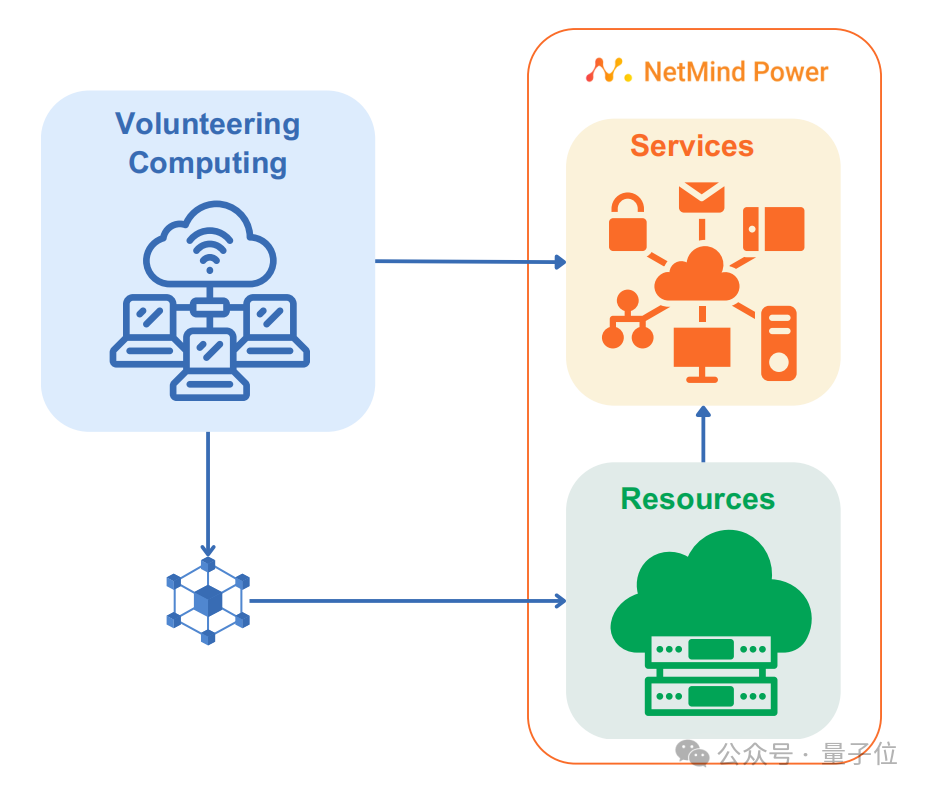

NetMind Power has built a computing network dedicated to decentralization. It uses NetMind's in-house core technology to pool computing resources from around the world and provide easy-to-use, affordable AI computing services to the AI industry.

△NetMind Power is an economical choice for obtaining computing power, providing users with efficient and affordable computing resource solutions.

NetMind Power has so far aggregated thousands of GPUs, including NVIDIA H100, A100, RTX 4090 and RTX 3090 cards.

Four highlights of the platform:

1. Decentralized dynamic clusters - building reliable, efficient AI applications on highly uncertain computing power

The Power platform uses P2P-based dynamic distributed clustering technology, combined with its own routing and clustering algorithms and neural networks, to weave thousands of computing nodes into a powerful cluster architecture built specifically for demanding workloads such as AI applications.

When users run AI workloads on the Power platform, such as model training, fine-tuning or inference, Power's decentralized network evaluates the performance of computing nodes around the world within a very short time, and its scheduling algorithm quickly allocates the most suitable computing resources to serve the user.
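The article does not disclose how Power scores or chooses nodes. Purely as an illustration of the general idea of matching a job to suitable nodes, here is a minimal sketch that assumes each node reports free GPU memory, latency and price. The `Node` fields, the `select_nodes` function and its weights are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Node:
    # Hypothetical node descriptor; these fields are illustrative only.
    node_id: str
    gpu_model: str
    free_mem_gb: float
    latency_ms: float      # measured round-trip latency to the user
    price_per_hour: float  # provider's asking price


def select_nodes(nodes, mem_needed_gb, k, latency_weight=1.0, price_weight=10.0):
    """Return the k best-scoring nodes that have enough free GPU memory.

    Lower score is better: a weighted sum of latency and price.
    This is a toy heuristic, NOT NetMind's scheduling algorithm.
    """
    eligible = [n for n in nodes if n.free_mem_gb >= mem_needed_gb]
    if len(eligible) < k:
        raise RuntimeError(f"only {len(eligible)} eligible nodes, need {k}")

    def score(n):
        return latency_weight * n.latency_ms + price_weight * n.price_per_hour

    return sorted(eligible, key=score)[:k]


pool = [
    Node("a", "H100", 80, 120, 2.5),
    Node("b", "A100", 40, 30, 1.2),
    Node("c", "RTX 4090", 24, 10, 0.4),
    Node("d", "RTX 3090", 24, 200, 0.3),
]
chosen = select_nodes(pool, mem_needed_gb=24, k=2)
print([n.node_id for n in chosen])  # ['c', 'b']
```

A real scheduler would also need to weigh interconnect topology, node reliability and current load. The weighted score here stands in for all of that.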

Power also offers dynamic clustering strategies for enterprise (B2B) customers: it can intelligently regroup and configure nodes within seconds to provide customizable, highly scalable and highly redundant dedicated clusters.
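One simple way to read "highly redundant dedicated clusters" is a cluster that keeps standby nodes ready and swaps one in when an active node drops out. The sketch below illustrates that reading only. The `DedicatedCluster` class and its parameters are hypothetical and do not describe Power's actual mechanism.

```python
class DedicatedCluster:
    """Hypothetical sketch: a dedicated cluster that keeps `size` active nodes
    plus `spares` hot standbys, refilling from a shared pool when a node fails."""

    def __init__(self, pool, size, spares):
        if len(pool) < size + spares:
            raise RuntimeError("not enough nodes in the pool")
        self.pool = list(pool)
        self.active = [self.pool.pop(0) for _ in range(size)]
        self.spares = [self.pool.pop(0) for _ in range(spares)]

    def handle_failure(self, node_id):
        """Swap a failed active node for a standby, then top up the standbys."""
        if node_id not in self.active:
            return  # unknown or already-replaced node: nothing to do
        if not self.spares:
            raise RuntimeError("no standby node available")
        self.active[self.active.index(node_id)] = self.spares.pop(0)
        if self.pool:
            self.spares.append(self.pool.pop(0))


cluster = DedicatedCluster(["n1", "n2", "n3", "n4", "n5", "n6"], size=3, spares=1)
cluster.handle_failure("n2")
print(cluster.active, cluster.spares)  # ['n1', 'n4', 'n3'] ['n5']
```

Because a standby is already waiting, a failed node can be replaced without first provisioning a new one. A production system would also need health checks and state migration, which this sketch leaves out.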

2. A complete AI ecosystem: lowering the barrier to using computing power and expanding application scenarios for the decentralized network

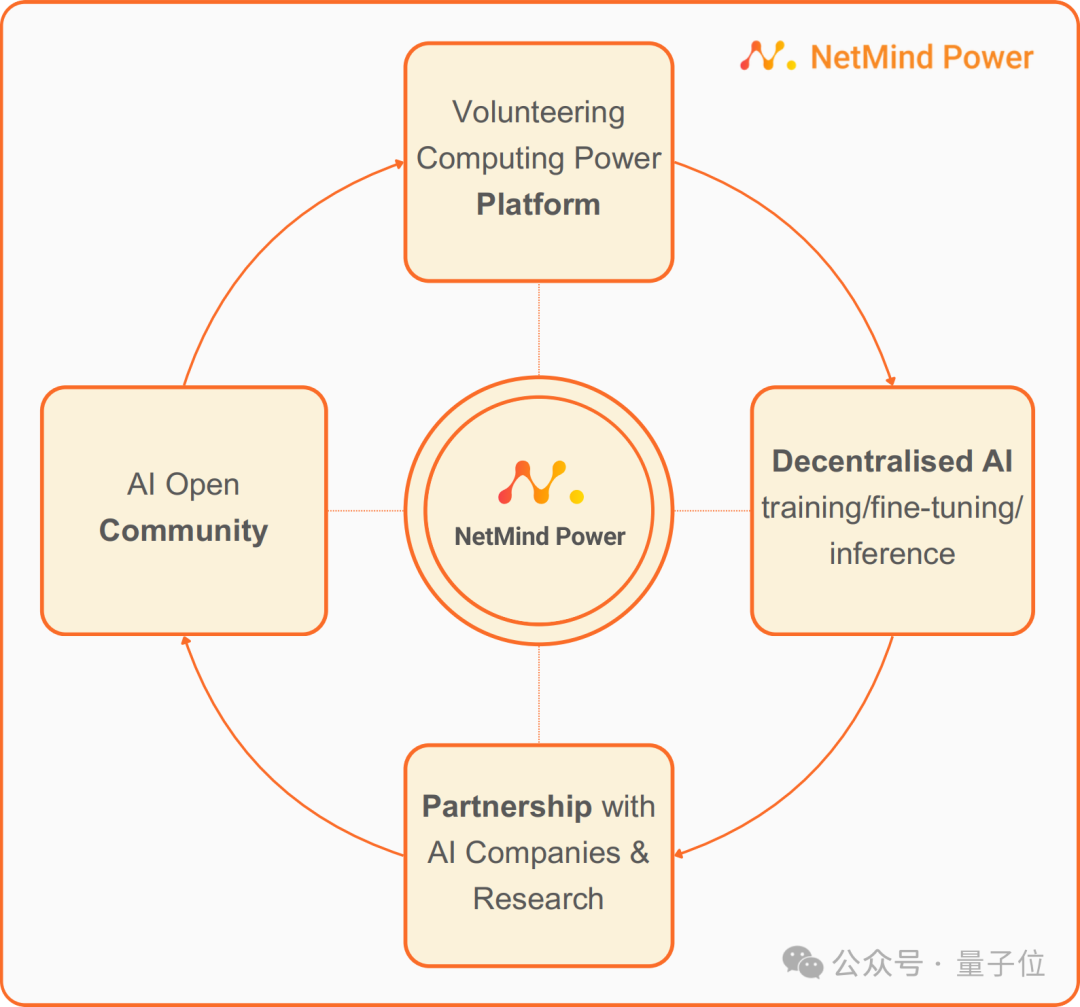

Drawing on NetMind’s years of experience in the AI field, the Power network will offer more than basic computing power services. It will also include AI ecosystem foundations such as open-source model libraries, AI datasets, and data and model encryption, along with end-to-end services for model training, inference and deployment. Together these form a MaaS (Model as a Service) platform that empowers both computing power providers and the AI application side.

Power’s MaaS platform is aimed at scientific researchers, small and medium-sized AI companies, and AI projects at traditional enterprises, and it will significantly lower the barrier to using computing power. This matters most for small and medium-sized enterprises and traditional enterprises that lack in-house AI development expertise.

Traditional computing power providers, in turn, can reach more users through the Power network. With Power’s MaaS platform, they can also expand into new application scenarios and earn higher returns. In this way, the Power network can bring traditional small and medium-sized centralized computing capacity into the decentralized network, greatly expanding its scale.

3. Asynchronous training algorithms - solving network bottlenecks and tapping the potential of idle computing power

In machine learning today, and especially in large language model training, synchronous distributed training across GPUs usually requires dedicated GPU interconnect cables or high-bandwidth internal networks. This inevitably raises the complexity, barrier to entry and cost of training.
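A rough calculation shows why synchronous training depends so heavily on fast links. This sketch uses illustrative assumptions that are not from the article: a 7-billion-parameter model with 2-byte (16-bit) gradients, sent once per step as a single uncompressed copy. It ignores collective algorithms such as ring all-reduce. The helper `transfer_seconds` is hypothetical.

```python
def transfer_seconds(num_params, bytes_per_param, link_gbps):
    """Seconds to send one full gradient copy over a link of link_gbps gigabits/s."""
    bits = num_params * bytes_per_param * 8
    return bits / (link_gbps * 1e9)


# Illustrative assumptions (not from the article): a 7-billion-parameter model,
# 16-bit (2-byte) gradients, one uncompressed transfer per training step.
PARAMS = 7e9
for label, gbps in [("1 Gbps internet link", 1), ("100 Gbps data-center link", 100)]:
    print(f"{label}: {transfer_seconds(PARAMS, 2, gbps):.2f} s per exchange")
```

Under these assumptions the exchange takes about 112 seconds on a 1 Gbps link versus about 1.12 seconds on a 100 Gbps link. If every step must wait for it, ordinary internet connections leave GPUs idle for most of each step.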

Using its self-developed model partitioning and asynchronous data techniques, NetMind Power breaks through the network speed and bandwidth barriers of distributed training. Even training nodes scattered across different corners of the globe can take part together in training huge models.
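NetMind has not published the details of its asynchronous technique. For readers unfamiliar with the general idea, the sketch below simulates classic asynchronous SGD with a parameter server, which is a standard technique and not NetMind's method. Workers of different speeds push gradients without waiting for one another, so slow workers contribute gradients computed on stale parameters. The toy loss, step times and learning rate are arbitrary assumptions.

```python
import heapq


def grad(w, target=3.0):
    """Gradient of the toy loss f(w) = (w - target) ** 2."""
    return 2.0 * (w - target)


def async_sgd(step_times=(1.0, 1.3, 2.0, 3.7), updates=600, lr=0.02):
    """Event-driven simulation of asynchronous SGD with a parameter server.

    Each worker pulls the current parameter, spends its own step time
    computing a gradient, and pushes it back. The server applies every
    gradient as soon as it arrives, without waiting for the other workers,
    so slower workers push gradients computed on stale parameters.
    """
    w = 0.0
    version = 0  # number of updates the server has applied
    # Event queue entries: (finish_time, worker_id, snapshot_of_w, version_pulled)
    events = [(t, i, w, version) for i, t in enumerate(step_times)]
    heapq.heapify(events)
    max_staleness = 0
    while version < updates:
        now, i, snapshot, pulled_at = heapq.heappop(events)
        w -= lr * grad(snapshot)  # apply a possibly stale gradient immediately
        max_staleness = max(max_staleness, version - pulled_at)
        version += 1
        # The worker pulls the fresh parameter and starts its next step.
        heapq.heappush(events, (now + step_times[i], i, w, version))
    return w, max_staleness


w, staleness = async_sgd()
print(f"w = {w:.4f}, worst staleness = {staleness} updates")
```

Even with stale gradients, the parameter converges to the minimum at 3.0 here. The trade-off is that staleness must stay modest relative to the learning rate, which is one reason asynchronous schemes need careful tuning.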

4. Model encryption and data isolation - solving security problems in decentralized networks

Power provides its own model encryption technology to protect users' AI models and data in decentralized volunteer-computing scenarios. All network communication is encrypted to secure data in transit. Data isolation and model splitting ensure that no single node in the decentralized network can obtain the complete data or model, which greatly improves security.
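The model-splitting idea can be illustrated with a generic pipeline-style partition, where each node holds only a contiguous slice of the layers and passes activations to the next node. This is a minimal sketch, not NetMind's implementation. It does not model the encryption or data isolation the article also describes, and the `Layer`, `WorkerNode`, `split_model` and `pipeline_forward` names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Layer:
    """Toy layer: y = max(0, scale * x + shift), applied elementwise."""
    scale: float
    shift: float

    def __call__(self, xs):
        return [max(0.0, self.scale * x + self.shift) for x in xs]


@dataclass
class WorkerNode:
    """A node that only ever holds its own contiguous slice of the model."""
    name: str
    layers: list = field(default_factory=list)

    def forward(self, xs):
        for layer in self.layers:
            xs = layer(xs)
        return xs


def split_model(layers, num_nodes):
    """Partition layers into num_nodes contiguous shards (pipeline-style)."""
    if num_nodes > len(layers):
        raise ValueError("more nodes than layers")
    base, extra = divmod(len(layers), num_nodes)
    nodes, start = [], 0
    for i in range(num_nodes):
        size = base + (1 if i < extra else 0)
        nodes.append(WorkerNode(f"node-{i}", layers[start:start + size]))
        start += size
    return nodes


def pipeline_forward(nodes, xs):
    """Pass activations from node to node; no single node holds every layer."""
    for node in nodes:
        xs = node.forward(xs)
    return xs


model = [Layer(2.0, -1.0), Layer(0.5, 0.0), Layer(1.0, 3.0), Layer(3.0, 0.0), Layer(1.0, -2.0)]
nodes = split_model(model, 3)
print([len(n.layers) for n in nodes])        # [2, 2, 1]
print(pipeline_forward(nodes, [1.0, 0.25]))  # [8.5, 7.0]
```

The split model gives the same output as the whole model, but each node sees only its own layers and the activations it receives.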

2. Another Tsinghua-background team with years of entrepreneurship overseas

NetMind.AI's core team comes from Tsinghua University and has worked in the AI field for more than 10 years.

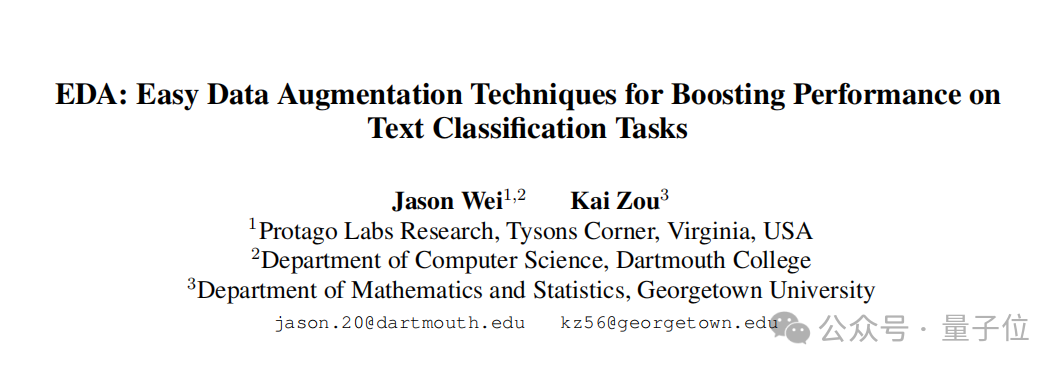

The company’s founder and CEO, Kai Zou, graduated from Tsinghua University’s basic science class in mathematics and physics in 2010, and received a master’s degree in mathematics and statistics from Georgetown University in 2013.

He is a serial entrepreneur who has led both ProtagoLabs and the non-profit organization AGI Odyssey. He is also an angel investor and has invested in many AI startups, including Haiper.ai, Auto Edge, Qdot and Orbit.

Notably, the paper "EDA: Easy Data Augmentation Techniques for Boosting Performance on Text Classification Tasks," co-authored by Kai Zou and OpenAI researcher Jason Wei, has been cited more than 2,000 times. The CEO and his team firmly believe that the platform they have built should serve both the scholars doing hands-on academic research and the corporate engineers driving AI forward.

The company’s CTO received a master’s degree in computer science from George Washington University in 2016. Before joining NetMind.AI, he was a senior team leader at Microsoft. He has extensive experience with Web3, blockchain technology, distributed systems, Kubernetes, cloud computing, Azure and AWS, and professional skills in edge computing, full-stack development and machine learning.

3. The ultimate ideal: bringing AI into every household

Behind NetMind’s vision of decentralized AI lies an ideal of inclusive technology.

Looking back at the history of IT, a push toward decentralization has often emerged at moments when computing resources became sharply more centralized. As a bottom-up force, it pushes back against giants trying to monopolize resources and sets off a new wave of technological inclusiveness, bringing new technologies to every corner of the world.

Today’s large model market may be at such a moment.

After a full year of rapid growth in the large model market, few startups have truly gained a foothold. Apart from a handful of star unicorns, the future of large models appears to be converging on tech giants such as Microsoft, Google and Nvidia. Over time, a few companies may gain monopoly control over the pricing, availability and accessibility of computing resources.

At such a moment, a democratizing narrative like NetMind Power’s is needed to sketch a new blueprint for the AGI story.

NetMind already has partnerships in both academia and industry.

On the academic side, NetMind Power has partnered with many leading universities in China and abroad, including top computer science institutions such as the University of Cambridge, the University of Oxford, Carnegie Mellon University, Northwestern University, Tsinghua University, Huazhong University of Science and Technology, Rice University, Fudan University and Shanghai Jiao Tong University.

On the business side, NetMind Power provides enterprises with AI computing solutions built on its decentralized network, letting them focus on model development and product innovation. A growing number of companies are using NetMind Power to speed up the launch of innovative AI products. For example, Haiper.ai, a text-to-video team that has recently been gaining momentum in North America, has deeply integrated its model training and inference with the NetMind Power platform.

In the future, NetMind Power will gradually grow into a decentralized AI community and accelerate global AI innovation.

Machine learning practitioners, academic researchers and companies on the AI application side can find the computing power and models they need on the NetMind Power platform. They can also host their own trained models there, and even offer those models to other users on the platform for a fee.

Users can not only draw on the platform’s computing power for their own training needs, but also offer their trained models through the platform to other people and companies that need them, passing value along layer by layer.

Over the longer term, making AI universal and democratic is a prerequisite for truly realizing AGI. NetMind.AI, an early mover, is doing its part: it is seeking more partners and taking small, solid steps toward an era of democratized AGI.
