
Opera becomes the first browser to integrate local LLMs

- Reposted by 王林

- 2024-04-04 09:07:11

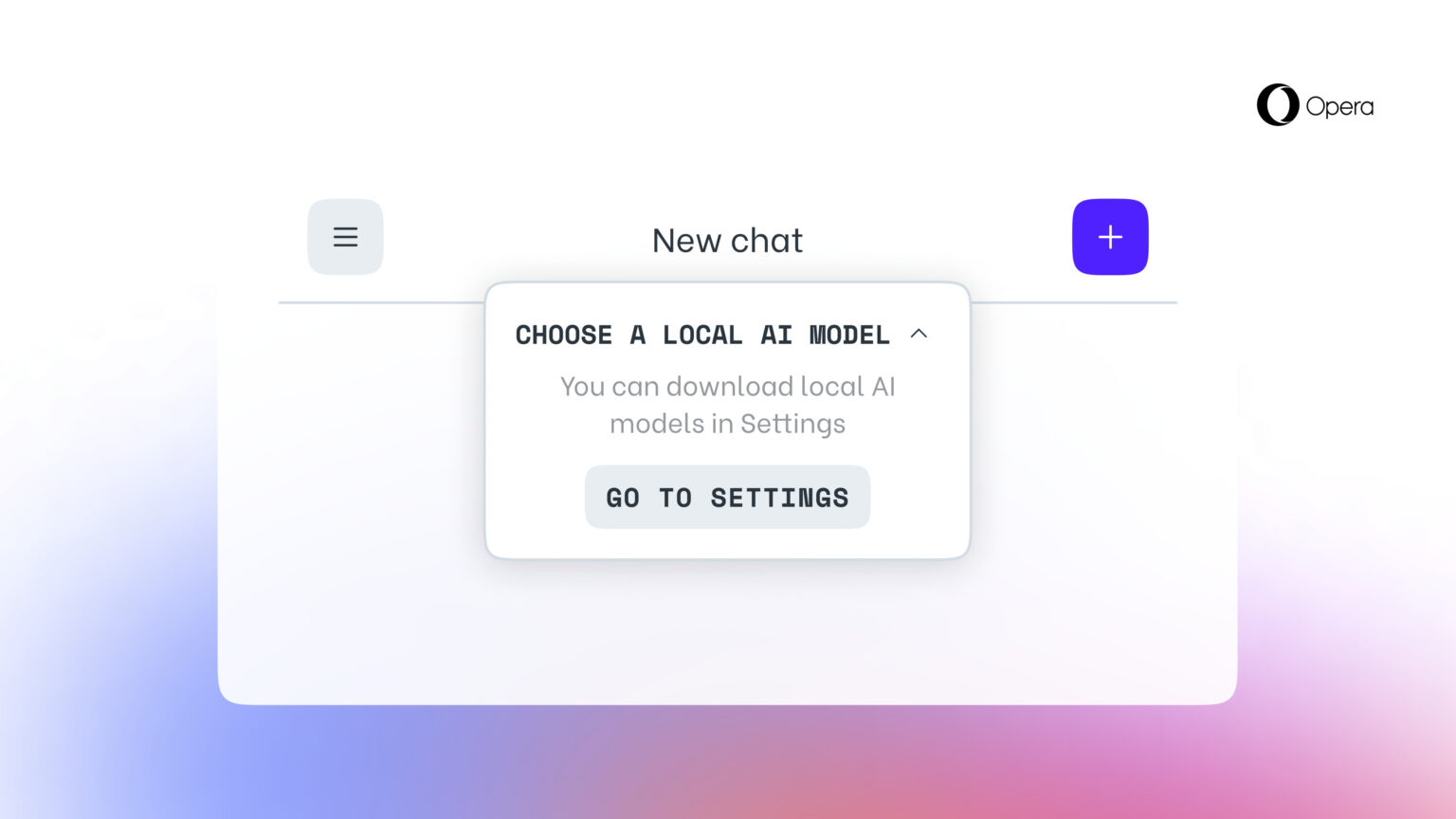

Opera today released a new version of Opera One that adds experimental support for 150 local large language models (LLMs), making Opera the first major browser with built-in local AI models.

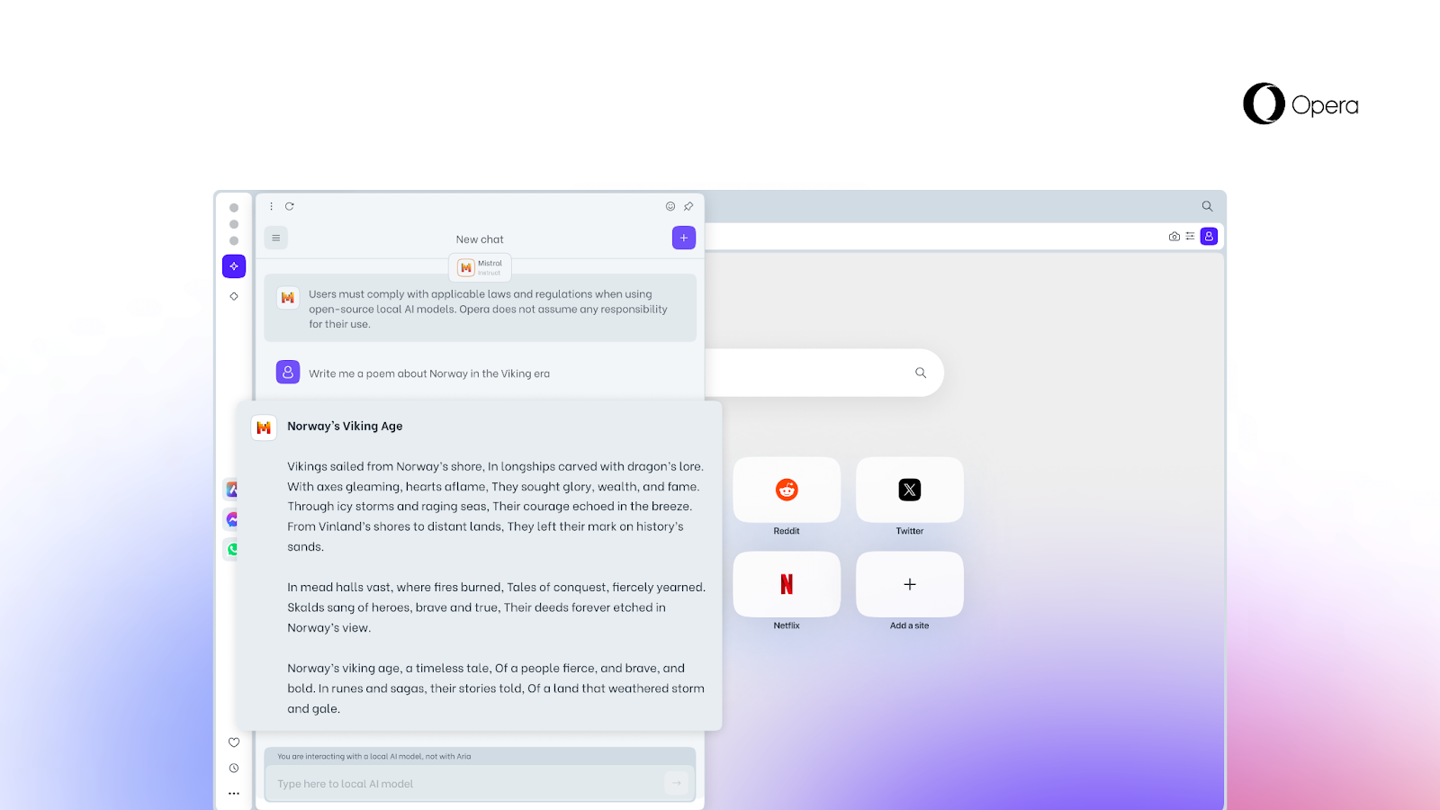

Compared with cloud-based AI, local models like these offer better privacy and faster responses, but their output quality may fall short of cloud models, which have far more parameters.

Opera integrates a range of popular LLMs, including Meta's Llama, Vicuna, Google's Gemma, and Mistral AI's Mixtral.

Interested users can download the Opera One Developer build from Opera's developer website. This build lets users choose from a variety of local LLMs to download, with each model requiring 2-10 GB of storage.
