
Lanzhou insists on making every effort: for ToB scenarios, a large model with 10B parameters is enough

- 王林 (reposted)

- 2024-03-21 12:21:40

Focus, perfection, reputation, speed and cost.

Zhou Ming, founder and CEO of Lanzhou Technology, proposed this "Nine-Character Rule for Large Model Implementation". It builds on Lei Jun's "Seven-Character Rule" for the Internet and adds the word "cost".

He called 2024 the first year of large-model implementation, but at the same time stated firmly that this does not mean there is gold everywhere.

As for how to implement large models in practice, Zhou Ming pointed to the example of Lanzhou Technology, the large-model startup team he leads: it uses Lanzhou's "one horizontal, N vertical" system, takes the Mencius large model as the foundation, stays scenario-oriented, and ships products.

Put simply, this is a two-wheel drive of technology and application. While actively researching and mastering cutting-edge technologies, the company is committed to making sure those technologies are applied effectively.

On March 18, at Lanzhou's large-model technology and product launch event, Lanzhou Technology also signed a strategic cooperation agreement with 01.AI (Zero-One Everything).

At the event, Kai-fu Lee, Chairman of Sinovation Ventures and CEO of 01.AI (Zero-One Everything), said that the best large models in the world have already reached three times the average human level of intelligence: the average person can answer only 33 out of 100 questions correctly, while the best large AI models can answer more than 99.

He outlined four major trends he expects in the AI 2.0 era:

The most revolutionary AI 2.0 applications will be AI-First / AI-Native: the applications that ultimately stand out will belong to pioneers who dare to go all in on new technologies. The arrival of large language models has given AI-First applications a huge boost.

Large models start with text and will expand to "all modalities" in the future: cross-modal generation technology is a turning point in realizing cognitive and decision-making intelligence. Information in the real world is a composite of text, audio, vision, sensor data and the human sense of touch. Simulating the real world more accurately requires connecting capabilities across modalities, such as text-image and text-video cross-modal capabilities, or even comprehensive full-modal capabilities.

AI 2.0 will go beyond conversation, from chat tools to smart productivity tools: user experience, future interactive interfaces and business models will all undergo major changes.

AI 2.0 will enter the physical world and greatly boost social productivity: embodied intelligence can allow robots to manufacture robots, further enabling intelligent planning of production lines in the AI 2.0 era.

Focus on the research and development of 10B-100B parameter large models

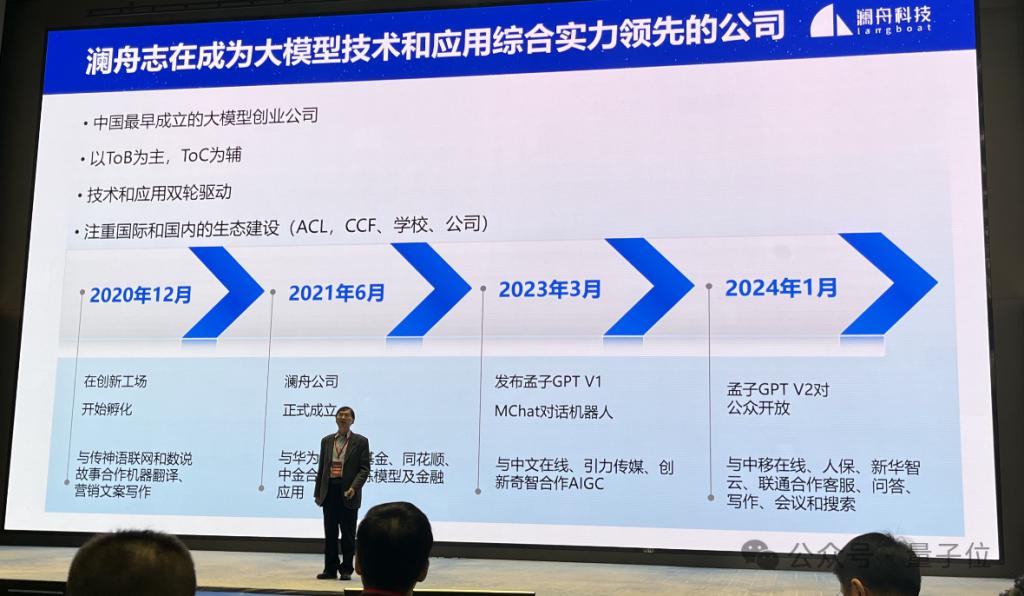

Lanzhou Technology was established in June 2021. It is one of the first teams in China to start a large model business.

In March last year, Lanzhou released Mencius GPT V1 (MChat). In January this year, Mencius large model GPT V2 (including Mencius large model - standard, Mencius large model - lightweight, Mencius large model - finance, and Mencius large model - encoding) was opened to the public.

Recently, the Lanzhou team completed training of the Mengzi3-13B large model.

A major contributor to the training of the Mencius large model is the Mengzi-3 data set, which totals 3T tokens and draws on web pages, code, books, papers and other high-quality data sources.

According to reports, at the end of this month (March 30), the Mengzi3-13B model will be open sourced on GitHub, HuggingFace, and the Moda and Shizhi AI communities.

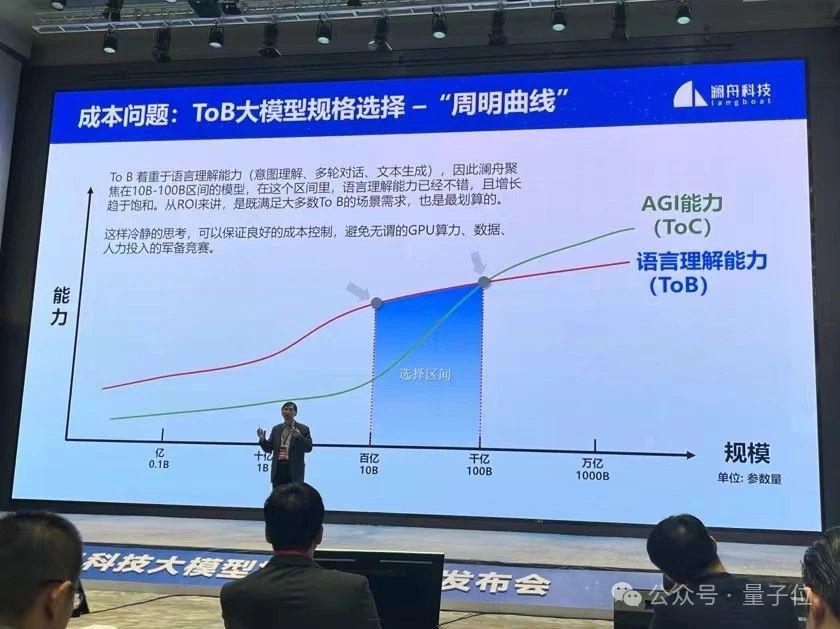

Why is the open-source version of the Mencius model a 13B model? Zhou Ming answered this question head on.

First of all, Lanzhou clearly focuses on serving ToB scenarios, supplemented by ToC.

In practice, the large models most frequently used in ToB scenarios mostly have 7B, 13B, 40B or 100B parameters, with sizes concentrated in the 10B-100B range.

Secondly, from an ROI perspective, models in this range not only meet the needs of these scenarios but are also the most cost-effective.

So for a long time, Lanzhou's goal has been to build industry large models at the 10B-100B parameter scale. This makes it easy to understand why 13B was chosen for the open-source version.

Zhou Ming explained that he is in fact a believer in scaling laws, but that entrepreneurship is different from scientific research.

"First, a large model of this size can already solve 80% of the problems; second, it keeps the team relatively steady, so that it does not grow restless over the ever-escalating race for model scale," Zhou Ming said. He added that this kind of calm thinking ensures good cost control and avoids unnecessary competition for GPU computing power, data, and manpower.

"One horizontal N vertical" system

At the press conference, Lanzhou announced its own "one horizontal, N vertical" system.

"One horizontal" is the model layer, made up of the models developed on Mencius large model technology;

"N vertical" refers to the key technologies and products for ToB applications built on the Mencius large model.

It is understood that Lanzhou is currently focusing on the financial industry, programming assistance and other fields, aiming to create vertical large models that are closer to industry scenarios by using more comprehensive, professional and high-quality domain data.

Building on the "one horizontal" layer of the Mencius GPT general model, Li Jingmei, partner and chief product officer of Lanzhou Technology, introduced Lanzhou's application products, including:

AI document understanding: covers professional PDF document parsing and information extraction, providing a stronger foundation for document understanding in RAG solutions;

AI document Q&A: provides solutions, based on enterprise needs, for building private enterprise intelligent knowledge bases;

AI document-assisted writing: lets users upload multiple documents as reference materials, supports customized multi-level questions and writing outlines, and uses large models to automatically generate complete first drafts of articles as required;

Machine translation platform: focuses on translation between Chinese and the world's major languages, and on professional translation in more than 20 fields;

Lanzhou Zhihui: a product focused on intelligent analysis and question answering for meeting content, a large-model-native intelligent meeting assistant;

Lanzhou AI Search: a search engine in the era of large language models.

Over the past year, the field of large models has been changing by the day.

Doing whatever OpenAI does is fine in the short term, but not in the long term. We must have our own innovative ideas.

Zhou Ming shared his views on how to play to one's strengths, avoid one's weaknesses, and find a unique path of innovation.

The most critical first step is for the company to have a clear positioning. The positioning of Lanzhou Technology is significantly different from other large-model startups in China.

He gave an example: Lanzhou is positioned as a comprehensive company for "large-model technology enterprise scenario applications." "We hope to be the ones who best understand applications from a technical perspective and best understand technology from an application perspective. At the same time, we hope technology and applications form an ecological connection that allows both sides to iterate quickly."

At the same time, it is still necessary to focus on implementation: implementation creates value and drives innovation.

And it should be noted that innovation and implementation complement each other.

Don't innovate blindly or implement blindly; connect the two so that both can iterate quickly.

Finally, Zhou Ming offered a sincere reminder:

This is the first year of large-model implementation, so is there gold everywhere? No. I can say responsibly that there are many areas we have not yet explored.

For example, how do we solve the last mile of implementing a large model? What is the business model for large models? How do we strengthen delivery capabilities? How do we improve product commercialization?

The implementation of large models has in fact only just begun.
