Learn linear algebra well and play with recommendation systems

Author | Wang Hao

Reviewer | Chonglou

When it comes to 21st-century Internet technology, the vigorous development of information retrieval is a highlight alongside the birth of new programming languages such as Python, Rust and Go. The first purely technology-driven business model on the Internet was the search engine, represented by Google and Baidu. What few people expect, however, is that the recommendation system was born much earlier: as early as 1992, the first recommendation system in history was published as a paper, before Google and Baidu even existed.

Search engines were regarded as a necessity and quickly produced many unicorns. By contrast, technology companies built around recommendation systems did not appear until the rise of Toutiao and Douyin in the 2010s, and there is no doubt that Toutiao and Douyin have become the most successful representatives of the field. If the first generation of information retrieval technology, the search engine, was pioneered by Americans, then the second generation, the recommendation system, is firmly led by China. We are now entering a third generation: information retrieval based on large language models. Europe and the United States moved first, but China and the United States are now advancing side by side.

In recent years, RecSys, the authoritative conference in the recommender systems field, has frequently given its best paper award to work on sequential recommendation, which shows that the field is paying more and more attention to vertical applications. One vertical application is just as important but has not yet made big waves: context-aware recommendation, abbreviated CARS. We occasionally see a CARS workshop, but such workshops publish no more than 10 papers a year, and activity in the area remains limited.

What can CARS be used for? First, it is already used by fast food companies such as Burger King. It can also recommend music based on the context while the user is driving. Going further, could we recommend travel plans based on weather conditions, or meals based on a user's physical condition? As long as we use our imagination, we can always find new practical applications for CARS.

So the question arises: if CARS is so widely used, why are so few papers published on it? The reason is simple: there are almost no public CARS datasets. Currently the best public dataset for CARS is LDOS-CoMoDa from Slovenia; beyond it, other datasets are hard to find. LDOS-CoMoDa used surveys to collect contextual data from users while they watched movies, which made CARS research possible for most researchers. The data was released around 2012 to 2013, but very few people know about this dataset today.

Back to the main topic: this article introduces the MatMat / MovieMat algorithms and the PowerMat algorithm, which are powerful tools for solving CARS problems. First, let's look at how MatMat formulates the CARS problem. It redefines the user rating matrix by replacing each rating value with a square matrix: the diagonal elements of that square matrix are the original rating, and the off-diagonal elements hold the context information.

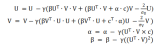
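The construction above can be sketched in Python with NumPy. This is a minimal illustration under our own assumptions, not the paper's code: the block size `d`, the function names `rating_block` and `block_rating_matrix`, and the layout that fills the d(d-1) off-diagonal slots in row-major order from a context vector are all hypothetical, since the exact placement of the context information is only shown in the figure.

```python
import numpy as np


def rating_block(rating, context, d):
    """Build a d x d block with the rating on the diagonal.

    Hypothetical layout: the d*(d-1) off-diagonal slots are filled
    in row-major order from the `context` values.
    """
    context = np.asarray(context, dtype=float)
    if context.size != d * (d - 1):
        raise ValueError(f"expected {d * (d - 1)} context values, got {context.size}")
    block = np.full((d, d), float(rating))  # every entry starts as the rating
    off_diag = ~np.eye(d, dtype=bool)       # mask selecting off-diagonal positions
    block[off_diag] = context               # boolean assignment follows row-major order
    return block


def block_rating_matrix(triples, n_users, n_items, d):
    """Assemble the (n_users*d) x (n_items*d) block rating matrix.

    `triples` holds (user, item, rating, context) tuples; unobserved
    user-item blocks stay NaN so a training loop can skip them.
    """
    R = np.full((n_users * d, n_items * d), np.nan)
    for user, item, rating, context in triples:
        R[user * d:(user + 1) * d, item * d:(item + 1) * d] = rating_block(rating, context, d)
    return R
```

For example, with `d = 2`, a rating of 4 with context values 0.5 and 1.0 becomes the block `[[4.0, 0.5], [1.0, 4.0]]`.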

Below we define the loss function of the MatMat algorithm, which modifies the loss function of classic matrix factorization as follows:

where U and V are both matrices. In this way, the vector dot product in the original matrix factorization becomes a matrix multiplication. Consider the following example:

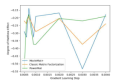
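The loss formula itself is not reproduced in this article. As a hedged sketch under our own assumptions (a squared Frobenius-norm error plus L2 regularization, which may differ from the paper's exact objective), the MatMat objective and its per-sample gradients could be written as below. Here R_ij is the d×d rating block of user i and item j, U_i is a d×k user matrix, V_j is a k×d item matrix, Ω is the set of observed user-item pairs, λ is the regularization weight, and E_ij = R_ij - U_i V_j; these symbols are introduced for illustration.

$$
\mathcal{L}(U, V) = \sum_{(i,j)\in\Omega} \left( \left\lVert R_{ij} - U_i V_j \right\rVert_F^2 + \lambda \left( \lVert U_i \rVert_F^2 + \lVert V_j \rVert_F^2 \right) \right)
$$

$$
\frac{\partial \mathcal{L}_{ij}}{\partial U_i} = -2\,E_{ij} V_j^{\top} + 2\lambda U_i,
\qquad
\frac{\partial \mathcal{L}_{ij}}{\partial V_j} = -2\,U_i^{\top} E_{ij} + 2\lambda V_j,
\qquad
E_{ij} = R_{ij} - U_i V_j
$$

With d = 1 the block R_ij is a single rating, U_i is a 1×k row and V_j is a k×1 column, so U_i V_j is the ordinary dot product and this objective reduces to classic regularized matrix factorization.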

We ran comparative performance experiments on the MovieLens Small dataset and obtained the following results:

As the results show, MatMat outperforms classic matrix factorization. Next, let's check the fairness of the recommendations:

As shown, MatMat also performs well on fairness metrics. Solving MatMat is relatively complicated; even the algorithm's inventor did not include the derivation in the paper. But as the saying goes, master linear algebra and you can go anywhere in the world without fear. I believe smart readers will be able to derive the relevant formulas and implement the algorithm. The original MatMat paper can be found at the following link: https://www.php.cn/link/9b8c60725a0193e78368bf8b84c37fb2 . This paper received the Best Paper Report Award at the international academic conference IEEE ICISCAE 2021.
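Since the derivation is left to the reader, here is one possible sketch of a MatMat-style training loop. It assumes (our assumption, not taken from the paper) a squared Frobenius-norm loss with L2 regularization, so each stochastic step is U_i += lr·(E V_jᵀ - λU_i) and V_j += lr·(U_iᵀ E - λV_j) with E = R_ij - U_i V_j. The names `train_matmat_sketch` and `squared_error`, the latent size `k`, and all hyperparameters are hypothetical.

```python
import numpy as np


def train_matmat_sketch(blocks, n_users, n_items, d, k=4, lr=0.01, reg=0.02,
                        epochs=200, seed=0):
    """SGD for an assumed MatMat-style model R_ij ~= U_i @ V_j.

    blocks: list of (user, item, R_ij) where R_ij is a d x d rating block.
    Returns U with shape (n_users, d, k) and V with shape (n_items, k, d).
    """
    rng = np.random.default_rng(seed)
    U = 0.1 * rng.standard_normal((n_users, d, k))
    V = 0.1 * rng.standard_normal((n_items, k, d))
    order = np.arange(len(blocks))
    for _ in range(epochs):
        rng.shuffle(order)  # visit observed blocks in a random order each epoch
        for idx in order:
            i, j, R_ij = blocks[idx]
            R_ij = np.asarray(R_ij, dtype=float)
            E = R_ij - U[i] @ V[j]            # d x d residual block
            step_U = E @ V[j].T - reg * U[i]  # negative half-gradient w.r.t. U_i
            step_V = U[i].T @ E - reg * V[j]  # negative half-gradient w.r.t. V_j
            U[i] += lr * step_U               # both steps use the pre-update factors
            V[j] += lr * step_V
    return U, V


def squared_error(blocks, U, V):
    """Total squared Frobenius error over the observed blocks (no regularization)."""
    return sum(float(np.sum((np.asarray(R_ij, dtype=float) - U[i] @ V[j]) ** 2))
               for i, j, R_ij in blocks)
```

Because each factor is a matrix rather than a vector, every update is a small matrix product; with `d = 1` the same loop degenerates to ordinary SGD matrix factorization.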

Applying the MatMat algorithm to context-aware movie recommendation gives a movie-specific instance named MovieMat. The rating matrix of MovieMat is defined as follows:

The author then conducted a comparative experiment:

On the LDOS-CoMoDa dataset, MovieMat performs much better than classic matrix factorization. Let's look at the fairness evaluation results:

In terms of fairness, classic matrix factorization achieves better results than MovieMat. The original MovieMat paper can be found at the following link: https://www.php.cn/link/f4ec6380c50a68a7c35d109bec48aebf .

Sometimes we face this problem: we arrive at a new location and have only context data but no user rating data. What then? Ratidar Technologies LLC (Beijing Daping Qizhi Network Technology Co., Ltd.) invented PowerMat, a CARS algorithm based on zero-shot learning, for exactly this case. The original PowerMat paper can be found at the following link: https://www.php.cn/link/1514f187930072575629709336826443 .

The inventor of PowerMat drew on MAP (maximum a posteriori) estimation and DotMat and defined the following MAP function:

where U is the user feature vector, V is the item feature vector, R is the user rating value, and C is the context variable. Specifically, we obtain the following formula:

Solving this problem with stochastic gradient descent yields the following formulas:

Inspecting these formulas, we find that no variable in them depends on the input data, so PowerMat is a zero-shot learning algorithm that depends only on the context. It can be applied in scenarios like this: tourists plan to visit a place they have never been to, so the only data available is the weather and other context data. We can use PowerMat to recommend attractions worth checking in at, and so on.

The following compares PowerMat with other algorithms:

This comparison shows that PowerMat and MovieMat are evenly matched, and both outperform the classic matrix factorization algorithm. The figure below shows that PowerMat also performs strongly on fairness metrics:

These comparative experiments show that PowerMat is an excellent CARS algorithm.

Internet data engineers often say that data is above all else, and around the 2010s there was a strong trend on the Internet of being bullish on data and bearish on algorithms. CARS is a good example: because most people have no access to relevant data, the development of this field has been greatly restricted. Thanks to the Slovenian researchers who made the LDOS-CoMoDa dataset public, we have the opportunity to advance this field. We also hope that more and more people will pay attention to CARS, implement CARS, and invest in CARS.

About the author

Wang Hao, former head of the Funplus Artificial Intelligence Laboratory, has held technical and executive positions at ThoughtWorks, Douban, Baidu, Sina and other companies. Over 13 years at Internet, fintech, gaming and other companies, he has gained deep insight and rich experience in fields such as artificial intelligence, computer graphics and blockchain. He has published 42 papers at international academic conferences and in journals, and has won the IEEE SMI 2008 Best Paper Award and the Best Paper Report Award at ICBDT 2020, IEEE ICISCAE 2021, AIBT 2023 and ICSIM 2024.
