Further accelerating deployment: compressing end-to-end motion planning models for autonomous driving

Original title: On the Road to Portability: Compressing End-to-End Motion Planner for Autonomous Driving

Paper link: https://arxiv.org/pdf/2403.01238.pdf

Code link: https://github.com/tulerfeng/PlanKD

Author affiliation: Beijing Institute of Technology; ALLRIDE.AI; Hebei Provincial Key Laboratory of Big Data Science and Intelligent Technology

Paper Idea:

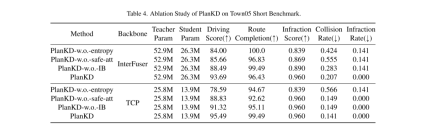

End-to-end motion planning models built on deep neural networks have shown great potential for realizing fully autonomous driving. However, their oversized networks demand more computing time and resources, which makes them impractical to deploy on resource-constrained systems. Knowledge distillation offers a promising remedy: it compresses a model by having a smaller student model learn from a larger teacher model. Nonetheless, how to apply knowledge distillation to compress motion planners has so far been unexplored. This paper proposes PlanKD, the first knowledge distillation framework tailored for compressing end-to-end motion planners. First, driving scenarios are inherently complex and often contain information that is irrelevant to planning or even noisy, and transferring such information would not benefit the student planner. The paper therefore designs a strategy based on the information bottleneck that distills only planning-relevant information instead of transferring all information indiscriminately. Second, different waypoints in the output planned trajectory may vary in importance to motion planning, and slight deviations at some critical waypoints may lead to collisions. The paper therefore designs a safety-aware waypoint-attentive distillation module that assigns adaptive weights to waypoints according to their importance, encouraging the student model to imitate the more critical waypoints more accurately and thereby improving overall safety. Experiments show that PlanKD can significantly improve the performance of small planners and significantly reduce their inference time.

Main Contributions:

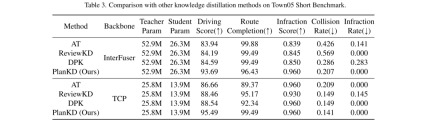

- This paper makes the first attempt to explore dedicated knowledge distillation methods for compressing end-to-end motion planners in autonomous driving.

- This paper proposes PlanKD, a general and novel framework that enables student planners to inherit planning-relevant knowledge at the intermediate layer and facilitates accurate matching of critical waypoints to improve safety.

- Experiments show that PlanKD can significantly improve the performance of small planners, thereby providing a more portable and efficient solution for resource-limited deployment.

Network Design:

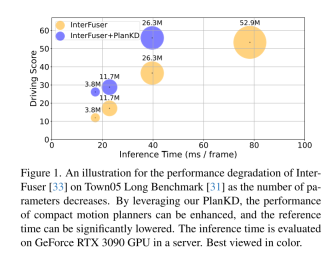

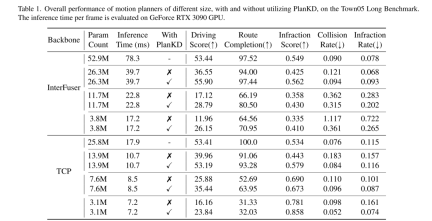

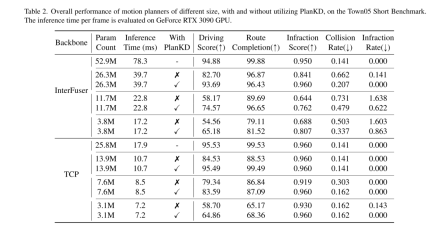

End-to-end motion planning, which directly maps raw sensor data to planned actions, has recently emerged as a promising direction in autonomous driving [3, 10, 30, 31, 40, 47, 48]. This learning-based paradigm reduces the heavy reliance on hand-crafted rules and mitigates the error accumulation of complex cascaded modules (usually detection, tracking, prediction, and planning) [40, 48]. Despite this success, the bulky deep neural networks in motion planners are hard to deploy in resource-constrained environments, such as autonomous delivery robots that rely on the computing power of edge devices. Even in conventional vehicles, on-board computing resources are often limited [34]. Directly deploying deep and large planners therefore inevitably requires more computing time and resources, which makes it difficult to respond quickly to potential hazards. A straightforward way to alleviate this problem is to reduce the number of network parameters by using a smaller backbone network, but this paper observes that the performance of the end-to-end planning model then drops sharply, as shown in Figure 1. For example, when the number of parameters of InterFuser [33], a typical end-to-end motion planner, is reduced from 52.9M to 26.3M, its driving score drops from 53.44 to 36.55. It is therefore necessary to develop a model compression method suited to end-to-end motion planning.

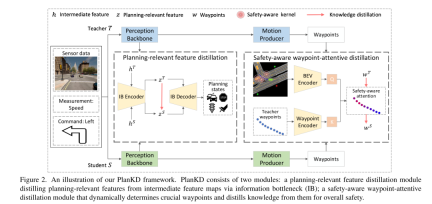

To obtain a portable motion planner, this paper uses knowledge distillation [19] to compress the end-to-end motion planning model. Knowledge distillation (KD) has been widely studied for model compression in various tasks, such as object detection [6, 24] and semantic segmentation [18, 28]. The basic idea of these works is to train a compact student model that inherits knowledge from a larger teacher model, and to use the student model in place of the teacher model during deployment. While these studies have achieved significant success, directly applying them to end-to-end motion planning leads to suboptimal results. This stems from two challenges inherent in motion planning: (i) Driving scenarios are complex in nature [46] and involve diverse information, including multiple dynamic and static objects, complex background scenes, and multifaceted road and traffic information. However, not all of this information is useful for planning. For example, background buildings and distant vehicles are irrelevant or even noisy to planning [41], while nearby vehicles and traffic lights have a decisive impact. It is therefore crucial to automatically extract only planning-relevant information from the teacher model, which previous KD methods cannot do. (ii) Different waypoints in the output planned trajectory usually differ in importance for motion planning. For example, when navigating an intersection, waypoints that are close to other vehicles may matter more than the rest, because at these points the ego-vehicle needs to actively interact with other vehicles and even small deviations can lead to collisions. How to adaptively determine the critical waypoints and imitate them accurately is another significant challenge that previous KD methods do not address.
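Challenge (ii) can be made concrete with a small sketch. The function below is a hypothetical, minimal illustration of an importance-weighted imitation loss between student and teacher waypoints; the name `weighted_waypoint_distill_loss`, the L1 distance, and the hand-picked weights are assumptions for illustration and are not taken from the paper or the PlanKD code.

```python
def weighted_waypoint_distill_loss(student_wps, teacher_wps, weights):
    """Importance-weighted L1 distance between student and teacher waypoints.

    student_wps, teacher_wps: equal-length lists of (x, y) waypoints.
    weights: one non-negative importance weight per waypoint
             (hypothetical values; how they are learned is not shown here).
    """
    if not (len(student_wps) == len(teacher_wps) == len(weights)):
        raise ValueError("all inputs must have the same length")
    total_weight = sum(weights)
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive value")
    loss = 0.0
    for (sx, sy), (tx, ty), w in zip(student_wps, teacher_wps, weights):
        # A deviation at a heavily weighted waypoint costs more.
        loss += w * (abs(sx - tx) + abs(sy - ty))
    return loss / total_weight


# The student deviates only at the last waypoint.
teacher = [(0.0, 1.0), (0.0, 2.0), (0.5, 3.0)]
student = [(0.0, 1.0), (0.0, 2.0), (1.0, 3.0)]

uniform = weighted_waypoint_distill_loss(student, teacher, [1.0, 1.0, 1.0])
focused = weighted_waypoint_distill_loss(student, teacher, [0.2, 0.2, 0.6])
print(uniform, focused)
```

With uniform weights the single deviation is averaged away, while concentrating weight on the deviating waypoint makes the same error more costly, which is the behaviour an importance-aware distillation loss is meant to have.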

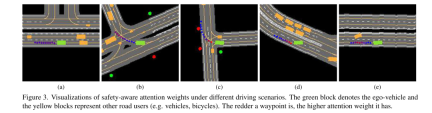

To address these two challenges, this paper proposes PlanKD, the first knowledge distillation method tailored for compressing end-to-end motion planners in autonomous driving. First, the paper proposes a strategy based on the information bottleneck principle [2], whose goal is to extract planning-relevant features that contain minimal yet sufficient planning information. Specifically, it maximizes the mutual information between the extracted planning-relevant features and the ground truth of the planning states defined in the paper, while minimizing the mutual information between the extracted features and the intermediate feature maps. This strategy extracts only the key planning-relevant information at the intermediate layer, which makes distillation to the student model more effective. Second, to dynamically identify critical waypoints and imitate them faithfully, the paper adopts an attention mechanism [38] to compute the attention weight between each waypoint and its associated context in the bird's-eye view (BEV). To promote accurate imitation of safety-critical waypoints during distillation, the paper designs a safety-aware ranking loss that encourages higher attention weights for waypoints close to moving obstacles. As a result, the safety of the student planner can be significantly enhanced. As Figure 1 shows, PlanKD significantly improves the driving score of student planners. Furthermore, the method can reduce inference time by about 50% while maintaining performance comparable to the teacher planner on the Town05 Long Benchmark.
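The information bottleneck strategy described above can be written in the standard form of the principle. This is a sketch under assumed notation, not the paper's exact objective: $F$ denotes the teacher's intermediate feature map, $Z$ the extracted planning-relevant features, $Y$ the ground-truth planning states, and $\beta > 0$ a trade-off coefficient that the text does not specify.

$$
\max_{Z} \; I(Z; Y) \;-\; \beta \, I(Z; F)
$$

The first term keeps $Z$ sufficient for planning, and the second term compresses away the parts of $F$ that carry no planning information.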

Figure 1. Performance degradation of InterFuser [33] on the Town05 Long Benchmark [31] as the number of parameters decreases. With PlanKD, the performance of compact motion planners improves and inference time is significantly reduced. Inference times are evaluated on a server with a GeForce RTX 3090 GPU.

Figure 2. Schematic diagram of the PlanKD framework. PlanKD consists of two modules: a planning-relevant feature distillation module that extracts planning-relevant features from intermediate feature maps through the information bottleneck (IB), and a safety-aware waypoint-attentive distillation module that dynamically determines critical waypoints and distills knowledge from them to enhance overall safety.
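The text states only that the safety-aware ranking loss encourages higher attention weights for waypoints close to moving obstacles. One common way to express such a preference is a pairwise hinge ranking loss, sketched below. The function name `safety_ranking_loss`, the `margin` value, and the use of obstacle distance as the ranking signal are illustrative assumptions, not the paper's formulation.

```python
def safety_ranking_loss(attn_weights, obstacle_dists, margin=0.05):
    """Pairwise hinge ranking loss over waypoint attention weights.

    attn_weights: attention weight of each waypoint (hypothetical values).
    obstacle_dists: distance from each waypoint to its nearest moving obstacle.
    For every pair (i, j) where waypoint i is closer to an obstacle than
    waypoint j, a penalty applies unless attn[i] exceeds attn[j] by `margin`.
    """
    n = len(attn_weights)
    if n != len(obstacle_dists):
        raise ValueError("inputs must have the same length")
    loss, pairs = 0.0, 0
    for i in range(n):
        for j in range(n):
            if obstacle_dists[i] < obstacle_dists[j]:
                gap = attn_weights[i] - attn_weights[j]
                loss += max(0.0, margin - gap)
                pairs += 1
    return loss / pairs if pairs else 0.0


dists = [8.0, 2.0, 5.0]          # the second waypoint is nearest to an obstacle
well_ranked = [0.1, 0.6, 0.3]    # attention follows proximity
badly_ranked = [0.6, 0.1, 0.3]   # attention ignores proximity

good = safety_ranking_loss(well_ranked, dists)
bad = safety_ranking_loss(badly_ranked, dists)
print(good, bad)
```

The loss is zero when attention already ranks waypoints by proximity to obstacles and grows as the ranking is violated, so minimizing it pushes attention toward the safety-critical waypoints.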

Experimental results:

Figure 3. Visualization of safety-aware attention weights in different driving scenarios. The green blocks represent the ego-vehicle and the yellow blocks represent other road users (e.g. cars, bicycles). The redder a waypoint is, the higher its attention weight.

Summary:

This paper proposes PlanKD, a knowledge distillation method tailored for compressing end-to-end motion planners. The method learns planning-relevant features through the information bottleneck to achieve effective feature distillation. In addition, the paper designs a safety-aware waypoint-attentive distillation mechanism that adaptively decides the importance of each waypoint for waypoint distillation. Extensive experiments validate the effectiveness of the approach, demonstrating that PlanKD can serve as a portable and safe solution for resource-limited deployment.

Citation:

Feng K, Li C, Ren D, et al. On the Road to Portability: Compressing End-to-End Motion Planner for Autonomous Driving[J]. arXiv preprint arXiv:2403.01238, 2024.

The above is the detailed content of Further accelerating implementation: compressing the end-to-end motion planning model of autonomous driving. For more information, please follow other related articles on the PHP Chinese website!

从VAE到扩散模型:一文解读以文生图新范式Apr 08, 2023 pm 08:41 PM

从VAE到扩散模型:一文解读以文生图新范式Apr 08, 2023 pm 08:41 PM1 前言在发布DALL·E的15个月后,OpenAI在今年春天带了续作DALL·E 2,以其更加惊艳的效果和丰富的可玩性迅速占领了各大AI社区的头条。近年来,随着生成对抗网络(GAN)、变分自编码器(VAE)、扩散模型(Diffusion models)的出现,深度学习已向世人展现其强大的图像生成能力;加上GPT-3、BERT等NLP模型的成功,人类正逐步打破文本和图像的信息界限。在DALL·E 2中,只需输入简单的文本(prompt),它就可以生成多张1024*1024的高清图像。这些图像甚至

找不到中文语音预训练模型?中文版 Wav2vec 2.0和HuBERT来了Apr 08, 2023 pm 06:21 PM

找不到中文语音预训练模型?中文版 Wav2vec 2.0和HuBERT来了Apr 08, 2023 pm 06:21 PMWav2vec 2.0 [1],HuBERT [2] 和 WavLM [3] 等语音预训练模型,通过在多达上万小时的无标注语音数据(如 Libri-light )上的自监督学习,显著提升了自动语音识别(Automatic Speech Recognition, ASR),语音合成(Text-to-speech, TTS)和语音转换(Voice Conversation,VC)等语音下游任务的性能。然而这些模型都没有公开的中文版本,不便于应用在中文语音研究场景。 WenetSpeech [4] 是

普林斯顿陈丹琦:如何让「大模型」变小Apr 08, 2023 pm 04:01 PM

普林斯顿陈丹琦:如何让「大模型」变小Apr 08, 2023 pm 04:01 PM“Making large models smaller”这是很多语言模型研究人员的学术追求,针对大模型昂贵的环境和训练成本,陈丹琦在智源大会青源学术年会上做了题为“Making large models smaller”的特邀报告。报告中重点提及了基于记忆增强的TRIME算法和基于粗细粒度联合剪枝和逐层蒸馏的CofiPruning算法。前者能够在不改变模型结构的基础上兼顾语言模型困惑度和检索速度方面的优势;而后者可以在保证下游任务准确度的同时实现更快的处理速度,具有更小的模型结构。陈丹琦 普

解锁CNN和Transformer正确结合方法,字节跳动提出有效的下一代视觉TransformerApr 09, 2023 pm 02:01 PM

解锁CNN和Transformer正确结合方法,字节跳动提出有效的下一代视觉TransformerApr 09, 2023 pm 02:01 PM由于复杂的注意力机制和模型设计,大多数现有的视觉 Transformer(ViT)在现实的工业部署场景中不能像卷积神经网络(CNN)那样高效地执行。这就带来了一个问题:视觉神经网络能否像 CNN 一样快速推断并像 ViT 一样强大?近期一些工作试图设计 CNN-Transformer 混合架构来解决这个问题,但这些工作的整体性能远不能令人满意。基于此,来自字节跳动的研究者提出了一种能在现实工业场景中有效部署的下一代视觉 Transformer——Next-ViT。从延迟 / 准确性权衡的角度看,

Stable Diffusion XL 现已推出—有什么新功能,你知道吗?Apr 07, 2023 pm 11:21 PM

Stable Diffusion XL 现已推出—有什么新功能,你知道吗?Apr 07, 2023 pm 11:21 PM3月27号,Stability AI的创始人兼首席执行官Emad Mostaque在一条推文中宣布,Stable Diffusion XL 现已可用于公开测试。以下是一些事项:“XL”不是这个新的AI模型的官方名称。一旦发布稳定性AI公司的官方公告,名称将会更改。与先前版本相比,图像质量有所提高与先前版本相比,图像生成速度大大加快。示例图像让我们看看新旧AI模型在结果上的差异。Prompt: Luxury sports car with aerodynamic curves, shot in a

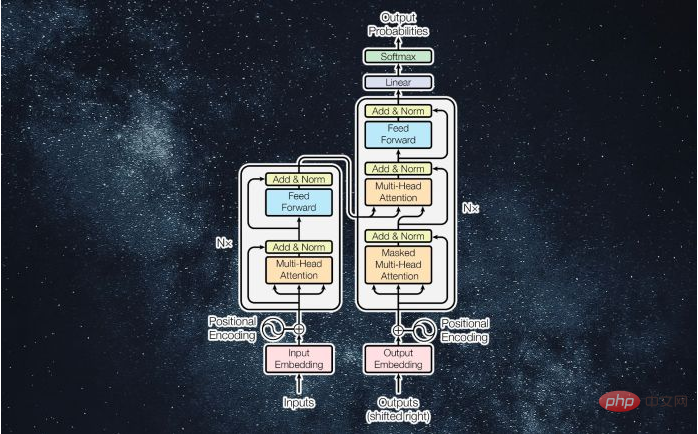

什么是Transformer机器学习模型?Apr 08, 2023 pm 06:31 PM

什么是Transformer机器学习模型?Apr 08, 2023 pm 06:31 PM译者 | 李睿审校 | 孙淑娟近年来, Transformer 机器学习模型已经成为深度学习和深度神经网络技术进步的主要亮点之一。它主要用于自然语言处理中的高级应用。谷歌正在使用它来增强其搜索引擎结果。OpenAI 使用 Transformer 创建了著名的 GPT-2和 GPT-3模型。自从2017年首次亮相以来,Transformer 架构不断发展并扩展到多种不同的变体,从语言任务扩展到其他领域。它们已被用于时间序列预测。它们是 DeepMind 的蛋白质结构预测模型 AlphaFold

五年后AI所需算力超100万倍!十二家机构联合发表88页长文:「智能计算」是解药Apr 09, 2023 pm 07:01 PM

五年后AI所需算力超100万倍!十二家机构联合发表88页长文:「智能计算」是解药Apr 09, 2023 pm 07:01 PM人工智能就是一个「拼财力」的行业,如果没有高性能计算设备,别说开发基础模型,就连微调模型都做不到。但如果只靠拼硬件,单靠当前计算性能的发展速度,迟早有一天无法满足日益膨胀的需求,所以还需要配套的软件来协调统筹计算能力,这时候就需要用到「智能计算」技术。最近,来自之江实验室、中国工程院、国防科技大学、浙江大学等多达十二个国内外研究机构共同发表了一篇论文,首次对智能计算领域进行了全面的调研,涵盖了理论基础、智能与计算的技术融合、重要应用、挑战和未来前景。论文链接:https://spj.scien

AI模型告诉你,为啥巴西最可能在今年夺冠!曾精准预测前两届冠军Apr 09, 2023 pm 01:51 PM

AI模型告诉你,为啥巴西最可能在今年夺冠!曾精准预测前两届冠军Apr 09, 2023 pm 01:51 PM说起2010年南非世界杯的最大网红,一定非「章鱼保罗」莫属!这只位于德国海洋生物中心的神奇章鱼,不仅成功预测了德国队全部七场比赛的结果,还顺利地选出了最终的总冠军西班牙队。不幸的是,保罗已经永远地离开了我们,但它的「遗产」却在人们预测足球比赛结果的尝试中持续存在。在艾伦图灵研究所(The Alan Turing Institute),随着2022年卡塔尔世界杯的持续进行,三位研究员Nick Barlow、Jack Roberts和Ryan Chan决定用一种AI算法预测今年的冠军归属。预测模型图

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

SublimeText3 Chinese version

Chinese version, very easy to use

WebStorm Mac version

Useful JavaScript development tools

Zend Studio 13.0.1

Powerful PHP integrated development environment

SublimeText3 Linux new version

SublimeText3 Linux latest version

Safe Exam Browser

Safe Exam Browser is a secure browser environment for taking online exams securely. This software turns any computer into a secure workstation. It controls access to any utility and prevents students from using unauthorized resources.