DeepMind CEO: LLM + tree search is the technical route to AGI; AI research relies on engineering capability; closed-source models are safer than open-source models

Google suddenly switched to "996" mode after February, launching five models in less than a month.

DeepMind CEO Demis Hassabis has himself been promoting the company's products everywhere, and in the process has revealed a good deal of behind-the-scenes detail about their development.

In his view, although further technological breakthroughs are still needed, the road to AGI has now come into view.

The merger of DeepMind and Google Brain marks a new era in the development of AI technology.

Q: DeepMind has always been at the forefront of technology. In a system like AlphaZero, for example, the agent reaches its final goal through a sequence of reasoning steps. Does this mean large language models (LLMs) can also become part of this line of research?

Hassabis believes that large models have huge potential and need to be further optimized to improve their prediction accuracy, and thereby become more reliable models of the world. This step is crucial, but it may not be enough on its own to build a complete artificial general intelligence (AGI) system.

On top of this, we are developing planning mechanisms similar to AlphaZero's, which use the world model to formulate plans for achieving specific goals in the world.

This includes stringing together different chains of thought or reasoning, or using tree search to explore a vast space of possibilities.

These are the missing pieces in today's large models.
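To make the idea of searching over chains of reasoning concrete, here is a minimal Python sketch of beam search, one simple form of tree search. It is a toy under stated assumptions, not DeepMind's method: the problem (reach 11 from 1 using "+3" and "*2"), the `propose` function standing in for a model that suggests next steps, and the `score` function standing in for a model's value estimate are all hypothetical.

```python
from typing import List, Optional, Tuple

Chain = Tuple[str, ...]  # a chain of reasoning steps, here just operation names

START, TARGET = 1, 11  # hypothetical toy problem


def evaluate(chain: Chain) -> int:
    """Apply each step in order to START and return the resulting number."""
    value = START
    for step in chain:
        value = value + 3 if step == "+3" else value * 2
    return value


def propose(chain: Chain) -> List[str]:
    """Stand-in for a model proposing candidate next steps (assumption)."""
    return ["+3", "*2"]


def score(chain: Chain) -> float:
    """Stand-in for a model's value estimate: closer to TARGET is better."""
    return -abs(TARGET - evaluate(chain))


def beam_search(beam_width: int = 2, max_depth: int = 5) -> Optional[Chain]:
    """Grow chains level by level, keeping only the best-scoring few."""
    beam: List[Chain] = [()]
    for _ in range(max_depth):
        # Extend every surviving chain by every proposed step.
        candidates = [chain + (step,) for chain in beam for step in propose(chain)]
        candidates.sort(key=score, reverse=True)
        beam = candidates[:beam_width]
        for chain in beam:
            if evaluate(chain) == TARGET:
                return chain
    return None


solution = beam_search()
print(solution, evaluate(solution))
```

The search keeps only two chains alive at each depth, so the quality of the scoring function decides whether the right branch survives.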

Q: Is it possible to reach AGI directly from a pure reinforcement learning (RL) approach?

It seems that large language models will form the basis of prior knowledge, with further research then built on top of that.

Theoretically, it is possible to rely entirely on an AlphaZero-style approach.

Some people at DeepMind and in the RL community are working in this direction. They start from scratch, relying on no prior knowledge or data, and build up a new body of knowledge entirely on their own.

I believe that leveraging existing world knowledge, such as information on the web and the data we have already collected, will be the fastest way to achieve AGI.

We now have scalable algorithms that can absorb this information: Transformers. We can use these existing models as prior knowledge for prediction and learning.

Therefore, I believe that the final AGI system will definitely include today's large models as part of the solution.

But a large model alone is not enough; we also need to add more planning and search capabilities on top of it.

Q: These methods require huge computing resources. How can we get past that?

Even a system like AlphaGo is quite expensive to run, because a computation has to be performed at each node of the decision tree.

We are committed to developing sample-efficient methods and strategies for reusing existing data, such as experience replay, and to exploring more efficient approaches in general.
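Experience replay, mentioned above, reuses past interactions instead of discarding them after one update. The following is a minimal sketch of a replay buffer in Python; the class name, the transition layout, and the capacity are illustrative assumptions rather than anything taken from a DeepMind system.

```python
import random
from collections import deque
from typing import Deque, List, Tuple

# (state, action, reward, next_state, done); a hypothetical layout
Transition = Tuple[int, int, float, int, bool]


class ReplayBuffer:
    """Fixed-size store of past transitions that can be sampled repeatedly."""

    def __init__(self, capacity: int, seed: int = 0) -> None:
        # A bounded deque silently drops the oldest item once it is full.
        self._buffer: Deque[Transition] = deque(maxlen=capacity)
        self._rng = random.Random(seed)

    def add(self, transition: Transition) -> None:
        self._buffer.append(transition)

    def sample(self, batch_size: int) -> List[Transition]:
        """Draw a random batch without replacement, capped at the buffer size."""
        k = min(batch_size, len(self._buffer))
        return self._rng.sample(list(self._buffer), k)

    def __len__(self) -> int:
        return len(self._buffer)


buffer = ReplayBuffer(capacity=3)
for step in range(5):
    buffer.add((step, 0, 1.0, step + 1, False))

batch = buffer.sample(2)  # each stored transition can be drawn many times
print(len(buffer), batch)
```

Because sampling does not remove items, the same transition can contribute to many learning updates, which is where the sample efficiency comes from.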

In fact, if the world model is good enough, your search can be more efficient.

Take AlphaZero as an example. Its performance in games such as Go and chess exceeds world-champion level, yet it searches far fewer positions than traditional brute-force methods.

This shows that improving the model makes search more efficient, and so lets it reach more distant goals.
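The effect of a better model on search cost can be illustrated with a toy Python sketch. It compares a blind search with a guided one on a hypothetical puzzle (reach 100 from 1 using "+3" and "*2") and counts how many nodes each expands. The `informed` heuristic is only a stand-in for a learned model, and greedy best-first search is used here for simplicity; it is not the search AlphaZero uses.

```python
import heapq
from itertools import count
from typing import Callable, Optional

START, TARGET = 1, 100  # hypothetical toy problem


def nodes_expanded(heuristic: Callable[[int], int], limit: int = 10_000) -> Optional[int]:
    """Best-first search; returns how many nodes were expanded before TARGET."""
    tie = count()  # insertion order breaks ties between equal priorities
    frontier = [(heuristic(START), next(tie), START)]
    seen = {START}
    expanded = 0
    while frontier and expanded < limit:
        _, _, value = heapq.heappop(frontier)
        if value == TARGET:
            return expanded
        expanded += 1
        for nxt in (value + 3, value * 2):
            # Both moves only increase the value, so overshoots are dead ends.
            if nxt <= TARGET and nxt not in seen:
                seen.add(nxt)
                heapq.heappush(frontier, (heuristic(nxt), next(tie), nxt))
    return None


def blind(value: int) -> int:
    """No guidance: every node looks equally good, so this is breadth-first."""
    return 0


def informed(value: int) -> int:
    """Stand-in for a good model: prefer values closer to TARGET."""
    return TARGET - value


blind_cost = nodes_expanded(blind)
informed_cost = nodes_expanded(informed)
print(blind_cost, informed_cost)
```

With the same rules and the same goal, the guided search expands fewer nodes than the blind one, which is the point being made about model quality and search efficiency.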

But how to define the reward function and the goal so that the system develops in the right direction will be one of the challenges we face.

Why can Google produce 5 models in half a month?

Q: Can you talk about why Google and DeepMind are working on so many different models at the same time?

Because we have always done basic research, we have a large body of foundational work covering many different innovations and directions.

This means that while we build the main model track, the core Gemini models, many more exploratory projects are under way at the same time.

When these exploratory projects produce results, we merge them into the main branch for the next version of Gemini. That is why you saw 1.5 released so soon after 1.0: we were already working on the next version, and we have multiple teams working on different timescales, cycling between one another. That is how we keep making progress.

I hope this will become our new normal: shipping at this high speed while also being very responsible, keeping in mind that releasing safe models is our number one priority.

Q: I wanted to ask about your most recent big release, Gemini 1.5 Pro. The new model can handle up to one million tokens. Can you explain what this means and why the context window is an important technical metric?

Yes, this is very important. The long context can be thought of as the working memory of the model, i.e. how much data it can remember and process at one time.

The longer the context, the more data and context you can take into account. Accuracy matters just as much: the model has to recall things accurately from that long context.

So a million tokens means you can handle huge books, full movies, large amounts of audio content, or entire code bases.

If you have a shorter context window, say only on the order of a hundred thousand tokens, then you can only process fragments, and the model cannot reason about or search over the entire corpus you are interested in.

So this opens up all kinds of new use cases that cannot be done with a small context.
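The difference between the two window sizes can be made concrete with a little arithmetic. The Python sketch below assumes a hypothetical corpus of 700,000 tokens (the figure is illustrative, not from the interview) and shows how many separate pieces it must be cut into for each window size.

```python
from typing import List, Sequence


def split_into_windows(tokens: Sequence[int], window: int) -> List[Sequence[int]]:
    """Cut a token sequence into consecutive pieces of at most `window` tokens."""
    if window <= 0:
        raise ValueError("window must be positive")
    return [tokens[i:i + window] for i in range(0, len(tokens), window)]


# Hypothetical corpus: token ids are just placeholders for real tokens.
corpus = range(700_000)

small = split_into_windows(corpus, 100_000)    # a hundred-thousand-token window
large = split_into_windows(corpus, 1_000_000)  # a one-million-token window

print(len(small), len(large))
```

With the smaller window the model only ever sees one fragment at a time, so any question that depends on material in two different fragments cannot be answered in a single pass.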

Q: I've heard from AI researchers that the problem with these large context windows is that they are very computationally intensive. For example, if you uploaded an entire movie or a biology textbook and asked questions about it, it would require more processing power to process all of that and respond. If a lot of people do this, the costs can add up quickly. Did Google DeepMind come up with some clever innovation to make these huge context windows more efficient, or did Google just bear the cost of all this extra computation?

Yes, this is a completely new innovation, because without innovation you cannot have such a long context.

But this still requires a high computational cost, so we are working hard to optimize it.

If you fill up the entire context window, initial processing of the uploaded data may take several minutes.

But that’s not too bad if you consider that it’s like watching an entire movie or reading the entire War and Peace in a minute or two, and then you can answer any questions about it.

Then what we want to make sure is that once you upload and process a document, video, or audio, subsequent questions and answers should be faster.

That's what we're working on right now and we're very confident that we can get it down to a matter of seconds.

Q: You said you have tested the system with up to 10 million tokens. What is the effect?

It worked very well in our tests. Because the computing cost is still relatively high, it is not currently available as a service.

But in terms of accuracy and recall, it performs very well.

Q: I want to ask you about Gemini. What special things can Gemini do that previous Google language models or other models couldn't do?

Well, I think what's exciting about Gemini, especially version 1.5, is that it's inherently multimodal: we built it from the ground up to handle any type of input, whether text, image, code, or video.

If you combine that with long context, you can see its potential. For example, you can imagine listening to an entire lecture, where there is one important concept you want to understand and you want to fast forward straight to it.

So now we can put the entire code base into a context window, which is very useful for new programmers getting started. Let's say you're a new engineer starting work on Monday. Typically you have hundreds of thousands of lines of code to look at. How do you access a function?

Normally you would need to ask the experts on the code base. But now you can actually use Gemini as a coding assistant, in this fun way. It will return a summary telling you where the important parts of the code are, and you can start working.

I think having this ability is very helpful and makes your daily workflow more efficient.

I'm really looking forward to seeing how Gemini performs when integrated into something like Slack and your general workflow. What will the workflow of the future look like? I think we're just starting to experience the changes.

Google’s top priority for open source is security

Q: I'd like to turn now to Gemma, a series of lightweight open-source models you just released. Whether to release foundation models as open source or keep them closed seems to be one of the most controversial topics today. Until now, Google has kept its foundation models closed. Why choose open source now? And what do you make of the criticism that open-sourcing foundation models increases the risk and likelihood that malicious actors will use them?

Yes, I have actually discussed this issue publicly many times.

To start with, open source and open research in general are clearly beneficial. But there is a specific problem here related to AGI and AI technologies, because they are general-purpose.

Once you publish them, malicious actors can use them for harmful purposes.

Of course, once you open source something, you have no real way to take it back. That is unlike API access, where if you discover a harmful downstream use case that no one had considered before, you can simply cut off access.

I think this means the bar for security, robustness and accountability is even higher. As we get closer to AGI, these systems will have more powerful capabilities, so we have to be more careful about what malicious actors might use them for.

I have yet to hear a good argument from those who support open source, including the open-source extremists, many of whom are colleagues in academia whom I respect: how do they answer this question of guarding against open-source models giving more malicious actors access?

We need to think more about these issues as these systems become more powerful.

Q: So why didn't this concern apply to Gemma?

Yes, of course. As you will notice, Gemma only comes in lightweight versions, so the models are relatively small.

Actually, the smaller size is more useful for developers, because individual developers, academics, and small teams usually want to work quickly on their laptops, so the models are optimized for that.

Because they are not cutting-edge models but small ones, we feel reassured: their capabilities have been rigorously tested, we know very well what they can do, and a model of this size poses no big risks.

Why DeepMind merged with Google Brain

Q: Last year, when Google Brain and DeepMind merged, some people I know in the AI industry were worried. Google had historically given DeepMind considerable latitude to work on whatever research projects it deemed important.

With the merger, they feared, DeepMind might be redirected toward things that benefit Google in the short term, rather than these larger, long-term basic research projects. It has been a year since the merger. Has this tension between Google's short-term interests and possible long-term AI advances changed what you can work on?

Yes, this first year you mentioned has gone very well. One reason is that we think this was the right time, and I think it was the right time from a researcher's perspective.

Go back five or six years, to when we were doing things like AlphaGo. The AI field was still doing exploratory research on how to reach AGI: what breakthroughs were needed, what bets should be made. In that situation you want to pursue a broad set of things, so I think that was a very exploratory stage.

I think over the last two or three years it has become clear what the main components of AGI will be, as I mentioned before, although we still need new innovations.

I think you just saw one with the long context of Gemini 1.5, and I think many more new innovations like that are going to be required, so basic research remains as important as ever.

But now we also need to work on the engineering side, which means scaling up known techniques and pushing them to their limits. That requires very creative engineering at scale, from prototypes to the hardware level to data-center scale, along with the efficiency issues involved.

Another reason is that if you were building AI-driven products five or six years ago, you had to build an AI that was completely different from the AGI research track.

It could only perform tasks in special scenarios for a specific product. It was a kind of bespoke, "hand-made" AI.

But the situation is different today. The best way to do AI for products now is to use general AI technologies and systems, because they have reached a sufficient level of sophistication and capability.

So this is actually a point of convergence: you can now see that the research track and the product track have merged.

For example, if we now want to build an AI voice assistant, what it needs is a chatbot that truly understands language. The two are now integrated, so there is no longer any dichotomy to consider or tension to manage.

A further reason is that having a tight feedback loop between research and real-world application is actually very beneficial to research.

Products let you really understand how your model performs. You can have academic metrics, but the real test is when millions of users use your product: do they find it useful, do they find it helpful, do they find it beneficial to the world?

You're obviously going to get a lot of feedback, and that will then lead to very rapid improvements to the underlying model, so I think we're in this very, very exciting stage right now.

The above is the detailed content of DeepMind CEO: LLM+tree search is the AGI technology line. AI research relies on engineering capabilities. Closed-source models are safer than open-source models.. For more information, please follow other related articles on the PHP Chinese website!

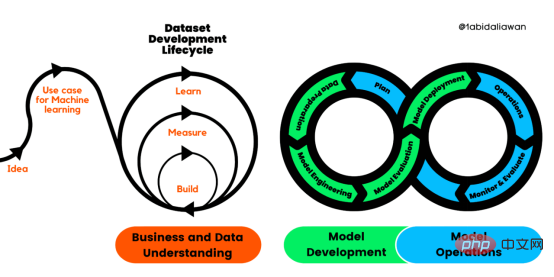

解读CRISP-ML(Q):机器学习生命周期流程Apr 08, 2023 pm 01:21 PM

解读CRISP-ML(Q):机器学习生命周期流程Apr 08, 2023 pm 01:21 PM译者 | 布加迪审校 | 孙淑娟目前,没有用于构建和管理机器学习(ML)应用程序的标准实践。机器学习项目组织得不好,缺乏可重复性,而且从长远来看容易彻底失败。因此,我们需要一套流程来帮助自己在整个机器学习生命周期中保持质量、可持续性、稳健性和成本管理。图1. 机器学习开发生命周期流程使用质量保证方法开发机器学习应用程序的跨行业标准流程(CRISP-ML(Q))是CRISP-DM的升级版,以确保机器学习产品的质量。CRISP-ML(Q)有六个单独的阶段:1. 业务和数据理解2. 数据准备3. 模型

人工智能的环境成本和承诺Apr 08, 2023 pm 04:31 PM

人工智能的环境成本和承诺Apr 08, 2023 pm 04:31 PM人工智能(AI)在流行文化和政治分析中经常以两种极端的形式出现。它要么代表着人类智慧与科技实力相结合的未来主义乌托邦的关键,要么是迈向反乌托邦式机器崛起的第一步。学者、企业家、甚至活动家在应用人工智能应对气候变化时都采用了同样的二元思维。科技行业对人工智能在创建一个新的技术乌托邦中所扮演的角色的单一关注,掩盖了人工智能可能加剧环境退化的方式,通常是直接伤害边缘人群的方式。为了在应对气候变化的过程中充分利用人工智能技术,同时承认其大量消耗能源,引领人工智能潮流的科技公司需要探索人工智能对环境影响的

找不到中文语音预训练模型?中文版 Wav2vec 2.0和HuBERT来了Apr 08, 2023 pm 06:21 PM

找不到中文语音预训练模型?中文版 Wav2vec 2.0和HuBERT来了Apr 08, 2023 pm 06:21 PMWav2vec 2.0 [1],HuBERT [2] 和 WavLM [3] 等语音预训练模型,通过在多达上万小时的无标注语音数据(如 Libri-light )上的自监督学习,显著提升了自动语音识别(Automatic Speech Recognition, ASR),语音合成(Text-to-speech, TTS)和语音转换(Voice Conversation,VC)等语音下游任务的性能。然而这些模型都没有公开的中文版本,不便于应用在中文语音研究场景。 WenetSpeech [4] 是

条形统计图用什么呈现数据Jan 20, 2021 pm 03:31 PM

条形统计图用什么呈现数据Jan 20, 2021 pm 03:31 PM条形统计图用“直条”呈现数据。条形统计图是用一个单位长度表示一定的数量,根据数量的多少画成长短不同的直条,然后把这些直条按一定的顺序排列起来;从条形统计图中很容易看出各种数量的多少。条形统计图分为:单式条形统计图和复式条形统计图,前者只表示1个项目的数据,后者可以同时表示多个项目的数据。

自动驾驶车道线检测分类的虚拟-真实域适应方法Apr 08, 2023 pm 02:31 PM

自动驾驶车道线检测分类的虚拟-真实域适应方法Apr 08, 2023 pm 02:31 PMarXiv论文“Sim-to-Real Domain Adaptation for Lane Detection and Classification in Autonomous Driving“,2022年5月,加拿大滑铁卢大学的工作。虽然自主驾驶的监督检测和分类框架需要大型标注数据集,但光照真实模拟环境生成的合成数据推动的无监督域适应(UDA,Unsupervised Domain Adaptation)方法则是低成本、耗时更少的解决方案。本文提出对抗性鉴别和生成(adversarial d

数据通信中的信道传输速率单位是bps,它表示什么Jan 18, 2021 pm 02:58 PM

数据通信中的信道传输速率单位是bps,它表示什么Jan 18, 2021 pm 02:58 PM数据通信中的信道传输速率单位是bps,它表示“位/秒”或“比特/秒”,即数据传输速率在数值上等于每秒钟传输构成数据代码的二进制比特数,也称“比特率”。比特率表示单位时间内传送比特的数目,用于衡量数字信息的传送速度;根据每帧图像存储时所占的比特数和传输比特率,可以计算数字图像信息传输的速度。

数据分析方法有哪几种Dec 15, 2020 am 09:48 AM

数据分析方法有哪几种Dec 15, 2020 am 09:48 AM数据分析方法有4种,分别是:1、趋势分析,趋势分析一般用于核心指标的长期跟踪;2、象限分析,可依据数据的不同,将各个比较主体划分到四个象限中;3、对比分析,分为横向对比和纵向对比;4、交叉分析,主要作用就是从多个维度细分数据。

聊一聊Python 实现数据的序列化操作Apr 12, 2023 am 09:31 AM

聊一聊Python 实现数据的序列化操作Apr 12, 2023 am 09:31 AM在日常开发中,对数据进行序列化和反序列化是常见的数据操作,Python提供了两个模块方便开发者实现数据的序列化操作,即 json 模块和 pickle 模块。这两个模块主要区别如下:json 是一个文本序列化格式,而 pickle 是一个二进制序列化格式;json 是我们可以直观阅读的,而 pickle 不可以;json 是可互操作的,在 Python 系统之外广泛使用,而 pickle 则是 Python 专用的;默认情况下,json 只能表示 Python 内置类型的子集,不能表示自定义的

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

WebStorm Mac version

Useful JavaScript development tools

SAP NetWeaver Server Adapter for Eclipse

Integrate Eclipse with SAP NetWeaver application server.

MantisBT

Mantis is an easy-to-deploy web-based defect tracking tool designed to aid in product defect tracking. It requires PHP, MySQL and a web server. Check out our demo and hosting services.

SublimeText3 Chinese version

Chinese version, very easy to use

Dreamweaver Mac version

Visual web development tools