A few lines of code stabilize UNet! Sun Yat-sen University and others propose the ScaleLong diffusion model: from questioning Scaling to becoming Scaling

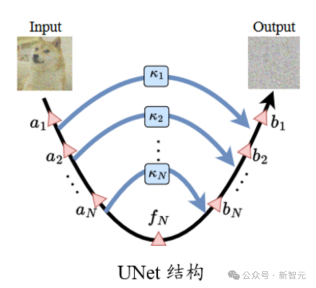

In the standard UNet architecture, the scaling coefficient κ on each long skip connection is generally set to 1.

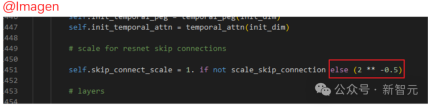

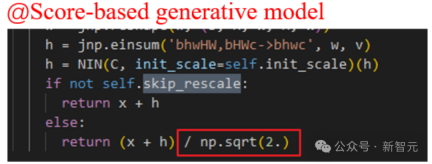

However, several well-known diffusion models, such as Imagen, score-based generative models, and SR3, set κ = 1/√2 and found that this setting effectively accelerates the training of the diffusion model.
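To make the setting concrete, here is a minimal sketch of where the coefficient enters. It assumes the skip feature is merged into the decoder by channel-wise concatenation and models features as plain Python lists; `merge_long_skip` is a hypothetical helper, not code taken from Imagen, SR3, or the score-based models.

```python
import math


def merge_long_skip(decoder_feat, skip_feat, kappa=1.0):
    """Scale the encoder feature carried by a long skip connection by kappa,
    then concatenate it with the decoder feature (channel-wise concat).

    Features are plain lists of per-channel values, purely for illustration.
    """
    scaled_skip = [kappa * s for s in skip_feat]
    return decoder_feat + scaled_skip  # list concatenation stands in for channel concat


decoder_feat = [0.5, -0.2]
skip_feat = [2.0, 4.0]

# Standard UNet: kappa = 1, so the skip feature passes through unchanged.
standard = merge_long_skip(decoder_feat, skip_feat, kappa=1.0)
# Imagen / SR3 / score-based-model style: kappa = 1/sqrt(2).
rescaled = merge_long_skip(decoder_feat, skip_feat, kappa=1 / math.sqrt(2))

print(standard)  # [0.5, -0.2, 2.0, 4.0]
print(rescaled)
```

With an additive merge (decoder feature plus κ times the skip feature) the coefficient enters in the same way.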

However, Imagen and these other works say little about the skip connections. Their original papers contain no specific analysis of the scaling operation and only state that the setting helps speed up the training of the diffusion model.

First of all, such purely empirical evidence leaves it unclear what role this setting actually plays.

Second, we do not know whether κ must be set to 1/√2, or whether other constants could be used.

Do skip connections at different positions play the same role? Why should they all use the same constant?

The author has a lot of questions about this...

Generally speaking, compared with ResNet and Transformer architectures, UNet is not very "deep" in practice, so it is less prone to the optimization problems, such as vanishing gradients, that are common in other "deep" neural networks.

Moreover, because of UNet's particular structure, shallow features are connected to deep layers through long skip connections, which further helps avoid problems such as vanishing gradients.

Conversely, if this structure is not handled carefully, could it cause problems such as excessively large gradients and parameter (feature) oscillation during updates?

By visualizing the features and parameters of the diffusion model during training, we find that instability does indeed occur.

The instability of the parameters (features) affects the gradients, which in turn affect the parameter updates. This feedback loop carries a considerable risk of degrading performance, so we need a way to control the instability.

Furthermore, in a diffusion model the input to UNet is a noisy image. For the model to predict the added noise accurately, it must be highly robust to additional perturbations of its input.

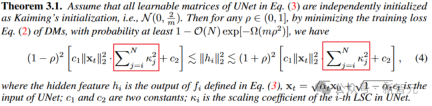

Theorem 3.1 shows that the oscillation range of intermediate-layer features (the width between the upper and lower bounds) is directly related to the sum of the squares of the scaling coefficients. Appropriate scaling coefficients therefore help alleviate feature instability.

Note, however, that setting the scaling coefficients directly to 0 would indeed minimize the oscillation. (Tongue in cheek.)

But if UNet degrades into a network without skip connections, the instability is solved at the cost of its representational capacity. This is a trade-off between model stability and representational capability.
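As a quick arithmetic illustration of the quantity Theorem 3.1 refers to, the sketch below compares the sum of squared coefficients for a few uniform settings. The number of long skips (six) is a hypothetical choice, and the code only computes the sum; it does not reproduce the theorem's actual bound.

```python
import math


def sum_of_squares(coeffs):
    """Sum of squared long-skip scaling coefficients, the quantity that
    Theorem 3.1 (as summarized above) relates to the feature oscillation range."""
    return sum(k * k for k in coeffs)


NUM_SKIPS = 6  # hypothetical number of long skip connections

schedules = {
    "kappa = 1 (standard UNet)": [1.0] * NUM_SKIPS,
    "kappa = 1/sqrt(2)": [1 / math.sqrt(2)] * NUM_SKIPS,
    "kappa = 0 (no skips)": [0.0] * NUM_SKIPS,
}

for name, coeffs in schedules.items():
    print(f"{name:28s} sum of squares = {sum_of_squares(coeffs):.3f}")
```

Halving each squared coefficient (κ = 1/√2) halves the sum, and κ = 0 drives it to zero, which is exactly the degenerate skip-less case described above.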

Similarly, from the perspective of parameter gradients, Theorem 3.3 shows that the scaling coefficients control the magnitude of the gradients.
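The following toy scalar model (entirely hypothetical, and far simpler than the setting of Theorem 3.3) shows the basic mechanism: the gradient that reaches an encoder weight through a long skip connection is multiplied by that skip's scaling coefficient. A central finite difference checks the analytic derivative.

```python
def forward(w, x, v, h, kappa):
    """Toy scalar 'UNet': encoder feature a = w * x travels over a long skip
    scaled by kappa and is added to a deep-path feature h (held fixed here);
    the decoder weight v produces the output y = v * (h + kappa * a)."""
    return v * (h + kappa * w * x)


def skip_path_grad(x, v, kappa):
    """Analytic dy/dw for the toy model above: v * kappa * x, linear in kappa."""
    return v * kappa * x


w, x, v, h, eps = 0.8, 1.5, 2.0, 0.3, 1e-6
for kappa in (1.0, 0.5, 0.0):
    numeric = (forward(w + eps, x, v, h, kappa) - forward(w - eps, x, v, h, kappa)) / (2 * eps)
    print(f"kappa={kappa}: analytic={skip_path_grad(x, v, kappa):.4f}, numeric={numeric:.4f}")
```

Halving κ halves the gradient contribution of the skip path, which is the intuition behind using κ to rein in oversized gradients.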

Further, Theorem 3.4 shows that scaling on the long skip connections also affects the model's robustness to input perturbations: appropriate scaling coefficients tighten the corresponding bounds and thereby improve the diffusion model's stability under input perturbations.
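A similar toy calculation illustrates the robustness argument. It assumes, hypothetically, that an input perturbation of size δ changes the encoder feature at level i by at most gains[i]·δ; the perturbation that the long skips pass on to the decoder is then bounded by a coefficient-weighted sum. The gains and the decaying schedule are made up for illustration, and this is not the bound stated in Theorem 3.4.

```python
def skip_perturbation_bound(kappas, gains, delta):
    """Toy bound on how far an input perturbation of size delta can move the
    features delivered to the decoder by the long skips.

    Assumes (hypothetically) that the encoder feature at level i changes by at
    most gains[i] * delta; skip i then contributes |kappa_i| * gains[i] * delta.
    """
    if len(kappas) != len(gains):
        raise ValueError("need one gain per long skip connection")
    return sum(abs(k) * g * delta for k, g in zip(kappas, gains))


gains = [1.0, 1.2, 1.5, 2.0]  # hypothetical per-level gains, outermost skip first
delta = 0.1

uniform = skip_perturbation_bound([1.0] * 4, gains, delta)
decaying = skip_perturbation_bound([0.5 ** i for i in range(4)], gains, delta)
print(f"kappa_i = 1:          bound = {uniform:.4f}")
print(f"kappa_i = 0.5^(i-1):  bound = {decaying:.4f}")
```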

Becoming Scaling

The analysis above shows how important scaling on long skip connections is for stable model training, and it also applies to the κ = 1/√2 setting.

Next, we analyze which kinds of scaling perform better. After all, the analysis above only shows that scaling helps; it cannot determine which scaling is best, or even which is better.

A simple approach is to add a learnable module to the long skip connections that adjusts the scaling adaptively. We call this the Learnable Scaling (LS) method. We use a SENet-like structure, as shown below (the U-ViT structure considered here is very neatly organized, kudos!).

[Figure: the SENet-like LS module on the long skip connections of U-ViT]
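Below is a plain-Python sketch of an SE-style gate on one long skip connection: squeeze each channel by averaging, excite with FC, ReLU, FC, and sigmoid, then rescale the skip feature. This is one plausible reading of "SENet-like"; the exact LS module in the paper (its input, output granularity, and placement) may differ, the weights here are random and untrained, and `SELikeScaling` is a hypothetical name.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


class SELikeScaling:
    """Hypothetical SE-style learnable scaling for a single long skip connection.

    A feature is a list of channels, each channel a list of spatial/token values.
    Squeeze: average each channel. Excite: FC -> ReLU -> FC -> sigmoid yields one
    gate in (0, 1) per channel, which then rescales that channel of the skip.
    Weights are randomly initialized and untrained in this sketch.
    """

    def __init__(self, channels, reduction=2, seed=0):
        rng = random.Random(seed)
        hidden = max(1, channels // reduction)
        self.w1 = [[rng.gauss(0.0, 0.1) for _ in range(channels)] for _ in range(hidden)]
        self.w2 = [[rng.gauss(0.0, 0.1) for _ in range(hidden)] for _ in range(channels)]

    def gates(self, feature):
        squeezed = [sum(ch) / len(ch) for ch in feature]  # squeeze
        hidden = [max(0.0, sum(w * s for w, s in zip(row, squeezed)))  # FC + ReLU
                  for row in self.w1]
        return [sigmoid(sum(w * h for w, h in zip(row, hidden)))  # FC + sigmoid
                for row in self.w2]

    def __call__(self, feature):
        g = self.gates(feature)
        return [[gi * v for v in ch] for gi, ch in zip(g, feature)]


skip = [[1.0, 2.0, 3.0], [0.5, 0.5, 0.5], [-1.0, 0.0, 1.0], [2.0, 2.0, 2.0]]
ls = SELikeScaling(channels=4)
gates = ls.gates(skip)
scaled = ls(skip)
print([round(g, 3) for g in gates])
```

In a real model the two weight matrices would be trained jointly with the UNet, so the gates, and hence the effective skip scaling, are learned from data.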

The results in the paper show that LS does indeed stabilize the training of the diffusion model effectively. We further visualize the coefficients learned by LS.

As shown in the figure below, these coefficients follow an exponential downward trend (note that the first long skip connection here is the one joining the first and last ends of the UNet), and the first coefficient is close to 1. This phenomenon is quite striking!

[Figure: coefficients learned by LS for each long skip connection]

Based on this series of observations (see the paper for more details), we further propose the Constant Scaling (CS) method, which requires no learnable parameters: κ_i = κ^(i−1), where i = 1 denotes the outermost long skip connection.

Like the original κ = 1/√2 scaling operation, the CS strategy needs no additional parameters and therefore adds almost no computational cost.

Although CS usually stabilizes training less well than LS, it is still worth trying as an alternative to the existing κ = 1/√2 strategy.
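A minimal sketch of the CS coefficients, assuming the form κ_i = κ^(i−1) with i = 1 for the outermost long skip (the one joining the first and last ends of the UNet). The value κ = 0.7 and the range check on κ are illustrative choices, not values taken from the paper.

```python
def constant_scaling(num_skips, kappa):
    """CS coefficients kappa_i = kappa ** (i - 1) for i = 1..num_skips.

    i = 1 is the outermost long skip (joining the first and last ends of the
    UNet), so it keeps a coefficient of 1 and deeper skips decay exponentially.
    """
    if not 0.0 < kappa <= 1.0:  # illustrative range check, an assumption
        raise ValueError("kappa is expected to lie in (0, 1]")
    return [kappa ** (i - 1) for i in range(1, num_skips + 1)]


coeffs = constant_scaling(num_skips=6, kappa=0.7)  # kappa = 0.7 is hypothetical
print([round(c, 4) for c in coeffs])
```

The design mirrors the LS observation described above: the first coefficient stays at 1 and the rest shrink geometrically, with no parameters to train.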

Both CS and LS are very simple to implement and take only a few lines of code. For each of the many fancy UNet variants out there, the feature dimensions may need to be aligned. (Tongue in cheek.)
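Most of those few lines amount to multiplying each skip feature by its coefficient before it is merged. The toy forward pass below, with scalar features, hypothetical blocks, and an additive merge chosen only for simplicity, shows the one indexing detail to watch: the decoder consumes skips innermost-first, while the article numbers them starting from the outermost connection.

```python
def unet_forward(x, enc_blocks, mid_block, dec_blocks, skip_coeffs):
    """Toy UNet forward pass with scaled long skip connections.

    skip_coeffs[0] belongs to the outermost long skip (first encoder block ->
    last decoder block). Skips are merged by addition purely for simplicity;
    a concatenating UNet would scale the skip feature before the concat.
    """
    if not len(enc_blocks) == len(dec_blocks) == len(skip_coeffs):
        raise ValueError("need one decoder block and one coefficient per encoder block")
    skips = []
    h = x
    for block in enc_blocks:
        h = block(h)
        skips.append(h)  # stack of skip features, outermost first
    h = mid_block(h)
    # The decoder pops skips innermost-first, so walk the coefficients in reverse.
    for block, kappa in zip(dec_blocks, reversed(skip_coeffs)):
        h = block(h + kappa * skips.pop())
    return h


def identity(v):
    return v


def add_one(v):
    return v + 1.0


enc = [add_one] * 3   # hypothetical encoder blocks
dec = [identity] * 3  # hypothetical decoder blocks
print(unet_forward(0.0, enc, identity, dec, [1.0, 1.0, 1.0]))
print(unet_forward(0.0, enc, identity, dec, [1.0, 0.0, 0.0]))
```

Keeping only the outermost skip (coefficients [1, 0, 0]) adds just the first encoder feature, which confirms that the reversed iteration pairs each coefficient with the intended connection.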

Recently, follow-up work such as FreeU and SCEdit has also highlighted the importance of scaling on skip connections. Everyone is welcome to try the method out and build on it.
