
Apple shows off new AI model MGIE, which can refine pictures in one sentence

- Reposted by PHPz

- 2024-02-08 11:33:25

News on February 8: Compared with Microsoft's rapid rise, Apple's AI strategy has been far more low-key, but that does not mean Apple has achieved nothing in the field. Apple recently released a new open-source artificial intelligence model called "MGIE" that can edit images based on natural-language instructions.

Image source: VentureBeat, created with Midjourney

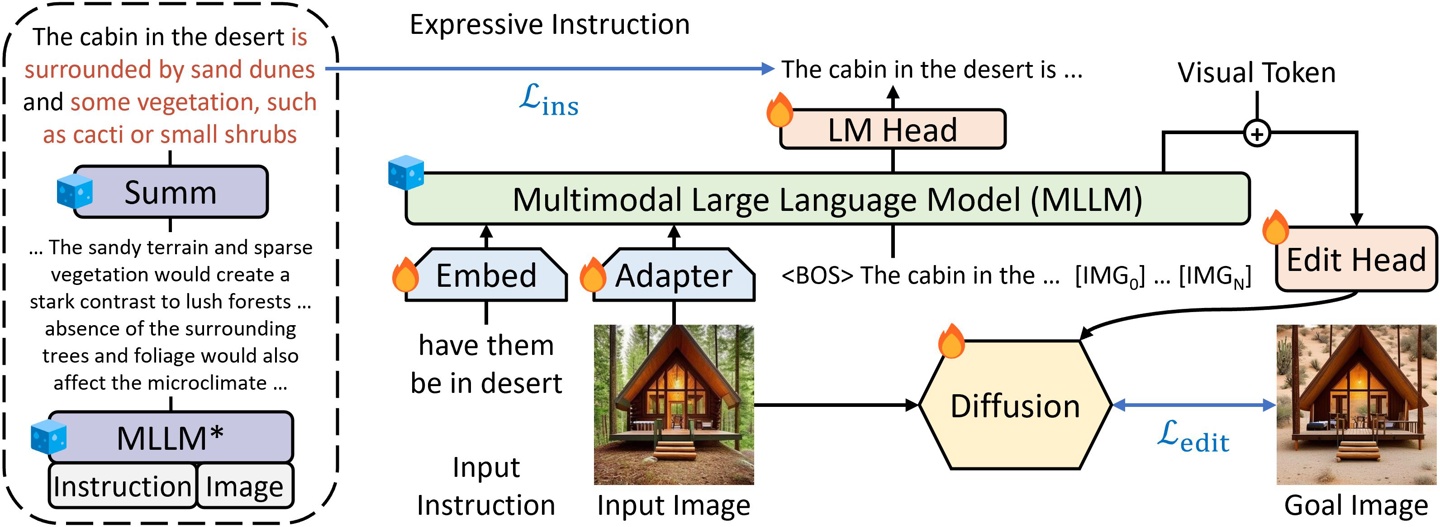

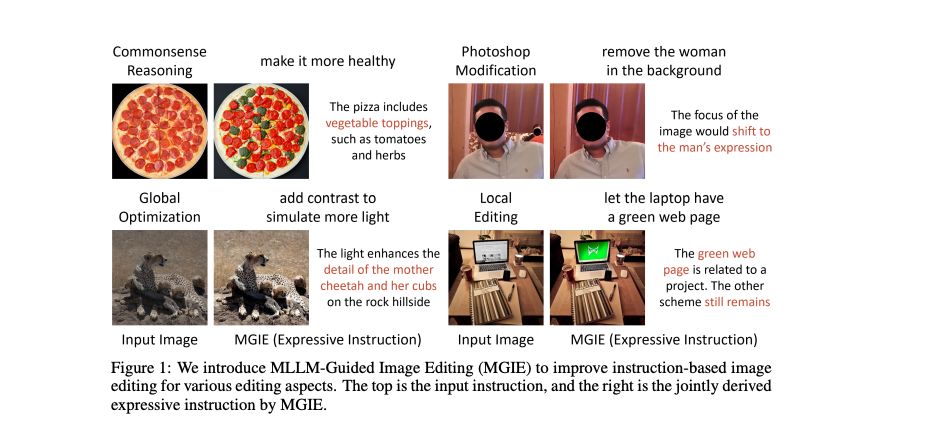

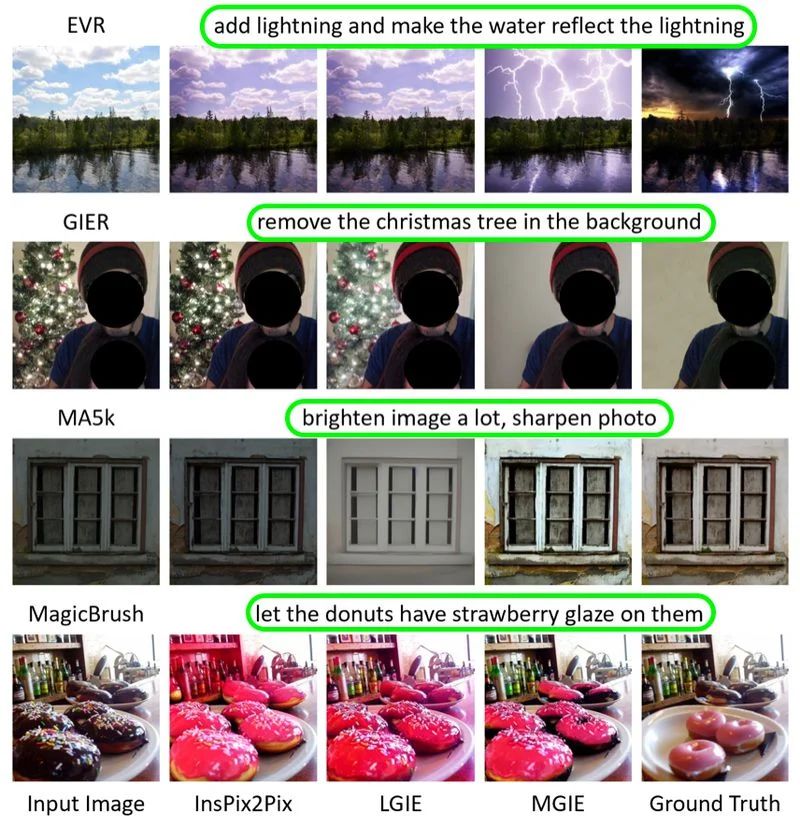

MGIE (MLLM-Guided Image Editing) is a technique that uses a multimodal large language model (MLLM) to interpret user instructions and carry out pixel-level edits. It understands natural-language commands and can perform Photoshop-style modifications, global photo optimization, and local edits. With MGIE, users can edit pictures without being familiar with complex image-processing software, which makes editing simpler, more intuitive, and more efficient.

Apple, together with researchers at the University of California, Santa Barbara, announced its MGIE research results at the 2024 International Conference on Learning Representations (ICLR), one of the most important conferences in artificial intelligence research.

Before introducing MGIE, it helps to briefly explain MLLMs (multimodal large language models). An MLLM is a powerful AI model that can process text and images at the same time, which makes it well suited to instruction-based image editing. MLLMs have shown strong cross-modal understanding and the ability to generate visually grounded responses, but they had not yet been widely applied to image editing tasks.

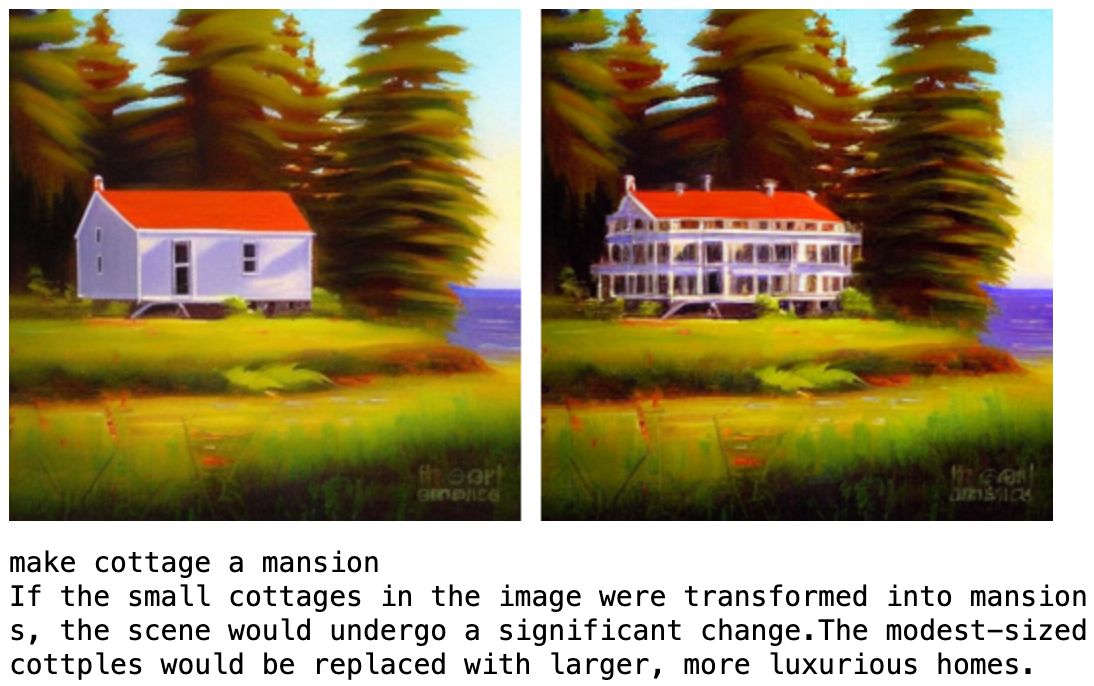

MGIE integrates MLLMs into the image editing process in two ways. First, it uses the MLLM to derive precise, expressive instructions from the user's input. These derived instructions are concise and explicit, giving the editing process clear guidance.

For example, when entering "Make the sky bluer", MGIE can generate the command "Increase the saturation of the sky area by 20%".
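A derived instruction like this is concrete enough to express directly as a pixel operation. The sketch below is not MGIE's code (MGIE learns its edits end to end); it only illustrates what "increase the saturation of the sky area by 20%" means. It assumes the image is a grid of 8-bit RGB tuples and that a boolean sky mask (the hypothetical `mask` argument) has already been obtained by some other means, such as a segmentation step not shown here.

```python
import colorsys

# Assumed representation: an image is a list of rows, each pixel an (r, g, b) tuple in 0..255.
Pixel = tuple[int, int, int]


def boost_saturation(
    image: list[list[Pixel]], mask: list[list[bool]], factor: float = 1.2
) -> list[list[Pixel]]:
    """Multiply saturation by `factor` (1.2 = +20%) wherever `mask` is True."""
    out = []
    for row, mask_row in zip(image, mask):
        new_row = []
        for (r, g, b), selected in zip(row, mask_row):
            if not selected:
                new_row.append((r, g, b))  # outside the region: leave pixel as-is
                continue
            # colorsys works on floats in [0, 1]
            h, s, v = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
            s = min(1.0, s * factor)  # raise saturation, clipped to the valid range
            r2, g2, b2 = colorsys.hsv_to_rgb(h, s, v)
            new_row.append((round(r2 * 255), round(g2 * 255), round(b2 * 255)))
        out.append(new_row)
    return out


# Usage: a 1x2 "image" where only the left (sky) pixel is selected.
sky = boost_saturation([[(100, 150, 200), (100, 150, 200)]], [[True, False]])
print(sky)
```

Hue and brightness (the HSV value) are preserved, pixels outside the mask are untouched, and saturation is capped at 1.0 so already-vivid colors cannot overflow.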

Second, it uses the MLLM to generate a "visual imagination", a latent representation of the desired edit. This representation captures the essence of the edit and is used to guide the pixel-level manipulation. MGIE uses a novel end-to-end training scheme that jointly optimizes the instruction-derivation, visual-imagination, and image-editing modules.

MGIE can handle a wide range of editing scenarios, from simple color adjustments to complex object manipulation. It can also perform global or local edits according to the user's preferences. Key features of MGIE include:

- Expressive instruction-based editing: MGIE derives concise, clear instructions that effectively guide the editing process, improving both edit quality and the overall user experience.

- Photoshop-style editing: MGIE can perform common Photoshop-style edits such as cropping, resizing, rotating, flipping, and adding filters. The model can also apply more advanced edits, such as changing the background, adding or removing objects, and blending images.

- Global photo optimization: MGIE can improve the overall quality of a photo, including brightness, contrast, sharpness, and color balance. The model can also apply artistic effects such as sketch, painting, and caricature styles.

- Local Editing: MGIE can edit specific areas or objects in an image, such as face, eyes, hair, clothes, and accessories. The model can also modify the properties of these areas or objects, such as shape, size, color, texture, and style.
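The difference between global optimization and local editing can be illustrated with a simple brightness and contrast adjustment. This is a hypothetical sketch, not part of MGIE: the function `adjust_brightness_contrast` and its linear formula (stretch each channel around mid-gray 128 by `contrast`, then add a `brightness` offset) are assumptions chosen for illustration. Passing no mask edits the whole photo; passing a mask restricts the same edit to a selected region.

```python
from typing import Optional

# Assumed representation: an image is a list of rows, each pixel an (r, g, b) tuple in 0..255.
Pixel = tuple[int, int, int]


def clamp(x: float) -> int:
    """Round to the nearest integer and clip to the 8-bit range 0..255."""
    return max(0, min(255, round(x)))


def adjust_brightness_contrast(
    image: list[list[Pixel]],
    contrast: float = 1.0,
    brightness: float = 0,
    mask: Optional[list[list[bool]]] = None,
) -> list[list[Pixel]]:
    """Apply out = contrast * (c - 128) + 128 + brightness per channel.

    mask=None  -> global edit (every pixel)
    mask given -> local edit (only pixels where mask[y][x] is True)
    """
    out = []
    for y, row in enumerate(image):
        new_row = []
        for x, pixel in enumerate(row):
            if mask is None or mask[y][x]:
                new_row.append(
                    tuple(clamp(contrast * (c - 128) + 128 + brightness) for c in pixel)
                )
            else:
                new_row.append(pixel)  # unselected pixel keeps its original value
        out.append(new_row)
    return out


photo = [[(100, 150, 200), (10, 20, 30)]]
global_edit = adjust_brightness_contrast(photo, contrast=1.5, brightness=10)
local_edit = adjust_brightness_contrast(photo, contrast=1.5, brightness=10, mask=[[True, False]])
print(global_edit)
print(local_edit)
```

Keeping one function with an optional mask mirrors the article's framing: the same adjustment is either a global optimization or a local edit depending only on which pixels it is allowed to touch.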

MGIE is an open-source project on GitHub, where users can find the code, data, and pre-trained models. The project also provides a demo notebook showing how to use MGIE for various editing tasks.
