1. Introduction

After TuSimple AI Day last year, I had long wanted to summarize our work on long-distance perception in writing. I recently found some time, so I decided to write this article to record our research over the past few years. Everything covered here can be found in the TuSimple AI Day video [0] and in our published papers; it contains no specific engineering details or trade secrets.

As is well known, TuSimple focuses on autonomous truck driving. Trucks need longer braking distances and more time to change lanes than cars, so seeing far ahead matters more for them, and long-distance perception is one of TuSimple's unique advantages over other autonomous driving companies. As the person at TuSimple responsible for LiDAR perception, I will now describe in detail how we use LiDAR for long-distance perception.

When I first joined the company, the mainstream LiDAR perception solution was the BEV (Bird's Eye View) scheme. BEV here does not mean the well-known Battery Electric Vehicle; it refers to projecting the LiDAR point cloud into BEV space and then applying 2D convolutions and a 2D detection head for object detection. (Personally, I think what Tesla calls BEV would more accurately be called "multi-view camera fusion in BEV space.") As far as I can tell, the earliest record of the BEV scheme is the MV3D paper [1], published by Baidu at CVPR 2017. Many subsequent works, including the solutions I know several companies actually use, also project LiDAR point clouds into BEV space for detection and can be classified as BEV schemes. The approach is widely used in practice.

The BEV features used by MV3D [1]


A great benefit of the BEV scheme is that it can directly reuse mature 2D detectors, but it also has a fatal shortcoming: it limits the perception range. As the figure above shows, using a 2D detector requires building a 2D feature map, and that in turn requires a distance threshold. In fact, there are LiDAR points beyond the range shown in the figure, but this truncation discards them. Could we simply raise the threshold until those points are covered? It is possible, but because of the scanning pattern, reflected intensity (which attenuates with the fourth power of distance), occlusion, and similar factors, LiDAR returns very few points at long range, so enlarging the dense map to cover them is not cost-effective.
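To make the truncation concrete, here is a minimal Python sketch, assuming a square BEV grid centred on the ego vehicle with a per-cell point count as the only feature. The function name `project_to_bev`, the grid extent, and the cell size are illustrative choices, not values from our system.

```python
from typing import List, Tuple

Point = Tuple[float, float, float]  # (x, y, z) in metres, ego frame


def project_to_bev(points: List[Point], max_range: float, cell: float):
    """Scatter points into a dense BEV grid covering [-max_range, max_range)^2.

    Returns (grid, dropped): grid[row][col] holds a per-cell point count, and
    dropped counts the points outside the square, i.e. the points that the
    distance threshold silently throws away.
    """
    size = round(2 * max_range / cell)  # cells per side; memory grows as size**2
    grid = [[0] * size for _ in range(size)]
    dropped = 0
    for x, y, _z in points:
        if not (-max_range <= x < max_range and -max_range <= y < max_range):
            dropped += 1  # beyond the threshold: the detector never sees it
            continue
        # min() guards against floating-point round-up at the far edge
        col = min(int((x + max_range) / cell), size - 1)
        row = min(int((y + max_range) / cell), size - 1)
        grid[row][col] += 1
    return grid, dropped


if __name__ == "__main__":
    pts = [(10.0, 5.0, 0.2), (79.9, 0.0, 0.5), (150.0, 1.0, 0.8), (-300.0, 20.0, 1.0)]
    grid, dropped = project_to_bev(pts, max_range=80.0, cell=1.0)
    print(len(grid), dropped)  # 160 2
```

The two returns at 150 m and 300 m exist in the raw sweep but never reach the network; keeping them would mean enlarging every row and column of the grid.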

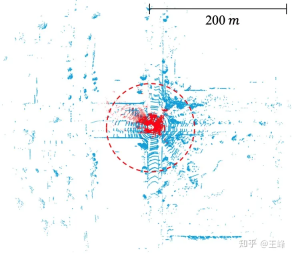

The academic community has paid little attention to this limitation of the BEV scheme, mainly because of the datasets. The annotation range of current mainstream datasets is usually under 80 meters (e.g., 50 m for nuScenes, 70 m for KITTI, and 80 m for Waymo), and within that range the BEV feature map does not need to be large. In industry, however, mid-range LiDARs generally reach a scanning range of 200 meters, and in recent years some long-range LiDARs have been released that reach 500 meters. Note that the area of the feature map, and hence the computation, grows quadratically with range. Under the BEV scheme, covering 200 meters already costs considerable computation, let alone 500 meters. This problem therefore deserves more attention from industry.

Scanning range of LiDAR in public datasets: KITTI (red points, 70 m) vs. Argoverse 2 (blue points, 200 m)
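The quadratic growth is easy to check with a little arithmetic. The sketch below counts the cells of a square BEV map at an assumed 0.1 m resolution (a typical value, not necessarily the one we used).

```python
def bev_cells(half_range_m: float, cell_m: float) -> int:
    """Cells in a square BEV map spanning [-half_range_m, half_range_m] per axis."""
    side = round(2 * half_range_m / cell_m)
    return side * side


if __name__ == "__main__":
    # 0.1 m cells are an assumed, typical resolution.
    for r in (50, 80, 200, 500):
        print(f"+/-{r:>3} m: {bev_cells(r, 0.1):>12,} cells")
```

Going from 80 m to 200 m multiplies the cell count, and roughly the dense convolution cost, by (200/80)^2 = 6.25; going to 500 m multiplies it by about 39.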

After recognizing the limitations of the BEV scheme, we spent years of research before finally finding a viable alternative. The process was not easy, and we suffered many setbacks. Papers and reports usually emphasize successes and omit failures, yet failures are valuable experience too, so we decided to share our research journey in this blog. Below, I describe it step by step in chronological order.

2. Point-based scheme

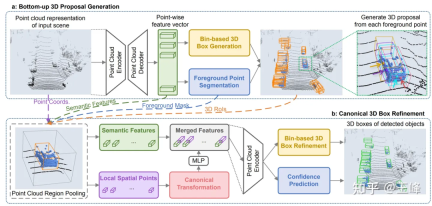

At CVPR 2019, The Chinese University of Hong Kong published a point cloud detector called PointRCNN [2]. Unlike BEV methods, PointRCNN operates directly on the point cloud without converting it to BEV form, so in theory such a point-based scheme can achieve long-distance perception.

However, we ran into a problem when we tried it. A KITTI frame can be downsampled to 16,000 points for detection without much loss in accuracy, but our LiDAR combination produces more than 100,000 points per frame. Downsampling by a factor of 10 would obviously hurt detection accuracy badly, while without downsampling the PointRCNN backbone even contains O(n^2) operations. So even though it avoids BEV, the computation was still unbearable. These expensive operations stem mainly from the unordered nature of point clouds: both downsampling and neighborhood retrieval must traverse all points. Since many ops were involved and none of them had optimized implementations, there was no hope of making it real-time in the short term, so we abandoned this route.
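PointRCNN's backbone builds on PointNet++-style set abstraction, which downsamples with farthest point sampling (FPS). The pure-Python sketch below is illustrative only (it is not PointRCNN's CUDA kernel), but it shows why such steps scale badly: each of the m iterations rescans all N points.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def farthest_point_sampling(points: Sequence[Point], m: int) -> List[int]:
    """Pick m indices, each time taking the point farthest from those already chosen.

    Every iteration scans all N points to update their distance to the
    selected set, so the total cost is O(N * m).
    """
    n = len(points)
    if n == 0 or m <= 0:
        return []
    selected = [0]  # start from an arbitrary point (index 0)
    min_d2 = [math.inf] * n  # squared distance from each point to the selected set
    for _ in range(1, min(m, n)):
        last = points[selected[-1]]
        best_i, best_d2 = 0, -1.0
        for i, p in enumerate(points):  # full pass over the cloud
            d2 = (p[0] - last[0]) ** 2 + (p[1] - last[1]) ** 2 + (p[2] - last[2]) ** 2
            if d2 < min_d2[i]:
                min_d2[i] = d2
            if min_d2[i] > best_d2:
                best_i, best_d2 = i, min_d2[i]
        selected.append(best_i)
    return selected
```

Because m is usually a fixed fraction of N, the cost is effectively O(N^2): going from 16,000 to 100,000 points multiplies it by (100,000 / 16,000)^2, roughly 39.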

Still, this research was not wasted. Although the backbone is too expensive, the second stage runs only on the foreground, so its computation is relatively small. Applying PointRCNN's second stage directly on top of a first-stage BEV detector greatly improved the accuracy of the detected boxes. Along the way, we also discovered a small problem with it; after solving it, we summarized our findings in a paper [3] published at CVPR 2021. You can also read about it in this blog post:

王峰 (Wang Feng): LiDAR R-CNN: A fast and versatile two-stage 3D detector
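The second stage is cheap because it only looks at points inside each first-stage proposal. Here is a minimal sketch of that cropping step, assuming boxes parameterized as (cx, cy, cz, length, width, height, yaw) with yaw about the vertical axis; it is a generic illustration, not the LiDAR R-CNN code.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float, float]
# (cx, cy, cz, length, width, height, yaw); yaw rotates the box about the z axis
Box = Tuple[float, float, float, float, float, float, float]


def points_in_box(points: List[Point], box: Box) -> List[Point]:
    """Return the points that fall inside one yaw-rotated 3D box."""
    cx, cy, cz, length, width, height, yaw = box
    c, s = math.cos(yaw), math.sin(yaw)
    inside = []
    for x, y, z in points:
        dx, dy = x - cx, y - cy
        # Rotate the offset by -yaw so it is expressed in the box's own axes.
        local_x = c * dx + s * dy
        local_y = -s * dx + c * dy
        if (abs(local_x) <= length / 2 and abs(local_y) <= width / 2
                and abs(z - cz) <= height / 2):
            inside.append((x, y, z))
    return inside


def crop_proposals(points: List[Point], proposals: List[Box]) -> List[List[Point]]:
    """Per-proposal point sets that a second-stage network would consume."""
    return [points_in_box(points, box) for box in proposals]
```

Even this naive loop costs O(K * N) for K proposals, linear in the number of points rather than quadratic, and K stays small because proposals cover only the foreground.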

3. Range-View scheme

After the point-based scheme failed, we turned our attention to Range View (RV). The LiDARs of that time were all mechanical spinning units. A 64-line LiDAR, for example, scans 64 rows of points at different pitch angles; if each row contains 2048 points, together they form a 64×2048 range image.

Comparison of RV, BEV, and PV
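The range image described above is usually built by a spherical projection: elevation selects the row, azimuth selects the column. Below is a minimal sketch, assuming evenly spaced beams between an assumed vertical FOV of +3° and -25° (real sensors only approximate this), with the nearest return kept when several points land in one pixel.

```python
import math
from typing import List, Optional, Tuple

Point = Tuple[float, float, float]


def to_range_image(points: List[Point], rows: int = 64, cols: int = 2048,
                   fov_up_deg: float = 3.0, fov_down_deg: float = -25.0
                   ) -> List[List[Optional[float]]]:
    """Spherical projection of a spinning-LiDAR sweep into a rows x cols image.

    Row = elevation bin, column = azimuth bin, pixel value = range in metres
    (the nearest return wins when several points share a pixel).
    """
    fov_up = math.radians(fov_up_deg)
    fov = fov_up - math.radians(fov_down_deg)
    image: List[List[Optional[float]]] = [[None] * cols for _ in range(rows)]
    for x, y, z in points:
        r = math.sqrt(x * x + y * y + z * z)
        if r == 0.0:
            continue
        azimuth = math.atan2(y, x)    # (-pi, pi]
        elevation = math.asin(z / r)  # [-pi/2, pi/2]
        col = int((azimuth + math.pi) / (2 * math.pi) * cols) % cols
        row = int((fov_up - elevation) / fov * rows)
        row = min(max(row, 0), rows - 1)  # clamp returns outside the nominal FOV
        if image[row][col] is None or r < image[row][col]:
            image[row][col] = r
    return image
```

A distant car simply occupies fewer pixels here instead of falling off the edge of a grid, which is the property that made RV attractive. The construction also depends on the regular row-by-column scan of a spinning sensor.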


In Range View, the point cloud is no longer sparse but densely packed. Distant targets appear small in the range image, but they are not discarded, so in theory they can be detected.

Perhaps because RV resembles images more closely, research on it actually predates BEV. The earliest record I can find is again a Baidu paper [4]. Baidu truly is the "Whampoa Military Academy" of autonomous driving: the earliest applications of both RV and BEV came from Baidu.

So I gave it a quick try. Compared with the BEV method, RV's AP dropped by 30-40 points... I found that detection on the 2D range image itself was actually quite good, but the output 3D boxes were very poor. When we analyzed RV's characteristics at the time, we felt it had all the disadvantages of images (non-uniform object scales, mixed foreground and background features, and indistinct features for distant targets) without their advantage of rich semantic features, so I was fairly pessimistic about this approach.

Since full-time employees still have to handle deployment work, exploratory questions like this are better left to interns. We later recruited two interns to study the problem together. They tried it on a public dataset and, sure enough, also lost 30 points... Fortunately, both interns were very capable. Through a series of efforts, and by borrowing some details from other papers, they brought the AP to a level comparable with mainstream BEV methods, and the resulting paper was published at ICCV 2021 [5].

Although the AP improved, the problem was not fully solved. By then it had become consensus that LiDAR needs multi-frame fusion to improve the signal-to-noise ratio, and because distant targets have few points, stacking frames to increase the amount of information is even more necessary for them. In the BEV scheme, multi-frame fusion is very simple: add a timestamp to each input point and superimpose several frames, and the AP improves without changing the network. Under RV, however, we tried many tricks and none achieved a similar effect.
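Here is a minimal sketch of the BEV-style frame stacking just described. It assumes each past sweep comes with a pose that maps it into the current ego frame (the motion compensation step and the planar (tx, ty, yaw) pose format are assumptions of this sketch, as is the name `stack_frames`), and it tags every point with its age.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float, float]
Pose2D = Tuple[float, float, float]        # (tx, ty, yaw): past ego frame -> current ego frame
Frame = Tuple[float, List[Point], Pose2D]  # (timestamp_s, points, pose)


def stack_frames(frames: List[Frame], t_now: float) -> List[Tuple[float, float, float, float]]:
    """Merge several sweeps into one cloud of (x, y, z, dt) points.

    Each past sweep is moved into the current ego frame (planar motion only,
    an assumption of this sketch) and tagged with its age dt = t_now - t, so
    the detector can tell old points from new ones.
    """
    merged = []
    for t, points, (tx, ty, yaw) in frames:
        c, s = math.cos(yaw), math.sin(yaw)
        dt = t_now - t
        for x, y, z in points:
            merged.append((c * x - s * y + tx, s * x + c * y + ty, z, dt))
    return merged
```

The merged cloud then goes through the same voxelization and BEV projection as a single sweep. There is no equally natural way to overlay sweeps taken from different positions onto one range image, since each sweep's image is tied to its own sensor pose.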

Meanwhile, LiDAR hardware was moving from mechanical spinning designs to solid-state and semi-solid-state designs. Most solid-state and semi-solid-state LiDARs can no longer form a range image, and forcibly constructing one loses information, so this path was eventually abandoned as well.

4. Sparse Voxel scheme

As mentioned before, the problem with the point-based scheme is that the irregular arrangement of points forces operations such as downsampling and neighborhood retrieval to traverse all points, which makes the computational burden too high. The BEV scheme, by contrast, organizes the data regularly but contains too many empty regions, which also makes the computational burden too high. Combining the two, voxelizing only the regions that contain points to make them regular, and representing nothing in empty regions to avoid wasted computation, seems a viable path. This is the sparse voxel scheme.
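The "voxelize only where there are points" idea can be sketched with a hash map from integer voxel coordinates to the points inside. The 0.2 m voxel size and the function name are assumptions for illustration.

```python
import math
from collections import defaultdict
from typing import Dict, List, Tuple

Point = Tuple[float, float, float]
VoxelKey = Tuple[int, int, int]


def sparse_voxelize(points: List[Point],
                    voxel_size: Tuple[float, float, float] = (0.2, 0.2, 0.2)
                    ) -> Dict[VoxelKey, List[Point]]:
    """Group points by integer voxel coordinates, storing only occupied voxels.

    There is no range threshold and no dense array: a return at 480 m simply
    gets its own key, and memory grows with the number of occupied voxels
    rather than with the square of the range.
    """
    voxels: Dict[VoxelKey, List[Point]] = defaultdict(list)
    for p in points:
        key = (math.floor(p[0] / voxel_size[0]),
               math.floor(p[1] / voxel_size[1]),
               math.floor(p[2] / voxel_size[2]))
        voxels[key].append(p)
    return dict(voxels)
```

For comparison, a dense grid at the same 0.2 m resolution covering ±500 m needs 5,000 × 5,000 = 25 million BEV cells before height is even considered, whereas a sweep can occupy at most as many voxels as it has points.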

Because Yan Yan, the author of SECOND [6], had joined TuSimple, we tried a sparse convolution (spconv) backbone early on. However, because spconv is not a standard op, our in-house implementation was still too slow for real-time detection, sometimes even slower than dense convolution, so we set it aside for the time being.
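The core idea of sparse convolution fits in a few lines over a hash map. Below is a naive, single-channel sketch of the submanifold variant (outputs only at already-active voxels), assuming a dict from voxel coordinates to scalar features. Real libraries such as spconv instead precompute gather/scatter index pairs and run them on the GPU, and how efficiently that is done largely determines whether sparse convolution beats dense convolution in practice.

```python
import itertools
from typing import Dict, Tuple

Key = Tuple[int, int, int]


def submanifold_conv3d(features: Dict[Key, float],
                       weights: Dict[Key, float],
                       bias: float = 0.0) -> Dict[Key, float]:
    """Single-channel 3x3x3 submanifold sparse convolution.

    features: active voxel -> scalar feature (absent keys are empty space)
    weights:  kernel offset in {-1, 0, 1}^3 -> weight (missing offsets count as 0)
    Output sites are exactly the input's active sites, so sparsity is
    preserved and empty space costs nothing.
    """
    offsets = list(itertools.product((-1, 0, 1), repeat=3))
    out: Dict[Key, float] = {}
    for (x, y, z) in features:
        acc = bias
        for dx, dy, dz in offsets:
            neighbour = features.get((x + dx, y + dy, z + dz))
            if neighbour is not None:  # only active neighbours contribute
                acc += weights.get((dx, dy, dz), 0.0) * neighbour
        out[(x, y, z)] = acc
    return out
```

The work per layer is proportional to active voxels × 27, independent of how far apart those voxels are.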

Later, the first LiDAR capable of scanning 500 m, the Livox Tele15, arrived, and a long-range LiDAR perception algorithm was urgently needed. I tried the BEV scheme, but it was too expensive, so I tried spconv again. At that stage, because the Tele15 has a fairly narrow FOV and its distant points are very sparse, spconv could just barely run in real time.

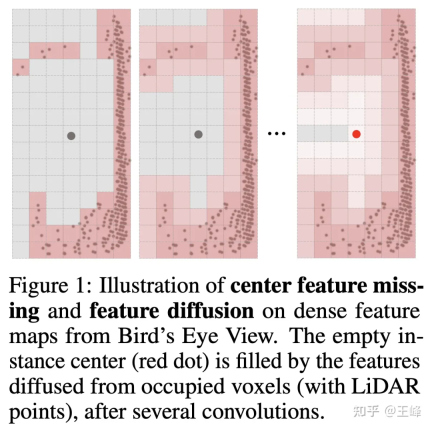

However, without a BEV map, the detection head cannot use the more mature anchor-based or center-based assignment from 2D detection. This is mainly because LiDAR scans only the surfaces of objects, so there is not necessarily any point at an object's center (as shown in the figure), and a foreground target naturally cannot be assigned to a location that has no points. We have tried many assignment methods internally; I will not go into the details of what the company actually uses here. An intern also tried an assignment scheme and published it at NeurIPS 2022 [7]; you can read his own write-up:

明月不知无愿:Fully Sparse 3D Object Detector
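Here is a small illustration of why center-based assignment breaks without a dense map. It assumes a car whose rear face is the only scanned surface, 0.5 m BEV cells, and a hypothetical fallback that assigns the target to the occupied cell nearest the box center. The fallback only illustrates the problem; it is neither the method used at TuSimple nor the one in [7].

```python
import math
from typing import List, Optional, Set, Tuple

Point2 = Tuple[float, float]
Cell = Tuple[int, int]


def occupied_cells(points: List[Point2], cell: float) -> Set[Cell]:
    """BEV cells that contain at least one LiDAR return."""
    return {(math.floor(x / cell), math.floor(y / cell)) for x, y in points}


def assign_target(points: List[Point2], center: Point2, cell: float) -> Optional[Cell]:
    """Return the cell that should carry this object's regression target.

    Center assignment picks the cell under the box center, which only works if
    that cell holds points. Otherwise fall back (hypothetically) to the
    occupied cell whose center is closest to the box center.
    """
    occ = occupied_cells(points, cell)
    if not occ:
        return None
    center_cell = (math.floor(center[0] / cell), math.floor(center[1] / cell))
    if center_cell in occ:
        return center_cell

    def dist2(c: Cell) -> float:
        return ((c[0] + 0.5) * cell - center[0]) ** 2 + ((c[1] + 0.5) * cell - center[1]) ** 2

    return min(occ, key=lambda c: (dist2(c), c))  # tie-break on coordinates


if __name__ == "__main__":
    # A 4.5 m-long car centred at (50, 0); only its rear face (x = 47.75) is scanned.
    rear_face = [(47.75, y) for y in (-0.9, -0.45, 0.0, 0.45, 0.9)]
    print((100, 0) in occupied_cells(rear_face, 0.5))  # False: no points at the center
    print(assign_target(rear_face, (50.0, 0.0), 0.5))  # (95, -1)
```

A dense BEV head would still have a feature at cell (100, 0) to regress from; a fully sparse head has nothing there, which is why the assignment rule has to change.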

But to apply this algorithm to a LiDAR combination covering 500 m forward and 150 m backward, left, and right, it was still not enough. It so happened that the intern had earlier, riding the popularity of Swin Transformer, drawn on its ideas to write a paper on a sparse Transformer [8], whose AP had also been pushed up bit by bit, with a lot of effort, from more than 20 points behind (thanks to the intern for carrying me; truly impressive). At the time, I felt the Transformer approach was well suited to irregular point cloud data, so I tried it on the company's dataset as well.
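The Swin-style idea behind a sparse Transformer can be sketched as grouping only the occupied BEV voxels into fixed-size windows, alternating with a half-window shift so that information crosses window borders; attention is then computed inside each group. The window size and function name are illustrative, and the sketch covers the grouping only, not the attention itself or the exact scheme of [8].

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Cell = Tuple[int, int]  # occupied BEV voxel (ix, iy)


def partition_windows(voxels: List[Cell], window: int,
                      shifted: bool = False) -> Dict[Cell, List[Cell]]:
    """Group occupied voxels by the window x window tile they fall in.

    With shifted=True every tile boundary moves by window // 2, as in Swin's
    shifted windows. Empty tiles never appear, so sparse far-away regions add
    almost no work.
    """
    offset = window // 2 if shifted else 0
    groups: Dict[Cell, List[Cell]] = defaultdict(list)
    for ix, iy in voxels:
        # floor division keeps negative coordinates (behind or right of ego) correct
        groups[((ix + offset) // window, (iy + offset) // window)].append((ix, iy))
    return dict(groups)
```

Windows end up holding very different numbers of voxels, so a practical implementation must still batch variable-length groups efficiently.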

Unfortunately, this method never beat the BEV method on the company's dataset; the gap stayed close to 5 points. Looking back, there may have been tricks or training techniques I had not mastered, since in principle a Transformer's expressive power is no weaker than convolution's, but I did not pursue it further. By this time, however, the assignment method had been optimized and a lot of computation saved, so I wanted to try spconv again. Surprisingly, simply replacing the Transformer with spconv matched the accuracy of the BEV method at close range while also detecting long-distance targets.

It was also around this time that Yan Yan released the second version of spconv [9], which greatly improved speed, so computational latency was no longer a bottleneck. Long-distance LiDAR perception had finally cleared every obstacle and could run in real time on the vehicle.

Later, we updated the LiDAR layout and extended the scanning range to 500 m forward, 300 m backward, and 150 m to the left and right, and the algorithm still runs well. I believe that as computing power keeps improving, computational latency will become less and less of a problem.

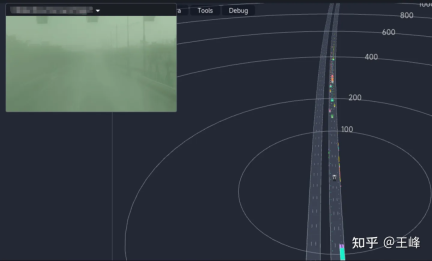

The final long-distance detection results are shown below. You can also watch the dynamic results at around 01:08:30 in the TuSimple AI Day video:

Although this is the final fused output, visibility in the camera images was very low in the fog that day, so the results come almost entirely from LiDAR perception.

5. Postscript

From the point-based method to the range image method, and then to Transformer and sparse convolution methods built on sparse voxels, our exploration of long-distance perception was anything but smooth sailing; it was a road full of thorns. In the end, we got here thanks to steadily increasing computing power and the sustained efforts of many colleagues. I would like to thank TuSimple's chief scientist Wang Naiyan and all my colleagues and interns at TuSimple. Most of the ideas and engineering implementations were not mine, and I feel rather undeserving; my role was mostly to connect one stage of the work to the next.

I haven't written such a long article in a long time, and it reads more like a running log than a moving story. In recent years, fewer and fewer peers have stuck with L4, and those working on L2 have gradually turned to vision-only research. LiDAR perception is visibly being marginalized. Although I still firmly believe that having one more sensor that measures distance directly is the better choice, industry insiders increasingly seem to disagree. As I see more and more BEV and Occupancy on newcomers' résumés, I wonder how long LiDAR perception can last, and how long I can hold on. Perhaps this article can also serve as a keepsake.

Writing late at night, moved to tears and hardly knowing what I am saying; please forgive me.

The above is the detailed content of Warning! Long-distance LiDAR sensing. For more information, please follow other related articles on the PHP Chinese website!

在 CARLA自动驾驶模拟器中添加真实智体行为Apr 08, 2023 pm 02:11 PM

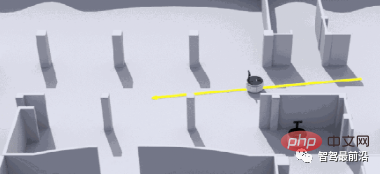

在 CARLA自动驾驶模拟器中添加真实智体行为Apr 08, 2023 pm 02:11 PMarXiv论文“Insertion of real agents behaviors in CARLA autonomous driving simulator“,22年6月,西班牙。由于需要快速prototyping和广泛测试,仿真在自动驾驶中的作用变得越来越重要。基于物理的模拟具有多种优势和益处,成本合理,同时消除了prototyping、驾驶员和弱势道路使用者(VRU)的风险。然而,主要有两个局限性。首先,众所周知的现实差距是指现实和模拟之间的差异,阻碍模拟自主驾驶体验去实现有效的现实世界

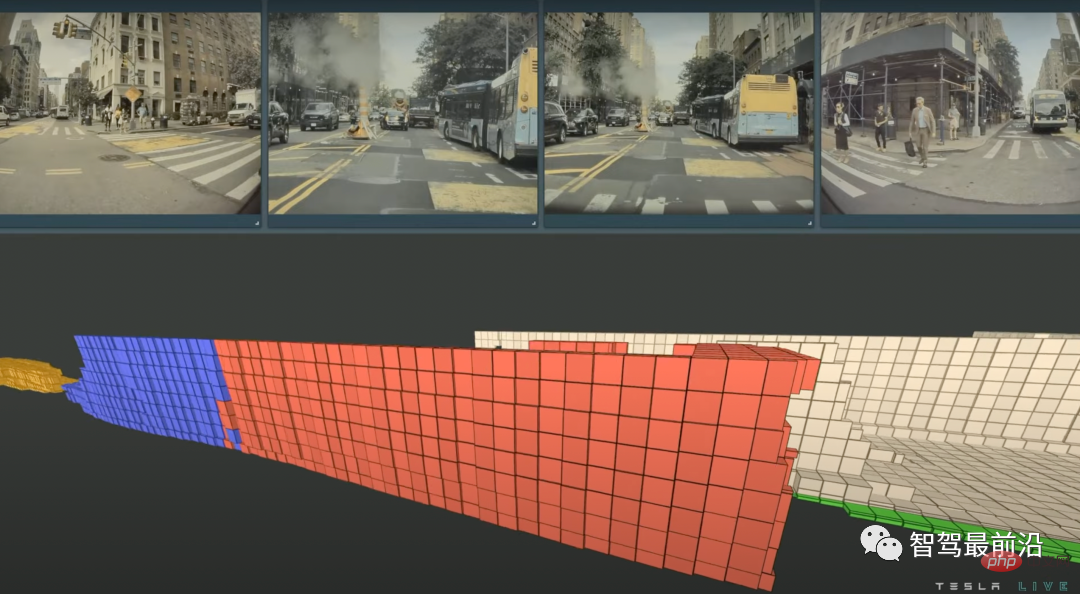

特斯拉自动驾驶算法和模型解读Apr 11, 2023 pm 12:04 PM

特斯拉自动驾驶算法和模型解读Apr 11, 2023 pm 12:04 PM特斯拉是一个典型的AI公司,过去一年训练了75000个神经网络,意味着每8分钟就要出一个新的模型,共有281个模型用到了特斯拉的车上。接下来我们分几个方面来解读特斯拉FSD的算法和模型进展。01 感知 Occupancy Network特斯拉今年在感知方面的一个重点技术是Occupancy Network (占据网络)。研究机器人技术的同学肯定对occupancy grid不会陌生,occupancy表示空间中每个3D体素(voxel)是否被占据,可以是0/1二元表示,也可以是[0, 1]之间的

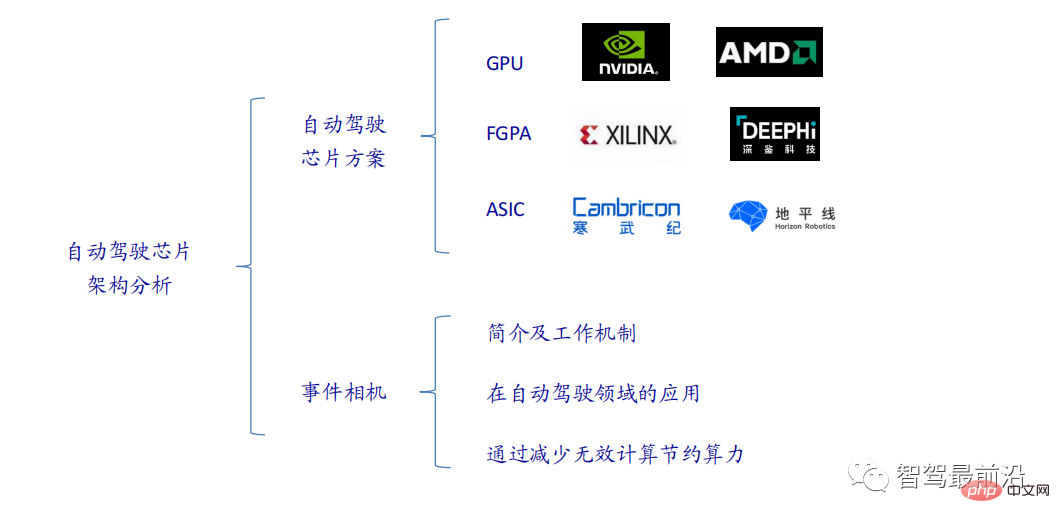

一文通览自动驾驶三大主流芯片架构Apr 12, 2023 pm 12:07 PM

一文通览自动驾驶三大主流芯片架构Apr 12, 2023 pm 12:07 PM当前主流的AI芯片主要分为三类,GPU、FPGA、ASIC。GPU、FPGA均是前期较为成熟的芯片架构,属于通用型芯片。ASIC属于为AI特定场景定制的芯片。行业内已经确认CPU不适用于AI计算,但是在AI应用领域也是必不可少。 GPU方案GPU与CPU的架构对比CPU遵循的是冯·诺依曼架构,其核心是存储程序/数据、串行顺序执行。因此CPU的架构中需要大量的空间去放置存储单元(Cache)和控制单元(Control),相比之下计算单元(ALU)只占据了很小的一部分,所以CPU在进行大规模并行计算

自动驾驶汽车激光雷达如何做到与GPS时间同步?Mar 31, 2023 pm 10:40 PM

自动驾驶汽车激光雷达如何做到与GPS时间同步?Mar 31, 2023 pm 10:40 PMgPTP定义的五条报文中,Sync和Follow_UP为一组报文,周期发送,主要用来测量时钟偏差。 01 同步方案激光雷达与GPS时间同步主要有三种方案,即PPS+GPRMC、PTP、gPTPPPS+GPRMCGNSS输出两条信息,一条是时间周期为1s的同步脉冲信号PPS,脉冲宽度5ms~100ms;一条是通过标准串口输出GPRMC标准的时间同步报文。同步脉冲前沿时刻与GPRMC报文的发送在同一时刻,误差为ns级别,误差可以忽略。GPRMC是一条包含UTC时间(精确到秒),经纬度定位数据的标准格

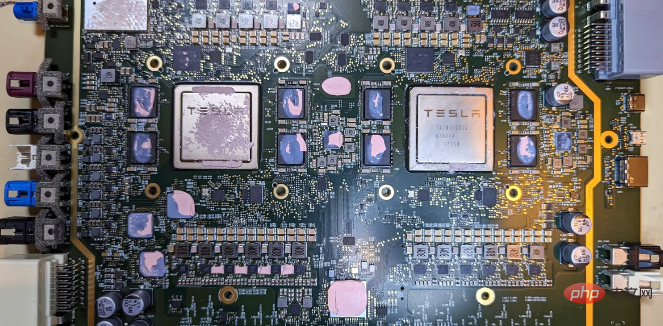

特斯拉自动驾驶硬件 4.0 实物拆解:增加雷达,提供更多摄像头Apr 08, 2023 pm 12:11 PM

特斯拉自动驾驶硬件 4.0 实物拆解:增加雷达,提供更多摄像头Apr 08, 2023 pm 12:11 PM2 月 16 日消息,特斯拉的新自动驾驶计算机,即硬件 4.0(HW4)已经泄露,该公司似乎已经在制造一些带有新系统的汽车。我们已经知道,特斯拉准备升级其自动驾驶硬件已有一段时间了。特斯拉此前向联邦通信委员会申请在其车辆上增加一个新的雷达,并称计划在 1 月份开始销售,新的雷达将意味着特斯拉计划更新其 Autopilot 和 FSD 的传感器套件。硬件变化对特斯拉车主来说是一种压力,因为该汽车制造商一直承诺,其自 2016 年以来制造的所有车辆都具备通过软件更新实现自动驾驶所需的所有硬件。事实证

端到端自动驾驶中轨迹引导的控制预测:一个简单有力的基线方法TCPApr 10, 2023 am 09:01 AM

端到端自动驾驶中轨迹引导的控制预测:一个简单有力的基线方法TCPApr 10, 2023 am 09:01 AMarXiv论文“Trajectory-guided Control Prediction for End-to-end Autonomous Driving: A Simple yet Strong Baseline“, 2022年6月,上海AI实验室和上海交大。当前的端到端自主驾驶方法要么基于规划轨迹运行控制器,要么直接执行控制预测,这跨越了两个研究领域。鉴于二者之间潜在的互利,本文主动探索两个的结合,称为TCP (Trajectory-guided Control Prediction)。具

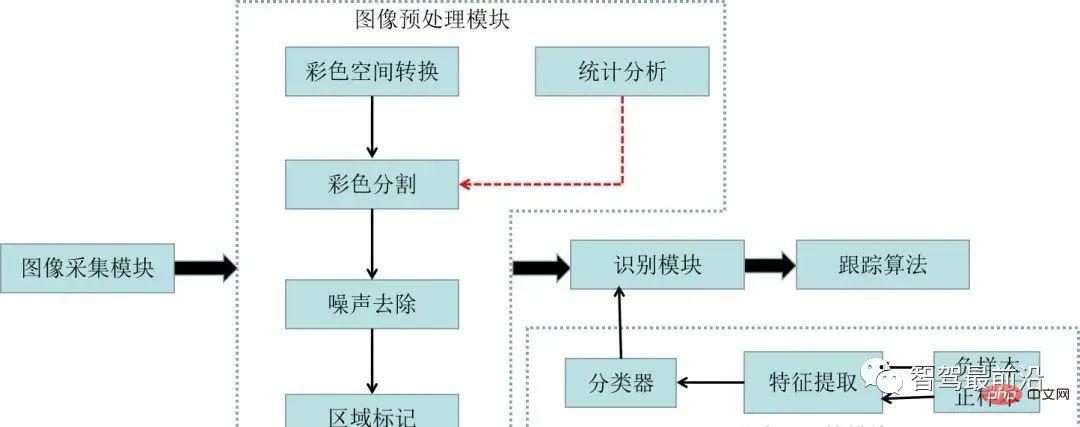

一文聊聊自动驾驶中交通标志识别系统Apr 12, 2023 pm 12:34 PM

一文聊聊自动驾驶中交通标志识别系统Apr 12, 2023 pm 12:34 PM什么是交通标志识别系统?汽车安全系统的交通标志识别系统,英文翻译为:Traffic Sign Recognition,简称TSR,是利用前置摄像头结合模式,可以识别常见的交通标志 《 限速、停车、掉头等)。这一功能会提醒驾驶员注意前面的交通标志,以便驾驶员遵守这些标志。TSR 功能降低了驾驶员不遵守停车标志等交通法规的可能,避免了违法左转或者无意的其他交通违法行为,从而提高了安全性。这些系统需要灵活的软件平台来增强探测算法,根据不同地区的交通标志来进行调整。交通标志识别原理交通标志识别又称为TS

一文聊聊SLAM技术在自动驾驶的应用Apr 09, 2023 pm 01:11 PM

一文聊聊SLAM技术在自动驾驶的应用Apr 09, 2023 pm 01:11 PM定位在自动驾驶中占据着不可替代的地位,而且未来有着可期的发展。目前自动驾驶中的定位都是依赖RTK配合高精地图,这给自动驾驶的落地增加了不少成本与难度。试想一下人类开车,并非需要知道自己的全局高精定位及周围的详细环境,有一条全局导航路径并配合车辆在该路径上的位置,也就足够了,而这里牵涉到的,便是SLAM领域的关键技术。什么是SLAMSLAM (Simultaneous Localization and Mapping),也称为CML (Concurrent Mapping and Localiza

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

ZendStudio 13.5.1 Mac

Powerful PHP integrated development environment

EditPlus Chinese cracked version

Small size, syntax highlighting, does not support code prompt function

MantisBT

Mantis is an easy-to-deploy web-based defect tracking tool designed to aid in product defect tracking. It requires PHP, MySQL and a web server. Check out our demo and hosting services.

SublimeText3 Linux new version

SublimeText3 Linux latest version

mPDF

mPDF is a PHP library that can generate PDF files from UTF-8 encoded HTML. The original author, Ian Back, wrote mPDF to output PDF files "on the fly" from his website and handle different languages. It is slower than original scripts like HTML2FPDF and produces larger files when using Unicode fonts, but supports CSS styles etc. and has a lot of enhancements. Supports almost all languages, including RTL (Arabic and Hebrew) and CJK (Chinese, Japanese and Korean). Supports nested block-level elements (such as P, DIV),