Building an MoE Large Model from Scratch: A Hands-On, Expert-Level Tutorial

The MoE (Mixture of Experts) architecture, rumored to be the "secret weapon" behind GPT-4, is something you can now build yourself!

A machine learning expert on Hugging Face has shared how to build a complete MoE system from scratch.

The author calls the project MakeMoE. It walks through the whole process, from building attention to assembling a complete MoE model.

According to the author, MakeMoE was inspired by, and is based on, makemore by Andrej Karpathy, a founding member of OpenAI.

makemore is a teaching project for natural language processing and machine learning, intended to help learners understand and implement some basic models.

Similarly, MakeMoE helps learners gain a deeper understanding of the mixture-of-experts model by building it step by step.

So what exactly does this build-it-by-hand guide cover?

Building an MoE model from scratch

Compared with Karpathy's makemore, MakeMoE replaces the single feed-forward neural network with a sparse mixture of experts and adds the necessary gating logic.

Because the ReLU activation function is used in the process, the default initialization in makemore is also replaced with Kaiming He initialization.
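To see why this initialization pairs well with ReLU, here is a minimal NumPy sketch of Kaiming (He) normal initialization. The function name `kaiming_normal`, the layer sizes, and the use of NumPy are assumptions made for this illustration; this is not code from MakeMoE.

```python
import numpy as np

def kaiming_normal(fan_in, fan_out, rng):
    """Draw a (fan_in, fan_out) weight matrix with std = sqrt(2 / fan_in)."""
    std = np.sqrt(2.0 / fan_in)
    return rng.normal(0.0, std, size=(fan_in, fan_out))

rng = np.random.default_rng(0)
W = kaiming_normal(512, 2048, rng)      # hypothetical layer sizes
x = rng.normal(size=(1000, 512))        # inputs with roughly unit variance
h = np.maximum(x @ W, 0.0)              # ReLU activations

# ReLU zeroes about half of the pre-activations; the factor 2 in the
# variance compensates, so the mean squared activation stays near 1.
second_moment = float(np.mean(h ** 2))
```

With a smaller weight scale the signal would shrink layer after layer, which is the problem this scheme is designed to avoid.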

To build an MoE model, you first need to understand the self-attention mechanism.

The model first applies linear transformations to the input sequence to produce queries (Q), keys (K), and values (V).

These are then used to compute attention scores, which determine how much attention the model pays to each position in the sequence when generating each token.

To preserve the model's autoregressive property when generating text, that is, predicting the next token only from tokens that have already been generated, the author uses multi-head causal self-attention.

This mechanism uses a mask to set the attention scores of future positions to negative infinity, so that after the softmax the weights of those positions become zero.
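The masking step can be sketched for a single attention head as follows. This is an illustrative NumPy version under stated assumptions: the names `causal_self_attention`, `Wq`, `Wk`, `Wv` and the tiny dimensions are hypothetical, and the scores are scaled by the square root of the head size as in standard scaled dot-product attention.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - np.max(x, axis=axis, keepdims=True)   # subtract the max for numerical stability
    e = np.exp(x)
    return e / np.sum(e, axis=axis, keepdims=True)

def causal_self_attention(x, Wq, Wk, Wv):
    """Single-head causal attention over x of shape (T, C)."""
    q, k, v = x @ Wq, x @ Wk, x @ Wv               # linear projections to Q, K, V
    scores = (q @ k.T) / np.sqrt(k.shape[-1])      # (T, T) attention scores
    T = x.shape[0]
    future = np.triu(np.ones((T, T), dtype=bool), k=1)   # True strictly above the diagonal
    scores = np.where(future, -np.inf, scores)     # future positions get -inf
    weights = softmax(scores, axis=-1)             # exp(-inf) = 0, so those weights vanish
    return weights @ v, weights

rng = np.random.default_rng(0)
T, C, head_size = 4, 8, 8
x = rng.normal(size=(T, C))
Wq, Wk, Wv = (rng.normal(size=(C, head_size)) * C ** -0.5 for _ in range(3))
out, weights = causal_self_attention(x, Wq, Wk, Wv)
```

Each row of `weights` is a probability distribution over the current and earlier positions only, so the first token can attend to nothing but itself.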

Multi-head attention lets the model perform several such attention calculations in parallel, with each head able to focus on different parts of the sequence.
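One way to picture the parallel heads is to split the embedding dimension into equal slices, run causal attention on each slice, and concatenate the results. The sketch below assumes this common layout; the function name, the output projection `Wo`, and all sizes are hypothetical choices for the example rather than details taken from MakeMoE.

```python
import numpy as np

def multi_head_causal_attention(x, Wq, Wk, Wv, Wo, n_head):
    """x: (T, C); Wq, Wk, Wv, Wo: (C, C); returns (T, C)."""
    T, C = x.shape
    hs = C // n_head                                      # size of each head

    def split(m):                                         # (T, C) -> (n_head, T, hs)
        return m.reshape(T, n_head, hs).transpose(1, 0, 2)

    q, k, v = split(x @ Wq), split(x @ Wk), split(x @ Wv)
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(hs)       # (n_head, T, T)
    future = np.triu(np.ones((T, T), dtype=bool), k=1)
    scores = np.where(future, -np.inf, scores)            # same causal mask for every head
    scores = scores - scores.max(axis=-1, keepdims=True)
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=-1, keepdims=True)
    heads = weights @ v                                   # (n_head, T, hs)
    concat = heads.transpose(1, 0, 2).reshape(T, C)       # join the heads back together
    return concat @ Wo

rng = np.random.default_rng(0)
T, C, n_head = 5, 16, 4
x = rng.normal(size=(T, C))
Wq, Wk, Wv, Wo = (rng.normal(size=(C, C)) * C ** -0.5 for _ in range(4))
out = multi_head_causal_attention(x, Wq, Wk, Wv, Wo, n_head)

# Causality check: perturbing the last token must not change earlier outputs.
x_changed = x.copy()
x_changed[-1] += 1.0
out_changed = multi_head_causal_attention(x_changed, Wq, Wk, Wv, Wo, n_head)
```

Comparing `out` and `out_changed` shows that only the final position is affected by the perturbation, which is exactly the autoregressive behavior the mask is meant to guarantee.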

Once the self-attention mechanism is in place, you can create the expert module. An "expert module" here is a multi-layer perceptron.

Each expert module contains a linear layer that maps the embedding vector to a larger dimension, followed by a nonlinear activation function (such as ReLU) and a second linear layer that maps the vector back to the original embedding dimension.
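The expert described above can be sketched as a small NumPy class. The 4x expansion factor, the zero biases, and the class name `Expert` are assumptions chosen for illustration; the text only says the hidden dimension is larger than the embedding dimension.

```python
import numpy as np

class Expert:
    """Two-layer MLP: n_embd -> expansion * n_embd -> n_embd, with ReLU in between."""

    def __init__(self, n_embd, rng, expansion=4):
        hidden = expansion * n_embd                       # assumed expansion factor
        # Kaiming-style scaling (std = sqrt(2 / fan_in)) for both layers
        self.W1 = rng.normal(0.0, np.sqrt(2.0 / n_embd), size=(n_embd, hidden))
        self.b1 = np.zeros(hidden)
        self.W2 = rng.normal(0.0, np.sqrt(2.0 / hidden), size=(hidden, n_embd))
        self.b2 = np.zeros(n_embd)

    def __call__(self, x):
        h = np.maximum(x @ self.W1 + self.b1, 0.0)        # expand, then ReLU
        return h @ self.W2 + self.b2                      # project back to n_embd

rng = np.random.default_rng(0)
expert = Expert(n_embd=16, rng=rng)
tokens = rng.normal(size=(3, 16))                         # three token embeddings
y = expert(tokens)
```

The output has the same shape as the input, which is what allows several experts to be swapped in where a single feed-forward block used to sit.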

This design lets each expert specialize in different parts of the input, while a gating network decides which experts are activated for each token.

So, the next step is to build the component that allocates and manages the experts: the gating network.

The gating network is also implemented as a linear layer, which maps the output of the self-attention layer to a vector whose length equals the number of expert modules.

The output of this linear layer is a score vector in which each score represents the importance of the corresponding expert module for the token currently being processed.

The gating network finds the top-k values of this score vector and records their indices. These k largest scores are then used to weight the outputs of the corresponding expert modules.
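The top-k selection can be sketched as follows. This example assumes the kept scores are normalized with a softmax while all other experts receive a weight of exactly zero, a common choice that the description above does not spell out. The function name `top_k_gating` and the sample scores are hypothetical; in a real model the scores would come from the gating linear layer.

```python
import numpy as np

def top_k_gating(logits, k):
    """logits: (T, n_experts). Returns sparse gates (T, n_experts) and top-k indices (T, k)."""
    idx = np.argsort(-logits, axis=-1)[:, :k]             # indices of the k largest scores
    masked = np.full_like(logits, -np.inf)                # everything else is masked out
    np.put_along_axis(masked, idx, np.take_along_axis(logits, idx, axis=-1), axis=-1)
    masked = masked - masked.max(axis=-1, keepdims=True)
    gates = np.exp(masked)                                # exp(-inf) = 0 for unselected experts
    gates /= gates.sum(axis=-1, keepdims=True)
    return gates, idx

# Two tokens scored against four experts (made-up numbers)
logits = np.array([[2.0, 1.0, 0.5, 3.0],
                   [0.1, 0.4, 0.3, 0.2]])
gates, idx = top_k_gating(logits, k=2)
```

For the first token, experts 3 and 0 are selected; for the second, experts 1 and 2. Every row of `gates` has exactly two non-zero entries that sum to one.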

To encourage exploration during training, the author also introduces noise so that tokens do not all end up being routed to the same experts.

This noise is usually implemented by adding random Gaussian noise to the score vector.
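The effect of the noise is easy to see in a toy setting. The sketch below uses a fixed noise standard deviation, which is a simplifying assumption (implementations may instead learn the noise scale); `add_gating_noise`, `noise_std`, and the scores are hypothetical.

```python
import numpy as np

def add_gating_noise(logits, noise_std, rng):
    """Return logits plus zero-mean Gaussian noise with standard deviation noise_std."""
    return logits + noise_std * rng.standard_normal(logits.shape)

rng = np.random.default_rng(0)
# 1000 tokens that all receive the same, nearly tied, expert scores
logits = np.tile(np.array([1.0, 0.9, 0.8, 0.7]), (1000, 1))

clean_choice = np.argmax(logits, axis=-1)                 # always the same expert
noisy_choice = np.argmax(add_gating_noise(logits, 0.5, rng), axis=-1)

experts_used_clean = len(np.unique(clean_choice))
experts_used_noisy = len(np.unique(noisy_choice))
```

Without noise every token goes to the single highest-scoring expert; with noise the tokens spread over several experts, which gives the others a chance to receive training signal.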

Once the results are available, the model multiplies the top-k values by the outputs of the corresponding top-k experts for each token and adds them up. This weighted sum forms the model output.
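Putting routing and weighting together, a sparse MoE forward pass might look like the sketch below. It assumes softmax-normalized top-k weights and loops over experts so that each expert only processes the tokens routed to it. The function name `sparse_moe`, the gating matrix `Wg`, and the toy "experts" that merely scale their input are all hypothetical and chosen to keep the example easy to check.

```python
import numpy as np

def sparse_moe(x, Wg, experts, k):
    """x: (T, C); Wg: (C, n_experts); experts: callables mapping (n, C) -> (n, C)."""
    logits = x @ Wg                                       # gating scores per token
    idx = np.argsort(-logits, axis=-1)[:, :k]             # top-k expert indices per token
    top = np.take_along_axis(logits, idx, axis=-1)
    top = np.exp(top - top.max(axis=-1, keepdims=True))
    weights = top / top.sum(axis=-1, keepdims=True)       # (T, k), each row sums to 1
    out = np.zeros_like(x)
    for e, expert in enumerate(experts):
        token_ids, slot = np.nonzero(idx == e)            # tokens that selected expert e
        if token_ids.size:
            out[token_ids] += weights[token_ids, slot][:, None] * expert(x[token_ids])
    return out

# Toy experts: expert e simply multiplies its input by scales[e]
scales = np.array([1.0, 2.0, 3.0, 4.0])
experts = [lambda h, s=s: s * h for s in scales]

rng = np.random.default_rng(0)
x = rng.normal(size=(6, 8))                               # six token embeddings
Wg = rng.normal(size=(8, 4))                              # gating weights for four experts
out = sparse_moe(x, Wg, experts, k=2)
```

Only k of the experts run for any given token, which is where the compute savings of a sparse mixture of experts come from.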

Finally, putting these modules together yields an MoE model.

The author provides code for every step of this process; see the original article for details.

The author has also published an end-to-end Jupyter notebook, so you can run each module directly as you learn it.

If you are interested, give it a try!

Original article: https://huggingface.co/blog/AviSoori1x/makemoe-from-scratch

Notebook version (GitHub): https://github.com/AviSoori1x/makeMoE/tree/main


解读CRISP-ML(Q):机器学习生命周期流程Apr 08, 2023 pm 01:21 PM

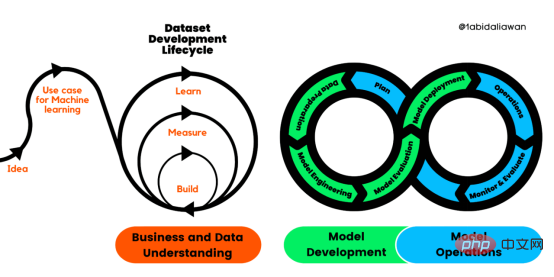

解读CRISP-ML(Q):机器学习生命周期流程Apr 08, 2023 pm 01:21 PM译者 | 布加迪审校 | 孙淑娟目前,没有用于构建和管理机器学习(ML)应用程序的标准实践。机器学习项目组织得不好,缺乏可重复性,而且从长远来看容易彻底失败。因此,我们需要一套流程来帮助自己在整个机器学习生命周期中保持质量、可持续性、稳健性和成本管理。图1. 机器学习开发生命周期流程使用质量保证方法开发机器学习应用程序的跨行业标准流程(CRISP-ML(Q))是CRISP-DM的升级版,以确保机器学习产品的质量。CRISP-ML(Q)有六个单独的阶段:1. 业务和数据理解2. 数据准备3. 模型

人工智能的环境成本和承诺Apr 08, 2023 pm 04:31 PM

人工智能的环境成本和承诺Apr 08, 2023 pm 04:31 PM人工智能(AI)在流行文化和政治分析中经常以两种极端的形式出现。它要么代表着人类智慧与科技实力相结合的未来主义乌托邦的关键,要么是迈向反乌托邦式机器崛起的第一步。学者、企业家、甚至活动家在应用人工智能应对气候变化时都采用了同样的二元思维。科技行业对人工智能在创建一个新的技术乌托邦中所扮演的角色的单一关注,掩盖了人工智能可能加剧环境退化的方式,通常是直接伤害边缘人群的方式。为了在应对气候变化的过程中充分利用人工智能技术,同时承认其大量消耗能源,引领人工智能潮流的科技公司需要探索人工智能对环境影响的

找不到中文语音预训练模型?中文版 Wav2vec 2.0和HuBERT来了Apr 08, 2023 pm 06:21 PM

找不到中文语音预训练模型?中文版 Wav2vec 2.0和HuBERT来了Apr 08, 2023 pm 06:21 PMWav2vec 2.0 [1],HuBERT [2] 和 WavLM [3] 等语音预训练模型,通过在多达上万小时的无标注语音数据(如 Libri-light )上的自监督学习,显著提升了自动语音识别(Automatic Speech Recognition, ASR),语音合成(Text-to-speech, TTS)和语音转换(Voice Conversation,VC)等语音下游任务的性能。然而这些模型都没有公开的中文版本,不便于应用在中文语音研究场景。 WenetSpeech [4] 是

条形统计图用什么呈现数据Jan 20, 2021 pm 03:31 PM

条形统计图用什么呈现数据Jan 20, 2021 pm 03:31 PM条形统计图用“直条”呈现数据。条形统计图是用一个单位长度表示一定的数量,根据数量的多少画成长短不同的直条,然后把这些直条按一定的顺序排列起来;从条形统计图中很容易看出各种数量的多少。条形统计图分为:单式条形统计图和复式条形统计图,前者只表示1个项目的数据,后者可以同时表示多个项目的数据。

自动驾驶车道线检测分类的虚拟-真实域适应方法Apr 08, 2023 pm 02:31 PM

自动驾驶车道线检测分类的虚拟-真实域适应方法Apr 08, 2023 pm 02:31 PMarXiv论文“Sim-to-Real Domain Adaptation for Lane Detection and Classification in Autonomous Driving“,2022年5月,加拿大滑铁卢大学的工作。虽然自主驾驶的监督检测和分类框架需要大型标注数据集,但光照真实模拟环境生成的合成数据推动的无监督域适应(UDA,Unsupervised Domain Adaptation)方法则是低成本、耗时更少的解决方案。本文提出对抗性鉴别和生成(adversarial d

数据通信中的信道传输速率单位是bps,它表示什么Jan 18, 2021 pm 02:58 PM

数据通信中的信道传输速率单位是bps,它表示什么Jan 18, 2021 pm 02:58 PM数据通信中的信道传输速率单位是bps,它表示“位/秒”或“比特/秒”,即数据传输速率在数值上等于每秒钟传输构成数据代码的二进制比特数,也称“比特率”。比特率表示单位时间内传送比特的数目,用于衡量数字信息的传送速度;根据每帧图像存储时所占的比特数和传输比特率,可以计算数字图像信息传输的速度。

数据分析方法有哪几种Dec 15, 2020 am 09:48 AM

数据分析方法有哪几种Dec 15, 2020 am 09:48 AM数据分析方法有4种,分别是:1、趋势分析,趋势分析一般用于核心指标的长期跟踪;2、象限分析,可依据数据的不同,将各个比较主体划分到四个象限中;3、对比分析,分为横向对比和纵向对比;4、交叉分析,主要作用就是从多个维度细分数据。

聊一聊Python 实现数据的序列化操作Apr 12, 2023 am 09:31 AM

聊一聊Python 实现数据的序列化操作Apr 12, 2023 am 09:31 AM在日常开发中,对数据进行序列化和反序列化是常见的数据操作,Python提供了两个模块方便开发者实现数据的序列化操作,即 json 模块和 pickle 模块。这两个模块主要区别如下:json 是一个文本序列化格式,而 pickle 是一个二进制序列化格式;json 是我们可以直观阅读的,而 pickle 不可以;json 是可互操作的,在 Python 系统之外广泛使用,而 pickle 则是 Python 专用的;默认情况下,json 只能表示 Python 内置类型的子集,不能表示自定义的
