Groundbreaking challenger to Transformer meets opposition as ICLR review raises questions! The public alleges backroom dealing, LeCun reveals a similar experience

In December last year, two researchers from CMU and Princeton released the Mamba architecture, which instantly shook the AI community!

Yet today it emerged that this paper, which everyone expected to "overturn the Transformer's hegemony," may be rejected?!

This morning, Cornell University associate professor Sasha Rush was the first to notice that this paper, expected to become a foundational work, appears to be headed for rejection at ICLR 2024.

He added, "To be honest, I don't understand. If it is rejected, what chance do we have?"

As you can see on OpenReview, the scores given by the four reviewers are 3, 6, 8, and 8.

Although these scores will not necessarily get the paper rejected, a score as low as 3 is outrageous.

A standout paper scored 3 points, and even LeCun spoke out against the injustice

In this paper, two researchers from CMU and Princeton University propose a new architecture, Mamba.

This SSM (state space model) architecture is comparable to Transformers in language modeling while scaling linearly with sequence length and delivering 5 times the inference throughput!

Paper address: https://arxiv.org/pdf/2312.00752.pdf
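
To give a rough sense of why a state space model can scale linearly with sequence length, here is a minimal sketch of a plain diagonal linear recurrence. It is not Mamba's selective scan or its hardware-aware implementation; the function name `ssm_scan` and the parameters `a`, `b`, `c` are illustrative assumptions, not taken from the paper.

```python
def ssm_scan(xs, a, b, c):
    """Run a diagonal linear state-space recurrence over a 1-D input sequence.

    Hypothetical toy model (not Mamba itself):
        h_t = a * h_{t-1} + b * x_t   (elementwise over the state dimension)
        y_t = sum(c * h_t)
    """
    state = [0.0] * len(a)
    ys = []
    for x in xs:
        # One fixed-cost state update per time step, so total work is O(len(xs)).
        state = [a_i * h_i + b_i * x for a_i, h_i, b_i in zip(a, state, b)]
        ys.append(sum(c_i * h_i for c_i, h_i in zip(c, state)))
    return ys


# An impulse input decays geometrically through the single state dimension.
outputs = ssm_scan([1.0, 0.0, 0.0], a=[0.5], b=[1.0], c=[2.0])
```

Each step costs the same no matter how many tokens came before, so the total work grows linearly with the input, whereas standard self-attention compares every token with every earlier one.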

As soon as the paper came out, it shook the AI community. Many people said that the architecture that would overthrow the Transformer had finally been born.

Now, Mamba’s paper is likely to be rejected, which many people cannot understand.

Even Turing Award winner LeCun joined the discussion, saying that he had encountered similar "injustices."

"I recall that my most-cited paper submitted only to arXiv has been cited more than 1,880 times, yet it was never accepted."

LeCun is famous for his work on optical character recognition and computer vision using convolutional neural networks (CNNs), and he won the Turing Award in 2019 for it.

However, his 2015 paper "Deep Convolutional Networks on Graph-Structured Data" has never been accepted by a top conference.

Paper address: https://arxiv.org/pdf/1506.05163.pdf

Deep learning researcher Sebastian Raschka said that, regardless, Mamba has had a profound impact on the AI community.

Recently, a large wave of research is based on the Mamba architecture, such as MoE-Mamba and Vision Mamba.

Interestingly, Sasha Rush, who broke the news that Mamba had been given a low score, also published a new paper building on this line of research today: MambaByte.

In fact, the Mamba architecture is already showing that "a single spark can start a prairie fire," and its influence in academic circles keeps growing.

Some netizens said that Mamba papers are starting to take over arXiv.

"For example, I just saw this paper proposing MambaByte, a token-free selective state space model. Basically, it adapts the Mamba SSM to learn directly from raw bytes."

Tri Dao, a co-author of the Mamba paper, also shared this research today.

That such a popular paper was given a low score led some to remark that peer reviewers apparently pay no attention to how loud a paper's marketing is.

The reason why Mamba’s paper was given a low score

So why was the Mamba paper given a low score?

The reviewer who gave the paper a score of 3 reported a confidence level of 5, which means that he is very sure of this score.

In the review, his questions fall into two parts: one concerns the model design, and the other concerns the experiments.

Model design

- Mamba's design motivation is to address the shortcomings of recurrent models while improving the efficiency of Transformer models. There are many studies along this direction: S4-diagonal [1], SGConv [2], MEGA [3], SPADE [4], and many efficient Transformer models (such as [5]). These models all achieve near-linear complexity, and the authors need to compare Mamba with these works in terms of model performance and efficiency. Regarding model performance, some simple experiments (such as language modeling on Wikitext-103) are enough.

- Many attention-based Transformer models exhibit length generalization, that is, the model can be trained on shorter sequence lengths and then tested on longer ones. Some examples include relative position encoding (T5) and ALiBi [6]. Since SSMs are generally continuous, does Mamba have this length generalization ability?
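
For readers unfamiliar with ALiBi, which the reviewer cites as an example of length generalization, the following is a minimal sketch of its core idea: a per-head linear penalty on attention scores that depends only on the distance between query and key positions. The function names `alibi_slopes` and `alibi_bias` are illustrative assumptions, and the slope formula shown is the geometric sequence described in the ALiBi paper for head counts that are powers of two.

```python
def alibi_slopes(num_heads):
    """Per-head slopes: a geometric sequence 2^(-8/n), 2^(-16/n), ..., 2^(-8).

    Assumes num_heads is a power of two, as in the simple case of the ALiBi paper.
    """
    return [2.0 ** (-8.0 * (k + 1) / num_heads) for k in range(num_heads)]


def alibi_bias(seq_len, slope):
    """Causal bias matrix added to attention scores before the softmax.

    bias[i][j] = slope * (j - i) for keys at or before the query (j <= i),
    and -inf for future positions, which are masked out.
    """
    return [
        [slope * (j - i) if j <= i else float("-inf") for j in range(seq_len)]
        for i in range(seq_len)
    ]


slopes = alibi_slopes(8)
bias = alibi_bias(3, slopes[0])
```

Because the bias depends only on the relative distance `i - j`, the same function can be evaluated for any `seq_len`, including lengths never seen in training, which is the property the reviewer asks about for Mamba.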

Experiment

- The authors need to compare against stronger baselines. The authors acknowledge that H3 served as motivation for the model architecture, yet they did not compare with H3 experimentally. As can be seen from Table 4 of [7], on the Pile dataset the perplexities (ppl) of H3 are 8.8 (125M), 7.1 (355M), and 6.0 (1.3B), which are considerably better than Mamba's. The authors need to show a comparison with H3.

- For the pre-trained models, the authors only show results for zero-shot inference. This setup is quite limited, and the results do not demonstrate Mamba's effectiveness very well. I recommend that the authors conduct more experiments with long sequences, such as document summarization, where the input sequences are naturally very long (e.g., the average sequence length of the arXiv dataset is greater than 8k).

- The authors claim that one of their main contributions is long sequence modeling. The authors should compare with more baselines on LRA (Long Range Arena), which is basically the standard benchmark for long sequence understanding.

- Missing memory benchmark. Although Section 4.5 is titled "Speed and Memory Benchmarks," it only covers speed comparisons. In addition, the authors should provide more detailed settings for the left side of Figure 8, such as model layers, model size, convolution details, etc. Can the authors provide some intuitive explanation as to why FlashAttention is slowest when the sequence length is very large (Figure 8, left)?

In response to the reviewer's doubts, the authors went back, did their homework, and produced additional experimental data for the rebuttal.

For example, regarding the first question about model design, the authors stated that the team intentionally focused on the complexity of large-scale pre-training rather than small-scale benchmarks.

Nevertheless, Mamba significantly outperforms all of the suggested models, and more, on WikiText-103, which is what we would expect from our general language modeling results.

First, we compared Mamba in exactly the same environment as the Hyena paper [Poli, Table 4.3]. In addition to their reported data, we also tuned our own strong Transformer baseline.

We then swapped the model for Mamba, which improved perplexity by 1.7 over our Transformer and by 2.3 over the original baseline Transformer.
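
The "ppl" figures quoted in the review and the rebuttal refer to perplexity, where lower is better. As a minimal sketch of the standard definition (the exponential of the average negative log-likelihood per token), assuming natural-log token probabilities and a hypothetical helper named `perplexity`:

```python
import math


def perplexity(token_log_probs):
    """Perplexity = exp(-(1/N) * sum of natural-log probabilities of the N tokens)."""
    if not token_log_probs:
        raise ValueError("need at least one token log-probability")
    return math.exp(-sum(token_log_probs) / len(token_log_probs))


# A model that assigns probability 0.25 to every token is as uncertain as a
# uniform choice among four options, so its perplexity is about 4.
uniform_ppl = perplexity([math.log(0.25)] * 4)
```

Under this definition, a drop of 1.7 or 2.3 points means the model assigns noticeably higher probability to the held-out text; the numbers in the sketch are made up for illustration and are not results from the paper.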

Regarding the "lack of memory benchmark", the author said:

As with most deep sequence models (including FlashAttention), memory usage is just the size of the activation tensors. In fact, Mamba is very memory-efficient; we additionally measured the training memory requirements of the 125M model on an A100 80GB GPU. Each batch consists of sequences of length 2048. We compared this to the most memory-efficient Transformer implementation we know of (with kernel fusion from torch.compile and with FlashAttention-2).

For more rebuttal details, please check https://openreview.net/forum?id=AL1fq05o7H

Overall, the authors addressed the reviewers' comments, but the rebuttals were completely ignored by the reviewer.

Someone spotted a telling detail in this reviewer's comments: maybe he doesn't understand what an RNN is?

Netizens who followed the whole exchange said it was painful to read. The authors gave such a thorough response, yet the reviewer did not waver or re-evaluate at all.

Giving a 3 with a confidence level of 5 and then ignoring the authors' well-founded rebuttal: this kind of reviewer is really too annoying.

The other three reviewers gave high scores of 6, 8, and 8.

The reviewer who gave 6 points pointed out as a weakness that "the model still requires quadratic memory like Transformer during training."

One reviewer who gave 8 points said that the paper's only weakness was "the lack of citations to some related works."

The other reviewer who gave 8 points praised the paper, saying "the empirical part is very thorough and the results are strong," and did not list any weaknesses at all.

There should be an explanation for such widely divergent ratings, but there are no meta-reviewer comments yet.

Netizens exclaim: even academia is in decline!

In the comment section, someone asked the pointed question: who on earth gave such a low score of 3?

This paper clearly achieves better results with far fewer parameters, and the code on GitHub is clean and available for anyone to test, so it has won widespread praise. That is why everyone finds the score outrageous.

Some people simply shouted WTF. Even if the Mamba architecture cannot change the LLM landscape, it is a reliable model with multiple uses on long sequences. If it gets this score, does that mean today's academic world is in decline?

Many sighed that, fortunately, this is just one of the four reviews. The other reviewers gave high scores, and the final decision has not yet been made.

Some people speculate that the reviewer may have been too tired and lost his judgment.

Another suggested explanation is that a new research direction such as state space models may threaten reviewers and experts who have built their achievements in the Transformer field. The situation is very complicated.

Some people say that Mamba’s paper getting 3 points is simply a joke in the industry.

They are so focused on comparing absurdly fine-grained benchmarks, but the really interesting part of the paper is the engineering and efficiency. Research is dying because we only care about SOTA, even when it is on outdated benchmarks for an extremely narrow subset of the field.

"Not enough theory, too much engineering."

For now, this mystery remains unresolved, and the entire AI community is waiting for the outcome.

The above is the detailed content of Transformer's groundbreaking work was opposed, ICLR review raised questions! The public accuses black-box operations, LeCun reveals similar experience. For more information, please follow other related articles on the PHP Chinese website!

解读CRISP-ML(Q):机器学习生命周期流程Apr 08, 2023 pm 01:21 PM

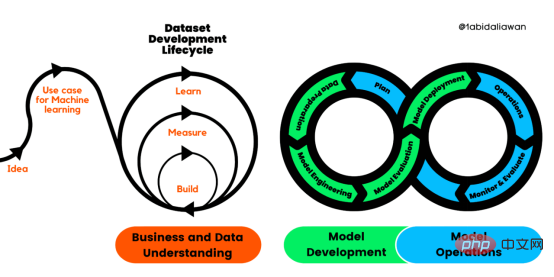

解读CRISP-ML(Q):机器学习生命周期流程Apr 08, 2023 pm 01:21 PM译者 | 布加迪审校 | 孙淑娟目前,没有用于构建和管理机器学习(ML)应用程序的标准实践。机器学习项目组织得不好,缺乏可重复性,而且从长远来看容易彻底失败。因此,我们需要一套流程来帮助自己在整个机器学习生命周期中保持质量、可持续性、稳健性和成本管理。图1. 机器学习开发生命周期流程使用质量保证方法开发机器学习应用程序的跨行业标准流程(CRISP-ML(Q))是CRISP-DM的升级版,以确保机器学习产品的质量。CRISP-ML(Q)有六个单独的阶段:1. 业务和数据理解2. 数据准备3. 模型

人工智能的环境成本和承诺Apr 08, 2023 pm 04:31 PM

人工智能的环境成本和承诺Apr 08, 2023 pm 04:31 PM人工智能(AI)在流行文化和政治分析中经常以两种极端的形式出现。它要么代表着人类智慧与科技实力相结合的未来主义乌托邦的关键,要么是迈向反乌托邦式机器崛起的第一步。学者、企业家、甚至活动家在应用人工智能应对气候变化时都采用了同样的二元思维。科技行业对人工智能在创建一个新的技术乌托邦中所扮演的角色的单一关注,掩盖了人工智能可能加剧环境退化的方式,通常是直接伤害边缘人群的方式。为了在应对气候变化的过程中充分利用人工智能技术,同时承认其大量消耗能源,引领人工智能潮流的科技公司需要探索人工智能对环境影响的

找不到中文语音预训练模型?中文版 Wav2vec 2.0和HuBERT来了Apr 08, 2023 pm 06:21 PM

找不到中文语音预训练模型?中文版 Wav2vec 2.0和HuBERT来了Apr 08, 2023 pm 06:21 PMWav2vec 2.0 [1],HuBERT [2] 和 WavLM [3] 等语音预训练模型,通过在多达上万小时的无标注语音数据(如 Libri-light )上的自监督学习,显著提升了自动语音识别(Automatic Speech Recognition, ASR),语音合成(Text-to-speech, TTS)和语音转换(Voice Conversation,VC)等语音下游任务的性能。然而这些模型都没有公开的中文版本,不便于应用在中文语音研究场景。 WenetSpeech [4] 是

条形统计图用什么呈现数据Jan 20, 2021 pm 03:31 PM

条形统计图用什么呈现数据Jan 20, 2021 pm 03:31 PM条形统计图用“直条”呈现数据。条形统计图是用一个单位长度表示一定的数量,根据数量的多少画成长短不同的直条,然后把这些直条按一定的顺序排列起来;从条形统计图中很容易看出各种数量的多少。条形统计图分为:单式条形统计图和复式条形统计图,前者只表示1个项目的数据,后者可以同时表示多个项目的数据。

自动驾驶车道线检测分类的虚拟-真实域适应方法Apr 08, 2023 pm 02:31 PM

自动驾驶车道线检测分类的虚拟-真实域适应方法Apr 08, 2023 pm 02:31 PMarXiv论文“Sim-to-Real Domain Adaptation for Lane Detection and Classification in Autonomous Driving“,2022年5月,加拿大滑铁卢大学的工作。虽然自主驾驶的监督检测和分类框架需要大型标注数据集,但光照真实模拟环境生成的合成数据推动的无监督域适应(UDA,Unsupervised Domain Adaptation)方法则是低成本、耗时更少的解决方案。本文提出对抗性鉴别和生成(adversarial d

数据通信中的信道传输速率单位是bps,它表示什么Jan 18, 2021 pm 02:58 PM

数据通信中的信道传输速率单位是bps,它表示什么Jan 18, 2021 pm 02:58 PM数据通信中的信道传输速率单位是bps,它表示“位/秒”或“比特/秒”,即数据传输速率在数值上等于每秒钟传输构成数据代码的二进制比特数,也称“比特率”。比特率表示单位时间内传送比特的数目,用于衡量数字信息的传送速度;根据每帧图像存储时所占的比特数和传输比特率,可以计算数字图像信息传输的速度。

数据分析方法有哪几种Dec 15, 2020 am 09:48 AM

数据分析方法有哪几种Dec 15, 2020 am 09:48 AM数据分析方法有4种,分别是:1、趋势分析,趋势分析一般用于核心指标的长期跟踪;2、象限分析,可依据数据的不同,将各个比较主体划分到四个象限中;3、对比分析,分为横向对比和纵向对比;4、交叉分析,主要作用就是从多个维度细分数据。

聊一聊Python 实现数据的序列化操作Apr 12, 2023 am 09:31 AM

聊一聊Python 实现数据的序列化操作Apr 12, 2023 am 09:31 AM在日常开发中,对数据进行序列化和反序列化是常见的数据操作,Python提供了两个模块方便开发者实现数据的序列化操作,即 json 模块和 pickle 模块。这两个模块主要区别如下:json 是一个文本序列化格式,而 pickle 是一个二进制序列化格式;json 是我们可以直观阅读的,而 pickle 不可以;json 是可互操作的,在 Python 系统之外广泛使用,而 pickle 则是 Python 专用的;默认情况下,json 只能表示 Python 内置类型的子集,不能表示自定义的

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

EditPlus Chinese cracked version

Small size, syntax highlighting, does not support code prompt function

VSCode Windows 64-bit Download

A free and powerful IDE editor launched by Microsoft

Dreamweaver Mac version

Visual web development tools

MinGW - Minimalist GNU for Windows

This project is in the process of being migrated to osdn.net/projects/mingw, you can continue to follow us there. MinGW: A native Windows port of the GNU Compiler Collection (GCC), freely distributable import libraries and header files for building native Windows applications; includes extensions to the MSVC runtime to support C99 functionality. All MinGW software can run on 64-bit Windows platforms.

PhpStorm Mac version

The latest (2018.2.1) professional PHP integrated development tool