Lasso regression is a linear regression technique that penalizes the absolute size of the model coefficients. The penalty drives some coefficients to exactly zero, which reduces the number of variables in the model and can improve its generalization performance. It is well suited to feature selection on high-dimensional data sets, where controlling model complexity helps avoid overfitting. Lasso regression is widely used in biology, finance, social network analysis and other fields. This article introduces the principles and applications of Lasso regression.

1. Basic principles

Lasso regression is a method for estimating the coefficients of a linear regression model. It achieves feature selection by minimizing the sum of squared errors plus an L1 penalty term that limits the size of the model coefficients. In this way it can identify the features that have the most significant impact on the target variable while maintaining prediction accuracy.

Suppose we have a data set X containing m samples and n features. Each sample consists of a feature vector x_i and the corresponding label y_i. Our goal is to build a linear model y = Xw + b that minimizes the error between the predicted values and the true values.

We can use the least squares method to solve the values of w and b to minimize the sum of squared errors. That is:

$$\min_{w,b} \sum_{i=1}^m \left(y_i - \sum_{j=1}^n w_j x_{ij} - b\right)^2$$

However, when the number of features is large, the model may overfit: it performs well on the training set but poorly on the test set. To avoid overfitting, we can add an L1 penalty term so that some coefficients are compressed to zero, which also achieves feature selection. The L1 penalty term can be expressed as:

$$\lambda \sum_{j=1}^n |w_j|$$

where λ is the penalty coefficient we need to choose, which controls the strength of the penalty term. The larger λ is, the greater the impact of the penalty term and the more the model coefficients are pushed toward zero. Once λ is sufficiently large (and in the limit as λ tends to infinity), all coefficients are compressed to zero and the model becomes a constant model, that is, every sample is predicted to have the same value.
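To see why the L1 penalty can set a coefficient exactly to zero rather than merely shrinking it, a worked single-feature case may help. The sketch below assumes centered data (so b = 0), a single feature scaled so that (1/m) Σ x_i² = 1, and a 1/(2m) scaling of the squared error chosen for convenience. The symbol ρ is introduced here for illustration only.

```latex
\begin{aligned}
J(w) &= \frac{1}{2m}\sum_{i=1}^m (y_i - w x_i)^2 + \lambda |w|
      = \frac{1}{2} w^2 - \rho w + \lambda |w| + \text{const},
      \qquad \rho = \frac{1}{m}\sum_{i=1}^m x_i y_i \\
w^\ast &= \operatorname{sign}(\rho)\,\max\bigl(|\rho| - \lambda,\ 0\bigr)
\end{aligned}
```

Under these assumptions, w* is exactly zero whenever λ ≥ |ρ|. Otherwise it equals the least squares solution ρ moved toward zero by λ. This "soft-thresholding" behaviour is what lets the penalty remove weak features entirely.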

The objective function of lasso regression can be expressed as:

$$\min_{w,b} \frac{1}{2m} \sum_{i=1}^m \left(y_i - \sum_{j=1}^n w_j x_{ij} - b\right)^2 + \lambda \sum_{j=1}^n |w_j|$$
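One common way to minimize this objective is cyclic coordinate descent, which updates one coefficient at a time using a soft-thresholding step. The following is a minimal sketch under stated assumptions, not a production solver. The function names, the synthetic data, the iteration count and the λ values are all hypothetical choices made for illustration.

```python
import numpy as np


def soft_threshold(rho, lam):
    """Shrink rho toward zero by lam; anything within [-lam, lam] becomes 0."""
    return np.sign(rho) * max(abs(rho) - lam, 0.0)


def lasso_coordinate_descent(X, y, lam, n_iters=200):
    """Minimize (1/(2m)) * sum((y - Xw - b)^2) + lam * sum(|w|)."""
    m, n = X.shape
    w = np.zeros(n)
    b = y.mean()
    for _ in range(n_iters):
        for j in range(n):
            # Residual with feature j's current contribution added back
            r_j = y - b - X @ w + X[:, j] * w[j]
            rho = X[:, j] @ r_j / m
            z = X[:, j] @ X[:, j] / m
            w[j] = soft_threshold(rho, lam) / z
        # The intercept is not penalized, so it is just the mean residual
        b = np.mean(y - X @ w)
    return w, b


# Synthetic data (an assumption): only features 0, 1 and 4 affect y
rng = np.random.default_rng(0)
m, n = 200, 8
X = rng.normal(size=(m, n))
true_w = np.array([3.0, -2.0, 0.0, 0.0, 1.5, 0.0, 0.0, 0.0])
y = X @ true_w + 4.0 + 0.1 * rng.normal(size=m)

for lam in (0.01, 0.5, 10.0):
    w, b = lasso_coordinate_descent(X, y, lam)
    print(f"lambda={lam}: {np.count_nonzero(w)} non-zero coefficients")
```

Running the loop with increasing λ shows the behaviour described above: more coefficients are set to zero as the penalty grows, and for a large enough λ only the intercept remains.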

2. Application scenarios

Lasso regression can be used for feature selection, for mitigating multicollinearity, and for making model results easier to interpret. With multicollinearity, Lasso tends to keep one feature from a group of highly correlated features and shrink the others to zero. For example, in medical diagnostics, we can use Lasso regression to identify which disease risk factors have the greatest impact on predicted outcomes. In finance, we can use Lasso regression to find which factors have the greatest impact on stock price changes.

In addition, Lasso regression can also be used in conjunction with other algorithms, such as random forests, support vector machines, etc. By combining them, we can take full advantage of the feature selection capabilities of Lasso regression while gaining the benefits of other algorithms, thereby improving model performance.
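As one possible illustration of such a combination, the sketch below uses scikit-learn to let a Lasso model choose features and then trains a random forest on the selected features only. The synthetic data, the `alpha` value (scikit-learn's name for λ) and the forest settings are assumptions made for this example and would need tuning on real data.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor
from sklearn.feature_selection import SelectFromModel
from sklearn.linear_model import Lasso
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Synthetic data (an assumption): 20 features, only 0, 1 and 4 matter
rng = np.random.default_rng(0)
X = rng.normal(size=(300, 20))
y = 3.0 * X[:, 0] - 2.0 * X[:, 1] + 1.5 * X[:, 4] + 0.1 * rng.normal(size=300)

X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)

pipeline = make_pipeline(
    StandardScaler(),                     # put features on a common scale
    SelectFromModel(Lasso(alpha=0.1)),    # keep features with non-zero Lasso weights
    RandomForestRegressor(n_estimators=100, random_state=0),
)
pipeline.fit(X_train, y_train)

# Boolean mask over the original 20 features: True where Lasso kept the feature
selected = pipeline.named_steps["selectfrommodel"].get_support()
print("selected feature indices:", np.flatnonzero(selected))
print("test R^2:", pipeline.score(X_test, y_test))
```

Standardizing before the Lasso step matters because the L1 penalty treats all coefficients alike, so features on larger scales would otherwise be penalized less in effect.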

The above is the detailed content of Lasso return. For more information, please follow other related articles on the PHP Chinese website!

The 60% Problem — How AI Search Is Draining Your TrafficApr 15, 2025 am 11:28 AM

The 60% Problem — How AI Search Is Draining Your TrafficApr 15, 2025 am 11:28 AMRecent research has shown that AI Overviews can cause a whopping 15-64% decline in organic traffic, based on industry and search type. This radical change is causing marketers to reconsider their whole strategy regarding digital visibility. The New

MIT Media Lab To Put Human Flourishing At The Heart Of AI R&DApr 15, 2025 am 11:26 AM

MIT Media Lab To Put Human Flourishing At The Heart Of AI R&DApr 15, 2025 am 11:26 AMA recent report from Elon University’s Imagining The Digital Future Center surveyed nearly 300 global technology experts. The resulting report, ‘Being Human in 2035’, concluded that most are concerned that the deepening adoption of AI systems over t

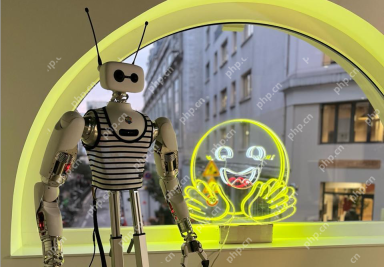

Open Source Humanoid Robots That You Can 3D Print Yourself: Hugging Face Buys Pollen RoboticsApr 15, 2025 am 11:25 AM

Open Source Humanoid Robots That You Can 3D Print Yourself: Hugging Face Buys Pollen RoboticsApr 15, 2025 am 11:25 AM“Super happy to announce that we are acquiring Pollen Robotics to bring open-source robots to the world,” Hugging Face said on X. “Since Remi Cadene joined us from Tesla, we’ve become the most widely used software platform for open robotics thanks to

Three Aspects Of Intellectual Property With AIApr 15, 2025 am 11:24 AM

Three Aspects Of Intellectual Property With AIApr 15, 2025 am 11:24 AMAnd before that happens, societies have to look more closely at the issue. First of all, we have to define human content, and bring a broadness to that category of information. You have creative works like songs, and poems and pieces of visual art.

Amazon Unleashes New AI Agents Ready To Take Over Your Daily TasksApr 15, 2025 am 11:23 AM

Amazon Unleashes New AI Agents Ready To Take Over Your Daily TasksApr 15, 2025 am 11:23 AMThis will change a lot of things as we become able to delegate more and more tasks to machines. By connecting with external applications, agents can take care of shopping, scheduling, managing travel, and many of our day-to-day interactions with digi

AI Continents Are Fast Becoming The Latest Geo-Political Power Play For AI SupremacyApr 15, 2025 am 11:17 AM

AI Continents Are Fast Becoming The Latest Geo-Political Power Play For AI SupremacyApr 15, 2025 am 11:17 AMLet’s talk about it. This analysis of an innovative AI breakthrough is part of my ongoing Forbes column coverage on the latest in AI, including identifying and explaining various impactful AI complexities (see the link here). EU Makes Bold AI Procl

Rethinking Threat Detection In A Decentralized WorldApr 15, 2025 am 11:16 AM

Rethinking Threat Detection In A Decentralized WorldApr 15, 2025 am 11:16 AMBut that’s changing—thanks in large part to a fundamental shift in how we interpret and respond to risk. The Cloud Visibility Gap Is a Threat Vector in Itself Hybrid and multi-cloud environments have become the new normal. Organizations run workloa

Chinese Robotaxis Have Government Black Boxes, Approach U.S. QualityApr 15, 2025 am 11:15 AM

Chinese Robotaxis Have Government Black Boxes, Approach U.S. QualityApr 15, 2025 am 11:15 AMA recent session at last week’s Ride AI conference in Los Angeles revealed some details about the different regulatory regime in China, and featured a report from a Chinese-American Youtuber who has taken on a mission to ride in the different vehicle

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

SublimeText3 Chinese version

Chinese version, very easy to use

SAP NetWeaver Server Adapter for Eclipse

Integrate Eclipse with SAP NetWeaver application server.

Dreamweaver Mac version

Visual web development tools

Safe Exam Browser

Safe Exam Browser is a secure browser environment for taking online exams securely. This software turns any computer into a secure workstation. It controls access to any utility and prevents students from using unauthorized resources.

MinGW - Minimalist GNU for Windows

This project is in the process of being migrated to osdn.net/projects/mingw, you can continue to follow us there. MinGW: A native Windows port of the GNU Compiler Collection (GCC), freely distributable import libraries and header files for building native Windows applications; includes extensions to the MSVC runtime to support C99 functionality. All MinGW software can run on 64-bit Windows platforms.