The Hugging Face Transformers library was developed by Hugging Face, a company founded in 2016 and dedicated to providing developers with easy-to-use natural language processing (NLP) tools. The library was first released in 2018, and since then Hugging Face has become one of the most popular and successful companies in the NLP field. The library owes its success to functionality that is powerful yet easy to use; its open-source code and active community also play a key role.

The core of the Hugging Face Transformers library is its collection of pre-trained models. These models learn the basic rules and structure of language by training on large corpora. The library includes well-known pre-trained models such as BERT, GPT-2, RoBERTa and ELECTRA, which can be loaded with a few lines of Python code and applied to a variety of NLP tasks. These pre-trained models can be used for both unsupervised and supervised learning tasks. Through fine-tuning, that is, continuing to train a pre-trained model on the dataset of a specific task, we can adapt the model to that task and data and improve its performance on it.

The design of the library makes it a powerful and flexible tool for quickly building and deploying NLP models. Tasks such as text classification, named entity recognition, machine translation and dialogue generation can all be tackled with the pre-trained models it provides, which lets us carry out NLP research and application development more efficiently.
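In the library itself, fine-tuning runs on a deep-learning framework such as PyTorch, but the core idea can be sketched in plain Python: start from existing ("pre-trained") weights instead of random ones and keep training them on a small task-specific dataset. In this minimal sketch a logistic-regression classifier stands in for the pre-trained network; the weights, the toy dataset, the learning rate and the function names (`fine_tune`, `log_loss`, `predict`) are all hypothetical and are not part of the Transformers API.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(w, b, x):
    # Probability of the positive class for feature vector x.
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)

def log_loss(w, b, data):
    # Mean binary cross-entropy over (features, label) pairs.
    total = 0.0
    for x, y in data:
        p = predict(w, b, x)
        total += -(y * math.log(p) + (1 - y) * math.log(1 - p))
    return total / len(data)

def fine_tune(w, b, data, lr=0.1, epochs=50):
    # Continue training from the given (pre-trained) weights
    # with full-batch gradient descent on the task data.
    w = list(w)  # copy so the pre-trained weights are left untouched
    n = len(data)
    for _ in range(epochs):
        grad_w = [0.0] * len(w)
        grad_b = 0.0
        for x, y in data:
            err = predict(w, b, x) - y  # d(loss)/d(logit) for cross-entropy
            for i, xi in enumerate(x):
                grad_w[i] += err * xi
            grad_b += err
        w = [wi - lr * gw / n for wi, gw in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b

# Hypothetical "pre-trained" weights and a tiny task-specific dataset.
pretrained_w, pretrained_b = [0.5, -0.2], 0.0
task_data = [([1.0, 0.0], 1), ([0.0, 1.0], 0), ([1.0, 1.0], 1), ([0.0, 0.0], 0)]

before = log_loss(pretrained_w, pretrained_b, task_data)
tuned_w, tuned_b = fine_tune(pretrained_w, pretrained_b, task_data)
after = log_loss(tuned_w, tuned_b, task_data)
print(f"loss before: {before:.3f}, after: {after:.3f}")
```

The point of starting from `pretrained_w` rather than zeros is the same as in real fine-tuning: the model begins from what it already learned and only needs a small amount of task data to adjust.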

The Transformer is a neural network architecture based on the self-attention mechanism. It has the following advantages:

(1) It can handle variable-length input sequences without fixing the input length in advance (in practice, each pre-trained model still has a maximum sequence length);

(2) Its computations can run in parallel across all positions of a sequence, which speeds up training and inference;

(3) By stacking multiple Transformer layers, the model can gradually learn different levels of semantic information, which improves its performance.
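The first two advantages follow from how self-attention is computed. The following is a minimal pure-Python sketch of scaled dot-product self-attention, assuming for simplicity that the queries, keys and values are the input vectors themselves (a real Transformer layer first multiplies the input by learned projection matrices, which are omitted here). The same function works for a sequence of any length, and each output position is computed without depending on the others, which is why real implementations can compute them all at once with matrix multiplications.

```python
import math

def softmax(scores):
    # Numerically stable softmax over a list of floats.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(x):
    """Scaled dot-product self-attention with queries = keys = values = x.

    x is a list of n token vectors, each of dimension d (n can be anything).
    Returns n output vectors, each a weighted average of the input vectors.
    Learned Q/K/V projections are omitted (assumed to be the identity).
    """
    d = len(x[0])
    outputs = []
    for q in x:  # each position is independent of the others -> parallelizable
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in x]
        weights = softmax(scores)  # attention weights over all positions
        outputs.append([sum(w * v[j] for w, v in zip(weights, x)) for j in range(d)])
    return outputs

short = [[1.0, 0.0], [0.0, 1.0]]
longer = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, -0.5]]
print(len(self_attention(short)), len(self_attention(longer)))  # 2 4
```

Stacking layers (the third advantage) amounts to feeding the output of one such layer, plus its learned projections, feed-forward block and residual connections, into the next.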

Therefore, models based on the Transformer architecture perform well on NLP tasks such as machine translation, text classification and named entity recognition.

The Hugging Face platform provides a large number of pre-trained models based on the Transformer architecture, including BERT, GPT, RoBERTa and DistilBERT. These models perform well across many NLP tasks, and several of them achieved state-of-the-art results on standard benchmarks when they were released. They share the following characteristics:

(1) They are pre-trained on large-scale corpora, so they learn general-purpose language representations;

(2) They can be fine-tuned to meet the needs of specific tasks;

(3) They come with an out-of-the-box API that lets users quickly build and deploy models.

In addition to pre-trained models, the Hugging Face Transformers library provides a set of tools that make it easier for developers to use and optimize models, including tokenizers, a `Trainer` class for running training loops, and optimization utilities. It also offers an easy-to-use API and documentation to help developers get started quickly.
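To give a feel for what a tokenizer does: BERT's tokenizer uses WordPiece, which splits each word into subword units by greedy longest-match-first against a fixed vocabulary, marking word-internal pieces with a `##` prefix. The following is a minimal pure-Python sketch of that matching step only; the function name and the toy vocabulary are hypothetical, and the library's real tokenizers also handle lowercasing, punctuation splitting, special tokens and a maximum word length.

```python
def wordpiece_tokenize(word, vocab, unk_token="[UNK]"):
    """Split one word into subword tokens by greedy longest-match-first.

    Pieces that continue a word carry the "##" prefix, as in BERT's vocabulary.
    Returns [unk_token] if the word cannot be fully covered by the vocabulary.
    """
    tokens = []
    start = 0
    while start < len(word):
        end = len(word)
        piece = None
        while start < end:  # try the longest candidate first, then shrink it
            candidate = word[start:end]
            if start > 0:
                candidate = "##" + candidate
            if candidate in vocab:
                piece = candidate
                break
            end -= 1
        if piece is None:
            return [unk_token]  # no vocabulary entry matches at this position
        tokens.append(piece)
        start = end
    return tokens

vocab = {"un", "##aff", "##able", "play", "##ing"}  # hypothetical toy vocabulary
print(wordpiece_tokenize("unaffable", vocab))  # ['un', '##aff', '##able']
print(wordpiece_tokenize("playing", vocab))    # ['play', '##ing']
print(wordpiece_tokenize("xyz", vocab))        # ['[UNK]']
```

Subword splitting lets a fixed-size vocabulary cover rare or unseen words by composing them from known pieces.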

Transformer models have a wide range of applications in NLP, such as text classification, sentiment analysis, machine translation and question answering. BERT performs particularly well on language-understanding tasks, including text classification, named entity recognition and judging the relationship between two sentences. GPT models are better suited to generative tasks such as machine translation and dialogue generation. For multilingual work, XLM-RoBERTa, a multilingual variant of RoBERTa, performs strongly on tasks such as cross-lingual text classification. In addition, the Transformer models on Hugging Face can be used to generate many kinds of text, such as dialogues, summaries and news articles.

The above is the detailed content of "What is the Hugging Face Transformer?". For more information, please follow other related articles on the PHP Chinese website!

An AI Space Company Is BornMay 12, 2025 am 11:07 AM

An AI Space Company Is BornMay 12, 2025 am 11:07 AMThis article showcases how AI is revolutionizing the space industry, using Tomorrow.io as a prime example. Unlike established space companies like SpaceX, which weren't built with AI at their core, Tomorrow.io is an AI-native company. Let's explore

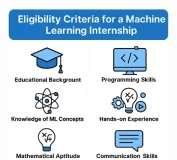

10 Machine Learning Internships in India (2025)May 12, 2025 am 10:47 AM

10 Machine Learning Internships in India (2025)May 12, 2025 am 10:47 AMLand Your Dream Machine Learning Internship in India (2025)! For students and early-career professionals, a machine learning internship is the perfect launchpad for a rewarding career. Indian companies across diverse sectors – from cutting-edge GenA

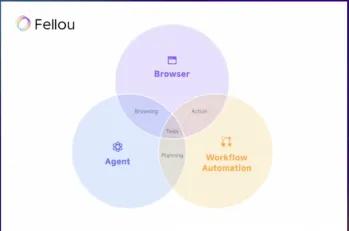

Try Fellou AI and Say Goodbye to Google and ChatGPTMay 12, 2025 am 10:26 AM

Try Fellou AI and Say Goodbye to Google and ChatGPTMay 12, 2025 am 10:26 AMThe landscape of online browsing has undergone a significant transformation in the past year. This shift began with enhanced, personalized search results from platforms like Perplexity and Copilot, and accelerated with ChatGPT's integration of web s

Personal Hacking Will Be A Pretty Fierce BearMay 11, 2025 am 11:09 AM

Personal Hacking Will Be A Pretty Fierce BearMay 11, 2025 am 11:09 AMCyberattacks are evolving. Gone are the days of generic phishing emails. The future of cybercrime is hyper-personalized, leveraging readily available online data and AI to craft highly targeted attacks. Imagine a scammer who knows your job, your f

Pope Leo XIV Reveals How AI Influenced His Name ChoiceMay 11, 2025 am 11:07 AM

Pope Leo XIV Reveals How AI Influenced His Name ChoiceMay 11, 2025 am 11:07 AMIn his inaugural address to the College of Cardinals, Chicago-born Robert Francis Prevost, the newly elected Pope Leo XIV, discussed the influence of his namesake, Pope Leo XIII, whose papacy (1878-1903) coincided with the dawn of the automobile and

FastAPI-MCP Tutorial for Beginners and Experts - Analytics VidhyaMay 11, 2025 am 10:56 AM

FastAPI-MCP Tutorial for Beginners and Experts - Analytics VidhyaMay 11, 2025 am 10:56 AMThis tutorial demonstrates how to integrate your Large Language Model (LLM) with external tools using the Model Context Protocol (MCP) and FastAPI. We'll build a simple web application using FastAPI and convert it into an MCP server, enabling your L

Dia-1.6B TTS : Best Text-to-Dialogue Generation Model - Analytics VidhyaMay 11, 2025 am 10:27 AM

Dia-1.6B TTS : Best Text-to-Dialogue Generation Model - Analytics VidhyaMay 11, 2025 am 10:27 AMExplore Dia-1.6B: A groundbreaking text-to-speech model developed by two undergraduates with zero funding! This 1.6 billion parameter model generates remarkably realistic speech, including nonverbal cues like laughter and sneezes. This article guide

3 Ways AI Can Make Mentorship More Meaningful Than EverMay 10, 2025 am 11:17 AM

3 Ways AI Can Make Mentorship More Meaningful Than EverMay 10, 2025 am 11:17 AMI wholeheartedly agree. My success is inextricably linked to the guidance of my mentors. Their insights, particularly regarding business management, formed the bedrock of my beliefs and practices. This experience underscores my commitment to mentor

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

SublimeText3 English version

Recommended: Win version, supports code prompts!

EditPlus Chinese cracked version

Small size, syntax highlighting, does not support code prompt function

ZendStudio 13.5.1 Mac

Powerful PHP integrated development environment

Safe Exam Browser

Safe Exam Browser is a secure browser environment for taking online exams securely. This software turns any computer into a secure workstation. It controls access to any utility and prevents students from using unauthorized resources.

VSCode Windows 64-bit Download

A free and powerful IDE editor launched by Microsoft