Technology peripherals

Technology peripherals AI

AI How to implement machine learning to convert text into images with sample code?

How to implement machine learning to convert text into images with sample code?

Generative adversarial networks (GAN) are widely used in machine learning to generate text to images. This network structure consists of a generator that converts random noise into images, and a discriminator that works to distinguish between real images and images generated by the generator. Through continuous adversarial training, the generator is able to gradually generate realistic images that are difficult to distinguish from the discriminator. This technology has broad application prospects in image generation, image enhancement and other fields.

A simple example is using GAN to generate images of handwritten digits. The following is sample code in PyTorch:

import torch

import torch.nn as nn

import torch.optim as optim

from torchvision import datasets, transforms

from torchvision.utils import save_image

from torch.autograd import Variable

# 定义生成器网络

class Generator(nn.Module):

def __init__(self):

super(Generator, self).__init__()

self.fc = nn.Linear(100, 256)

self.main = nn.Sequential(

nn.ConvTranspose2d(256, 128, 5, stride=2, padding=2),

nn.BatchNorm2d(128),

nn.ReLU(True),

nn.ConvTranspose2d(128, 64, 5, stride=2, padding=2),

nn.BatchNorm2d(64),

nn.ReLU(True),

nn.ConvTranspose2d(64, 1, 4, stride=2, padding=1),

nn.Tanh()

)

def forward(self, x):

x = self.fc(x)

x = x.view(-1, 256, 1, 1)

x = self.main(x)

return x

# 定义判别器网络

class Discriminator(nn.Module):

def __init__(self):

super(Discriminator, self).__init__()

self.main = nn.Sequential(

nn.Conv2d(1, 64, 4, stride=2, padding=1),

nn.LeakyReLU(0.2, inplace=True),

nn.Conv2d(64, 128, 4, stride=2, padding=1),

nn.BatchNorm2d(128),

nn.LeakyReLU(0.2, inplace=True),

nn.Conv2d(128, 256, 4, stride=2, padding=1),

nn.BatchNorm2d(256),

nn.LeakyReLU(0.2, inplace=True),

nn.Conv2d(256, 1, 4, stride=1, padding=0),

nn.Sigmoid()

)

def forward(self, x):

x = self.main(x)

return x.view(-1, 1)

# 定义训练函数

def train(generator, discriminator, dataloader, optimizer_G, optimizer_D, device):

criterion = nn.BCELoss()

real_label = 1

fake_label = 0

for epoch in range(200):

for i, (data, _) in enumerate(dataloader):

# 训练判别器

discriminator.zero_grad()

real_data = data.to(device)

batch_size = real_data.size(0)

label = torch.full((batch_size,), real_label, device=device)

output = discriminator(real_data).view(-1)

errD_real = criterion(output, label)

errD_real.backward()

D_x = output.mean().item()

noise = torch.randn(batch_size, 100, device=device)

fake_data = generator(noise)

label.fill_(fake_label)

output = discriminator(fake_data.detach()).view(-1)

errD_fake = criterion(output, label)

errD_fake.backward()

D_G_z1 = output.mean().item()

errD = errD_real + errD_fake

optimizer_D.step()

# 训练生成器

generator.zero_grad()

label.fill_(real_label)

output = discriminator(fake_data).view(-1)

errG = criterion(output, label)

errG.backward()

D_G_z2 = output.mean().item()

optimizer_G.step()

if i % 100 == 0:

print('[%d/%d][%d/%d] Loss_D: %.4f Loss_G: %.4f D(x): %.4f D(G(z)): %.4f / %.4f'

% (epoch+1, 200, i, len(dataloader),

errD.item(), errG.item(), D_x, D_G_z1, D_G_z2))

# 保存生成的图像

fake = generator(fixed_noise)

save_image(fake.detach(), 'generated_images_%03d.png' % epoch, normalize=True)

# 加载MNIST数据集

transform = transforms.Compose([

transforms.ToTensor(),

transforms.Normalize((0.5,), (0.5,))

])

dataset = datasets.MNIST(root='./数据集', train=True, transform=transform, download=True)

dataloader = torch.utils.data.DataLoader(dataset, batch_size=64, shuffle=True)

# 定义设备

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

# 初始化生成器和判别器

generator = Generator().to(device)

discriminator = Discriminator().to(device)

# 定义优化器

optimizer_G = optim.Adam(generator.parameters(), lr=0.0002, betas=(0.5, 0.999))

optimizer_D = optim.Adam(discriminator.parameters(), lr=0.0002, betas=(0.5, 0.999))

# 定义固定噪声用于保存生成的图像

fixed_noise = torch.randn(64, 100, device=device)

# 开始训练

train(generator, discriminator, dataloader, optimizer_G, optimizer_D, device)Running this code will train a GAN model to generate images of handwritten digits and save the generated images.

The above is the detailed content of How to implement machine learning to convert text into images with sample code?. For more information, please follow other related articles on the PHP Chinese website!

解读CRISP-ML(Q):机器学习生命周期流程Apr 08, 2023 pm 01:21 PM

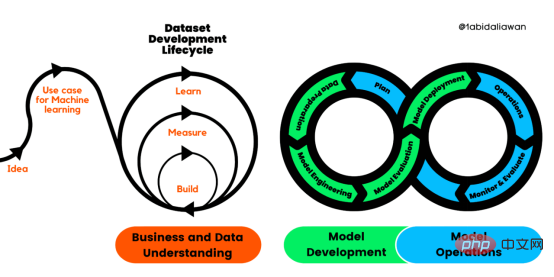

解读CRISP-ML(Q):机器学习生命周期流程Apr 08, 2023 pm 01:21 PM译者 | 布加迪审校 | 孙淑娟目前,没有用于构建和管理机器学习(ML)应用程序的标准实践。机器学习项目组织得不好,缺乏可重复性,而且从长远来看容易彻底失败。因此,我们需要一套流程来帮助自己在整个机器学习生命周期中保持质量、可持续性、稳健性和成本管理。图1. 机器学习开发生命周期流程使用质量保证方法开发机器学习应用程序的跨行业标准流程(CRISP-ML(Q))是CRISP-DM的升级版,以确保机器学习产品的质量。CRISP-ML(Q)有六个单独的阶段:1. 业务和数据理解2. 数据准备3. 模型

2023年机器学习的十大概念和技术Apr 04, 2023 pm 12:30 PM

2023年机器学习的十大概念和技术Apr 04, 2023 pm 12:30 PM机器学习是一个不断发展的学科,一直在创造新的想法和技术。本文罗列了2023年机器学习的十大概念和技术。 本文罗列了2023年机器学习的十大概念和技术。2023年机器学习的十大概念和技术是一个教计算机从数据中学习的过程,无需明确的编程。机器学习是一个不断发展的学科,一直在创造新的想法和技术。为了保持领先,数据科学家应该关注其中一些网站,以跟上最新的发展。这将有助于了解机器学习中的技术如何在实践中使用,并为自己的业务或工作领域中的可能应用提供想法。2023年机器学习的十大概念和技术:1. 深度神经网

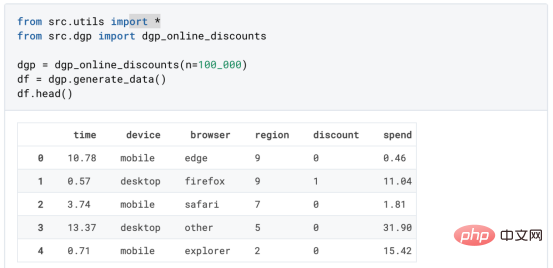

基于因果森林算法的决策定位应用Apr 08, 2023 am 11:21 AM

基于因果森林算法的决策定位应用Apr 08, 2023 am 11:21 AM译者 | 朱先忠审校 | 孙淑娟在我之前的博客中,我们已经了解了如何使用因果树来评估政策的异质处理效应。如果你还没有阅读过,我建议你在阅读本文前先读一遍,因为我们在本文中认为你已经了解了此文中的部分与本文相关的内容。为什么是异质处理效应(HTE:heterogenous treatment effects)呢?首先,对异质处理效应的估计允许我们根据它们的预期结果(疾病、公司收入、客户满意度等)选择提供处理(药物、广告、产品等)的用户(患者、用户、客户等)。换句话说,估计HTE有助于我

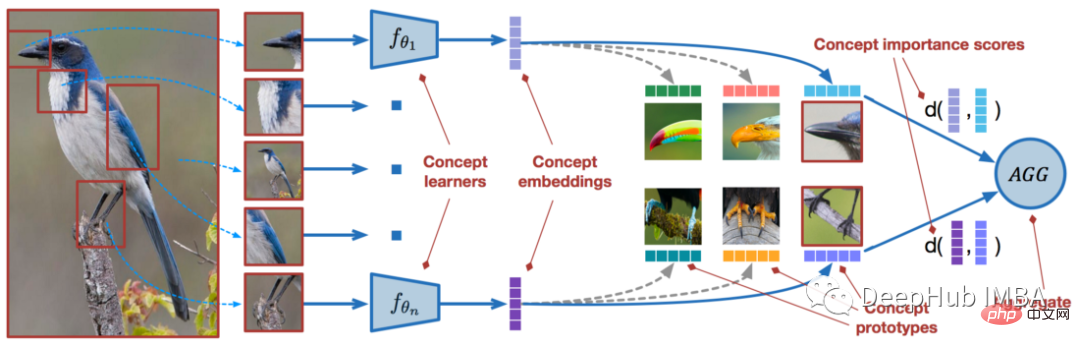

使用PyTorch进行小样本学习的图像分类Apr 09, 2023 am 10:51 AM

使用PyTorch进行小样本学习的图像分类Apr 09, 2023 am 10:51 AM近年来,基于深度学习的模型在目标检测和图像识别等任务中表现出色。像ImageNet这样具有挑战性的图像分类数据集,包含1000种不同的对象分类,现在一些模型已经超过了人类水平上。但是这些模型依赖于监督训练流程,标记训练数据的可用性对它们有重大影响,并且模型能够检测到的类别也仅限于它们接受训练的类。由于在训练过程中没有足够的标记图像用于所有类,这些模型在现实环境中可能不太有用。并且我们希望的模型能够识别它在训练期间没有见到过的类,因为几乎不可能在所有潜在对象的图像上进行训练。我们将从几个样本中学习

LazyPredict:为你选择最佳ML模型!Apr 06, 2023 pm 08:45 PM

LazyPredict:为你选择最佳ML模型!Apr 06, 2023 pm 08:45 PM本文讨论使用LazyPredict来创建简单的ML模型。LazyPredict创建机器学习模型的特点是不需要大量的代码,同时在不修改参数的情况下进行多模型拟合,从而在众多模型中选出性能最佳的一个。 摘要本文讨论使用LazyPredict来创建简单的ML模型。LazyPredict创建机器学习模型的特点是不需要大量的代码,同时在不修改参数的情况下进行多模型拟合,从而在众多模型中选出性能最佳的一个。本文包括的内容如下:简介LazyPredict模块的安装在分类模型中实施LazyPredict

Mango:基于Python环境的贝叶斯优化新方法Apr 08, 2023 pm 12:44 PM

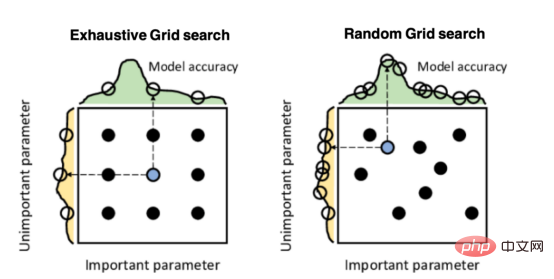

Mango:基于Python环境的贝叶斯优化新方法Apr 08, 2023 pm 12:44 PM译者 | 朱先忠审校 | 孙淑娟引言模型超参数(或模型设置)的优化可能是训练机器学习算法中最重要的一步,因为它可以找到最小化模型损失函数的最佳参数。这一步对于构建不易过拟合的泛化模型也是必不可少的。优化模型超参数的最著名技术是穷举网格搜索和随机网格搜索。在第一种方法中,搜索空间被定义为跨越每个模型超参数的域的网格。通过在网格的每个点上训练模型来获得最优超参数。尽管网格搜索非常容易实现,但它在计算上变得昂贵,尤其是当要优化的变量数量很大时。另一方面,随机网格搜索是一种更快的优化方法,可以提供更好的

人工智能自动获取知识和技能,实现自我完善的过程是什么Aug 24, 2022 am 11:57 AM

人工智能自动获取知识和技能,实现自我完善的过程是什么Aug 24, 2022 am 11:57 AM实现自我完善的过程是“机器学习”。机器学习是人工智能核心,是使计算机具有智能的根本途径;它使计算机能模拟人的学习行为,自动地通过学习来获取知识和技能,不断改善性能,实现自我完善。机器学习主要研究三方面问题:1、学习机理,人类获取知识、技能和抽象概念的天赋能力;2、学习方法,对生物学习机理进行简化的基础上,用计算的方法进行再现;3、学习系统,能够在一定程度上实现机器学习的系统。

超参数优化比较之网格搜索、随机搜索和贝叶斯优化Apr 04, 2023 pm 12:05 PM

超参数优化比较之网格搜索、随机搜索和贝叶斯优化Apr 04, 2023 pm 12:05 PM本文将详细介绍用来提高机器学习效果的最常见的超参数优化方法。 译者 | 朱先忠审校 | 孙淑娟简介通常,在尝试改进机器学习模型时,人们首先想到的解决方案是添加更多的训练数据。额外的数据通常是有帮助(在某些情况下除外)的,但生成高质量的数据可能非常昂贵。通过使用现有数据获得最佳模型性能,超参数优化可以节省我们的时间和资源。顾名思义,超参数优化是为机器学习模型确定最佳超参数组合以满足优化函数(即,给定研究中的数据集,最大化模型的性能)的过程。换句话说,每个模型都会提供多个有关选项的调整“按钮

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

SAP NetWeaver Server Adapter for Eclipse

Integrate Eclipse with SAP NetWeaver application server.

MinGW - Minimalist GNU for Windows

This project is in the process of being migrated to osdn.net/projects/mingw, you can continue to follow us there. MinGW: A native Windows port of the GNU Compiler Collection (GCC), freely distributable import libraries and header files for building native Windows applications; includes extensions to the MSVC runtime to support C99 functionality. All MinGW software can run on 64-bit Windows platforms.

VSCode Windows 64-bit Download

A free and powerful IDE editor launched by Microsoft

MantisBT

Mantis is an easy-to-deploy web-based defect tracking tool designed to aid in product defect tracking. It requires PHP, MySQL and a web server. Check out our demo and hosting services.

mPDF

mPDF is a PHP library that can generate PDF files from UTF-8 encoded HTML. The original author, Ian Back, wrote mPDF to output PDF files "on the fly" from his website and handle different languages. It is slower than original scripts like HTML2FPDF and produces larger files when using Unicode fonts, but supports CSS styles etc. and has a lot of enhancements. Supports almost all languages, including RTL (Arabic and Hebrew) and CJK (Chinese, Japanese and Korean). Supports nested block-level elements (such as P, DIV),