In machine learning, normalization is a common data preprocessing method. Its main purpose is to eliminate differences in scale between features by mapping them to a common range. Such differences arise because features have different units and value ranges, for example age in years versus income in currency units. These differences can affect the performance and stability of a model, and scaling every feature into the same interval removes their effect.

Commonly used normalization methods include min-max normalization and Z-score normalization. Min-max normalization scales the data to the range [0, 1] by linearly transforming each feature so that its minimum value maps to 0 and its maximum value maps to 1. Z-score normalization (standardization) subtracts the mean and divides by the standard deviation, which gives the data a mean of 0 and a standard deviation of 1. It does not change the shape of the distribution, so the result follows a standard normal distribution only if the original data were normally distributed.

Normalization is widely used in machine learning. In feature engineering, it brings the value ranges of different features into the same interval. In image processing, pixel values are often scaled to the range [0, 1] to simplify subsequent processing and analysis. In natural language processing, once text has been converted into numerical vectors (for example, word counts or TF-IDF weights), those vectors are often normalized, for instance to unit length, so that documents of different lengths can be compared. In each case, normalization gives the data similar scales, which prevents any single feature from biasing the model merely because of its units and improves the reliability of the results.
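As a small illustration of the image-processing case, the sketch below assumes an 8-bit grayscale image stored as a NumPy array (the pixel values are made up). Dividing by 255 is min-max normalization with the fixed bounds 0 and 255, rather than the image's own minimum and maximum.

```python
import numpy as np

# Hypothetical 2x2 8-bit grayscale image; a real image would be loaded from disk.
image = np.array([[0, 64], [128, 255]], dtype=np.uint8)

# Convert to float32 explicitly (a common dtype for model inputs),
# then map the fixed 8-bit range [0, 255] onto [0, 1].
scaled = image.astype(np.float32) / 255.0

print(scaled)
```

Using fixed bounds keeps the scaling identical across images, so the same pixel value always maps to the same normalized value.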

The purpose and significance of normalization processing

1. Eliminate scale differences between features

The value ranges of different features can differ greatly, which gives features with large values a disproportionately large influence on the training results. Normalization scales all feature values into the same interval and removes the effect of these scale differences. This keeps each feature's contribution to the model relatively balanced and improves the stability and accuracy of training.
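A distance computation, as used by methods such as k-nearest neighbors, makes this imbalance easy to see. The sketch below uses three hypothetical people described by age and income, and assumes the feature ranges (age 20 to 60, income 20,000 to 100,000) were taken from some training set. On the raw values the distance is driven almost entirely by income.

```python
import numpy as np

# Hypothetical people described by (age in years, income in currency units).
a = np.array([25.0, 50_000.0])
b = np.array([60.0, 50_500.0])  # very different age, similar income
c = np.array([26.0, 52_000.0])  # similar age, somewhat different income

# Assumed per-feature minimum and maximum, e.g. taken from a training set.
x_min = np.array([20.0, 20_000.0])
x_max = np.array([60.0, 100_000.0])

def min_max(x):
    """Min-max normalize a point using the assumed feature ranges."""
    return (x - x_min) / (x_max - x_min)

def dist(p, q):
    """Euclidean distance between two points."""
    return float(np.linalg.norm(p - q))

print("raw:    d(a, b) =", dist(a, b), " d(a, c) =", dist(a, c))
print("scaled: d(a, b) =", dist(min_max(a), min_max(b)),
      " d(a, c) =", dist(min_max(a), min_max(c)))
```

On the raw values, a appears closer to b even though their ages differ by 35 years, because a 500-unit income gap outweighs everything else. After scaling, both features contribute, and a is closer to c.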

2. Improve the convergence speed of the model

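For models trained with gradient-based optimization, such as logistic regression and linear support vector machines, normalization has an important effect on how quickly and how reliably training converges. When features have very different scales, the loss surface becomes elongated. The learning rate must then be small enough for the largest-scale feature, so progress along the smaller-scale features is very slow, and the optimizer may oscillate or stop before reaching a good solution. Normalization makes the loss surface better conditioned, so gradient descent reaches the optimal solution in far fewer steps.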
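The sketch below illustrates the convergence effect. It makes several assumptions. It uses a linear model with mean squared error instead of logistic regression because that keeps the code short. The data are synthetic. The step size is set to 1/L, where L is the largest eigenvalue of the Hessian, so that both runs are stable and only the conditioning differs. The helper names `fit_gd`, `z_score` and `with_bias` are hypothetical.

```python
import numpy as np

def fit_gd(X, y, max_iter=10_000, tol=1e-6):
    """Batch gradient descent on the mean squared error of a linear model.

    Returns (weights, iterations used). The step size is 1/L, where L is the
    largest eigenvalue of the Hessian 2/n * X^T X, which keeps the updates
    stable whatever the feature scales are.
    """
    n = len(y)
    lr = 1.0 / np.linalg.eigvalsh(2.0 / n * X.T @ X).max()
    w = np.zeros(X.shape[1])
    for i in range(1, max_iter + 1):
        grad = 2.0 / n * X.T @ (X @ w - y)
        if np.linalg.norm(grad) < tol:  # gradient small enough: converged
            return w, i
        w -= lr * grad
    return w, max_iter  # iteration budget exhausted

def z_score(v):
    """Standardize a 1-D feature to mean 0 and standard deviation 1."""
    return (v - v.mean()) / v.std()

def with_bias(*cols):
    """Stack feature columns and prepend a column of ones for the intercept."""
    return np.column_stack([np.ones_like(cols[0]), *cols])

# Synthetic data (an assumption for illustration): two features on very different scales.
rng = np.random.default_rng(0)
age = rng.uniform(20, 60, size=200)
income = rng.uniform(20_000, 100_000, size=200)
y = 0.5 * age + 0.0001 * income + rng.normal(0, 1, size=200)

_, iters_raw = fit_gd(with_bias(age, income), y)
_, iters_scaled = fit_gd(with_bias(z_score(age), z_score(income)), y)
print("raw features:         ", iters_raw, "iterations")
print("standardized features:", iters_scaled, "iterations")
```

On this data, the raw-feature run is expected to exhaust its 10,000-iteration budget, because the step size allowed by the income feature is far too small for the other directions. The standardized run converges after far fewer iterations.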

3. Enhance the stability and accuracy of the model

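In some datasets, features are strongly correlated with each other, which can contribute to overfitting. Min-max and Z-score normalization are linear transformations, so they do not change the correlation between features. Reducing correlation requires other techniques, such as feature selection or dimensionality reduction. What normalization does contribute is a common scale. With features on the same scale, regularization penalties such as L1 or L2 weigh every feature's coefficient evenly, instead of effectively penalizing small-scale features more, and this helps make the model more stable and accurate.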

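4. Easier model interpretation and visualization

Normalized features share a common scale, so they can be plotted on the same axes and compared directly. In linear models, the learned coefficients also become comparable across features. Both properties help when interpreting the model and presenting the results.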

In short, normalization plays an important role in machine learning. It can improve the performance and stability of a model and also makes the data easier to interpret and visualize.

Commonly used normalization methods in machine learning

In machine learning, we usually use the following two normalization methods:

Min-max normalization: This method is also called dispersion standardization. Its basic idea is to map the original data linearly onto the range [0, 1]. The formula is as follows:

x_{new}=\frac{x-x_{min}}{x_{max}-x_{min}}

Where x is the original value of a feature, and x_{min} and x_{max} are the minimum and maximum values of that feature in the dataset.
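A minimal NumPy sketch of the formula above, applied column by column (one column per feature). The function name `min_max_normalize` is hypothetical. Mapping a constant column to 0 is an assumption made here to avoid dividing by zero, because the formula itself is undefined when x_{max} = x_{min}.

```python
import numpy as np

def min_max_normalize(X):
    """Scale each column of X to [0, 1] using x_new = (x - x_min) / (x_max - x_min)."""
    X = np.asarray(X, dtype=float)
    x_min = X.min(axis=0)
    x_max = X.max(axis=0)
    span = x_max - x_min
    # Assumption: a constant column (x_max == x_min) is mapped to 0 instead of dividing by zero.
    span = np.where(span == 0, 1.0, span)
    return (X - x_min) / span

# Two features on very different scales.
X = np.array([[1.0, 200.0],
              [2.0, 300.0],
              [3.0, 400.0]])
print(min_max_normalize(X))
```

Both columns map to 0, 0.5 and 1 even though their original ranges differ by a factor of 100.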

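Z-score normalization: This method is also called standard deviation standardization. Its basic idea is to shift and rescale the original data so that it has a mean of 0 and a standard deviation of 1. The shape of the distribution is unchanged, so the result follows a standard normal distribution only if the original data were normally distributed. The formula is as follows: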

x_{new}=\frac{x-\mu}{\sigma}

Where x is the original value of a feature, and \mu and \sigma are the mean and standard deviation of that feature in the dataset.
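A minimal NumPy sketch of the Z-score formula, again applied per column. It assumes the population standard deviation (`ddof=0`, NumPy's default). Using the sample standard deviation (`ddof=1`) is an equally common convention and gives slightly different values. The name `z_score_normalize` and the handling of constant columns are assumptions.

```python
import numpy as np

def z_score_normalize(X):
    """Standardize each column of X: x_new = (x - mu) / sigma."""
    X = np.asarray(X, dtype=float)
    mu = X.mean(axis=0)
    sigma = X.std(axis=0)  # population standard deviation (ddof=0)
    # Assumption: a constant column (sigma == 0) is only centered, not divided by zero.
    sigma = np.where(sigma == 0, 1.0, sigma)
    return (X - mu) / sigma

X = np.array([[1.0, 10.0],
              [2.0, 20.0],
              [3.0, 30.0]])
Z = z_score_normalize(X)
print(Z.mean(axis=0), Z.std(axis=0))  # approximately 0 and exactly/approximately 1 per column
```

Because the second column is the first multiplied by 10, both columns standardize to the same values. This shows that Z-score normalization removes differences in units and scale.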

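Both methods remove scale differences between features and can improve the stability and accuracy of a model. In practice, we choose between them based on the distribution of the data and the requirements of the model. For example, min-max normalization produces a bounded range, which suits inputs such as pixel intensities. However, it is sensitive to outliers, because a single extreme value sets x_{min} or x_{max} and compresses all other values into a narrow band. When the data contain outliers, Z-score normalization is often preferred, although its mean and standard deviation are also influenced by extreme values.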
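To see why the distribution of the data matters when choosing a method, the short sketch below applies min-max normalization to made-up values that contain one outlier.

```python
import numpy as np

values = np.array([1.0, 2.0, 3.0, 4.0, 100.0])  # made-up data; 100 is the outlier

# Min-max normalization: the outlier alone determines x_max.
scaled = (values - values.min()) / (values.max() - values.min())
print(np.round(scaled, 4))
```

The four ordinary values end up between 0 and about 0.03, while the outlier alone occupies the top of the [0, 1] range. As a result, differences among the ordinary values become hard for a model to use.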

The above is the detailed content of Why use normalization in machine learning. For more information, please follow other related articles on the PHP Chinese website!

Cooking Up Innovation: How Artificial Intelligence Is Transforming Food ServiceApr 12, 2025 pm 12:09 PM

Cooking Up Innovation: How Artificial Intelligence Is Transforming Food ServiceApr 12, 2025 pm 12:09 PMAI Augmenting Food Preparation While still in nascent use, AI systems are being increasingly used in food preparation. AI-driven robots are used in kitchens to automate food preparation tasks, such as flipping burgers, making pizzas, or assembling sa

Comprehensive Guide on Python Namespaces & Variable ScopesApr 12, 2025 pm 12:00 PM

Comprehensive Guide on Python Namespaces & Variable ScopesApr 12, 2025 pm 12:00 PMIntroduction Understanding the namespaces, scopes, and behavior of variables in Python functions is crucial for writing efficiently and avoiding runtime errors or exceptions. In this article, we’ll delve into various asp

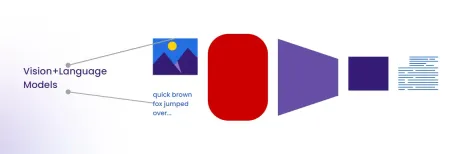

A Comprehensive Guide to Vision Language Models (VLMs)Apr 12, 2025 am 11:58 AM

A Comprehensive Guide to Vision Language Models (VLMs)Apr 12, 2025 am 11:58 AMIntroduction Imagine walking through an art gallery, surrounded by vivid paintings and sculptures. Now, what if you could ask each piece a question and get a meaningful answer? You might ask, “What story are you telling?

MediaTek Boosts Premium Lineup With Kompanio Ultra And Dimensity 9400Apr 12, 2025 am 11:52 AM

MediaTek Boosts Premium Lineup With Kompanio Ultra And Dimensity 9400Apr 12, 2025 am 11:52 AMContinuing the product cadence, this month MediaTek has made a series of announcements, including the new Kompanio Ultra and Dimensity 9400 . These products fill in the more traditional parts of MediaTek’s business, which include chips for smartphone

This Week In AI: Walmart Sets Fashion Trends Before They Ever HappenApr 12, 2025 am 11:51 AM

This Week In AI: Walmart Sets Fashion Trends Before They Ever HappenApr 12, 2025 am 11:51 AM#1 Google launched Agent2Agent The Story: It’s Monday morning. As an AI-powered recruiter you work smarter, not harder. You log into your company’s dashboard on your phone. It tells you three critical roles have been sourced, vetted, and scheduled fo

Generative AI Meets PsychobabbleApr 12, 2025 am 11:50 AM

Generative AI Meets PsychobabbleApr 12, 2025 am 11:50 AMI would guess that you must be. We all seem to know that psychobabble consists of assorted chatter that mixes various psychological terminology and often ends up being either incomprehensible or completely nonsensical. All you need to do to spew fo

The Prototype: Scientists Turn Paper Into PlasticApr 12, 2025 am 11:49 AM

The Prototype: Scientists Turn Paper Into PlasticApr 12, 2025 am 11:49 AMOnly 9.5% of plastics manufactured in 2022 were made from recycled materials, according to a new study published this week. Meanwhile, plastic continues to pile up in landfills–and ecosystems–around the world. But help is on the way. A team of engin

The Rise Of The AI Analyst: Why This Could Be The Most Important Job In The AI RevolutionApr 12, 2025 am 11:41 AM

The Rise Of The AI Analyst: Why This Could Be The Most Important Job In The AI RevolutionApr 12, 2025 am 11:41 AMMy recent conversation with Andy MacMillan, CEO of leading enterprise analytics platform Alteryx, highlighted this critical yet underappreciated role in the AI revolution. As MacMillan explains, the gap between raw business data and AI-ready informat

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

DVWA

Damn Vulnerable Web App (DVWA) is a PHP/MySQL web application that is very vulnerable. Its main goals are to be an aid for security professionals to test their skills and tools in a legal environment, to help web developers better understand the process of securing web applications, and to help teachers/students teach/learn in a classroom environment Web application security. The goal of DVWA is to practice some of the most common web vulnerabilities through a simple and straightforward interface, with varying degrees of difficulty. Please note that this software

SublimeText3 Chinese version

Chinese version, very easy to use

mPDF

mPDF is a PHP library that can generate PDF files from UTF-8 encoded HTML. The original author, Ian Back, wrote mPDF to output PDF files "on the fly" from his website and handle different languages. It is slower than original scripts like HTML2FPDF and produces larger files when using Unicode fonts, but supports CSS styles etc. and has a lot of enhancements. Supports almost all languages, including RTL (Arabic and Hebrew) and CJK (Chinese, Japanese and Korean). Supports nested block-level elements (such as P, DIV),

EditPlus Chinese cracked version

Small size, syntax highlighting, does not support code prompt function