Technology peripherals

Technology peripherals AI

AI Facial Expression Analysis: Integrating Multimodal Information with Transformer

Facial Expression Analysis: Integrating Multimodal Information with Transformer

Paper Introduction

Human emotional behavior analysis has attracted much attention in human-computer interaction (HCI). This article is intended to introduce the paper we submitted to CVPR 2022 Affective Behavior Analysis in-the-wild (ABAW). To fully exploit emotional knowledge, we employ multi-modal features including spoken language, speech prosody, and facial expressions extracted from video clips in the Aff-Wild2 dataset. Based on these features, we propose a transformer-based multi-modal framework for action unit detection and expression recognition. This framework contributes to a more comprehensive understanding of human emotional behavior and provides new research directions in the field of human-computer interaction.

For the current frame image, we first encode it to extract static visual features. At the same time, we also use sliding windows to crop adjacent frames and extract three multi-modal features from image, audio and text sequences. Next, we introduce a transformer-based fusion module to fuse static visual features and dynamic multi-modal features. The cross-attention module in this fusion module helps focus the output integrated features on key parts that are helpful for downstream detection tasks. In order to further improve model performance, we adopted some data balancing techniques, data augmentation techniques and post-processing methods. In the official tests of ABAW3 Competition, our model ranked first on both EXPR and AU tracks. We demonstrate the effectiveness of our proposed method through extensive quantitative evaluation and ablation studies on the Aff-Wild2 dataset.

Paper link

https://arxiv.org/abs/2203.12367

The above is the detailed content of Facial Expression Analysis: Integrating Multimodal Information with Transformer. For more information, please follow other related articles on the PHP Chinese website!

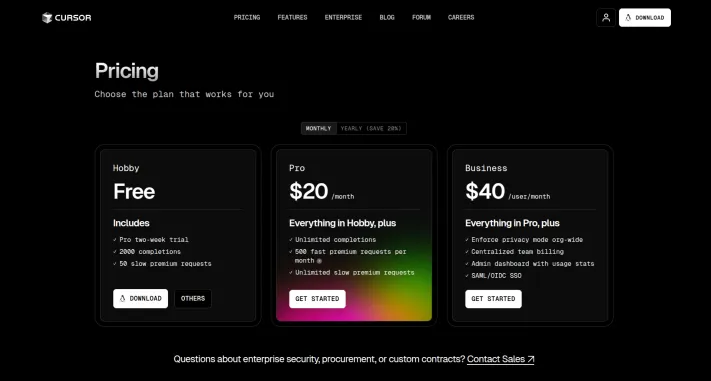

I Tried Vibe Coding with Cursor AI and It's Amazing!Mar 20, 2025 pm 03:34 PM

I Tried Vibe Coding with Cursor AI and It's Amazing!Mar 20, 2025 pm 03:34 PMVibe coding is reshaping the world of software development by letting us create applications using natural language instead of endless lines of code. Inspired by visionaries like Andrej Karpathy, this innovative approach lets dev

How to Use DALL-E 3: Tips, Examples, and FeaturesMar 09, 2025 pm 01:00 PM

How to Use DALL-E 3: Tips, Examples, and FeaturesMar 09, 2025 pm 01:00 PMDALL-E 3: A Generative AI Image Creation Tool Generative AI is revolutionizing content creation, and DALL-E 3, OpenAI's latest image generation model, is at the forefront. Released in October 2023, it builds upon its predecessors, DALL-E and DALL-E 2

Top 5 GenAI Launches of February 2025: GPT-4.5, Grok-3 & More!Mar 22, 2025 am 10:58 AM

Top 5 GenAI Launches of February 2025: GPT-4.5, Grok-3 & More!Mar 22, 2025 am 10:58 AMFebruary 2025 has been yet another game-changing month for generative AI, bringing us some of the most anticipated model upgrades and groundbreaking new features. From xAI’s Grok 3 and Anthropic’s Claude 3.7 Sonnet, to OpenAI’s G

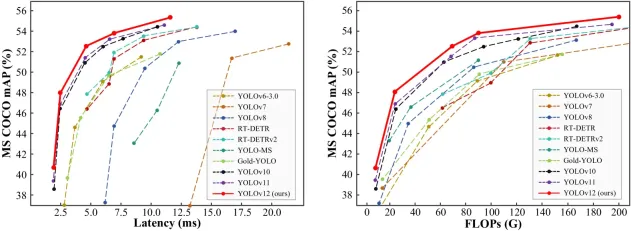

How to Use YOLO v12 for Object Detection?Mar 22, 2025 am 11:07 AM

How to Use YOLO v12 for Object Detection?Mar 22, 2025 am 11:07 AMYOLO (You Only Look Once) has been a leading real-time object detection framework, with each iteration improving upon the previous versions. The latest version YOLO v12 introduces advancements that significantly enhance accuracy

Elon Musk & Sam Altman Clash over $500 Billion Stargate ProjectMar 08, 2025 am 11:15 AM

Elon Musk & Sam Altman Clash over $500 Billion Stargate ProjectMar 08, 2025 am 11:15 AMThe $500 billion Stargate AI project, backed by tech giants like OpenAI, SoftBank, Oracle, and Nvidia, and supported by the U.S. government, aims to solidify American AI leadership. This ambitious undertaking promises a future shaped by AI advanceme

Sora vs Veo 2: Which One Creates More Realistic Videos?Mar 10, 2025 pm 12:22 PM

Sora vs Veo 2: Which One Creates More Realistic Videos?Mar 10, 2025 pm 12:22 PMGoogle's Veo 2 and OpenAI's Sora: Which AI video generator reigns supreme? Both platforms generate impressive AI videos, but their strengths lie in different areas. This comparison, using various prompts, reveals which tool best suits your needs. T

Google's GenCast: Weather Forecasting With GenCast Mini DemoMar 16, 2025 pm 01:46 PM

Google's GenCast: Weather Forecasting With GenCast Mini DemoMar 16, 2025 pm 01:46 PMGoogle DeepMind's GenCast: A Revolutionary AI for Weather Forecasting Weather forecasting has undergone a dramatic transformation, moving from rudimentary observations to sophisticated AI-powered predictions. Google DeepMind's GenCast, a groundbreak

Which AI is better than ChatGPT?Mar 18, 2025 pm 06:05 PM

Which AI is better than ChatGPT?Mar 18, 2025 pm 06:05 PMThe article discusses AI models surpassing ChatGPT, like LaMDA, LLaMA, and Grok, highlighting their advantages in accuracy, understanding, and industry impact.(159 characters)

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Zend Studio 13.0.1

Powerful PHP integrated development environment

SublimeText3 English version

Recommended: Win version, supports code prompts!

Dreamweaver Mac version

Visual web development tools

ZendStudio 13.5.1 Mac

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools