Produced by Peking University: The latest SOTA in texture quality and multi-view consistency, converting one image to 3D in 2 minutes

It takes only two minutes to convert a picture into 3D!

And the result has high texture quality and high consistency across multiple viewing angles.

Whatever the subject, the input is a single-view image like this:

Two minutes later, the 3D version is done:

NeRF); Bottom, Repaint123 (GS)

The new method is called Repaint123. Its core idea is to combine the powerful image generation capability of a 2D diffusion model with the texture alignment capability of the repaint strategy, generating high-quality images that are consistent across multiple views.

In addition, this research also introduces a visibility-aware adaptive repaint intensity method for overlapping areas. Repaint123 solves the problems of previous methods such as large multi-view deviation, texture degradation, and slow generation in one fell swoop.

1. Generating a high-quality image sequence with multi-view consistency

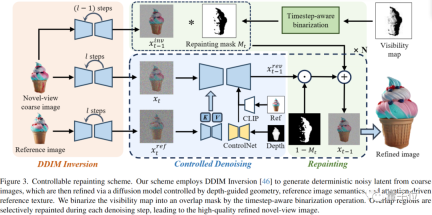

Generating a high-quality image sequence with multi-view consistency is divided into the following three parts:

DDIM inversion

To retain the consistent 3D low-frequency texture information generated in the coarse model stage, the authors use DDIM inversion to invert the image into a deterministic latent space, laying the foundation for the subsequent denoising process and for generating faithful, consistent images.

Controllable denoising

To control geometric consistency and long-range texture consistency during denoising, the authors introduce ControlNet, using the depth map rendered from the coarse model as a geometric prior, while injecting the attention features of the reference image for texture transfer.

In addition, to apply classifier-free guidance and improve image quality, the paper uses CLIP to encode the reference image into an image prompt that guides the denoising network.
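The inversion and guidance steps described in this part can be illustrated with a minimal sketch. It assumes the standard deterministic DDIM update and the usual classifier-free guidance blend. The function names, the toy latent, and the fixed noise estimate (standing in for the denoising network's output) are all hypothetical and not taken from the paper's code.

```python
import math


def ddim_step(x_t, eps, alpha_t, alpha_target):
    """Deterministic DDIM update from noise level alpha_t to alpha_target.

    alpha_* are cumulative signal fractions in (0, 1]. Moving to a smaller
    alpha adds noise (inversion); moving to a larger alpha removes it
    (denoising). eps is the noise estimate, assumed given here.
    """
    # Predict the clean sample implied by the current noise estimate.
    x0_pred = [(x - math.sqrt(1.0 - alpha_t) * e) / math.sqrt(alpha_t)
               for x, e in zip(x_t, eps)]
    # Re-noise that prediction to the target level with the same estimate.
    return [math.sqrt(alpha_target) * x0 + math.sqrt(1.0 - alpha_target) * e
            for x0, e in zip(x0_pred, eps)]


def classifier_free_guidance(eps_uncond, eps_cond, scale):
    """Blend unconditional and conditional noise estimates."""
    return [u + scale * (c - u) for u, c in zip(eps_uncond, eps_cond)]


# Toy round trip: invert to a noisier level, then denoise back.
latent = [0.5, -1.0, 2.0]
eps = [0.1, 0.2, -0.3]  # hypothetical fixed noise estimate
noisier = ddim_step(latent, eps, alpha_t=0.9, alpha_target=0.5)    # inversion
restored = ddim_step(noisier, eps, alpha_t=0.5, alpha_target=0.9)  # denoising
```

With a fixed noise estimate the round trip is exact up to floating-point error, which is why the inverted latent is a useful starting point for faithful generation. In a real pipeline the network re-estimates the noise at every step, so the reconstruction is only approximate.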

Repaint

Progressive repainting of occluded and overlapping regions

To ensure that the overlapping regions of adjacent images in the sequence are aligned at the pixel level, the authors use a progressive local repainting strategy.

While keeping the overlapping regions unchanged, it generates adjacent regions that blend harmoniously with them, gradually extending from the reference view to the full 360°.
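The idea of keeping overlapping regions fixed while filling in newly exposed ones can be sketched with a simple mask composite. This is only an illustration on plain pixel grids: the article does not detail where in the diffusion process the mask is applied, and the function name, argument names, and data layout below are assumptions.

```python
def composite_repaint(warped_previous, generated, overlap_mask):
    """Keep pixels covered by earlier views, fill the rest from the generator.

    All three arguments are equally sized 2D lists. overlap_mask holds 1
    where a pixel is already covered by a previous view and 0 where it is
    newly exposed (hypothetical layout for illustration).
    """
    return [
        [prev if m == 1 else gen
         for prev, gen, m in zip(prev_row, gen_row, mask_row)]
        for prev_row, gen_row, mask_row
        in zip(warped_previous, generated, overlap_mask)
    ]


previous = [[0.2, 0.4], [0.6, 0.8]]   # content carried over from earlier views
generated = [[0.9, 0.9], [0.1, 0.1]]  # freshly generated content
mask = [[1, 0], [1, 0]]               # left column overlaps earlier views
result = composite_repaint(previous, generated, mask)
# result == [[0.2, 0.9], [0.6, 0.1]]
```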

However, as shown in the figure below, the authors found that the overlapping regions also need to be refined: a region previously seen at an oblique angle occupies more pixels when viewed head-on, so more high-frequency information must be added.

The refinement strength is set to 1 − cos θ*, where θ* is the maximum value of the angle θ between all previous camera views and the normal vector of the viewed surface, so that overlapping regions are repainted adaptively.

△The relationship between camera angle and refinement strength
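As a small worked example of the formula 1 − cos θ*, the sketch below evaluates the strength for a few angles. It takes θ* as given; how θ* is selected per pixel follows the description in the text and is not reproduced here. The function name is hypothetical.

```python
import math


def refinement_strength(theta_star_deg):
    """Return 1 - cos(theta*), with theta* given in degrees."""
    return 1.0 - math.cos(math.radians(theta_star_deg))


for angle in (0, 30, 60, 90):
    print(angle, round(refinement_strength(angle), 3))
# prints:
# 0 0.0
# 30 0.134
# 60 0.5
# 90 1.0
```

A surface that was already seen head-on (θ* = 0) gets no refinement, while one seen only edge-on (θ* = 90°) is repainted at full strength.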

To choose a refinement strength that preserves fidelity while improving quality, the authors draw on the projection theorem and the idea of image super-resolution, and propose a simple, direct visibility-aware repainting strategy for refining the overlapping regions.

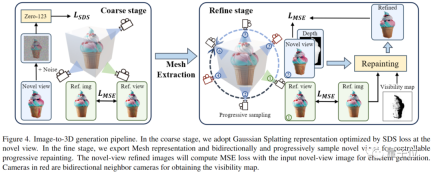

2. Fast and high-quality 3D reconstruction

As shown in the figure below, the authors use a two-stage approach for fast, high-quality 3D reconstruction.

△Repaint123 two-stage single-view 3D generation framework

First, they use the Gaussian Splatting representation to quickly generate a reasonable geometric structure and rough texture.

At the same time, with the help of the previously generated multi-view-consistent, high-quality image sequence, the authors can use a simple mean squared error (MSE) loss for fast 3D texture reconstruction.
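For reference, the MSE loss mentioned above is just the average squared difference between rendered and target pixel values. The sketch below works on flat lists of pixel intensities; the function name and the toy values are illustrative assumptions, not the paper's implementation.

```python
def mse_loss(rendered, target):
    """Mean squared error between two equally long lists of pixel values."""
    if len(rendered) != len(target):
        raise ValueError("rendered and target must have the same length")
    return sum((r - t) ** 2 for r, t in zip(rendered, target)) / len(rendered)


# Toy example: one of three pixels is off by 0.5.
loss = mse_loss([0.0, 0.5, 1.0], [0.0, 1.0, 1.0])
```

Because the generated views already agree with one another, a plain per-pixel loss like this has a consistent target to fit.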

Optimum for Consistency, Quality and Speed

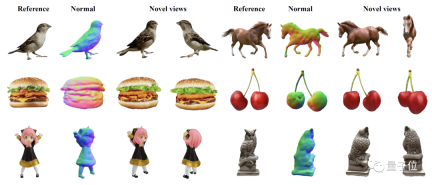

Researchers compared multiple approaches for single-view generation tasks.

△Single-view 3D generation visualization comparison

On the RealFusion15 and Test-alpha datasets, Repaint123 achieved state-of-the-art results in all three respects: consistency, quality, and speed.

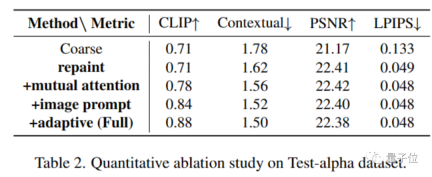

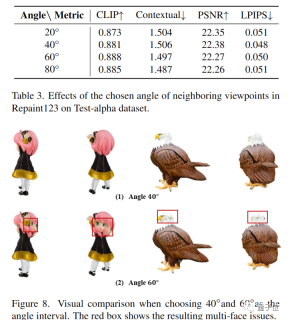

The authors also conducted ablation experiments on the effectiveness of each module used in the paper and on the viewing-angle rotation increment:

They found that performance peaks at a viewing-angle interval of 60 degrees, but an overly large interval reduces the overlapping area and increases the likelihood of the multi-face problem, so 40 degrees can be used as the optimal viewing-angle interval.
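To make the trade-off concrete, the sketch below lists the azimuths needed to cover a full turn at a given interval. It assumes evenly spaced views starting from the reference view at 0 degrees. The article does not state the order in which views are visited, and the function name is hypothetical.

```python
def view_azimuths(interval_deg):
    """Azimuths in degrees covering 360°, starting at the reference view (0)."""
    if interval_deg <= 0 or 360 % interval_deg != 0:
        raise ValueError("interval must be a positive divisor of 360")
    return list(range(0, 360, interval_deg))


views_40 = view_azimuths(40)  # 9 views: 0, 40, ..., 320
views_60 = view_azimuths(60)  # 6 views: 0, 60, ..., 300
```

A 40-degree interval needs nine views instead of six, but each new view shares more of its content with the views already generated.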

Paper address: https://arxiv.org/pdf/2312.13271.pdf

Code address: https://pku-yuangroup.github.io/repaint123/

Project address: https://pku-yuangroup.github.io/repaint123/
