Morph Studio: A Free, 1080P, 7-Second Dark-Horse Video Generation Tool Is Coming

Type "Glowing jellyfish slowly rise from the ocean," into Morph Studio, then continue with what you want to see: "turning into shimmering constellations in the night sky."

After a few minutes, Morph Studio generates a short video. A translucent, sparkling jellyfish spins as it rises, its swaying form set against the stars in the night sky.

Luminous jellyfish rise from an enchanting sea, morphing into glittering stars in the dark sky.

Enter "joker cinematic", and the face that once swept the world is back.

Joaquin Phoenix delivers a hyper-realistic performance as the Joker in the cinematic shot set in the neon-lit streets of New York. Smoke billows around him, adding to the atmosphere of chaos and darkness.

Startup Morph Studio recently shipped a major update to its text-to-video model and its community. These videos were produced with the updated model and show clear images and vivid details.

Morph Studio was the first team in the world to launch a text-to-video product that the public could test freely, ahead of Runway's public beta of Gen-2.

Compared with other popular text-to-video products, Morph Studio stands out in what it offers for free: a default resolution of 1080P and a maximum clip length of 7 seconds from the start. For text-to-video products, higher resolution, longer clips, and more faithful expression of intent are the three key indicators. On all three, Morph has reached the current state of the art in the industry.

The average length of a single shot in a Hollywood movie is 6 seconds. Extending the clip length to 7 seconds can meet the creative needs of more users.

It’s easy to try Morph Studio’s models. You can use them for free by registering on Discord.

The model whose name contains "pro" (marked with a red box in the screenshot) is the updated model and the one tested in this article.

Camera movement is the basic language of video production and a powerful narrative device. Morph provides several common camera moves: zoom, pan (up, down, left, right), rotation (clockwise or counterclockwise), and static shots.

Morph also provides a MOTION parameter (1-10) to control how much movement the video contains. The larger the value, the more intense and exaggerated the motion; the smaller the value, the more subtle and smooth it is.

The frame rate (FPS) can be adjusted from 8 to 30. The higher the value, the smoother the video and the larger the file. For example, -fps 30 produces the smoothest but also the largest video. By default, all videos are created at 24 frames per second.

The default video length is 3 seconds. To generate a 7-second video, enter -s 7 in the command. Additionally, the model offers 5 aspect ratios to choose from.

If you have requirements for details such as camera movement, frame rate, and video length, enter the corresponding parameters after the content prompt. (Currently only English input is supported.)
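The parameter rules above can be sketched as a small helper that assembles a command string. This is only an illustration: the function build_prompt and its argument names are hypothetical, and the flag spellings (-motion, -ar, -zoom, -pan, -fps, -s) are copied from the example prompt in this article rather than from official documentation. The ranges it checks are the ones stated above; the lower bound on length is an assumption.

```python
def build_prompt(content, motion=None, ar=None, zoom=None, pan=None,
                 fps=24, seconds=3):
    """Assemble a text-to-video command string (hypothetical helper).

    Defaults follow the article: 24 FPS and a 3-second clip.
    """
    if motion is not None and not 1 <= motion <= 10:
        raise ValueError("motion must be between 1 and 10")
    if not 8 <= fps <= 30:
        raise ValueError("fps must be between 8 and 30")
    # The article only states the 7-second maximum; "> 0" is an assumption.
    if not 0 < seconds <= 7:
        raise ValueError("seconds must be greater than 0 and at most 7")

    parts = [content]
    if motion is not None:
        parts.append(f"-motion {motion}")
    if ar is not None:
        parts.append(f"-ar {ar}")
    if zoom is not None:
        parts.append(f"-zoom {zoom}")
    if pan is not None:
        parts.append(f"-pan {pan}")
    parts.append(f"-fps {fps}")
    parts.append(f"-s {seconds}")
    return ", ".join(parts)


example = build_prompt(
    "a snow leopard walking under a starry night",
    motion=10, ar="16:9", zoom="in", pan="up", fps=30, seconds=7,
)
print(example)
```

Validating the ranges before building the string means an out-of-range value fails locally instead of being sent to the bot.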

We tried the updated model and were struck by the visual impact of 1080P.

Only recently did humans capture the first photo of a snow leopard walking under the stars:

The first human photo of a snow leopard walking under the stars.

We wanted to know: can Morph Studio’s model generate a video of this relatively rare animal?

Using the same prompt, we placed Morph Studio's result in the upper half of the video and Pika's result in the lower half.

a snow leopard walking under a starry night, cinematic realistic, super detail, -motion 10, -ar 16:9, -zoom in, -pan up, -fps 30, -s 7. negative: Extra limbs, Missing arms and legs, fused fingers and legs, extra fingers, disfigure

In Morph Studio’s result, the prompt is understood accurately. In the 1080P picture, the snow leopard’s fur is rich in detail and lifelike, and the Milky Way and stars are visible in the background. However, the snow leopard's movement is not very pronounced.

In Pika’s result, the snow leopard is indeed walking, but the starry night seems to have been interpreted as a night of heavy, feathery snowfall. It also lags behind in the rendering of the snow leopard, in detail, and in picture clarity.

Next, let’s look at character generation.

masterpiece best quality ultra detailed RAW video 1girl solo dancing digital painting beautiful cyborg girl age 21 long wavy red hair blue eyes delicate pale white skin perfect body singing in the eerie light of dawn in a post-apocalyp

In Morph Studio's result, the high resolution brings out extremely delicate facial contours and micro-expressions. In the dawn light, the details of the hair are clearly visible.

Because it falls short in resolution, color, and light levels, Pika's result has an overall bluish cast, and the character's facial details are unsatisfactory.

Having tried people and animals, let’s look at how buildings (man-made objects) are generated.

La torre eifel starry night van gogh epic stylish fine art complex deep colors flowing fky moving clouds

Pika’s result has more of a painterly texture. By comparison, Morph Studio's result better balances Van Gogh elements with realistic ones. Its light levels are very rich, especially in the flowing detail of the sea of clouds, whereas the sky in Pika's result is almost static.

Finally, let's try generating natural scenery.

One early morning the sun slowly rose from the sea level and the waves gently touched the beach.

You may wonder whether Morph Studio's result is actually real footage shot by a human photographer under natural conditions.

Because Pika's video lacks delicate layers of light and shadow, the waves and the beach look flat, and the motion of the waves hitting the beach is relatively dull.

Beyond the striking experience that high resolution brings, when the same prompt is used to generate videos (on themes such as animals, buildings, people, and natural scenery), competitors more or less "miss". Morph Studio's performance is relatively more stable, with fewer corner cases, and it infers user intent more accurately.

From the beginning, this startup’s view of text-to-video has been that the video must depict the user's input very accurately, and all of its optimization work moves in that direction. Morph Studio's model architecture has a deeper understanding of textual intent. This update made some structural changes and added more detailed annotations to part of the data.

In addition to its relatively strong text understanding, the model's handling of picture detail holds up at high-resolution output. In fact, after the update the motion in the picture became richer, which also shows in the videos we generated with Morph Studio.

In "Girl with a Pearl Earring", the earring sways slightly when the head moves. Scenes with more complex actions such as horseback riding are also smoother, more coherent, and more logical, and hand movements are rendered well.

1080P means the model has to process more pixels, which makes detail generation more challenging. Judging from the results, however, not only did the picture not fall apart, but the rich layering made it more detailed and more expressive.
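To put "more pixels" into numbers, here is a back-of-the-envelope calculation. It assumes the usual 16:9 frame sizes of 1920x1080 for 1080P and 1280x720 for 720P (the article does not state exact dimensions, and other aspect ratios will differ), plus the 24 FPS default and 7-second maximum mentioned earlier.

```python
# Assumed 16:9 frame sizes; the article does not give exact pixel dimensions.
RESOLUTIONS = {"720P": (1280, 720), "1080P": (1920, 1080)}


def pixels_per_frame(name):
    """Number of pixels in a single frame at the given resolution."""
    width, height = RESOLUTIONS[name]
    return width * height


def pixels_per_clip(name, fps=24, seconds=7):
    """Total pixels across every frame of a clip."""
    return pixels_per_frame(name) * fps * seconds


ratio = pixels_per_frame("1080P") / pixels_per_frame("720P")
print(pixels_per_frame("1080P"))  # 2073600
print(ratio)                      # 2.25
print(pixels_per_clip("1080P"))   # 348364800
```

Under these assumptions, a 1080P frame holds 2.25 times as many pixels as a 720P frame, and a 7-second clip at 24 FPS comes to roughly 348 million pixels.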

This is a set of natural landscapes we generated with the model, including spectacular giant waves and volcanic eruptions, as well as delicate close-ups of flowers.

High-resolution output brings better visual enjoyment to users, but it also prolongs the model output time and affects the experience.

Morph Studio now generates 1080P videos in three and a half minutes, the same time Pika takes to generate 720P videos. Startups have limited computing resources, so staying at the state of the art is no small feat for Morph Studio.

As for video style, besides cinematic realism, Morph Studio's models also support common styles such as comics and 3D animation.

Text-to-video technology, Morph Studio’s focus, is regarded as the next stage of competition in the AI industry.

“Instant video may represent the next leap in AI technology,” the New York Times said in a headline of a technology report, arguing that it will be as important as the web browser and the iPhone.

In September 2022, Meta’s team of machine learning engineers launched a new system called Make-A-Video. Users enter a rough description of a scene, and the system generates a corresponding short video.

In November 2022, researchers from Tsinghua University and Beijing Academy of Artificial Intelligence (BAAI) also released CogVideo.

At that time, the videos generated by these models were not only blurry (for example, the video resolution generated by CogVideo was only 480 x 480), the pictures were also relatively distorted, and there were many technical limitations. But they still represent a significant development in AI content generation.

On the surface, a video is just a series of frames (still images) put together in a way that gives the illusion of movement. However, it is much more difficult to ensure the consistency of a series of images in time and space.
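As a rough illustration of what "consistency over time" means, the sketch below treats a video as a list of frames (each frame a 2D grid of brightness values) and measures the average change between consecutive frames. The function mean_frame_difference is a hypothetical, deliberately crude proxy written for this article, not a metric used by Morph Studio or any other product: a smooth clip changes a little from frame to frame, while a flickering one changes a lot.

```python
def mean_frame_difference(frames):
    """Average absolute per-pixel change between consecutive frames.

    `frames` is a list of equally sized 2D lists of numbers.
    """
    if len(frames) < 2:
        raise ValueError("need at least two frames")
    total = 0.0
    count = 0
    for prev, curr in zip(frames, frames[1:]):
        for row_prev, row_curr in zip(prev, curr):
            for p, c in zip(row_prev, row_curr):
                total += abs(c - p)
                count += 1
    return total / count


# Four 2x2 frames whose brightness rises by 1 each frame (smooth motion).
smooth = [[[t, t], [t, t]] for t in range(4)]
# Four 2x2 frames alternating between 0 and 10 (flicker).
flicker = [[[10 * (t % 2)] * 2 for _ in range(2)] for t in range(4)]

print(mean_frame_difference(smooth))   # 1.0
print(mean_frame_difference(flicker))  # 10.0
```

Real video models have to keep objects, lighting, and geometry consistent, which a single per-pixel number cannot capture; the point here is only that a video is a sequence whose neighboring frames must agree.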

The emergence of the diffusion model has accelerated the technology's evolution. Researchers have extended diffusion models to other domains such as audio, 3D, and video, and video synthesis has made significant advances.

Diffusion-based technology works mainly by letting a neural network learn patterns automatically from massive amounts of images, videos, and text descriptions. When you enter a request, the neural network generates a list of all the features it thinks might be used to create the image (think of the outline of a cat's ears or the edges of a phone).

Then, a second neural network (also known as the diffusion model) is responsible for creating the image and generating the pixels needed for these features, and converting the pixels into a coherent image. By analyzing thousands of videos, the AI can learn to string many still images together in a similarly coherent manner. The key is to train a model that truly understands the relationships and consistency between each frame.
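To make the idea of a diffusion model slightly more concrete, here is a sketch of the forward (noising) half of the standard DDPM formulation, in which a clean sample x_0 is mixed with Gaussian noise as x_t = sqrt(abar_t) * x_0 + sqrt(1 - abar_t) * eps. A diffusion model is trained to reverse this corruption step by step. This is a generic textbook illustration, not Morph Studio's model, whose architecture the article does not describe; the linear schedule values (1e-4 to 0.02 over 1000 steps) are commonly used defaults and are assumptions here.

```python
import math
import random


def linear_beta_schedule(steps=1000, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances, rising linearly (assumed default values)."""
    return [beta_start + (beta_end - beta_start) * t / (steps - 1)
            for t in range(steps)]


def cumulative_alphas(betas):
    """abar_t: the running product of (1 - beta) up to each step."""
    alpha_bars = []
    product = 1.0
    for beta in betas:
        product *= 1.0 - beta
        alpha_bars.append(product)
    return alpha_bars


def add_noise(x0, t, alpha_bars, rng):
    """Sample x_t from x_0 in one shot using the closed-form expression."""
    signal = math.sqrt(alpha_bars[t])
    noise = math.sqrt(1.0 - alpha_bars[t])
    return [signal * value + noise * rng.gauss(0.0, 1.0) for value in x0]


alpha_bars = cumulative_alphas(linear_beta_schedule())
rng = random.Random(0)
pixels = [0.2, 0.5, 0.9]                       # a toy three-pixel "image"
slightly_noisy = add_noise(pixels, 10, alpha_bars, rng)
mostly_noise = add_noise(pixels, 999, alpha_bars, rng)
```

Early steps keep most of the original signal, while by the last step almost none remains. Generation runs in the opposite direction, starting from noise and removing a little of it at each step.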

“This is one of the most impressive technologies we’ve built in the last hundred years,” Runway CEO Cristóbal Valenzuela once told the media. “You need to make people really use it.”

2023 is regarded by some in the industry as a breakthrough year for video synthesis. There was no publicly available text-to-video model in January, and by the end of the year there were dozens of similar products and millions of users.

a16z partner Justine Moore shared a timeline of text-to-video models on social media. It shows that besides the big tech companies there are many startups in the field, and that the technology is iterating very quickly.

AI-generated video has not yet settled on a unified, clear technical paradigm the way LLMs have. The industry is still exploring how to generate stable videos. But researchers believe these flaws can be eliminated as their systems are trained on more and more data. Eventually, this technology will make creating videos as easy as writing sentences.

A senior AI industry investor in China told us that several of the most important papers on text-to-video technology were published in July and August 2022. By analogy with the industrialization of text-to-image, the point at which this technology approaches industrialization would come about a year later, that is, in July and August 2023.

Video technology as a whole is developing very fast and becoming more and more mature. Based on previous investment experience in the GAN field, the investor predicts that the next six months to a year will be the productization period for text-to-video technology.

The Morph team brings together the best young researchers in the field of video generation. After a year of intensive, around-the-clock research and development, founder Xu Huaizhe and co-founders Li Feng, Yin Zixin, Zhao Shihao, and Liu Shaoteng, together with other core technical staff, have tackled the hard problems of AI video generation.

In addition to the technical team, Morph Studio has also recently strengthened its product team. Hai Xin, a contracted producer at Maoyan Films, a judge of the Shanghai International Film Festival, and a former core member of a leading Silicon Valley AIGC company, also recently joined Morph Studio.

Hai Xin said that Morph Studio holds a leading position in the industry in technical research, that the team is flat with particularly high communication efficiency and execution, and that every member is passionate about the industry. Her biggest dream had been to join an animation company. With the arrival of the AI era, she quickly realized that the animation industry would change. For the past few decades animation was built on 3D engines, and a new era of AI engines is about to begin. The Pixar of the future will be born in an AI company, and Morph was her choice.

Founder Xu Huaizhe said that Morph is actively positioning itself in the AI video space. "We are determined to be a Super App of the AI video era and to realize dreams for users," he said.

The field will have its own Midjourney moment in 2024, he added.

PS: To try free 1080P video generation for yourself, please go to:

https://discord.com/invite/VVqS8QnBkA


从VAE到扩散模型:一文解读以文生图新范式Apr 08, 2023 pm 08:41 PM

从VAE到扩散模型:一文解读以文生图新范式Apr 08, 2023 pm 08:41 PM1 前言在发布DALL·E的15个月后,OpenAI在今年春天带了续作DALL·E 2,以其更加惊艳的效果和丰富的可玩性迅速占领了各大AI社区的头条。近年来,随着生成对抗网络(GAN)、变分自编码器(VAE)、扩散模型(Diffusion models)的出现,深度学习已向世人展现其强大的图像生成能力;加上GPT-3、BERT等NLP模型的成功,人类正逐步打破文本和图像的信息界限。在DALL·E 2中,只需输入简单的文本(prompt),它就可以生成多张1024*1024的高清图像。这些图像甚至

找不到中文语音预训练模型?中文版 Wav2vec 2.0和HuBERT来了Apr 08, 2023 pm 06:21 PM

找不到中文语音预训练模型?中文版 Wav2vec 2.0和HuBERT来了Apr 08, 2023 pm 06:21 PMWav2vec 2.0 [1],HuBERT [2] 和 WavLM [3] 等语音预训练模型,通过在多达上万小时的无标注语音数据(如 Libri-light )上的自监督学习,显著提升了自动语音识别(Automatic Speech Recognition, ASR),语音合成(Text-to-speech, TTS)和语音转换(Voice Conversation,VC)等语音下游任务的性能。然而这些模型都没有公开的中文版本,不便于应用在中文语音研究场景。 WenetSpeech [4] 是

普林斯顿陈丹琦:如何让「大模型」变小Apr 08, 2023 pm 04:01 PM

普林斯顿陈丹琦:如何让「大模型」变小Apr 08, 2023 pm 04:01 PM“Making large models smaller”这是很多语言模型研究人员的学术追求,针对大模型昂贵的环境和训练成本,陈丹琦在智源大会青源学术年会上做了题为“Making large models smaller”的特邀报告。报告中重点提及了基于记忆增强的TRIME算法和基于粗细粒度联合剪枝和逐层蒸馏的CofiPruning算法。前者能够在不改变模型结构的基础上兼顾语言模型困惑度和检索速度方面的优势;而后者可以在保证下游任务准确度的同时实现更快的处理速度,具有更小的模型结构。陈丹琦 普

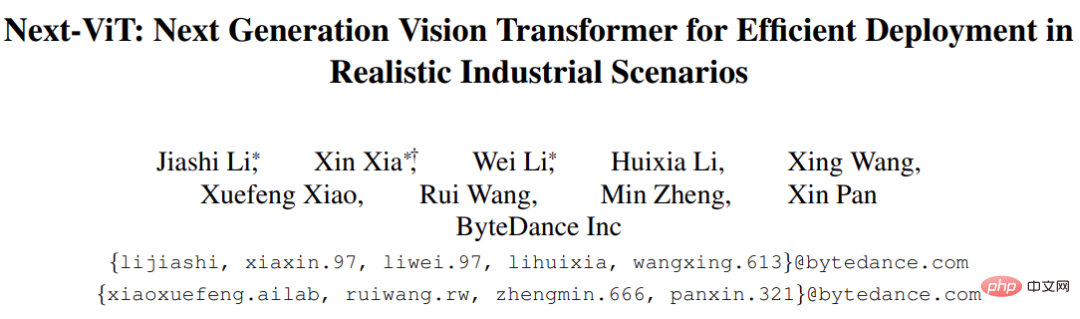

解锁CNN和Transformer正确结合方法,字节跳动提出有效的下一代视觉TransformerApr 09, 2023 pm 02:01 PM

解锁CNN和Transformer正确结合方法,字节跳动提出有效的下一代视觉TransformerApr 09, 2023 pm 02:01 PM由于复杂的注意力机制和模型设计,大多数现有的视觉 Transformer(ViT)在现实的工业部署场景中不能像卷积神经网络(CNN)那样高效地执行。这就带来了一个问题:视觉神经网络能否像 CNN 一样快速推断并像 ViT 一样强大?近期一些工作试图设计 CNN-Transformer 混合架构来解决这个问题,但这些工作的整体性能远不能令人满意。基于此,来自字节跳动的研究者提出了一种能在现实工业场景中有效部署的下一代视觉 Transformer——Next-ViT。从延迟 / 准确性权衡的角度看,

Stable Diffusion XL 现已推出—有什么新功能,你知道吗?Apr 07, 2023 pm 11:21 PM

Stable Diffusion XL 现已推出—有什么新功能,你知道吗?Apr 07, 2023 pm 11:21 PM3月27号,Stability AI的创始人兼首席执行官Emad Mostaque在一条推文中宣布,Stable Diffusion XL 现已可用于公开测试。以下是一些事项:“XL”不是这个新的AI模型的官方名称。一旦发布稳定性AI公司的官方公告,名称将会更改。与先前版本相比,图像质量有所提高与先前版本相比,图像生成速度大大加快。示例图像让我们看看新旧AI模型在结果上的差异。Prompt: Luxury sports car with aerodynamic curves, shot in a

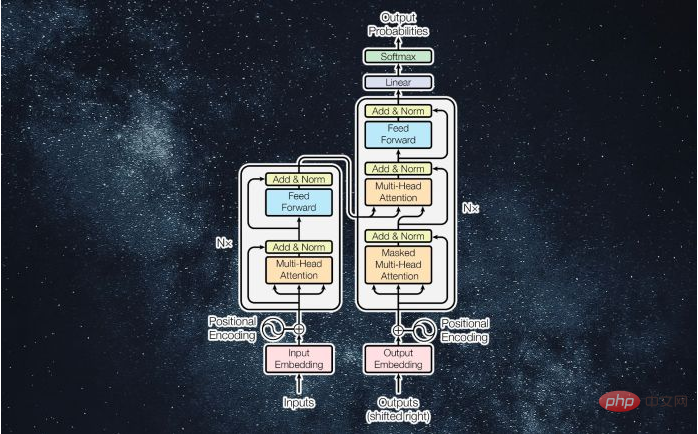

什么是Transformer机器学习模型?Apr 08, 2023 pm 06:31 PM

什么是Transformer机器学习模型?Apr 08, 2023 pm 06:31 PM译者 | 李睿审校 | 孙淑娟近年来, Transformer 机器学习模型已经成为深度学习和深度神经网络技术进步的主要亮点之一。它主要用于自然语言处理中的高级应用。谷歌正在使用它来增强其搜索引擎结果。OpenAI 使用 Transformer 创建了著名的 GPT-2和 GPT-3模型。自从2017年首次亮相以来,Transformer 架构不断发展并扩展到多种不同的变体,从语言任务扩展到其他领域。它们已被用于时间序列预测。它们是 DeepMind 的蛋白质结构预测模型 AlphaFold

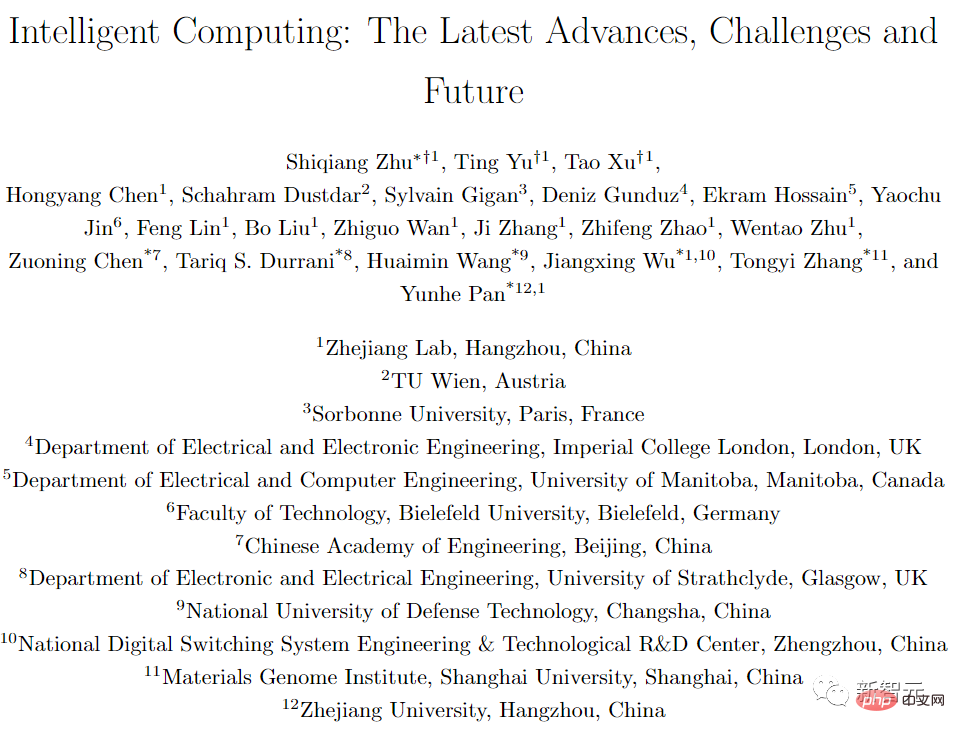

五年后AI所需算力超100万倍!十二家机构联合发表88页长文:「智能计算」是解药Apr 09, 2023 pm 07:01 PM

五年后AI所需算力超100万倍!十二家机构联合发表88页长文:「智能计算」是解药Apr 09, 2023 pm 07:01 PM人工智能就是一个「拼财力」的行业,如果没有高性能计算设备,别说开发基础模型,就连微调模型都做不到。但如果只靠拼硬件,单靠当前计算性能的发展速度,迟早有一天无法满足日益膨胀的需求,所以还需要配套的软件来协调统筹计算能力,这时候就需要用到「智能计算」技术。最近,来自之江实验室、中国工程院、国防科技大学、浙江大学等多达十二个国内外研究机构共同发表了一篇论文,首次对智能计算领域进行了全面的调研,涵盖了理论基础、智能与计算的技术融合、重要应用、挑战和未来前景。论文链接:https://spj.scien

AI模型告诉你,为啥巴西最可能在今年夺冠!曾精准预测前两届冠军Apr 09, 2023 pm 01:51 PM

AI模型告诉你,为啥巴西最可能在今年夺冠!曾精准预测前两届冠军Apr 09, 2023 pm 01:51 PM说起2010年南非世界杯的最大网红,一定非「章鱼保罗」莫属!这只位于德国海洋生物中心的神奇章鱼,不仅成功预测了德国队全部七场比赛的结果,还顺利地选出了最终的总冠军西班牙队。不幸的是,保罗已经永远地离开了我们,但它的「遗产」却在人们预测足球比赛结果的尝试中持续存在。在艾伦图灵研究所(The Alan Turing Institute),随着2022年卡塔尔世界杯的持续进行,三位研究员Nick Barlow、Jack Roberts和Ryan Chan决定用一种AI算法预测今年的冠军归属。预测模型图

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

ZendStudio 13.5.1 Mac

Powerful PHP integrated development environment

SAP NetWeaver Server Adapter for Eclipse

Integrate Eclipse with SAP NetWeaver application server.

mPDF

mPDF is a PHP library that can generate PDF files from UTF-8 encoded HTML. The original author, Ian Back, wrote mPDF to output PDF files "on the fly" from his website and handle different languages. It is slower than original scripts like HTML2FPDF and produces larger files when using Unicode fonts, but supports CSS styles etc. and has a lot of enhancements. Supports almost all languages, including RTL (Arabic and Hebrew) and CJK (Chinese, Japanese and Korean). Supports nested block-level elements (such as P, DIV),

SecLists

SecLists is the ultimate security tester's companion. It is a collection of various types of lists that are frequently used during security assessments, all in one place. SecLists helps make security testing more efficient and productive by conveniently providing all the lists a security tester might need. List types include usernames, passwords, URLs, fuzzing payloads, sensitive data patterns, web shells, and more. The tester can simply pull this repository onto a new test machine and he will have access to every type of list he needs.