LLMs learn to fight themselves, and foundation models may usher in collective evolution


Jin Yong's martial arts novels feature a technique called "left and right hands fighting each other." It was created by Zhou Botong, who spent more than ten years practicing alone in a cave on Peach Blossom Island (Taohua Island); his original idea was simply to have his left hand fight his right hand for his own amusement. The same idea can be used not only to practice martial arts but also to train machine learning models, such as the Generative Adversarial Network (GAN) that was all the rage a few years ago.

In today's era of large language models (LLMs), researchers have found clever uses for this kind of self-sparring. Recently, Gu Quanquan's team at the University of California, Los Angeles, proposed a new method called SPIN (Self-Play Fine-Tuning). Using only self-play, without any additional fine-tuning data, this method can greatly improve an LLM's capabilities. Professor Gu Quanquan said: "It is better to teach someone to fish than to give them a fish: with self-play fine-tuning (SPIN), any large model can go from weak to strong!"

This research has also sparked a lot of discussion on social networks. For example, Professor Ethan Mollick of the Wharton School of the University of Pennsylvania said: "More evidence that AI will not be limited by the amount of human-created content available for its training. This paper once again shows that training AI on AI-created data can achieve higher-quality results than training on human-created data alone."

In addition, many researchers are excited about this method and have high expectations for progress in this direction in 2024. Professor Gu Quanquan told Machine Heart: "If you want to train a large model that surpasses GPT-4, this is a technique definitely worth trying."

The paper address is https://arxiv.org/pdf/2401.01335.pdf.

Large language models (LLMs) have ushered in an era of breakthroughs toward artificial general intelligence (AGI), with extraordinary capabilities across a wide range of tasks that require complex reasoning and expertise. Their areas of expertise include mathematical reasoning and problem solving, code generation and programming, text generation, summarization, creative writing, and more.

One of the key advances in LLMs is the post-training alignment process, which makes a model behave more in line with requirements, but this process often relies on costly human-labeled data. Classic alignment methods include supervised fine-tuning (SFT) on human demonstrations and reinforcement learning from human feedback (RLHF) based on human preferences.

These alignment methods all require a large amount of human-labeled data. Therefore, to streamline the alignment process, researchers hope to develop fine-tuning methods that effectively leverage human data.

This is also the goal of this research: to develop a new fine-tuning method that lets the fine-tuned model keep getting stronger, without requiring any human-labeled data beyond the fine-tuning dataset.

In fact, the machine learning community has long been interested in how to turn weak models into strong ones without additional training data; research in this area goes back at least to boosting algorithms. Studies have also shown that self-training algorithms can convert weak learners into strong learners in mixture models without additional labeled data. However, whether an LLM can improve automatically without external guidance is a complex and understudied question. This leads to the following question:

Can an LLM improve itself without additional human-labeled data?

Method

Technically, denote the LLM from the previous iteration $t$ by $p_{\theta_t}$. Given a prompt $x$ from the human-annotated SFT dataset, it generates a response $y'$. The goal is then to find a new LLM $p_{\theta_{t+1}}$ that can distinguish the response $y'$ generated by $p_{\theta_t}$ from the response $y$ written by a human.
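The data-generation step for one iteration can be sketched in a few lines. This is a minimal illustration, not the authors' code: it assumes the SFT data is a list of `(prompt, human_response)` pairs, and `generate` is a hypothetical callable standing in for sampling from the current model $p_{\theta_t}$.

```python
from typing import Callable, List, Tuple


def build_spin_triples(
    sft_data: List[Tuple[str, str]],
    generate: Callable[[str], str],
) -> List[Tuple[str, str, str]]:
    """Pair each human response y with a response y' sampled from the current model.

    `generate` is a hypothetical stand-in for sampling y' ~ p_theta_t(. | x).
    """
    triples = []
    for prompt, human_response in sft_data:
        synthetic_response = generate(prompt)  # the opponent's answer to the same prompt
        triples.append((prompt, human_response, synthetic_response))
    return triples


# Usage with a dummy "model" that just echoes the prompt.
data = [("What is 2+2?", "4"), ("Name a primary color.", "Red")]
print(build_spin_triples(data, lambda x: "I think: " + x))
```

Because the opponent changes after every iteration, these triples would have to be regenerated at the start of each round.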

This process can be viewed as a game between two players. The main player is the new LLM $p_{\theta_{t+1}}$, whose goal is to distinguish the opponent's responses from human-written ones. The opponent is the old LLM $p_{\theta_t}$, whose task is to generate responses that are as close as possible to those in the human-annotated SFT dataset.

The new LLM $p_{\theta_{t+1}}$ is obtained by fine-tuning the old LLM $p_{\theta_t}$. Training gives $p_{\theta_{t+1}}$ a good ability to distinguish the responses $y'$ generated by $p_{\theta_t}$ from the human responses $y$. This training not only makes $p_{\theta_{t+1}}$ a good discriminator in its role as the main player, but also lets it, acting as the opponent in the next iteration, generate responses that are better aligned with the SFT dataset. In the next iteration, the newly obtained $p_{\theta_{t+1}}$ becomes the opponent that generates the responses.
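The main player's objective can be sketched as a logistic loss on the difference between two log-likelihood ratios, one for the human response and one for the self-generated response, each measured against the frozen opponent. This follows our reading of the paper's logistic-loss formulation; the function name, argument names, and the use of precomputed sequence log-probabilities are assumptions of this sketch, and `lam` plays the role of the paper's regularization parameter λ.

```python
import math


def spin_loss(
    logp_new_real: float,
    logp_old_real: float,
    logp_new_synth: float,
    logp_old_synth: float,
    lam: float,
) -> float:
    """SPIN-style logistic loss for one (x, y, y') triple (illustrative sketch).

    logp_new_real:  log p_theta(y | x), human response, model being trained
    logp_old_real:  log p_theta_t(y | x), human response, frozen opponent
    logp_new_synth: log p_theta(y' | x), self-generated response, model being trained
    logp_old_synth: log p_theta_t(y' | x), self-generated response, frozen opponent
    lam:            regularization strength (the paper's lambda)
    """
    # How much more the trained model favors y over y', relative to the opponent.
    margin = lam * ((logp_new_real - logp_old_real) - (logp_new_synth - logp_old_synth))
    # log(1 + exp(-margin)), computed without overflow for large |margin|.
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


# Before any update the two models coincide, so the margin is 0 and the loss is log 2.
print(spin_loss(-12.0, -12.0, -15.0, -15.0, lam=0.1))
# Raising log p(y|x) and lowering log p(y'|x) relative to the opponent lowers the loss.
print(spin_loss(-11.0, -12.0, -16.0, -15.0, lam=0.1))
```

Minimizing this loss pushes the new model to assign relatively more probability to human responses and less to the opponent's own outputs, which is exactly the "discriminate, then become the next opponent" role described above. The value `lam=0.1` in the usage lines is arbitrary.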

The goal of this self-play process is for the LLM to eventually converge to $p_{\theta^*} = p_{\mathrm{data}}$, so that the strongest possible LLM generates responses that can no longer be distinguished from those of its previous version or from human-written responses.

Interestingly, the new method resembles direct preference optimization (DPO), recently proposed by Rafailov et al.; the clear difference is that the new method uses a self-play mechanism. As a result, it has a significant advantage: it requires no additional human preference data.
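The link to DPO can be made concrete at the data level: SPIN's "preferences" come for free, since the human response is always preferred and the model's own response is always dispreferred. The sketch below only reshapes `(prompt, human_response, model_response)` triples into preference records; the `prompt`/`chosen`/`rejected` field names follow a common convention for DPO datasets (for example in Hugging Face TRL) and are an assumption here, not something the paper specifies.

```python
from typing import Dict, List, Tuple


def to_preference_records(
    triples: List[Tuple[str, str, str]],
) -> List[Dict[str, str]]:
    """Turn (prompt, human_response, model_response) triples into DPO-style records.

    No human preference labels are collected: the human response is always
    "chosen" and the model's own response is always "rejected".
    """
    return [
        {"prompt": x, "chosen": y_human, "rejected": y_model}
        for x, y_human, y_model in triples
    ]


records = to_preference_records([("Hi", "Hello! How can I help?", "hi")])
print(records[0]["chosen"])  # prints "Hello! How can I help?"
```

If such records were fed to DPO-style tooling, the reference model would have to be the previous-iteration model $p_{\theta_t}$ rather than a fixed checkpoint, and the records would be rebuilt every iteration.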

The similarity between the new method and generative adversarial networks (GANs) is also clear, except that in the new method the discriminator (the main player) and the generator (the opponent) are instances of the same LLM from two consecutive iterations.

The team also analyzed the method theoretically, and the results show that it converges if and only if the LLM's distribution equals the target data distribution, that is, when $p_{\theta_t} = p_{\mathrm{data}}$.
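To make the convergence claim tangible, here is a toy simulation, not the authors' experiment: the "LLM" is a softmax distribution over three possible responses to a single prompt, expectations over $y \sim p_{\mathrm{data}}$ and $y' \sim p_{\theta_t}$ are computed exactly instead of by sampling, and each iteration trains the main player by plain gradient descent on the logistic SPIN loss. The target distribution, λ = 2, the step count, and the learning rate are arbitrary illustrative choices.

```python
import math
from typing import List


def softmax(logits: List[float]) -> List[float]:
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def spin_iteration(theta_t: List[float], p_data: List[float], lam: float,
                   steps: int = 500, lr: float = 0.5) -> List[float]:
    """One self-play iteration on a toy categorical model with a single prompt.

    The opponent p_theta_t is frozen; the main player starts from the same logits
    and is trained on the exact expected loss log(1 + exp(-margin)).
    """
    p_t = softmax(theta_t)
    k = len(theta_t)
    theta = list(theta_t)
    for _ in range(steps):
        d = [theta[j] - theta_t[j] for j in range(k)]  # log-ratio up to a constant
        grad = [0.0] * k
        for y in range(k):           # y  ~ p_data (human response)
            for y2 in range(k):      # y' ~ p_theta_t (self-generated response)
                w = p_data[y] * p_t[y2]
                # Log-normalizers cancel, so the margin depends only on d.
                u = lam * (d[y] - d[y2])
                dl_du = -1.0 / (1.0 + math.exp(u))  # derivative of log(1 + exp(-u))
                grad[y] += w * dl_du * lam
                grad[y2] -= w * dl_du * lam
        theta = [theta[j] - lr * grad[j] for j in range(k)]
    return theta


p_data = [0.6, 0.3, 0.1]
theta = [0.0, 0.0, 0.0]  # start from a uniform model
for t in range(10):
    theta = spin_iteration(theta, p_data, lam=2.0)  # new main player becomes next opponent
print([round(p, 3) for p in softmax(theta)])  # should be close to p_data
```

Starting instead from `theta = [math.log(p) for p in p_data]`, the gradient is zero from the first step and the model does not move: once the opponent already matches the data, self-play has nothing left to learn, mirroring the fixed point $p_{\theta_t} = p_{\mathrm{data}}$. In this toy one can also check from the stationarity condition that each exactly solved iteration moves $\log p_{\theta_t}$ a fraction $1/\lambda$ of the way toward $\log p_{\mathrm{data}}$, so with λ = 2 the gap roughly halves per iteration; that rate is a property of the toy only.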

Experiment

In the experiments, the team used zephyr-7b-sft-full, an LLM fine-tuned from Mistral-7B.

The results show that the new method keeps improving zephyr-7b-sft-full over successive iterations. By comparison, continuing to train with SFT on the SFT dataset Ultrachat200k makes the evaluation scores plateau or even decline.

What’s more interesting is that the dataset used by the new method is only a 50k-sized subset of the Ultrachat200k dataset!

SPIN achieves more: it raises the average score of the base model zephyr-7b-sft-full on the HuggingFace Open LLM Leaderboard from 58.14 to 63.16, including striking improvements of more than 10% on GSM8k and TruthfulQA. On MT-Bench, it raises the score from 5.94 to 6.78.
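These headline numbers can be put in perspective with simple arithmetic. The snippet only recomputes absolute and relative gains from the before/after scores quoted above; the relative figures are derived here rather than reported by the authors, and the per-benchmark GSM8k and TruthfulQA scores are not given in this article, so they are left out.

```python
def improvement(before: float, after: float) -> tuple:
    """Return (absolute gain, relative gain) of `after` over `before`."""
    gain = after - before
    return gain, gain / before


# Before/after scores for zephyr-7b-sft-full as quoted in the article.
reported = {
    "Open LLM Leaderboard average": (58.14, 63.16),
    "MT-Bench": (5.94, 6.78),
}

for name, (before, after) in reported.items():
    gain, rel = improvement(before, after)
    print(f"{name}: +{gain:.2f} points ({rel:.1%} relative)")
# Open LLM Leaderboard average: +5.02 points (8.6% relative)
# MT-Bench: +0.84 points (14.1% relative)
```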

Notably, on the Open LLM Leaderboard, the model fine-tuned with SPIN is even comparable to a model trained with an additional 62k-sample preference dataset.

Conclusion

By making full use of human-labeled data, SPIN lets large models rely on self-play to overcome their weaknesses and become stronger. Compared with reinforcement learning from human feedback (RLHF), SPIN enables an LLM to improve itself without additional human feedback or feedback from a stronger LLM. In experiments on multiple benchmark datasets, including the HuggingFace Open LLM Leaderboard, SPIN significantly and consistently improves LLM performance, even outperforming models trained with additional AI feedback.

We expect SPIN to help large models evolve and improve, and ultimately to help achieve artificial intelligence beyond human level.

The above is the detailed content of LLM learns to fight each other, and the basic model may usher in group innovation. For more information, please follow other related articles on the PHP Chinese website!

AI Game Development Enters Its Agentic Era With Upheaval's Dreamer PortalMay 02, 2025 am 11:17 AM

AI Game Development Enters Its Agentic Era With Upheaval's Dreamer PortalMay 02, 2025 am 11:17 AMUpheaval Games: Revolutionizing Game Development with AI Agents Upheaval, a game development studio comprised of veterans from industry giants like Blizzard and Obsidian, is poised to revolutionize game creation with its innovative AI-powered platfor

Uber Wants To Be Your Robotaxi Shop, Will Providers Let Them?May 02, 2025 am 11:16 AM

Uber Wants To Be Your Robotaxi Shop, Will Providers Let Them?May 02, 2025 am 11:16 AMUber's RoboTaxi Strategy: A Ride-Hail Ecosystem for Autonomous Vehicles At the recent Curbivore conference, Uber's Richard Willder unveiled their strategy to become the ride-hail platform for robotaxi providers. Leveraging their dominant position in

AI Agents Playing Video Games Will Transform Future RobotsMay 02, 2025 am 11:15 AM

AI Agents Playing Video Games Will Transform Future RobotsMay 02, 2025 am 11:15 AMVideo games are proving to be invaluable testing grounds for cutting-edge AI research, particularly in the development of autonomous agents and real-world robots, even potentially contributing to the quest for Artificial General Intelligence (AGI). A

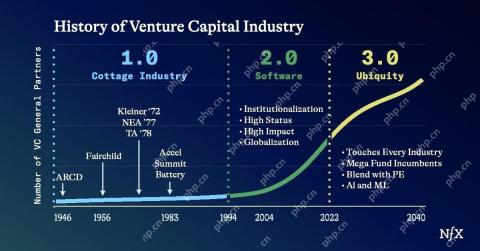

The Startup Industrial Complex, VC 3.0, And James Currier's ManifestoMay 02, 2025 am 11:14 AM

The Startup Industrial Complex, VC 3.0, And James Currier's ManifestoMay 02, 2025 am 11:14 AMThe impact of the evolving venture capital landscape is evident in the media, financial reports, and everyday conversations. However, the specific consequences for investors, startups, and funds are often overlooked. Venture Capital 3.0: A Paradigm

Adobe Updates Creative Cloud And Firefly At Adobe MAX London 2025May 02, 2025 am 11:13 AM

Adobe Updates Creative Cloud And Firefly At Adobe MAX London 2025May 02, 2025 am 11:13 AMAdobe MAX London 2025 delivered significant updates to Creative Cloud and Firefly, reflecting a strategic shift towards accessibility and generative AI. This analysis incorporates insights from pre-event briefings with Adobe leadership. (Note: Adob

Everything Meta Announced At LlamaConMay 02, 2025 am 11:12 AM

Everything Meta Announced At LlamaConMay 02, 2025 am 11:12 AMMeta's LlamaCon announcements showcase a comprehensive AI strategy designed to compete directly with closed AI systems like OpenAI's, while simultaneously creating new revenue streams for its open-source models. This multifaceted approach targets bo

The Brewing Controversy Over The Proposition That AI Is Nothing More Than Just Normal TechnologyMay 02, 2025 am 11:10 AM

The Brewing Controversy Over The Proposition That AI Is Nothing More Than Just Normal TechnologyMay 02, 2025 am 11:10 AMThere are serious differences in the field of artificial intelligence on this conclusion. Some insist that it is time to expose the "emperor's new clothes", while others strongly oppose the idea that artificial intelligence is just ordinary technology. Let's discuss it. An analysis of this innovative AI breakthrough is part of my ongoing Forbes column that covers the latest advancements in the field of AI, including identifying and explaining a variety of influential AI complexities (click here to view the link). Artificial intelligence as a common technology First, some basic knowledge is needed to lay the foundation for this important discussion. There is currently a large amount of research dedicated to further developing artificial intelligence. The overall goal is to achieve artificial general intelligence (AGI) and even possible artificial super intelligence (AS)

Model Citizens, Why AI Value Is The Next Business YardstickMay 02, 2025 am 11:09 AM

Model Citizens, Why AI Value Is The Next Business YardstickMay 02, 2025 am 11:09 AMThe effectiveness of a company's AI model is now a key performance indicator. Since the AI boom, generative AI has been used for everything from composing birthday invitations to writing software code. This has led to a proliferation of language mod

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

SecLists

SecLists is the ultimate security tester's companion. It is a collection of various types of lists that are frequently used during security assessments, all in one place. SecLists helps make security testing more efficient and productive by conveniently providing all the lists a security tester might need. List types include usernames, passwords, URLs, fuzzing payloads, sensitive data patterns, web shells, and more. The tester can simply pull this repository onto a new test machine and he will have access to every type of list he needs.

SublimeText3 English version

Recommended: Win version, supports code prompts!

Zend Studio 13.0.1

Powerful PHP integrated development environment

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Notepad++7.3.1

Easy-to-use and free code editor