Advanced driving simulation: Driving scene reconstruction with realistic surround data

Original title: DrivingGaussian: Composite Gaussian Splatting for Surrounding Dynamic Autonomous Driving Scenes

Paper: https://arxiv.org/pdf/2312.07920.pdf

Project page: https://pkuvdig.github.io/DrivingGaussian/

Author affiliations: Peking University; Google Research; University of California, Merced

Core idea:

This paper proposes DrivingGaussian, an efficient and effective framework for surround-view dynamic autonomous driving scenes. For complex scenes with moving objects, it first uses incremental static 3D Gaussians to model the static background of the entire scene sequentially and progressively. It then uses a composite dynamic Gaussian graph to handle multiple moving objects, reconstructing each object individually and restoring its accurate position and occlusion relationships within the scene. A LiDAR prior is further incorporated into Gaussian Splatting to reconstruct the scene in finer detail and maintain panoramic consistency. DrivingGaussian outperforms existing methods in driving scene reconstruction and enables photorealistic surround-view synthesis with high fidelity and multi-camera consistency.

Main contributions:

To the best of the authors' knowledge, DrivingGaussian is the first framework to use Composite Gaussian Splatting to represent and model large-scale dynamic driving scenes.

It introduces two novel modules: Incremental Static 3D Gaussians and a Composite Dynamic Gaussian Graph. The former reconstructs the static background incrementally, while the latter models multiple dynamic objects with a Gaussian graph. Aided by a LiDAR prior, the method recovers complete geometry in large-scale driving scenes.
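To make the incremental idea concrete, here is a minimal Python sketch. It is not the authors' implementation: it assumes, hypothetically, that frames are grouped into bins by how far the ego vehicle has driven, that each frame supplies LiDAR points already expressed in a shared world frame, and that a point becomes a new Gaussian center only if no existing Gaussian lies within a merge radius. The bin length, merge radius and all function names are illustrative, and the per-bin image optimization a real pipeline would run is omitted.

```python
import math
from dataclasses import dataclass, field


@dataclass
class StaticGaussians:
    """Running set of static-background Gaussian centers (scale, color, opacity omitted)."""
    means: list = field(default_factory=list)

    def covers(self, p, radius):
        # Brute-force test: is an existing Gaussian already within `radius` of p?
        return any(math.dist(p, q) <= radius for q in self.means)


def bin_frames_by_distance(ego_positions, bin_length):
    """Hypothetical binning: group consecutive frames into chunks of ~bin_length meters of ego travel."""
    if not ego_positions:
        return []
    bins, current, travelled = [], [0], 0.0
    for i in range(1, len(ego_positions)):
        travelled += math.dist(ego_positions[i - 1], ego_positions[i])
        if travelled >= bin_length:  # close this bin and start a new one at frame i
            bins.append(current)
            current, travelled = [], 0.0
        current.append(i)
    bins.append(current)
    return bins


def incremental_static_reconstruction(frame_points, ego_positions, bin_length=2.0, merge_radius=0.5):
    """Grow the static Gaussian set bin by bin; return the set and its size after each bin."""
    gaussians = StaticGaussians()
    sizes = []
    for frames in bin_frames_by_distance(ego_positions, bin_length):
        for f in frames:
            for p in frame_points[f]:
                if not gaussians.covers(p, merge_radius):  # only add newly observed regions
                    gaussians.means.append(p)
        # A full pipeline would also optimize the Gaussians against this bin's
        # camera images before moving on; that step is omitted here.
        sizes.append(len(gaussians.means))
    return gaussians, sizes


frames = [[(0, 0, 0)], [(0, 0, 0.1), (1, 0, 0)], [(2, 0, 0)], [(2, 0, 0.2), (3, 0, 0)]]
ego = [(0, 0, 0), (1, 0, 0), (2, 0, 0), (3, 0, 0)]
g, sizes = incremental_static_reconstruction(frames, ego)
print(sizes)  # [2, 4]
```

Each bin only adds Gaussians for regions that earlier bins have not covered, so the background grows progressively as the vehicle drives rather than being fitted in one pass.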

Comprehensive experiments show that DrivingGaussian outperforms previous methods on challenging autonomous driving benchmarks and can simulate various corner cases for downstream tasks.

Network design:

This paper introduces DrivingGaussian, a new framework for representing surround-view dynamic autonomous driving scenes. Its key idea is to model complex driving scenes hierarchically using sequential data from multiple sensors. With Composite Gaussian Splatting, the entire scene is decomposed into the static background and dynamic objects, and each part is reconstructed separately. Specifically, the composite scene is first constructed sequentially from the surround-view multi-camera images using Incremental Static 3D Gaussians. A Composite Dynamic Gaussian Graph then reconstructs each moving object individually and dynamically integrates it into the static background. On this basis, global rendering with Gaussian Splatting captures real-world occlusion relationships between the static background and the dynamic objects. In addition, a LiDAR prior is incorporated into the Gaussian representation; compared with point clouds obtained from random initialization or SfM, it recovers more accurate geometry and maintains better multi-view consistency.
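One plausible way a LiDAR prior can stand in for random or SfM initialization is sketched below in Python: every LiDAR point becomes a Gaussian center, and its initial color is read from the first camera that sees it. The pinhole model, nearest-pixel lookup, and the initial scale/opacity constants are illustrative assumptions, not details from the paper. The sketch also ignores occlusion, so a point hidden behind a closer surface could pick up the wrong color.

```python
from dataclasses import dataclass


@dataclass
class PinholeCamera:
    """Hypothetical pinhole camera with world-to-camera extrinsics (R, t)."""
    fx: float
    fy: float
    cx: float
    cy: float
    width: int
    height: int
    R: tuple  # 3x3 rotation, row-major
    t: tuple  # translation (3,)

    def project(self, p):
        """Return (u, v, depth) of world point p, or None if behind the camera or off-image."""
        xc = [sum(self.R[r][c] * p[c] for c in range(3)) + self.t[r] for r in range(3)]
        if xc[2] <= 1e-6:
            return None
        u = self.fx * xc[0] / xc[2] + self.cx
        v = self.fy * xc[1] / xc[2] + self.cy
        if not (0 <= u < self.width and 0 <= v < self.height):
            return None
        return u, v, xc[2]


def init_gaussians_from_lidar(points, cameras, images, default_color=(0.5, 0.5, 0.5),
                              init_scale=0.1, init_opacity=0.1):
    """Seed one Gaussian per LiDAR point, colored by the first camera that sees it."""
    seeds = []
    for p in points:
        color = default_color  # fallback for points no camera observes
        for cam, img in zip(cameras, images):
            hit = cam.project(p)
            if hit is not None:
                u, v, _ = hit
                color = img[int(v)][int(u)]  # nearest-pixel lookup: img[row][col]
                break
        seeds.append({"mean": tuple(p), "color": color,
                      "scale": init_scale, "opacity": init_opacity})
    return seeds


I3 = ((1, 0, 0), (0, 1, 0), (0, 0, 1))
cam = PinholeCamera(1.0, 1.0, 1.0, 1.0, 2, 2, I3, (0.0, 0.0, 0.0))
img = [[(0, 0, 0), (1, 0, 0)], [(0, 1, 0), (0, 0, 1)]]
seeds = init_gaussians_from_lidar([(0.0, 0.0, 1.0), (0.0, 0.0, -1.0)], [cam], [img])
```

Because the centers come from measured LiDAR returns rather than random samples or sparse SfM points, the optimization starts with metrically correct geometry across all surround cameras. That is the intuition behind the improved multi-view consistency claimed above.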

Extensive experiments show that the method achieves state-of-the-art performance on public autonomous driving datasets. Even without the LiDAR prior, it still performs well, demonstrating its versatility in reconstructing large-scale dynamic scenes. The framework also supports dynamic scene construction and corner-case simulation, which helps verify the safety and robustness of autonomous driving systems.

Figure 1. DrivingGaussian achieves photorealistic rendering of surround-view dynamic autonomous driving scenes. Naive methods [13, 49] either produce unpleasant artifacts and blurring in large-scale backgrounds or struggle to reconstruct dynamic objects and detailed scene geometry. DrivingGaussian is the first to introduce Composite Gaussian Splatting to effectively represent the static background and multiple dynamic objects in complex surround-view driving scenes. It enables high-quality surround-view synthesis across multiple cameras and facilitates long-term dynamic scene reconstruction.

Figure 2. Overall pipeline of the method. Left: DrivingGaussian takes sequential data from multiple sensors, including multi-camera images and LiDAR. Center: To represent large-scale dynamic driving scenes, the paper proposes Composite Gaussian Splatting, which consists of two parts: the first incrementally reconstructs the wide static background, while the second uses a Gaussian graph to construct multiple dynamic objects and dynamically integrate them into the scene. Right: DrivingGaussian performs well across multiple tasks and application scenarios.
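The graph-based integration of dynamic objects can be pictured with the minimal Python sketch below. It assumes, hypothetically, that each object node stores its Gaussians in its own local frame together with a per-timestamp object-to-world pose (a yaw angle plus a translation), and that composing the scene at time t means transforming every object present at t into the world frame and concatenating it with the static background. The class names, the yaw-only pose, and the presence rule are illustrative choices, not the paper's data structures.

```python
import math
from dataclasses import dataclass, field


@dataclass
class DynamicObjectNode:
    """A moving object whose Gaussians live in its own local (bounding-box) frame."""
    object_id: str
    local_means: list  # [(x, y, z), ...] in the object frame
    poses: dict = field(default_factory=dict)  # timestamp -> (yaw, (tx, ty, tz)), object-to-world

    def world_means(self, t):
        yaw, (tx, ty, tz) = self.poses[t]
        c, s = math.cos(yaw), math.sin(yaw)
        # Rotate about the vertical z axis, then translate into the world frame.
        return [(c * x - s * y + tx, s * x + c * y + ty, z + tz) for x, y, z in self.local_means]


@dataclass
class CompositeGaussianGraph:
    """Static background plus one node per dynamic object (hypothetical structure)."""
    static_means: list
    objects: dict = field(default_factory=dict)  # object_id -> DynamicObjectNode

    def add_object(self, node):
        self.objects[node.object_id] = node

    def compose(self, t):
        """World-space means and per-Gaussian labels for everything present at time t."""
        means = list(self.static_means)
        labels = ["static"] * len(means)
        for node in self.objects.values():
            if t in node.poses:  # an object only appears at timestamps where it has a pose
                pts = node.world_means(t)
                means.extend(pts)
                labels.extend([node.object_id] * len(pts))
        return means, labels


graph = CompositeGaussianGraph(static_means=[(0.0, 0.0, 0.0)])
graph.add_object(DynamicObjectNode("car_1", [(1.0, 0.0, 0.0)],
                                   {0: (0.0, (10.0, 0.0, 0.0)), 1: (math.pi / 2, (10.0, 5.0, 0.0))}))
means, labels = graph.compose(0)  # car_1's Gaussian sits at (11.0, 0.0, 0.0)
```

Keeping each object in its own frame means its Gaussians are reconstructed once and simply re-posed over time. Under this sketch, the corner-case simulation mentioned earlier amounts to editing the graph, for example adding a node with a hand-crafted trajectory.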

Figure 3. Composite Gaussian Splatting with Incremental Static 3D Gaussians and the Composite Dynamic Gaussian Graph. Composite Gaussian Splatting decomposes the entire scene into the static background and dynamic objects, reconstructs each part separately, and integrates them for global rendering.
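The occlusion handling in global rendering follows from the standard 3D Gaussian Splatting image-formation rule. The Gaussians covering a pixel are sorted by depth and alpha-blended front to back, C = Σ_i c_i α_i Π_{j<i}(1 − α_j). Because static and dynamic Gaussians go through one shared sort, a car in front of a building hides it automatically. The Python sketch below handles a single pixel and uses isotropic 2D footprints, which simplifies the anisotropic projected covariances of real 3DGS. The alpha clamp and early-termination threshold are illustrative values, and the splat dictionary layout is a hypothetical choice.

```python
import math


def gaussian_alpha(opacity, pixel, center2d, sigma):
    """Opacity of an isotropic 2D Gaussian footprint evaluated at `pixel`."""
    dx, dy = pixel[0] - center2d[0], pixel[1] - center2d[1]
    return opacity * math.exp(-0.5 * (dx * dx + dy * dy) / (sigma * sigma))


def composite_pixel(splats, pixel, background=(0.0, 0.0, 0.0), t_min=1e-4):
    """Front-to-back alpha compositing of splats from the static AND dynamic parts."""
    color = [0.0, 0.0, 0.0]
    transmittance = 1.0
    for sp in sorted(splats, key=lambda item: item["depth"]):  # nearest first
        a = min(0.99, gaussian_alpha(sp["opacity"], pixel, sp["center2d"], sp["sigma"]))
        for k in range(3):
            color[k] += transmittance * a * sp["color"][k]
        transmittance *= 1.0 - a
        if transmittance < t_min:  # stop once the pixel is effectively opaque
            break
    # Whatever light is left over shows the background.
    return tuple(color[k] + transmittance * background[k] for k in range(3))


car = {"depth": 5.0, "opacity": 1.0, "center2d": (0.0, 0.0), "sigma": 1.0, "color": (1.0, 0.0, 0.0)}
wall = {"depth": 20.0, "opacity": 1.0, "center2d": (0.0, 0.0), "sigma": 1.0, "color": (0.0, 0.0, 1.0)}
print(composite_pixel([wall, car], (0.0, 0.0)))  # ≈ (0.99, 0.0, 0.0099): the car occludes the wall
```

The input order does not matter, only depth does. This is why the dynamic objects can be reconstructed separately and still be merged with the background into a single, correctly occluded image.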

Experimental results:

Summary:

This paper introduces DrivingGaussian, a novel framework for representing large-scale dynamic autonomous driving scenes based on the proposed Composite Gaussian Splatting. DrivingGaussian progressively models the static background with Incremental Static 3D Gaussians and captures multiple moving objects with a Composite Dynamic Gaussian Graph. It further exploits a LiDAR prior to achieve accurate geometry and multi-view consistency. DrivingGaussian achieves state-of-the-art performance on two autonomous driving datasets, enabling high-quality surround-view synthesis and dynamic scene reconstruction.

Citation:

Zhou, X., Lin, Z., Shan, X., Wang, Y., Sun, D., & Yang, M. (2023). DrivingGaussian: Composite Gaussian Splatting for Surrounding Dynamic Autonomous Driving Scenes. arXiv preprint arXiv:2312.07920.

https://www.php.cn/link/a878dbebc902328b41dbf02aa87abb58

The above is the detailed content of Advanced driving simulation: Driving scene reconstruction with realistic surround data. For more information, please follow other related articles on the PHP Chinese website!

解读CRISP-ML(Q):机器学习生命周期流程Apr 08, 2023 pm 01:21 PM

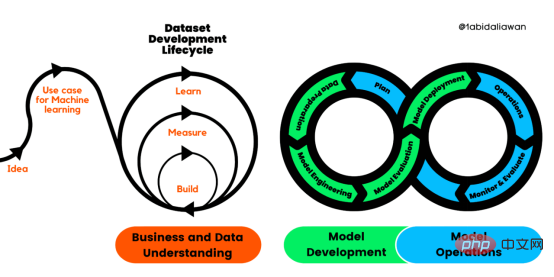

解读CRISP-ML(Q):机器学习生命周期流程Apr 08, 2023 pm 01:21 PM译者 | 布加迪审校 | 孙淑娟目前,没有用于构建和管理机器学习(ML)应用程序的标准实践。机器学习项目组织得不好,缺乏可重复性,而且从长远来看容易彻底失败。因此,我们需要一套流程来帮助自己在整个机器学习生命周期中保持质量、可持续性、稳健性和成本管理。图1. 机器学习开发生命周期流程使用质量保证方法开发机器学习应用程序的跨行业标准流程(CRISP-ML(Q))是CRISP-DM的升级版,以确保机器学习产品的质量。CRISP-ML(Q)有六个单独的阶段:1. 业务和数据理解2. 数据准备3. 模型

人工智能的环境成本和承诺Apr 08, 2023 pm 04:31 PM

人工智能的环境成本和承诺Apr 08, 2023 pm 04:31 PM人工智能(AI)在流行文化和政治分析中经常以两种极端的形式出现。它要么代表着人类智慧与科技实力相结合的未来主义乌托邦的关键,要么是迈向反乌托邦式机器崛起的第一步。学者、企业家、甚至活动家在应用人工智能应对气候变化时都采用了同样的二元思维。科技行业对人工智能在创建一个新的技术乌托邦中所扮演的角色的单一关注,掩盖了人工智能可能加剧环境退化的方式,通常是直接伤害边缘人群的方式。为了在应对气候变化的过程中充分利用人工智能技术,同时承认其大量消耗能源,引领人工智能潮流的科技公司需要探索人工智能对环境影响的

找不到中文语音预训练模型?中文版 Wav2vec 2.0和HuBERT来了Apr 08, 2023 pm 06:21 PM

找不到中文语音预训练模型?中文版 Wav2vec 2.0和HuBERT来了Apr 08, 2023 pm 06:21 PMWav2vec 2.0 [1],HuBERT [2] 和 WavLM [3] 等语音预训练模型,通过在多达上万小时的无标注语音数据(如 Libri-light )上的自监督学习,显著提升了自动语音识别(Automatic Speech Recognition, ASR),语音合成(Text-to-speech, TTS)和语音转换(Voice Conversation,VC)等语音下游任务的性能。然而这些模型都没有公开的中文版本,不便于应用在中文语音研究场景。 WenetSpeech [4] 是

条形统计图用什么呈现数据Jan 20, 2021 pm 03:31 PM

条形统计图用什么呈现数据Jan 20, 2021 pm 03:31 PM条形统计图用“直条”呈现数据。条形统计图是用一个单位长度表示一定的数量,根据数量的多少画成长短不同的直条,然后把这些直条按一定的顺序排列起来;从条形统计图中很容易看出各种数量的多少。条形统计图分为:单式条形统计图和复式条形统计图,前者只表示1个项目的数据,后者可以同时表示多个项目的数据。

自动驾驶车道线检测分类的虚拟-真实域适应方法Apr 08, 2023 pm 02:31 PM

自动驾驶车道线检测分类的虚拟-真实域适应方法Apr 08, 2023 pm 02:31 PMarXiv论文“Sim-to-Real Domain Adaptation for Lane Detection and Classification in Autonomous Driving“,2022年5月,加拿大滑铁卢大学的工作。虽然自主驾驶的监督检测和分类框架需要大型标注数据集,但光照真实模拟环境生成的合成数据推动的无监督域适应(UDA,Unsupervised Domain Adaptation)方法则是低成本、耗时更少的解决方案。本文提出对抗性鉴别和生成(adversarial d

数据通信中的信道传输速率单位是bps,它表示什么Jan 18, 2021 pm 02:58 PM

数据通信中的信道传输速率单位是bps,它表示什么Jan 18, 2021 pm 02:58 PM数据通信中的信道传输速率单位是bps,它表示“位/秒”或“比特/秒”,即数据传输速率在数值上等于每秒钟传输构成数据代码的二进制比特数,也称“比特率”。比特率表示单位时间内传送比特的数目,用于衡量数字信息的传送速度;根据每帧图像存储时所占的比特数和传输比特率,可以计算数字图像信息传输的速度。

数据分析方法有哪几种Dec 15, 2020 am 09:48 AM

数据分析方法有哪几种Dec 15, 2020 am 09:48 AM数据分析方法有4种,分别是:1、趋势分析,趋势分析一般用于核心指标的长期跟踪;2、象限分析,可依据数据的不同,将各个比较主体划分到四个象限中;3、对比分析,分为横向对比和纵向对比;4、交叉分析,主要作用就是从多个维度细分数据。

聊一聊Python 实现数据的序列化操作Apr 12, 2023 am 09:31 AM

聊一聊Python 实现数据的序列化操作Apr 12, 2023 am 09:31 AM在日常开发中,对数据进行序列化和反序列化是常见的数据操作,Python提供了两个模块方便开发者实现数据的序列化操作,即 json 模块和 pickle 模块。这两个模块主要区别如下:json 是一个文本序列化格式,而 pickle 是一个二进制序列化格式;json 是我们可以直观阅读的,而 pickle 不可以;json 是可互操作的,在 Python 系统之外广泛使用,而 pickle 则是 Python 专用的;默认情况下,json 只能表示 Python 内置类型的子集,不能表示自定义的

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

SublimeText3 English version

Recommended: Win version, supports code prompts!

MantisBT

Mantis is an easy-to-deploy web-based defect tracking tool designed to aid in product defect tracking. It requires PHP, MySQL and a web server. Check out our demo and hosting services.

mPDF

mPDF is a PHP library that can generate PDF files from UTF-8 encoded HTML. The original author, Ian Back, wrote mPDF to output PDF files "on the fly" from his website and handle different languages. It is slower than original scripts like HTML2FPDF and produces larger files when using Unicode fonts, but supports CSS styles etc. and has a lot of enhancements. Supports almost all languages, including RTL (Arabic and Hebrew) and CJK (Chinese, Japanese and Korean). Supports nested block-level elements (such as P, DIV),

SublimeText3 Mac version

God-level code editing software (SublimeText3)

SublimeText3 Linux new version

SublimeText3 Linux latest version