
CMU's detailed comparative study finds GPT-3.5 ahead of Gemini Pro, with a fair, transparent, and reproducible evaluation

- PHPz (reposted)

- 2023-12-21 08:13:38

How strong is Google Gemini really? Carnegie Mellon University has carried out a professional, objective third-party comparison.

To ensure fairness, all models use the same prompts and generation parameters, and the team provides reproducible code and fully transparent results.

Unlike Google's official launch presentation, the study does not compare CoT@32 results against 5-shot results.
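For context, CoT@32 roughly means sampling 32 chain-of-thought responses per question and aggregating them, while 5-shot means a single response conditioned on five in-context examples, so the two numbers are not directly comparable. The following is a minimal sketch of the aggregation idea using a plain majority vote. This is a simplification and an assumption: the procedure Google actually used may differ, and `majority_vote` and the sample answers are hypothetical.

```python
from collections import Counter


def majority_vote(sampled_answers):
    """Return the most frequent answer among several sampled answers."""
    counts = Counter(sampled_answers)
    return counts.most_common(1)[0][0]


# Hypothetical final answers extracted from 8 sampled reasoning chains
# (a real CoT@32 setup would use 32 samples per question).
samples = ["B", "D", "B", "B", "A", "B", "D", "B"]
consensus = majority_vote(samples)
print(consensus)
```

Aggregating many samples usually helps accuracy, which is why pairing it against a single 5-shot response from another model favors the aggregated side.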

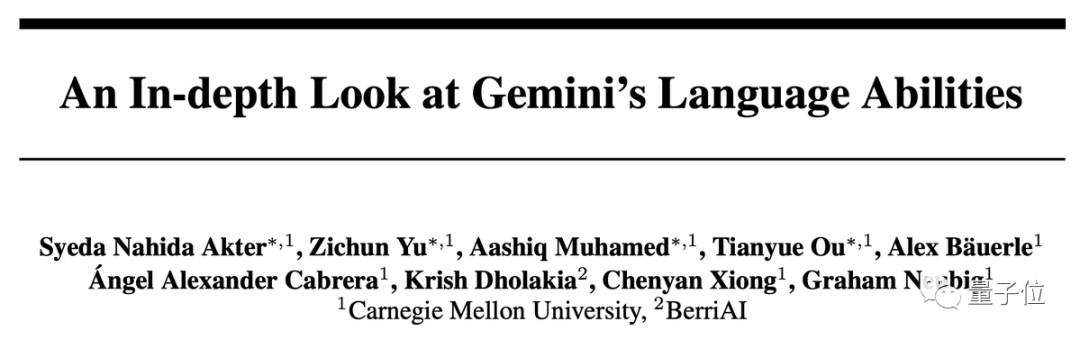

The result in one sentence: Gemini Pro is close to but slightly behind GPT-3.5 Turbo, and GPT-4 is still far ahead.

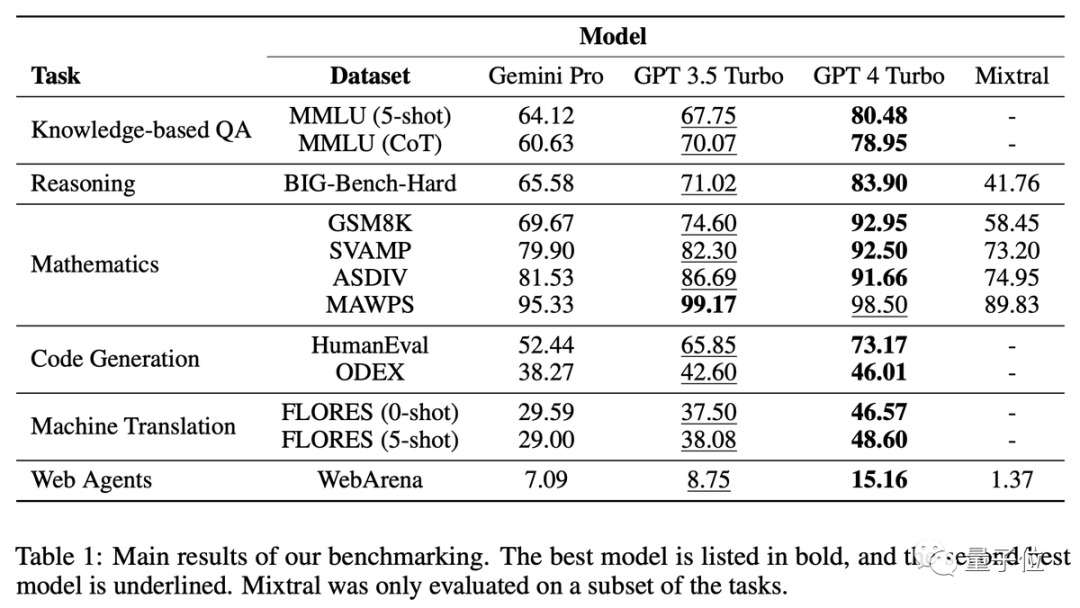

The in-depth analysis also turned up some odd characteristics of Gemini, such as a tendency to choose option D on multiple-choice questions.

Many researchers remarked that putting Gemini through such detailed testing only a few days after its release is a remarkable achievement.

In-depth testing on six major tasks

The study compares the models on 6 different tasks, with corresponding datasets selected for each task:

- Knowledge-based question answering: MMLU

- Reasoning: BIG-Bench Hard

- Math: GSM8k, SVAMP, ASDIV, MAWPS

- Code: HumanEval, ODEX

- Translation: FLORES

- Web navigation: WebArena

Knowledge question answering: a preference for option D

The results show that chain-of-thought prompting does not necessarily improve performance on this type of task.

All questions in the MMLU dataset are multiple-choice. Further analysis of the results revealed a strange phenomenon: Gemini prefers option D, whereas the GPT series distributes its answers much more evenly across the four options. The team suggested this may be because Gemini has not been heavily instruction-tuned on multiple-choice questions.
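A bias like this can be checked by counting how often a model picks each option. The sketch below is illustrative only: `option_distribution` and the prediction list are hypothetical and do not come from the study's code or data.

```python
from collections import Counter


def option_distribution(predictions, options="ABCD"):
    """Return the fraction of predictions that fall on each option."""
    total = len(predictions)
    if total == 0:
        return {opt: 0.0 for opt in options}
    counts = Counter(predictions)
    return {opt: counts.get(opt, 0) / total for opt in options}


# Hypothetical predicted letters for 8 multiple-choice questions.
preds = ["D", "A", "D", "C", "D", "B", "D", "D"]
dist = option_distribution(preds)
for opt, share in dist.items():
    print(f"{opt}: {share:.1%}")
```

If the correct answers are spread roughly evenly across the four options, a share far above 25% for one letter points to a positional bias rather than to the content of the questions.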

In addition, Gemini's safety filtering is very strict. On ethics questions it answered only 85% of the questions, and on questions related to human sexuality it answered only 28%.

The GPT series performs better on longer and more complex problems, while Gemini Pro performs comparatively poorly on them.

On long problems in particular, GPT-4 Turbo shows almost no drop in performance, which suggests a strong ability to understand complex problems. One type of problem analyzed involves people exchanging items, with the AI ultimately asked to determine which items each person owns.
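An analysis of this kind can be approximated by grouping questions into length buckets and computing accuracy per bucket. The sketch below assumes character-count buckets and made-up records; the study's actual bucketing and data are not reproduced here, and `accuracy_by_length` is a hypothetical helper.

```python
from collections import defaultdict


def accuracy_by_length(records, bucket_size=100):
    """Compute accuracy per question-length bucket.

    records: iterable of (question_text, is_correct) pairs.
    Returns a dict mapping each bucket's lower bound (in characters)
    to the accuracy of the questions that fall into that bucket.
    """
    totals = defaultdict(int)
    correct = defaultdict(int)
    for question, is_correct in records:
        bucket = (len(question) // bucket_size) * bucket_size
        totals[bucket] += 1
        correct[bucket] += int(is_correct)
    return {b: correct[b] / totals[b] for b in sorted(totals)}


# Hypothetical records: question text (dummy strings) and correctness.
records = [
    ("x" * 50, True),
    ("x" * 80, True),
    ("x" * 150, True),
    ("x" * 180, False),
    ("x" * 320, False),
]
acc = accuracy_by_length(records)
print(acc)
```

A model that handles long inputs well would show roughly flat accuracy across buckets, while a model that struggles would show accuracy falling as the bucket's lower bound grows.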


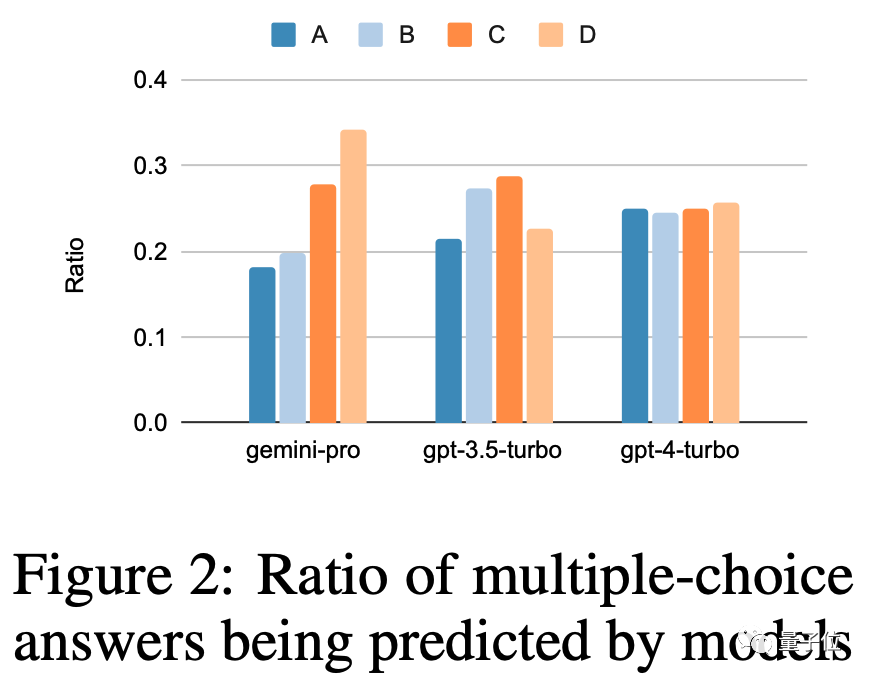

Tasks where Gemini excels include those requiring world knowledge about sports, manipulating symbol stacks, sorting words alphabetically, and parsing tables.

The team also noted several limitations of the study:

- The behavior of API-based models may change at any time

- Only a limited number of prompts were tried, and the prompts that work best may differ from model to model

- There is no way to check whether the test sets have leaked into the training data

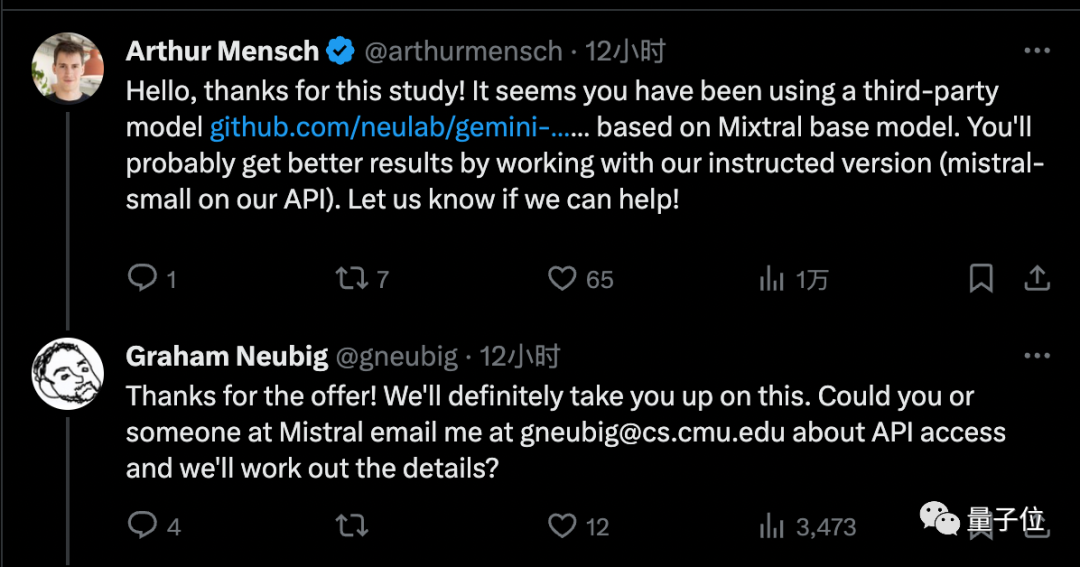

The founder of Mistral AI has offered the team access to the official version, which he believes will produce better results.

Although Gemini Pro is not as good as GPT-3.5, its advantage is that it can be used for free as long as usage does not exceed 60 calls per minute.
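To stay within a limit like this, a client can throttle its own requests. Below is a minimal sliding-window sketch, assuming the limit is counted over any rolling 60-second window (the article does not say how the quota is measured). `RateLimiter` is a hypothetical helper, not part of any official SDK, and the API call itself is left out.

```python
import time
from collections import deque


class RateLimiter:
    """Sliding-window limiter: at most max_calls per period seconds."""

    def __init__(self, max_calls=60, period=60.0,
                 clock=time.monotonic, sleep=time.sleep):
        self.max_calls = max_calls
        self.period = period
        self.clock = clock    # injectable so the limiter can be tested
        self.sleep = sleep
        self.calls = deque()  # timestamps of calls inside the window

    def wait(self):
        """Block until another call is allowed, then record it."""
        while True:
            now = self.clock()
            # Drop timestamps that have left the window.
            while self.calls and now - self.calls[0] >= self.period:
                self.calls.popleft()
            if len(self.calls) < self.max_calls:
                self.calls.append(now)
                return
            # Sleep until the oldest call leaves the window.
            self.sleep(self.period - (now - self.calls[0]))


limiter = RateLimiter(max_calls=60, period=60.0)
limiter.wait()  # call this before each API request
```

Calling `limiter.wait()` before every request keeps the client at or below 60 calls in any 60-second window, at the cost of blocking when the quota is used up.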

As a result, many individual developers have switched camps.

Gemini's top-tier Ultra version has not yet been released, and the CMU team plans to continue this research once it is. Do you think Gemini Ultra can reach the level of GPT-4?

The paper covered in this article: https://arxiv.org/abs/2312.11444

Reference link:

[1] https://twitter.com/gneubig/status/1737108977954251216

