How to implement an online speech recognition system using WebSocket and JavaScript

Introduction:

With the continuous development of technology, speech recognition has become an important part of artificial intelligence. Online speech recognition systems built on WebSocket and JavaScript are a widely used solution: they run in the browser on any platform, and a persistent WebSocket connection lets results flow back with low latency while the user is still speaking. This article shows how to implement such a system with WebSocket and JavaScript, and provides concrete code examples to help readers understand and apply the technique.

1. Introduction to WebSocket:

WebSocket is a protocol for full-duplex communication over a single TCP connection, allowing the client and the server to exchange data in real time. The connection starts as an HTTP request that is upgraded to WebSocket; after that, either side can send a message at any time without opening a new request. This avoids the latency and wasted requests of HTTP long polling, where the client must repeatedly ask the server whether new data is available. WebSocket is therefore well suited to applications with strict real-time requirements.
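A WebSocket connection begins with an HTTP handshake: the client sends a random Sec-WebSocket-Key header, and the server shows that it understands the protocol by answering with a Sec-WebSocket-Accept value derived from that key. The Node.js sketch below computes that value as RFC 6455 specifies (SHA-1 over the key concatenated with a fixed GUID, then base64). Browsers and WebSocket libraries do this automatically; it is shown only to illustrate how the upgrade works, and computeAcceptKey is a hypothetical helper name.

```javascript
// Node.js sketch: derive the Sec-WebSocket-Accept header from the client's key (RFC 6455)
const crypto = require("crypto");

// Fixed GUID defined by RFC 6455; the server appends it to the client's key
const WS_MAGIC_GUID = "258EAFA5-E914-47DA-95CA-C5AB0DC85B11";

function computeAcceptKey(secWebSocketKey) {
  // SHA-1 over key + GUID, then base64-encode the 20-byte digest
  return crypto
    .createHash("sha1")
    .update(secWebSocketKey + WS_MAGIC_GUID)
    .digest("base64");
}

// Sample key taken from RFC 6455
console.log(computeAcceptKey("dGhlIHNhbXBsZSBub25jZQ=="));
// → s3pPLMBiTxaQ9kYGzzhZRbK+xOo=
```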

2. Overview of speech recognition technology:

Speech recognition is the process by which a computer converts human speech into text or commands it can act on. It is an important research direction in natural language processing and artificial intelligence, and is widely used in intelligent assistants, voice interaction systems, speech transcription, and other fields. Many speech recognition engines and APIs are available, such as the browser-based Web Speech API (which Chrome implements using Google's online recognition service) and the open-source CMU Sphinx toolkit. An online speech recognition system can be built on top of engines like these.

3. Online speech recognition system implementation steps:

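3.1 Create a WebSocket connection:

In JavaScript, use the WebSocket API to open a connection to the server. Pages served over HTTPS must use a wss:// address, because browsers block insecure WebSocket connections from secure pages. For example:

```javascript
// Adjust the address to match where your server actually runs
var socket = new WebSocket("ws://localhost:8080");
```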
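Browsers throw an InvalidStateError if send() is called while the socket is still connecting, which is easy to hit when the first speech results arrive right after the page loads. One way to handle this, sketched here as an illustration rather than part of the original design, is to queue messages until the open event fires. createQueuedSender is a hypothetical helper name, and the numeric constants mirror WebSocket.CONNECTING and WebSocket.OPEN so the function can also be exercised outside a browser.

```javascript
// Values of WebSocket.CONNECTING and WebSocket.OPEN from the WebSocket API
const CONNECTING = 0;
const OPEN = 1;

// Hypothetical helper: returns a send function that queues messages while the
// socket is still connecting and flushes them once the "open" event fires.
function createQueuedSender(socket) {
  const pending = [];
  socket.addEventListener("open", () => {
    // Deliver everything queued while the handshake was in progress
    while (pending.length > 0) socket.send(pending.shift());
  });
  return function send(message) {
    if (socket.readyState === OPEN) {
      socket.send(message);
      return true;
    }
    if (socket.readyState === CONNECTING) {
      pending.push(message);
      return true;
    }
    return false; // CLOSING or CLOSED: the message is dropped
  };
}

// In a browser:
// const send = createQueuedSender(new WebSocket("ws://localhost:8080"));
// send("hello"); // safe even before the connection is open
```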

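3.2 Initialize the speech recognition engine:

Choose a speech recognition engine that fits your needs and initialize it. This example uses the Web Speech API, which Chromium-based browsers expose under the prefixed name webkitSpeechRecognition:

```javascript
// Use the standard name where available, otherwise the WebKit-prefixed one
var SpeechRecognition = window.SpeechRecognition || window.webkitSpeechRecognition;
var recognition = new SpeechRecognition();
recognition.continuous = true;     // continuous recognition mode
recognition.interimResults = true; // also return interim (non-final) results
recognition.lang = 'zh-CN';        // recognize Mandarin Chinese
```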
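The recognition constructor is not available in every browser: Chromium-based browsers expose it as webkitSpeechRecognition, while the specification names it SpeechRecognition. A minimal sketch that picks whichever exists, fails clearly when neither does, and applies the same settings as above might look like this. getRecognitionConstructor and createRecognizer are hypothetical helper names; the global object is passed in as scope only so the logic can be tested without a browser.

```javascript
// Hypothetical helper: return the available recognition constructor, or null
function getRecognitionConstructor(scope) {
  return scope.SpeechRecognition || scope.webkitSpeechRecognition || null;
}

// Hypothetical helper: create and configure a recognizer for the given language
function createRecognizer(scope, lang) {
  const Recognition = getRecognitionConstructor(scope);
  if (Recognition === null) {
    throw new Error("Speech recognition is not supported in this browser");
  }
  const recognition = new Recognition();
  recognition.continuous = true;     // keep listening across pauses
  recognition.interimResults = true; // also deliver not-yet-final results
  recognition.lang = lang;           // BCP 47 language tag, e.g. "zh-CN" or "en-US"
  return recognition;
}

// In a browser: const recognition = createRecognizer(window, "zh-CN");
```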

3.3 Process the speech recognition results:

Handle the recognition results returned by the server in the WebSocket onmessage callback. For example:

```javascript
socket.onmessage = function(event) {
  var transcript = event.data; // the recognition result sent by the server
  console.log("Recognition result: " + transcript);
  // Act on the result as needed, e.g. display it on the page or pass it on for further processing
};
```
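The handler above treats every message as plain text. If the server also needs to distinguish interim from final results, client and server must agree on a message format, because WebSocket itself only carries text or binary frames. The sketch below assumes a hypothetical JSON format, {"type": "interim" | "final", "text": "..."}, and falls back to plain text so it stays compatible with a server that simply sends the transcript. parseRecognitionMessage is a hypothetical name.

```javascript
// Hypothetical parser for messages from the recognition server.
// Accepts either a plain-text transcript or JSON like {"type": "interim", "text": "..."}.
function parseRecognitionMessage(data) {
  if (typeof data !== "string") {
    // Binary frames (Blob/ArrayBuffer) are not transcripts in this format
    return { type: "binary", text: "" };
  }
  try {
    const msg = JSON.parse(data);
    if (msg && typeof msg.text === "string") {
      return { type: msg.type === "interim" ? "interim" : "final", text: msg.text };
    }
  } catch (e) {
    // Not valid JSON: fall through and treat the frame as a plain transcript
  }
  return { type: "final", text: data };
}

// In a browser:
// socket.onmessage = (event) => {
//   const result = parseRecognitionMessage(event.data);
//   if (result.type === "final") console.log("Recognition result: " + result.text);
// };
```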

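3.4 Start speech recognition:

Call recognition.start() to begin recognizing speech, and send each final transcript to the server over the WebSocket. With the Web Speech API, recognition itself is performed by the browser's engine, so what travels over the WebSocket here is recognized text rather than raw audio. For example:

```javascript
recognition.onstart = function() {
  console.log("Speech recognition started");
};

recognition.onresult = function(event) {
  var interim_transcript = ''; // not-yet-final text, e.g. for live display
  // Results before event.resultIndex were already handled by earlier events
  for (var i = event.resultIndex; i < event.results.length; ++i) {
    if (event.results[i].isFinal) {
      var final_transcript = event.results[i][0].transcript;
      // Send the final result to the server once the connection is open
      if (socket.readyState === WebSocket.OPEN) {
        socket.send(final_transcript);
      }
    } else {
      interim_transcript += event.results[i][0].transcript;
    }
  }
};

recognition.start();
```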
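The loop inside onresult can be pulled out into a plain function, which makes it easier to test and reuse. This sketch relies only on the structure the Web Speech API gives event.results: each entry has an isFinal flag and a list of alternatives whose first element carries the transcript. collectTranscripts is a hypothetical name.

```javascript
// Hypothetical helper: split recognition results into final and interim text.
// results mirrors event.results; resultIndex mirrors event.resultIndex.
function collectTranscripts(results, resultIndex) {
  let finalText = "";
  let interimText = "";
  // Entries before resultIndex were already reported by earlier events
  for (let i = resultIndex; i < results.length; ++i) {
    const best = results[i][0].transcript; // first (most likely) alternative
    if (results[i].isFinal) {
      finalText += best;
    } else {
      interimText += best;
    }
  }
  return { finalText, interimText };
}

// In a browser, with the socket created earlier:
// recognition.onresult = (event) => {
//   const { finalText, interimText } = collectTranscripts(event.results, event.resultIndex);
//   if (finalText && socket.readyState === WebSocket.OPEN) socket.send(finalText);
//   console.log("interim:", interimText);
// };
```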

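3.5 Server-side processing:

On the server, data received from the client can be passed to a speech recognition engine, and the result sent back to the client. Flask does not handle WebSocket connections on its own (a plain HTTP POST route cannot accept the ws:// connection the client opens), so this Python example assumes the flask-sock extension. speech_recognition_engine is a placeholder for whichever engine you use:

```python
from flask import Flask
from flask_sock import Sock  # flask-sock extension: adds WebSocket routes to Flask

app = Flask(__name__)
sock = Sock(app)

def speech_recognition_engine(audio_data):
    # Placeholder: call a real recognition engine (e.g. CMU Sphinx) here
    raise NotImplementedError

@sock.route('/')
def transcribe(ws):
    while True:
        data = ws.receive()  # str for text frames, bytes for binary frames
        if isinstance(data, bytes):
            transcript = speech_recognition_engine(data)  # raw audio from the client
        else:
            transcript = data  # text already recognized in the browser
        ws.send(transcript)

if __name__ == '__main__':
    app.run(host='0.0.0.0', port=8080)
```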
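The browser's Web Speech API never exposes raw audio, so a server that runs its own engine needs the client to capture and stream audio itself, for example through the Web Audio API, which delivers samples as 32-bit floats in the range [-1, 1]. Many recognition engines expect 16-bit signed PCM instead; treat that as an assumption to check against your engine's documentation. A minimal conversion sketch, with floatTo16BitPCM as a hypothetical helper name:

```javascript
// Hypothetical helper: convert Web Audio Float32 samples to 16-bit signed PCM
function floatTo16BitPCM(float32Samples) {
  const pcm = new Int16Array(float32Samples.length);
  for (let i = 0; i < float32Samples.length; i++) {
    // Clamp to [-1, 1] so out-of-range samples don't wrap around
    const s = Math.max(-1, Math.min(1, float32Samples[i]));
    // Scale asymmetrically: -1 maps to -32768, 1 maps to 32767
    // (assigning into Int16Array truncates the fractional part)
    pcm[i] = s < 0 ? s * 0x8000 : s * 0x7fff;
  }
  return pcm;
}

// The underlying buffer uses the platform's byte order (little-endian on typical
// hardware) and can be sent as a binary frame:
// socket.send(floatTo16BitPCM(samples).buffer);
```

On the server side, such a message arrives as a binary frame rather than text.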

Summary:

This article showed how to implement an online speech recognition system with WebSocket and JavaScript, and provided concrete code examples. By keeping a WebSocket connection open to the server and feeding speech to a suitable recognition engine, you can build a low-latency, real-time online speech recognition system. I hope this article helps readers understand and apply this technology.

The above is the detailed content of How to implement an online speech recognition system using WebSocket and JavaScript. For more information, please follow other related articles on the PHP Chinese website!

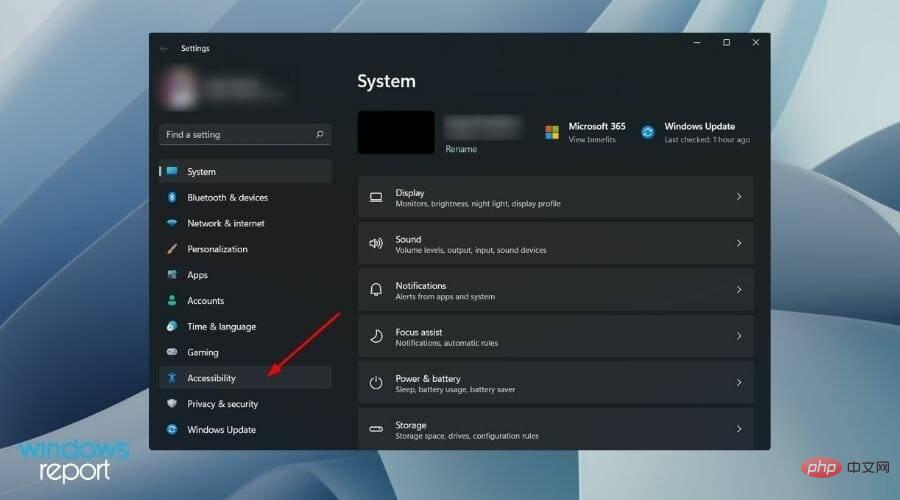

如何在 Windows 11 中禁用语音识别May 01, 2023 am 09:13 AM

如何在 Windows 11 中禁用语音识别May 01, 2023 am 09:13 AM<p>微软最新的操作系统Windows11也提供了与Windows10中类似的语音识别选项。</p><p>值得注意的是,您可以离线使用语音识别或通过互联网连接使用它。语音识别使您可以使用语音控制某些应用程序,还可以将文本口述到Word文档中。</p><p>Microsoft的语音识别服务并未为您提供一整套功能。有兴趣的用户可以查看我们的一些最佳语音识别应用程

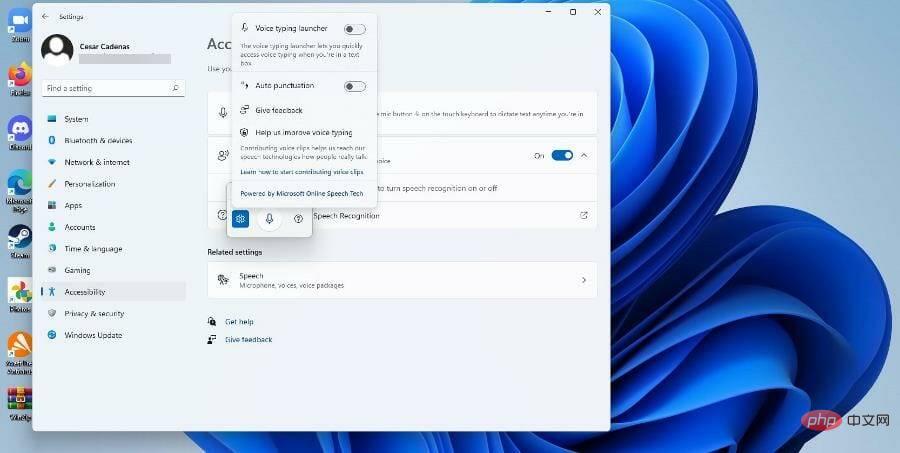

如何在 Windows 11 上使用文本转语音和语音识别技术?Apr 24, 2023 pm 03:28 PM

如何在 Windows 11 上使用文本转语音和语音识别技术?Apr 24, 2023 pm 03:28 PM与Windows10一样,Windows11计算机具有文本转语音功能。也称为TTS,文本转语音允许您用自己的声音书写。当您对着麦克风讲话时,计算机会结合文本识别和语音合成在屏幕上写出文本。如果您在阅读或写作时遇到困难,这是一个很好的工具,因为您可以在说话时执行意识流。你可以用这个方便的工具克服作家的障碍。如果您想为视频生成画外音脚本、检查某些单词的发音或通过Microsoft讲述人大声听到文本,TTS也可以为您提供帮助。此外,该软件擅长添加适当的标点符号,因此您也可以学习良好的语法。语音

使用OpenAI的Whisper 模型进行语音识别Apr 12, 2023 pm 05:28 PM

使用OpenAI的Whisper 模型进行语音识别Apr 12, 2023 pm 05:28 PM语音识别是人工智能中的一个领域,它允许计算机理解人类语音并将其转换为文本。该技术用于 Alexa 和各种聊天机器人应用程序等设备。而我们最常见的就是语音转录,语音转录可以语音转换为文字记录或字幕。wav2vec2、Conformer 和 Hubert 等最先进模型的最新发展极大地推动了语音识别领域的发展。这些模型采用无需人工标记数据即可从原始音频中学习的技术,从而使它们能够有效地使用未标记语音的大型数据集。它们还被扩展为使用多达 1,000,000 小时的训练数据,远远超过学术监督数据集中使用的

Web Speech API开发者指南:它是什么以及如何工作Apr 11, 2023 pm 07:22 PM

Web Speech API开发者指南:它是什么以及如何工作Apr 11, 2023 pm 07:22 PM译者 | 李睿审校 | 孙淑娟Web Speech API是一种Web技术,允许用户将语音数据合并到应用程序中。它可以通过浏览器将语音转换为文本,反之亦然。Web Speech API于2012年由W3C社区引入。而在十年之后,这个API仍在开发中,这是因为浏览器兼容性有限。该API既支持短时输入片段,例如一个口头命令,也支持长时连续的输入。广泛的听写能力使它非常适合与Applause应用程序集成,而简短的输入很适合语言翻译。语音识别对可访问性产生了巨大的影响。残疾用户可以使用语音更轻松地浏览

Java语言中的语音识别应用开发介绍Jun 10, 2023 am 10:16 AM

Java语言中的语音识别应用开发介绍Jun 10, 2023 am 10:16 AMJava语言作为目前最为流行的编程语言之一,其在各种应用开发领域中都有着广泛的应用。其中,语音识别应用是近年来备受瞩目的一个领域,尤其是在智能家居、智能客服、语音助手等领域中,语音识别应用已经变得不可或缺。本文将为读者介绍如何使用Java语言进行语音识别应用的开发。一、Java语音识别技术分类Java语音识别技术可以分为两种:一种是使用Java语言封装的第三

PHP实现语音识别功能Jun 22, 2023 am 08:59 AM

PHP实现语音识别功能Jun 22, 2023 am 08:59 AMPHP实现语音识别功能语音识别是一种将语音信号转换成相应文本或命令的技术,在现代信息化时代被广泛应用。PHP作为一种常用的Web编程语言,也可以通过多种方式来实现语音识别功能,例如使用开源工具库或API接口等。本文将介绍使用PHP来实现语音识别的基本方法,同时还提供了几个常用的工具库和API接口,方便读者在实际开发中选择合适的解决方案。一、PHP语音识别的基

Pytorch创建多任务学习模型Apr 09, 2023 pm 09:41 PM

Pytorch创建多任务学习模型Apr 09, 2023 pm 09:41 PMMTL最著名的例子可能是特斯拉的自动驾驶系统。在自动驾驶中需要同时处理大量任务,如物体检测、深度估计、3D重建、视频分析、跟踪等,你可能认为需要10个以上的深度学习模型,但事实并非如此。HydraNet介绍一般来说多任务学的模型架构非常简单:一个骨干网络作为特征的提取,然后针对不同的任务创建多个头。利用单一模型解决多个任务。上图可以看到,特征提取模型提取图像特征。输出最后被分割成多个头,每个头负责一个特定的情况,由于它们彼此独立可以单独进行微调!特斯拉的讲演中详细的说明这个模型(youtube:

PHP和机器学习:如何进行语音识别与语音合成Jul 28, 2023 pm 09:46 PM

PHP和机器学习:如何进行语音识别与语音合成Jul 28, 2023 pm 09:46 PMPHP和机器学习:如何进行语音识别与语音合成引言:随着机器学习和人工智能的迅猛发展,语音识别和语音合成已经成为了生活中一个重要的技术应用。在PHP中,我们也可以利用机器学习的能力,实现语音识别和语音合成的功能。本文将介绍如何利用PHP进行简单的语音识别与语音合成,并提供相关的代码示例。一、语音识别1.准备工作在进行语音识别之前,我们需要安装相关的扩展和依赖包

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Dreamweaver Mac version

Visual web development tools

SublimeText3 Chinese version

Chinese version, very easy to use

SAP NetWeaver Server Adapter for Eclipse

Integrate Eclipse with SAP NetWeaver application server.

Safe Exam Browser

Safe Exam Browser is a secure browser environment for taking online exams securely. This software turns any computer into a secure workstation. It controls access to any utility and prevents students from using unauthorized resources.

VSCode Windows 64-bit Download

A free and powerful IDE editor launched by Microsoft