
AAAI2024: Far3D - an innovative approach that extends vision-only 3D object detection directly to 150 m

- Forwarded by PHPz

- 2023-12-15 13:54:53

I recently read a study on arXiv about vision-only surround-view perception. Building on the PETR family of methods, it tackles long-range object detection with cameras alone and extends the perception range to 150 meters. Its methods and results are highly instructive, so I have tried to summarize them here.

Original title: Far3D: Expanding the Horizon for Surround-view 3D Object Detection

Paper link: https://arxiv.org/abs/2308.09616

Author affiliation: Beijing Institute of Technology & Megvii Technology

Task Background

3D object detection is central to scene understanding in autonomous driving: its goal is to accurately localize and classify the objects around the vehicle. Vision-only surround-view methods are low-cost and widely applicable, and they have made significant progress. However, most of them focus on short-range perception (for example, nuScenes covers roughly 50 meters), and long-range detection remains underexplored. Detecting distant objects is critical for keeping a safe distance in real driving, especially at high speed or in complex road conditions.

Surround-view 3D detection has advanced rapidly and can be deployed at low cost, yet most studies target short ranges and few address long-range detection. Directly extending existing methods to long distances runs into challenges such as high computational cost and unstable convergence. To address these limitations, the paper proposes Far3D, a novel sparse query-based framework.

Core Idea

Based on their intermediate representation, existing surround-view methods fall roughly into two categories: BEV-based methods and sparse query-based methods. BEV-based methods must compute dense BEV features, which is very expensive and makes them hard to extend to long-range scenarios. Sparse query-based methods learn global 3D queries from the training data; they are comparatively cheap and scale well. They have weaknesses, however: although they avoid the quadratic growth in the number of queries, globally fixed queries do not adapt well to dynamic scenes, and targets are often missed in long-range detection.
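To make the "quadratic growth" point concrete, here is a small back-of-the-envelope sketch. It assumes a square BEV grid centred on the ego vehicle with a fixed 0.5 m cell size; both the grid shape and the cell size are illustrative assumptions, not values from the paper, and `bev_cells` is a hypothetical helper.

```python
def bev_cells(perception_range_m: float, cell_size_m: float = 0.5) -> int:
    """Cells in a square BEV grid covering [-R, R] x [-R, R] around the ego vehicle.

    The 0.5 m default cell size is a hypothetical choice for illustration only.
    """
    cells_per_side = round(2 * perception_range_m / cell_size_m)
    return cells_per_side * cells_per_side


base = bev_cells(50)
for r in (50, 100, 150):
    cells = bev_cells(r)
    # The grid grows with the square of the range.
    print(f"range {r} m: {cells} BEV cells ({cells / base:.0f}x the 50 m grid)")
```

Tripling the range from 50 m to 150 m multiplies the grid from 40,000 to 360,000 cells (9x), whereas a sparse query set has no dense grid that must grow with range.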

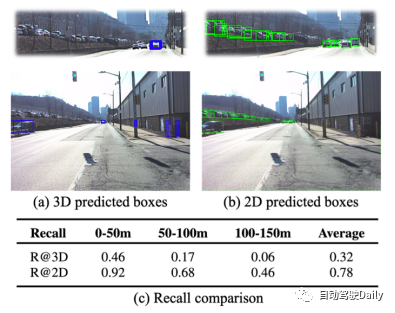

Figure 1: Performance comparison of 3D detection and 2D detection on the Argoverse 2 dataset.

In long-range detection, sparse query-based methods face two main challenges.

- The first is poor recall. Because queries are sparsely distributed in 3D space, only a few matched positive queries are generated at long range. As shown in the figure above, the recall of 3D detection is low while that of existing 2D detection is much higher, leaving a clear gap between the two. Using high-quality 2D object priors to improve the 3D queries is therefore a promising direction, as it helps achieve precise localization and comprehensive coverage of objects.

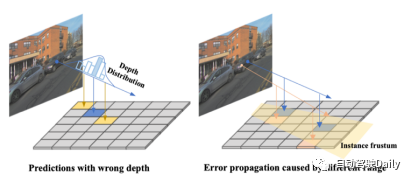

- The second is error propagation when 2D detection results are fed directly into 3D detection. As shown in the figure below, there are two main sources: 1) object localization errors caused by inaccurate depth prediction; 2) 3D position errors from the frustum transformation, which grow with distance. These noisy queries destabilize training and call for an effective denoising method. In addition, during training the model tends to overfit to densely packed nearby objects while neglecting sparsely distributed distant ones.
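Why the frustum error grows with distance can be seen from pinhole back-projection, x = (u - c_x) · z / f. The sketch below assumes a focal length of 1000 px, a 2-pixel error in the 2D box centre, and a 5% relative depth error; all three numbers are hypothetical and only meant to show the trend.

```python
# Hypothetical camera and error model; none of these values come from the paper.
FOCAL_PX = 1000.0      # assumed focal length in pixels
PIXEL_ERR = 2.0        # assumed error of the 2D box centre, in pixels
REL_DEPTH_ERR = 0.05   # assumed 5% relative depth error


def lateral_error_m(depth_m: float, pixel_err: float = PIXEL_ERR,
                    focal_px: float = FOCAL_PX) -> float:
    """Back-projection x = (u - cx) * z / f turns a pixel error du into z * du / f metres."""
    return depth_m * pixel_err / focal_px


def depth_error_m(depth_m: float, rel_err: float = REL_DEPTH_ERR) -> float:
    """A relative depth error becomes an absolute error proportional to depth."""
    return depth_m * rel_err


for z in (10.0, 50.0, 150.0):
    print(f"depth {z:.0f} m: lateral error {lateral_error_m(z):.2f} m, "
          f"depth error {depth_error_m(z):.2f} m")
```

Under these assumptions the same 2D and depth errors yield 0.02 m lateral and 0.5 m depth error at 10 m, but 0.3 m and 7.5 m at 150 m, which is why lifted queries for distant objects are much noisier.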

To address these problems, the paper adopts the following designs:

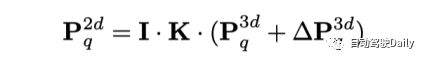

- In addition to the 3D global queries learned from the dataset, 3D adaptive queries generated from 2D detection results are introduced. Specifically, a 2D detector and a depth prediction network first produce 2D boxes and their corresponding depths, which are then lifted into 3D space via a spatial transformation to initialize the 3D adaptive queries.

- To handle the different scales of objects at different distances, Perspective-aware Aggregation is designed. It lets each 3D query interact with features at multiple scales, which helps capture objects at different distances: for example, distant objects need high-resolution features, while nearby objects call for features at other scales. This design allows the model to choose adaptively which features to interact with.

- A strategy called Range-modulated 3D Denoising is designed to mitigate query error propagation and slow convergence. Because queries at different distances differ in regression difficulty, the noise added to queries is adjusted according to the distance and scale of the ground-truth box. Multiple groups of noisy queries around the GT are fed into the decoder, which learns to reconstruct the true 3D box from the positive samples and to discard the negative samples.

Main contributions

- The paper proposes a new sparse query-based detection framework that uses high-quality 2D object priors to generate 3D adaptive queries, thereby extending the perception range of 3D detection.

- It designs a Perspective-aware Aggregation module, which aggregates visual features across scales and views, and a range-modulated 3D Denoising strategy, which addresses query error propagation and convergence problems.

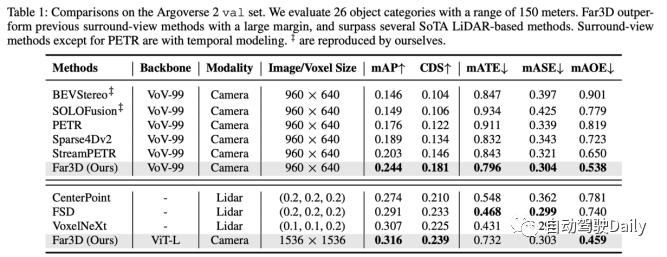

- Experiments on the long-range Argoverse 2 dataset show that Far3D surpasses previous surround-view methods and outperforms several LiDAR-based methods. Its generality is further verified on the nuScenes dataset.

Model design

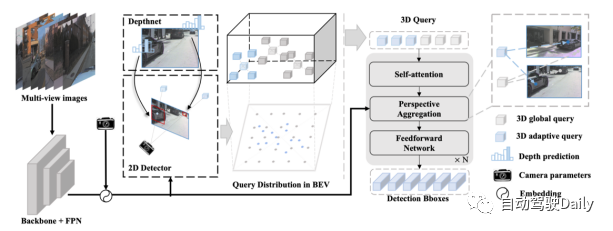

Overview of the Far3D pipeline:

- The surround-view images are fed into the backbone network and FPN to extract 2D image features, which are then encoded together with the camera parameters.

- A 2D detector and a depth prediction network generate reliable 2D object boxes and their corresponding depths, which are then projected into 3D space through camera transformations.

- The generated 3D adaptive queries are combined with the initial 3D global queries and iteratively refined by the decoder layers to predict 3D bounding boxes. In addition, the model supports temporal modeling through long-term query propagation.
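The lifting step (2D box plus depth to a 3D query position) can be sketched with plain pinhole geometry. This is a minimal illustration, not Far3D's code: `Box2D`, `lift_to_ego`, and `build_queries` are hypothetical names, the depth is taken as given rather than predicted, global queries are represented only by fixed reference points, and the camera mounting (a forward-facing camera 1.5 m above the ego origin) is an assumption.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Box2D:
    """A 2D detection (pixel corners) plus the predicted depth of its centre."""
    u1: float
    v1: float
    u2: float
    v2: float
    depth: float  # metres along the camera's optical axis


def lift_to_ego(box: Box2D, intrinsics: Tuple[float, float, float, float],
                cam_to_ego_R: Sequence[Sequence[float]], cam_to_ego_t: Vec3) -> Vec3:
    """Back-project the box centre with its depth and move it into the ego frame."""
    fx, fy, cx, cy = intrinsics
    u = 0.5 * (box.u1 + box.u2)
    v = 0.5 * (box.v1 + box.v2)
    # Camera frame convention assumed here: x right, y down, z forward.
    p_cam = ((u - cx) * box.depth / fx, (v - cy) * box.depth / fy, box.depth)
    return tuple(
        sum(cam_to_ego_R[i][j] * p_cam[j] for j in range(3)) + cam_to_ego_t[i]
        for i in range(3)
    )


def build_queries(global_refs: Sequence[Vec3], boxes: Sequence[Box2D], intrinsics,
                  cam_to_ego_R, cam_to_ego_t) -> List[Vec3]:
    """Adaptive query reference points (from 2D priors) followed by the global ones."""
    adaptive = [lift_to_ego(b, intrinsics, cam_to_ego_R, cam_to_ego_t) for b in boxes]
    return adaptive + list(global_refs)


# Hypothetical front camera 1.5 m above the ego origin (ego frame: x forward, y left, z up).
K = (1000.0, 1000.0, 960.0, 540.0)
R_CAM_TO_EGO = ((0.0, 0.0, 1.0), (-1.0, 0.0, 0.0), (0.0, -1.0, 0.0))
T_CAM_TO_EGO = (0.0, 0.0, 1.5)

detections = [Box2D(910, 490, 1010, 590, depth=100.0),
              Box2D(1010, 490, 1110, 590, depth=100.0)]
queries = build_queries([(10.0, 0.0, 0.0)], detections, K, R_CAM_TO_EGO, T_CAM_TO_EGO)
print(queries)
```

The first detection sits at the image centre and lands 100 m straight ahead; the second is 100 px to the right and lands 10 m to the right of it. In the real model these positions only initialize the queries, which the decoder then refines.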

Perspective-aware Aggregation:

To bring multi-scale features into the long-range detection model, the paper applies 3D spatial deformable attention. It first samples offset points around the 3D position corresponding to each query, then aggregates image features through a 3D-to-2D view transformation. Compared with the global attention used in the PETR series, this significantly reduces computational complexity. Specifically, for each query's reference point in 3D space, the model learns M sampling offsets around it and projects these offset points onto the 2D feature maps of the different views.

The 3D query then interacts with the projected sampled features. In this way, features from different views and scales are aggregated into each 3D query, weighted by their relative importance.
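The sampling-and-aggregation idea can be sketched as follows. This is a deliberately simplified, hypothetical version: the M offsets and the attention logits are passed in rather than predicted from the query, sampling is nearest-neighbour instead of bilinear, there is a single attention head, and the weights are a softmax over all valid (point, view, level) samples. `Camera` and `aggregate` are illustrative names, not the paper's API.

```python
import math
from typing import List, Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]
FeatureMap = List[List[List[float]]]  # [row][col] -> feature vector


class Camera:
    """Hypothetical pinhole camera with an ego->camera rigid transform."""

    def __init__(self, fx: float, fy: float, cx: float, cy: float,
                 R: Sequence[Sequence[float]], t: Vec3, width: int, height: int):
        self.fx, self.fy, self.cx, self.cy = fx, fy, cx, cy
        self.R, self.t = R, t
        self.width, self.height = width, height

    def project(self, p: Vec3) -> Optional[Tuple[float, float]]:
        """Project an ego-frame point to pixels; None if behind or outside the image."""
        xc, yc, zc = (sum(self.R[i][j] * p[j] for j in range(3)) + self.t[i]
                      for i in range(3))
        if zc <= 1e-3:
            return None
        u = self.fx * xc / zc + self.cx
        v = self.fy * yc / zc + self.cy
        if 0 <= u < self.width and 0 <= v < self.height:
            return u, v
        return None


def aggregate(ref: Vec3, offsets: Sequence[Vec3], logits: Sequence[Sequence[float]],
              cameras: Sequence[Camera], feature_maps: Sequence[Sequence[FeatureMap]],
              strides: Sequence[int]) -> Optional[List[float]]:
    """Sample offset points around `ref`, project them into every view and scale,
    and return the softmax-weighted sum of the sampled features."""
    samples = []  # (logit, feature vector)
    for m, off in enumerate(offsets):
        point = (ref[0] + off[0], ref[1] + off[1], ref[2] + off[2])
        for cam, levels in zip(cameras, feature_maps):
            uv = cam.project(point)
            if uv is None:
                continue  # this view does not see the sampling point
            for lvl, (fmap, stride) in enumerate(zip(levels, strides)):
                row = min(int(uv[1] // stride), len(fmap) - 1)
                col = min(int(uv[0] // stride), len(fmap[0]) - 1)
                samples.append((logits[m][lvl], fmap[row][col]))  # nearest neighbour
    if not samples:
        return None
    top = max(logit for logit, _ in samples)
    weights = [math.exp(logit - top) for logit, _ in samples]  # stable softmax
    total = sum(weights)
    dim = len(samples[0][1])
    return [sum(w * f[k] for w, (_, f) in zip(weights, samples)) / total
            for k in range(dim)]


# Tiny hypothetical setup: one 64x32 front camera 1.5 m up, two feature levels.
cam = Camera(1000.0, 1000.0, 32.0, 16.0,
             ((0.0, -1.0, 0.0), (0.0, 0.0, -1.0), (1.0, 0.0, 0.0)), (0.0, 1.5, 0.0), 64, 32)
fine = [[[1.0, 0.0] for _ in range(8)] for _ in range(4)]    # stride 8
coarse = [[[0.0, 1.0] for _ in range(4)] for _ in range(2)]  # stride 16
print(aggregate((100.0, 0.0, 1.5), [(0.0, 0.0, 0.0)], [[math.log(3.0), 0.0]],
                [cam], [[fine, coarse]], (8, 16)))
```

With a logit of ln 3 on the fine level and 0 on the coarse one, the fine features receive three times the weight. Only a handful of projected points per query are touched, which is the source of the cost saving over global attention.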

Range-modulated 3D Denoising:

3D queries at different distances differ in regression difficulty, unlike in existing 2D denoising methods (such as DN-DETR), where 2D queries are usually treated equally. The difference comes from query matching density and error propagation. On the one hand, distant objects receive fewer matched queries than nearby objects. On the other hand, when 2D priors are used to build 3D adaptive queries, small errors in the 2D boxes are amplified, and the amplification grows with object distance. Therefore, queries close to a GT box can be treated as positive queries, while those with obvious deviations should be treated as negative queries. The paper proposes a 3D denoising method that refines the positive samples and directly discards the negative ones.

Concretely, the authors build GT-based noisy queries by adding groups of positive and negative samples simultaneously. For both types, random noise is applied according to the object's location and size to facilitate denoising learning for long-range perception. Positive samples are random points inside the 3D box, while negative samples apply a larger offset to the GT, and the offset range varies with the object's distance. This simulates noisy positive candidates and false positives during training.
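The noise generation described above can be sketched like this. It is a hypothetical illustration, not the paper's formula: positives are uniform points inside an axis-aligned GT box (yaw ignored), and negatives are pushed beyond the box's farthest corner by a radius whose upper bound grows linearly with BEV distance. The linear rule, `beta`, and the 150 m normalizer are all assumptions.

```python
import math
import random
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def positive_queries(center: Vec3, size: Vec3, n: int, rng: random.Random) -> List[Vec3]:
    """Random points inside the (axis-aligned, yaw ignored) GT box: noisy positives."""
    return [tuple(c + rng.uniform(-0.5, 0.5) * s for c, s in zip(center, size))
            for _ in range(n)]


def negative_radius_range(center: Vec3, size: Vec3, max_range: float = 150.0,
                          beta: float = 1.0) -> Tuple[float, float]:
    """Offset radii for negatives: always outside the box, wider for distant objects.

    The linear growth with BEV distance (hypothetical `beta`) stands in for the
    paper's range modulation, whose exact form is not reproduced here.
    """
    half_diag = 0.5 * math.sqrt(sum(s * s for s in size))
    r_min = 1.05 * half_diag  # beyond the box's farthest corner, so never inside it
    dist = math.hypot(center[0], center[1])
    r_max = r_min * (1.0 + beta * dist / max_range)
    return r_min, r_max


def negative_queries(center: Vec3, size: Vec3, n: int, rng: random.Random,
                     **kwargs) -> List[Vec3]:
    """Points offset from the GT centre in a random direction by a range-modulated radius."""
    r_min, r_max = negative_radius_range(center, size, **kwargs)
    out = []
    for _ in range(n):
        while True:  # random direction on the unit sphere
            d = [rng.gauss(0.0, 1.0) for _ in range(3)]
            norm = math.sqrt(sum(x * x for x in d))
            if norm > 1e-12:
                break
        r = rng.uniform(r_min, r_max)
        out.append(tuple(c + r * x / norm for c, x in zip(center, d)))
    return out


rng = random.Random(0)
car_size = (4.5, 1.9, 1.6)  # hypothetical car dimensions in metres
for center in ((10.0, 2.0, 0.8), (140.0, -5.0, 0.8)):
    print(center, negative_radius_range(center, car_size))
    print("  pos:", positive_queries(center, car_size, 2, rng))
    print("  neg:", negative_queries(center, car_size, 2, rng))
```

The distant car gets a wider band of negative offsets than the nearby one, mimicking the larger errors that lifted 2D priors produce at long range.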

Experimental results

Far3D achieves the highest performance on Argoverse 2 at a 150 m perception range. After the model is scaled up, it matches the performance of several LiDAR-based methods, demonstrating the potential of vision-only approaches.

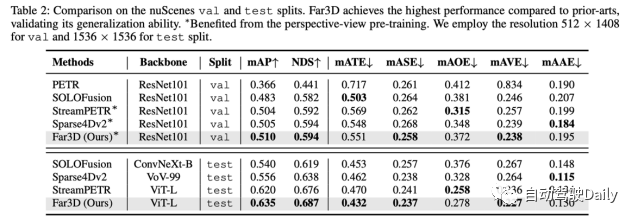

To verify generalization, the authors also ran experiments on the nuScenes dataset, where Far3D achieves state-of-the-art performance on both the validation and test sets.

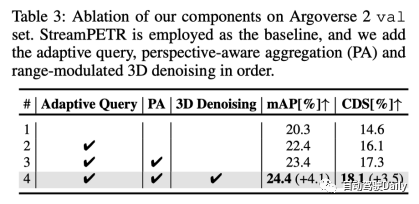

The ablation experiments show that 3D adaptive queries, perspective-aware aggregation, and range-modulated 3D denoising each contribute a performance gain.

Thoughts on the paper

Q: What is the novelty of this paper?

A: The main novelty is that it tackles perception in long-range scenes. Extending existing methods to long distances raises many problems, including computational cost and convergence difficulties, and the authors propose an efficient framework for this task. Although each module looks familiar on its own, every one of them serves the detection of distant targets and has a clear purpose.

Q: How does it differ from MV2D and BEVFormer v2?

A: MV2D mainly relies on 2D anchors to gather the corresponding features and bind them to 3D queries, but it has no explicit depth estimation, so the uncertainty for distant objects is relatively large and convergence becomes difficult. BEVFormer v2 mainly addresses the domain gap between 2D backbones and 3D task scenarios: backbones pre-trained on 2D recognition tasks are generally not well suited to 3D scenes. It does not explore the problems specific to long-range detection.

Q: Can the temporal modeling be improved, for example by combining query propagation with feature propagation?

A: It is feasible in theory, but the performance-efficiency trade-off must be considered in practical applications.

Q: Are there any areas that need improvement?

A: Both long-tail issues and long-range evaluation metrics deserve improvement. On a 26-class benchmark like Argoverse 2, models perform poorly on long-tail classes, which ultimately lowers average precision; this has not yet been explored. On the other hand, evaluating distant and nearby objects with a single unified metric may not be appropriate, which highlights the need for practical, dynamic evaluation criteria that adapt to different real-world scenarios.
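One simple way to see what a single pooled metric can hide is to report results per distance band. The helper below is hypothetical and is not the Argoverse 2 evaluation protocol: it assumes predictions have already been matched to ground truth upstream (the `matched` flags), and the 0/50/100/150 m band edges are arbitrary choices.

```python
import math
from typing import Dict, Sequence, Tuple


def recall_by_range(gt_centers: Sequence[Tuple[float, float]], matched: Sequence[bool],
                    edges: Sequence[float] = (0.0, 50.0, 100.0, 150.0)) -> Dict[str, float]:
    """Recall per BEV distance band; objects beyond the last edge are ignored."""
    counts: Dict[str, int] = {}
    hits: Dict[str, int] = {}
    for (x, y), hit in zip(gt_centers, matched):
        dist = math.hypot(x, y)
        for lo, hi in zip(edges[:-1], edges[1:]):
            if lo <= dist < hi:
                key = f"{lo:g}-{hi:g} m"
                counts[key] = counts.get(key, 0) + 1
                hits[key] = hits.get(key, 0) + int(hit)
                break
    return {key: hits[key] / counts[key] for key in counts}


print(recall_by_range([(10.0, 0.0), (20.0, 0.0), (60.0, 0.0), (120.0, 0.0)],
                      [True, False, True, False]))
# {'0-50 m': 0.5, '50-100 m': 1.0, '100-150 m': 0.0}
```

Pooled, these four objects give 50% recall; broken down by band, the miss in the 100-150 m range becomes visible.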

Original link: https://mp.weixin.qq.com/s/xxaaYQsjuWzMI7PnSmuaWg
