
Mamba's popular SSM attracts the attention of Apple and Cornell: a diffusion model that abandons attention

The latest research from Cornell University and Apple concludes that the attention mechanism can be eliminated in order to generate high-resolution images with less computing power.

As we all know, the attention mechanism is the core component of the Transformer architecture and is crucial for high-quality text and image generation. But its flaw is also obvious, that is, the computational complexity will increase quadratically as the sequence length increases. This is a vexing problem in long text and high-resolution image processing.

To solve this problem, the new research replaces the attention mechanism in the traditional architecture with a more scalable state space model (SSM) backbone, yielding a new architecture called the Diffusion State Space Model (DIFFUSSM). This architecture can match or exceed the image generation quality of existing diffusion models with attention modules while using less computing power, and it generates high-resolution images well.

Thanks to the release of "Mamba" last week, state space models (SSMs) are receiving more and more attention. The core of Mamba is a new architecture, the "selective state space model", which makes Mamba comparable to or even better than Transformers in language modeling. At the time, paper author Albert Gu said that Mamba's success gave him confidence in the future of SSMs. Now, this paper from Cornell University and Apple appears to add a new example of the application prospects of SSMs.

Microsoft principal research engineer Shital Shah warned that the attention mechanism may be pulled off the throne it has been sitting on for a long time.

Paper Overview

The rapid progress in image generation has benefited from denoising diffusion probabilistic models (DDPMs). Such models treat generation as the iterative denoising of latent variables and, given enough denoising steps, can produce high-fidelity samples. The ability of DDPMs to capture complex visual distributions makes them potentially advantageous for high-resolution, photorealistic synthesis.

Significant computational challenges remain in scaling DDPMs to higher resolutions. The main bottleneck is the reliance on self-attention for high-fidelity generation. In U-Net architectures, this bottleneck comes from combining ResNets with attention layers. DDPMs surpass generative adversarial networks (GANs) but require multi-head attention layers. In Transformer architectures, attention is the central component and is therefore critical to achieving state-of-the-art image synthesis results. In both architectures, the complexity of attention scales quadratically with sequence length, so it becomes infeasible when processing high-resolution images.

The computational cost has prompted previous researchers to use representation compression methods. High-resolution architectures often employ patchification or multi-scale resolution. Patchification creates coarse-grained representations and reduces computational cost, but at the expense of critical high-frequency spatial information and structural integrity. Multi-scale resolution reduces the computation of attention layers, but it also loses spatial detail through downsampling and introduces artifacts when upsampling is applied.

DIFFUSSM is a diffusion state space model that does not use an attention mechanism and is designed to solve the problems encountered when applying attention in high-resolution image synthesis. DIFFUSSM uses a gated state space model (SSM) in the diffusion process. Previous studies have shown that SSM-based sequence models are effective and efficient general neural sequence models. By adopting this architecture, the SSM core can handle finer-grained image representations, eliminating global patchification or multi-scale layers. To further improve efficiency, DIFFUSSM adopts an hourglass architecture in the dense components of the network.

The authors verified the performance of DIFFUSSM at different resolutions. Experiments on ImageNet demonstrate that DIFFUSSM achieves consistent improvements in FID, sFID, and Inception Score at various resolutions with fewer total Gflops.

Paper link: https://arxiv.org/pdf/2311.18257.pdf

DIFFUSSM Framework

The authors' goal was to design a diffusion architecture capable of learning long-range interactions at high resolution without resorting to "length reduction" such as patchification. Similar to DiT, the approach flattens the image and treats generation as a sequence modeling problem. Unlike Transformers, however, it uses computation that is sub-quadratic in the sequence length.
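To make the trade-off concrete, the following minimal sketch counts how many tokens a flattened image produces and how many token pairs full self-attention would have to score. The 64 x 64 grid and the patch sizes are hypothetical values chosen for illustration, and the function names are not from the paper.

```python
def sequence_length(height: int, width: int, patch: int = 1) -> int:
    """Number of tokens after flattening a grid split into patch x patch tiles."""
    if height % patch or width % patch:
        raise ValueError("patch size must divide both grid dimensions")
    return (height // patch) * (width // patch)


def attention_pairs(length: int) -> int:
    """Token pairs scored by full self-attention; grows as L^2."""
    return length * length


# Hypothetical 64 x 64 latent grid, with and without patch-based length reduction.
for patch in (1, 2, 4):
    L = sequence_length(64, 64, patch)
    print(f"patch={patch}: L={L}, attention pairs={attention_pairs(L)}")
```

Halving the patch size quadruples the sequence length and multiplies the attention pair count by 16, which is why attention-based models tend to rely on length reduction at high resolution.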

The core component of DIFFUSSM is a gated bidirectional SSM optimized for processing long sequences. To improve efficiency, the authors introduce an hourglass architecture in the MLP layers. This design alternately expands and contracts the sequence length around the bidirectional SSM, while selectively reducing the sequence length in the MLPs. The complete model architecture is shown in Figure 2.

Specifically, each hourglass layer receives a shortened, flattened input sequence I ∈ R^(J×D), where M = L/J is the downscale/upscale ratio. At the same time, the entire block, including the bidirectional SSM, is computed at the original length L, taking full advantage of the global context. σ denotes the activation function. The computation is defined for each l ∈ {1, ..., L}, with j = ⌊l/M⌋, m = l mod M, and D_m = 2D/M; see the original paper for the layer equations.

The authors integrate the gated SSM blocks using skip connections in each layer, and inject a combination of the class label y ∈ R^(L×1) and the time step t ∈ R^(L×1) at each position, as shown in Figure 2.
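As a rough illustration of what "bidirectional SSM" means, the sketch below runs a toy scalar linear state space recurrence over a sequence in both directions. It is not the paper's layer: the scalar parameters a, b, c, the sequential scan, and the choice to return both directions side by side are all assumptions made for illustration. The FLOPs discussion in the article mentions an FFT constant, which suggests the actual model evaluates its SSM differently.

```python
from typing import List, Tuple


def ssm_scan(x: List[float], a: float, b: float, c: float) -> List[float]:
    """Toy scalar SSM: h_t = a * h_{t-1} + b * x_t, y_t = c * h_t (one O(L) pass)."""
    h = 0.0
    out = []
    for x_t in x:
        h = a * h + b * x_t
        out.append(c * h)
    return out


def bidirectional_ssm(
    x: List[float], a: float = 0.5, b: float = 1.0, c: float = 1.0
) -> List[Tuple[float, float]]:
    """Scan left-to-right and right-to-left; pair the two outputs per position."""
    forward = ssm_scan(x, a, b, c)
    backward = ssm_scan(x[::-1], a, b, c)[::-1]
    return list(zip(forward, backward))


# An impulse at the first position decays forward but is invisible to the
# backward scan at every later position.
print(bidirectional_ssm([1.0, 0.0, 0.0, 0.0]))
```

Each direction costs time linear in the sequence length, so every position can draw on context from both sides without the pairwise cost of attention.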

Parameters: The number of parameters in a DIFFUSSM block is determined mainly by the linear transformations W, which contain 9D^2 + 2MD^2 parameters. When M = 2, this yields 13D^2 parameters. The DiT transformer block has 12D^2 parameters in its core transformer layer; however, the DiT architecture has many more parameters in other layer components (adaptive layer normalization). The researchers matched parameter counts in their experiments by using additional DIFFUSSM layers.
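The parameter arithmetic can be checked with a short sketch. It uses only the two expressions quoted in the text (9D^2 + 2MD^2 for a DIFFUSSM block and 12D^2 for the core DiT layer); the hidden size D = 1024 is a hypothetical value, and the function names are illustrative.

```python
def diffussm_block_params(d: int, m: int) -> int:
    """Linear-layer parameters of one DIFFUSSM block: 9*D^2 + 2*M*D^2."""
    return 9 * d * d + 2 * m * d * d


def dit_core_params(d: int) -> int:
    """Parameters in the core transformer layer of one DiT block: 12*D^2."""
    return 12 * d * d


d = 1024  # hypothetical hidden size
for m in (2, 4):
    ratio = diffussm_block_params(d, m) / (d * d)
    print(f"M={m}: DIFFUSSM block = {ratio:.0f} * D^2")
print(f"DiT core layer = {dit_core_params(d) / (d * d):.0f} * D^2")
```

With M = 2 the block comes to 13D^2, slightly more than the 12D^2 of the DiT core layer, before counting DiT's additional adaptive layer normalization parameters.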

FLOPs: Figure 3 compares Gflops between DiT and DIFFUSSM. The paper expresses the total FLOPs of a DIFFUSSM layer in terms of L, D, M, and α, where α represents the constant of the FFT implementation. This yields approximately 7.5LD^2 FLOPs when M = 2 and the linear layers dominate the computation. In comparison, if full-length self-attention were used instead of the SSM in this hourglass architecture, there would be an additional 2DL^2 FLOPs.

Consider two experimental scenarios: 1) D ≈ L = 1024, where attention would add 2LD^2 FLOPs, and 2) 4D ≈ L = 4096, where it would add 8LD^2 FLOPs and significantly increase the cost. Because the core cost of the bidirectional SSM is small relative to the cost of attention, the hourglass architecture does not work for attention-based models. As discussed earlier, DiT avoids these problems by using patchification, at the expense of a compressed representation.
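The two scenarios can be reproduced with a few lines of arithmetic. The sketch uses only the figures quoted in the text: roughly 7.5LD^2 for the linear layers at M = 2, and an extra 2DL^2 if full-length self-attention replaced the SSM. The SSM's own FFT cost is left out, and D = 1024 is taken from the scenarios above.

```python
def linear_layer_flops(length: int, d: int) -> float:
    """Approximate per-layer cost at M = 2 when linear layers dominate: 7.5*L*D^2."""
    return 7.5 * length * d * d


def extra_attention_flops(length: int, d: int) -> int:
    """Additional cost of full-length self-attention: 2*D*L^2."""
    return 2 * d * length * length


d = 1024
for length in (1024, 4096):
    extra = extra_attention_flops(length, d)
    unit = length * d * d  # one unit of L*D^2
    share = extra / linear_layer_flops(length, d)
    print(f"L={length}: extra = {extra / unit:.0f} * L*D^2 "
          f"({share:.0%} of the linear-layer cost)")
```

At L = 1024 the attention term adds 2LD^2, about 27% on top of the linear layers; at L = 4096 it adds 8LD^2, which exceeds the linear-layer cost itself.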

Experimental results

Class-conditional image generation

The table below compares DIFFUSSM with current state-of-the-art class-conditional generation models.

Without classifier-free guidance, DIFFUSSM outperforms other diffusion models in both FID and sFID, improving the best previous score of a latent diffusion model without classifier-free guidance from 9.62 to 9.07, while reducing the number of training steps to about 1/3 of the original. In terms of total training Gflops, the uncompressed model reduces total Gflops by 20% compared to DiT. When classifier-free guidance is introduced, the model achieves the best sFID score among all DDPM-based models, outperforming other state-of-the-art strategies, which indicates that images generated by DIFFUSSM are more robust to spatial distortion.

With classifier-free guidance, DIFFUSSM's FID score surpasses all models other than DiT, to which it maintains a fairly small gap (0.01). Note that DIFFUSSM trained with a 30% reduction in total Gflops already outperforms DiT when classifier-free guidance is not applied. U-ViT is another Transformer-based architecture, but it uses a UNet-style design with long skip connections between blocks. U-ViT uses fewer FLOPs and performs better at 256×256 resolution, but this is not the case at 512×512 resolution. The authors compare mainly with DiT and, for the sake of fairness, do not adopt these long skip connections. They believe that adopting the idea from U-ViT may benefit both DiT and DIFFUSSM.

The authors further conduct comparisons on higher-resolution benchmarks using classifier-free guidance. The results of DIFFUSSM are relatively strong and close to state-of-the-art high-resolution models: it is inferior only to DiT on sFID and achieves comparable FID scores. DIFFUSSM was trained on 302 million images, which is 40% of the images seen by DiT, and used 25% fewer Gflops than DiT.

Unconditional image generation

Table 2 shows the authors' comparison of unconditional image generation capabilities. DIFFUSSM achieved FID scores comparable to LDM (differences of -0.08 and 0.07) under a comparable training budget. This result highlights the applicability of DIFFUSSM across different benchmarks and tasks. Like LDM, this method does not outperform ADM on the LSUN-Bedrooms task, since it uses only 25% of ADM's total training budget. On this task, the best GAN model outperforms diffusion models as a model class.

Please refer to the original paper for more details.

The above is the detailed content of Mamba's popular SSM attracts the attention of Apple and Cornell: abandon the distraction model. For more information, please follow other related articles on the PHP Chinese website!

An AI Space Company Is BornMay 12, 2025 am 11:07 AM

An AI Space Company Is BornMay 12, 2025 am 11:07 AMThis article showcases how AI is revolutionizing the space industry, using Tomorrow.io as a prime example. Unlike established space companies like SpaceX, which weren't built with AI at their core, Tomorrow.io is an AI-native company. Let's explore

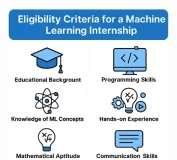

10 Machine Learning Internships in India (2025)May 12, 2025 am 10:47 AM

10 Machine Learning Internships in India (2025)May 12, 2025 am 10:47 AMLand Your Dream Machine Learning Internship in India (2025)! For students and early-career professionals, a machine learning internship is the perfect launchpad for a rewarding career. Indian companies across diverse sectors – from cutting-edge GenA

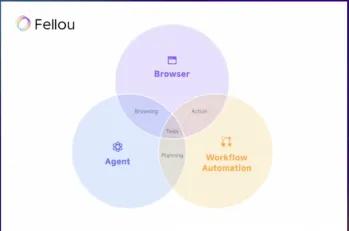

Try Fellou AI and Say Goodbye to Google and ChatGPTMay 12, 2025 am 10:26 AM

Try Fellou AI and Say Goodbye to Google and ChatGPTMay 12, 2025 am 10:26 AMThe landscape of online browsing has undergone a significant transformation in the past year. This shift began with enhanced, personalized search results from platforms like Perplexity and Copilot, and accelerated with ChatGPT's integration of web s

Personal Hacking Will Be A Pretty Fierce BearMay 11, 2025 am 11:09 AM

Personal Hacking Will Be A Pretty Fierce BearMay 11, 2025 am 11:09 AMCyberattacks are evolving. Gone are the days of generic phishing emails. The future of cybercrime is hyper-personalized, leveraging readily available online data and AI to craft highly targeted attacks. Imagine a scammer who knows your job, your f

Pope Leo XIV Reveals How AI Influenced His Name ChoiceMay 11, 2025 am 11:07 AM

Pope Leo XIV Reveals How AI Influenced His Name ChoiceMay 11, 2025 am 11:07 AMIn his inaugural address to the College of Cardinals, Chicago-born Robert Francis Prevost, the newly elected Pope Leo XIV, discussed the influence of his namesake, Pope Leo XIII, whose papacy (1878-1903) coincided with the dawn of the automobile and

FastAPI-MCP Tutorial for Beginners and Experts - Analytics VidhyaMay 11, 2025 am 10:56 AM

FastAPI-MCP Tutorial for Beginners and Experts - Analytics VidhyaMay 11, 2025 am 10:56 AMThis tutorial demonstrates how to integrate your Large Language Model (LLM) with external tools using the Model Context Protocol (MCP) and FastAPI. We'll build a simple web application using FastAPI and convert it into an MCP server, enabling your L

Dia-1.6B TTS : Best Text-to-Dialogue Generation Model - Analytics VidhyaMay 11, 2025 am 10:27 AM

Dia-1.6B TTS : Best Text-to-Dialogue Generation Model - Analytics VidhyaMay 11, 2025 am 10:27 AMExplore Dia-1.6B: A groundbreaking text-to-speech model developed by two undergraduates with zero funding! This 1.6 billion parameter model generates remarkably realistic speech, including nonverbal cues like laughter and sneezes. This article guide

3 Ways AI Can Make Mentorship More Meaningful Than EverMay 10, 2025 am 11:17 AM

3 Ways AI Can Make Mentorship More Meaningful Than EverMay 10, 2025 am 11:17 AMI wholeheartedly agree. My success is inextricably linked to the guidance of my mentors. Their insights, particularly regarding business management, formed the bedrock of my beliefs and practices. This experience underscores my commitment to mentor

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

SublimeText3 English version

Recommended: Win version, supports code prompts!

EditPlus Chinese cracked version

Small size, syntax highlighting, does not support code prompt function

ZendStudio 13.5.1 Mac

Powerful PHP integrated development environment

Safe Exam Browser

Safe Exam Browser is a secure browser environment for taking online exams securely. This software turns any computer into a secure workstation. It controls access to any utility and prevents students from using unauthorized resources.

VSCode Windows 64-bit Download

A free and powerful IDE editor launched by Microsoft