Technology peripherals

Technology peripherals AI

AI DetZero: Waymo ranks first on the 3D detection list, comparable to manual annotation!

DetZero: Waymo ranks first on the 3D detection list, comparable to manual annotation!

This article proposes a set of offline 3D object detection algorithm framework DetZero. Through comprehensive research and evaluation on Waymo’s public data set, DetZero can generate continuous and complete objects. Trajectory sequence, and make full use of long-term point cloud features to significantly improve the quality of perception results. At the same time, it ranked first in the WOD 3D object detection rankings with a performance of 85.15 mAPH (L2). In addition, DetZero can provide high-quality automatic labeling for online model training, and its results have reached or even exceeded the level of manual labeling.

This is the paper link: https://arxiv.org/abs/2306.06023

The content that needs to be rewritten is: Code link: https://github.com/PJLab-ADG/ DetZero

Please visit the homepage link: https://superkoma.github.io/detzero-page

1 Introduction

In order to improve the data annotation efficiency, we studied a new approach. This method is based on deep learning and unsupervised learning and can automatically generate annotated data. By using large amounts of unlabeled data, we can train an autonomous driving perception model to recognize and detect objects on the road. This method can not only reduce the cost of labeling data, but also improve the efficiency of post-processing. We used Waymo's offline 3D object detection method 3DAL[] as a baseline for comparison in our experiments, and the results show that our proposed method has significant improvements in accuracy and efficiency. We believe that this method will play an important role in future autonomous driving technology

- Object detection (Detection): input a small amount of continuous point cloud frame data and output each frame Bounding boxes and category information of 3D objects in ;

- Motion ClassificationMotion Classification): Based on the object trajectory characteristics, determine the object’s motion state (stationary or moving);

- Object-centered optimization (Object-centric Refining): Based on the motion state predicted by the previous module, the temporal point cloud features of stationary and moving objects are extracted respectively to predict accurate bounding boxes. Finally, the optimized 3D bounding box is transferred back to the coordinate system of each frame where the object is located through the pose matrix.

- However, many mainstream online 3D object detection methods have achieved better results than existing offline 3D detection methods by utilizing the temporal context features of point clouds. However, we realize that these methods fail to effectively utilize the characteristics of long sequence point clouds

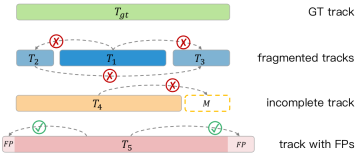

- The quality of the object sequence will have a great impact on the downstream optimization model

The optimization model based on motion state classification does not fully utilize the timing of the object feature. For example, the size of a rigid object remains consistent over time, and more accurate size estimation can be achieved by capturing data from different angles; the motion trajectory of the object should follow certain kinematic constraints, which is reflected in the smoothness of the trajectory. As shown in Figure (a) below, for dynamic objects, the optimization mechanism based on sliding windows does not consider the consistency of the object geometry, and only updates the bounding box through the time-series point cloud information of several adjacent frames, resulting in the predicted geometric size. Deviation occurs. In the example of (b), by aggregating all the point clouds of the object, dense time-series point cloud features can be obtained, and the accurate geometric size of the bounding box can be predicted for each frame.

- The optimization model based on the motion state predicts the size of the object (a), and the geometric optimization model predicts the size of the object after aggregating all point clouds from different perspectives (b)

- Multi-view geometric interaction: by stitching multiple views Object point clouds can complete the appearance and shape of objects. First, local coordinate transformation is performed to align the object point cloud with local frames at different positions, and the projection distance of each point to the six surfaces of the bounding box is calculated to strengthen the information representation of the bounding box, and then directly merge all point clouds of different frames As the key and value of multi-view geometric features, t samples are randomly selected from the object sequence as queries for single-view geometric features. The geometric query will be sent to the self-attention layer to see the differences between each other, and then sent to the cross-attention layer to supplement the features of the required perspective and predict the accurate geometric size.

- Interaction between local and global positions: Randomly select any box in the object sequence as the origin, transfer all other boxes and corresponding object point clouds to this coordinate system, and calculate each point to its respective boundary The distance between the center point of the frame and the eight corner points serves as the key and value of the global position feature. Each sample in the object sequence will be used as a position query and sent to the self-attention layer to determine the relative distance between the current position and other positions. Then it is input to the cross-attention layer to simulate the context relationship from local to global positions and predict this coordinate system. The offset between each initial center point and the true center point, as well as the heading angle difference.

- Confidence Optimization: The classification branch is used to classify whether the object is TP or FP. The IoU regression branch predicts the IoU size between an object and the ground truth box after being optimized by the geometric model and position model. The final confidence score is the geometric mean of these two branches.

2 Method

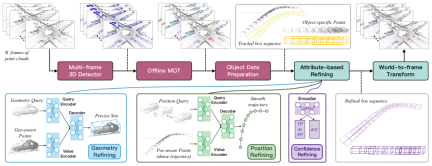

This paper proposes a new offline 3D object detection algorithm framework called DetZero. This framework has the following characteristics: (1) Use multi-frame 3D detectors and offline trackers as upstream modules to provide accurate and complete object tracking, focusing on high recall of object sequences (track-level recall); (2) The downstream module includes an optimization model based on the attention mechanism, which uses long-term point cloud features to learn and predict different attributes of objects, including refined geometric dimensions, smooth motion trajectory positions, and updated confidence scores

2.1 Generate a complete object sequence

We use the public CenterPoint[] as the basic detector. In order to provide more detection candidate frames, we proceed in three aspects Enhanced: (1) Use different frame point cloud combinations as input to maximize performance without reducing performance; (2) Use point cloud density information to fuse original point cloud features and voxel features into a two-stage module to optimize the first stage Boundary results; (3) Use inference stage data augmentation (TTA), multi-model result fusion (Ensemble) and other technologies to improve the model's adaptability to complex environments

A two-stage correlation strategy is introduced in the offline tracking module To reduce false matching, boxes are divided into high and low groups according to confidence, high groups are associated to update existing trajectories, and unupdated trajectories are associated with low groups. At the same time, the length of the object trajectory can last until the end of the sequence, avoiding ID switching problems. In addition, we will perform the tracking algorithm in reverse to generate another set of trajectories, associate them through position similarity, and finally use the WBF strategy to fuse the successfully matched trajectories to further improve the integrity of the beginning and end of the sequence. Finally, for the differentiated object sequence, the corresponding point cloud of each frame is extracted and saved; the unupdated redundant boxes and some shorter sequences will be directly merged into the final output without downstream optimization.

2.2 Object optimization module based on attribute prediction

Previous object-centered optimization models ignored the correlation between objects in different motion states, such as Consistency of geometric shapes and consistency of object motion states at adjacent moments. Based on these observations, we decompose the traditional bounding box regression task into three modules: predicting the geometry, location and confidence attributes of objects respectively

3 Experiment

3.1 Main performance

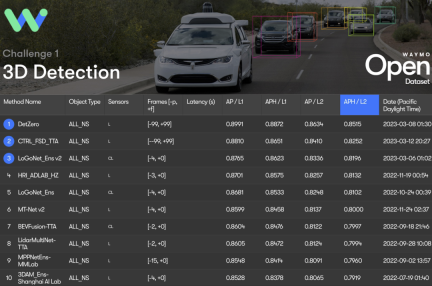

DetZero achieved 85.15 mAPH ( L2) achieved the best results, DetZero showed significant performance advantages whether compared with methods for processing long-term point clouds or compared with state-of-the-art multi-modal fusion 3D detectors

Waymo 3D detection ranking results, all results use TTA or ensemble technology, † refers to offline model, ‡ refers to point cloud image fusion model, * indicates anonymous submission results

Waymo 3D detection ranking results, all results use TTA or ensemble technology, † refers to offline model, ‡ refers to point cloud image fusion model, * indicates anonymous submission results

Similarly, thanks to the detection frame In terms of accuracy and completeness of object tracking sequences, we achieved first place in performance on the Waymo 3D tracking rankings with 75.05 MOTA (L2).

Waymo 3D tracking rankings, * indicates anonymous submission of results

Waymo 3D tracking rankings, * indicates anonymous submission of results

3.2 Ablation experiment

In order to better verify the role of each module we proposed, we conducted an ablation experiment on the Waymo verification set and adopted a more stringent IoU Threshold as a measurement standard

Conducted on Vehicle and Pedestrian on the Waymo verification set, the IoU threshold selected standard value (0.7 & 0.5) and strict value (0.8 & 0.6) respectively

Conducted on Vehicle and Pedestrian on the Waymo verification set, the IoU threshold selected standard value (0.7 & 0.5) and strict value (0.8 & 0.6) respectively

At the same time , for the same set of detection results, we selected the tracker and optimization model in 3DAL and DetZero for cross-combination verification. The results further proved that DetZero’s tracker and optimizer perform better, and the two are more effective when combined. The advantages.

Cross-validation experiments of different upstream and downstream module combinations, the subscripts 1 and 2 represent 3DAL and DetZero respectively, and the indicator is 3D APH

Cross-validation experiments of different upstream and downstream module combinations, the subscripts 1 and 2 represent 3DAL and DetZero respectively, and the indicator is 3D APH

Our offline tracker pays more attention to the object sequence Completeness, although the MOTA performance difference between the two is very small, the performance of Recall@track is one of the reasons for the huge difference in final optimization performance

Offline tracker (Trk2) and 3DAL tracker (Trk1) performance comparison of MOTA and Recall@track

Offline tracker (Trk2) and 3DAL tracker (Trk1) performance comparison of MOTA and Recall@track

Furthermore, comparison with other state-of-the-art trackers also proves the point

Recall@track is Sequence recall after processing by the tracking algorithm, 3D APH is the final performance after processing by the same optimization model

Recall@track is Sequence recall after processing by the tracking algorithm, 3D APH is the final performance after processing by the same optimization model

3.3 Generalization performance

In order to verify our optimization model Whether it is possible to fix the fit to a specific set of upstream results, we selected upstream detection tracking results with different performances as input. The results show that we have achieved significant performance improvements, further proving that as long as the upstream module can recall more and more complete object sequences, our optimizer can effectively utilize the characteristics of its time series point cloud for optimization

Generalization performance verification on the Waymo validation set, the indicator is 3D APH

Generalization performance verification on the Waymo validation set, the indicator is 3D APH

3.4 Comparison with human labeling ability

We will use the experimental settings of 3DAL to compare Report the AP performance of DetZero on 5 specified sequences, measuring human performance by comparing the consistency of single-frame-based re-annotation results with the original ground-truth annotation results. Compared with 3DAL and humans, DetZero has shown advantages in different performance indicators

Performance comparison of 3D AP and BEV AP under different IoU thresholds for the Vehicle category

Performance comparison of 3D AP and BEV AP under different IoU thresholds for the Vehicle category

For To verify whether high-quality automatic annotation results can replace manual annotation results for online model training, we conducted semi-supervised learning verification on the Waymo verification set. We randomly selected 10% of the training data as the training data for the teacher model (DetZero), and performed inference on the remaining 90% of the data to obtain automatic annotation results, which will be used as labels for the student model. We chose single-frame CenterPoint as the student model. On the vehicle category, the results of training using 90% automatic labels and 10% true labels are close to the results of training using 100% true labels, while on the pedestrian category, the results of the model trained with automatic labels are already better than the original ones. The result, which shows that automatic labeling can be used for online model training

Semi-supervised experimental results on the Waymo validation set

Semi-supervised experimental results on the Waymo validation set

3.5 Visualization results

The red box represents the input result of the upstream, and the blue box represents the output result of the optimization model

The red box represents the input result of the upstream, and the blue box represents the output result of the optimization model  The first line represents the input result of the upstream, the second line represents the output result of the optimization model, and the objects within the dotted line represent Positions with obvious differences before and after optimization

The first line represents the input result of the upstream, the second line represents the output result of the optimization model, and the objects within the dotted line represent Positions with obvious differences before and after optimization

Original link: https://mp.weixin.qq.com/s/HklBecJfMOUCC8gclo-t7Q

The above is the detailed content of DetZero: Waymo ranks first on the 3D detection list, comparable to manual annotation!. For more information, please follow other related articles on the PHP Chinese website!

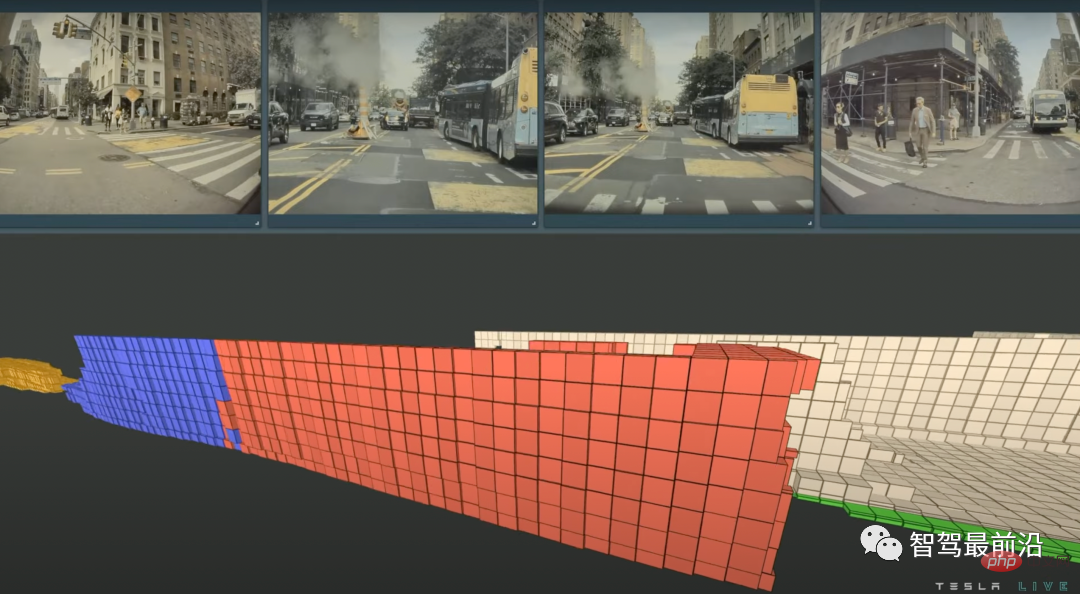

特斯拉自动驾驶算法和模型解读Apr 11, 2023 pm 12:04 PM

特斯拉自动驾驶算法和模型解读Apr 11, 2023 pm 12:04 PM特斯拉是一个典型的AI公司,过去一年训练了75000个神经网络,意味着每8分钟就要出一个新的模型,共有281个模型用到了特斯拉的车上。接下来我们分几个方面来解读特斯拉FSD的算法和模型进展。01 感知 Occupancy Network特斯拉今年在感知方面的一个重点技术是Occupancy Network (占据网络)。研究机器人技术的同学肯定对occupancy grid不会陌生,occupancy表示空间中每个3D体素(voxel)是否被占据,可以是0/1二元表示,也可以是[0, 1]之间的

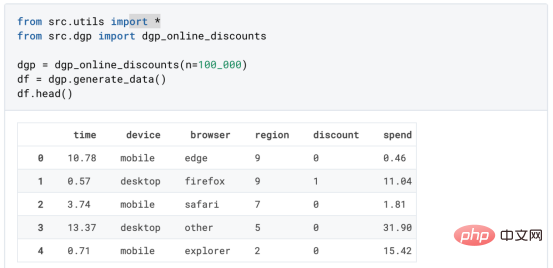

基于因果森林算法的决策定位应用Apr 08, 2023 am 11:21 AM

基于因果森林算法的决策定位应用Apr 08, 2023 am 11:21 AM译者 | 朱先忠审校 | 孙淑娟在我之前的博客中,我们已经了解了如何使用因果树来评估政策的异质处理效应。如果你还没有阅读过,我建议你在阅读本文前先读一遍,因为我们在本文中认为你已经了解了此文中的部分与本文相关的内容。为什么是异质处理效应(HTE:heterogenous treatment effects)呢?首先,对异质处理效应的估计允许我们根据它们的预期结果(疾病、公司收入、客户满意度等)选择提供处理(药物、广告、产品等)的用户(患者、用户、客户等)。换句话说,估计HTE有助于我

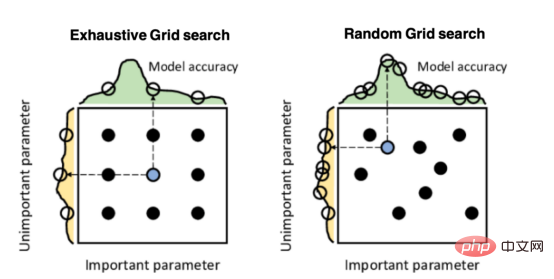

Mango:基于Python环境的贝叶斯优化新方法Apr 08, 2023 pm 12:44 PM

Mango:基于Python环境的贝叶斯优化新方法Apr 08, 2023 pm 12:44 PM译者 | 朱先忠审校 | 孙淑娟引言模型超参数(或模型设置)的优化可能是训练机器学习算法中最重要的一步,因为它可以找到最小化模型损失函数的最佳参数。这一步对于构建不易过拟合的泛化模型也是必不可少的。优化模型超参数的最著名技术是穷举网格搜索和随机网格搜索。在第一种方法中,搜索空间被定义为跨越每个模型超参数的域的网格。通过在网格的每个点上训练模型来获得最优超参数。尽管网格搜索非常容易实现,但它在计算上变得昂贵,尤其是当要优化的变量数量很大时。另一方面,随机网格搜索是一种更快的优化方法,可以提供更好的

因果推断主要技术思想与方法总结Apr 12, 2023 am 08:10 AM

因果推断主要技术思想与方法总结Apr 12, 2023 am 08:10 AM导读:因果推断是数据科学的一个重要分支,在互联网和工业界的产品迭代、算法和激励策略的评估中都扮演者重要的角色,结合数据、实验或者统计计量模型来计算新的改变带来的收益,是决策制定的基础。然而,因果推断并不是一件简单的事情。首先,在日常生活中,人们常常把相关和因果混为一谈。相关往往代表着两个变量具有同时增长或者降低的趋势,但是因果意味着我们想要知道对一个变量施加改变的时候会发生什么样的结果,或者说我们期望得到反事实的结果,如果过去做了不一样的动作,未来是否会发生改变?然而难点在于,反事实的数据往往是

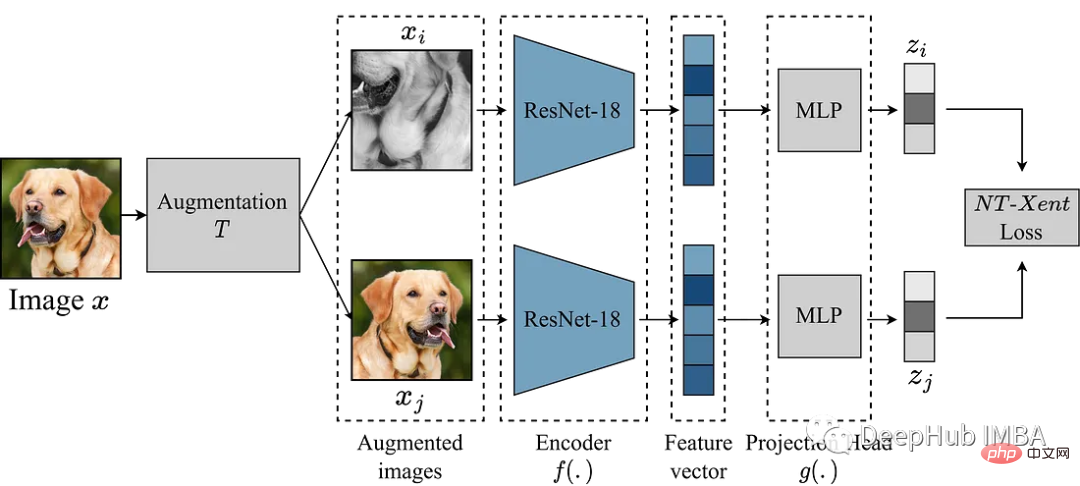

使用Pytorch实现对比学习SimCLR 进行自监督预训练Apr 10, 2023 pm 02:11 PM

使用Pytorch实现对比学习SimCLR 进行自监督预训练Apr 10, 2023 pm 02:11 PMSimCLR(Simple Framework for Contrastive Learning of Representations)是一种学习图像表示的自监督技术。 与传统的监督学习方法不同,SimCLR 不依赖标记数据来学习有用的表示。 它利用对比学习框架来学习一组有用的特征,这些特征可以从未标记的图像中捕获高级语义信息。SimCLR 已被证明在各种图像分类基准上优于最先进的无监督学习方法。 并且它学习到的表示可以很容易地转移到下游任务,例如对象检测、语义分割和小样本学习,只需在较小的标记

盒马供应链算法实战Apr 10, 2023 pm 09:11 PM

盒马供应链算法实战Apr 10, 2023 pm 09:11 PM一、盒马供应链介绍1、盒马商业模式盒马是一个技术创新的公司,更是一个消费驱动的公司,回归消费者价值:买的到、买的好、买的方便、买的放心、买的开心。盒马包含盒马鲜生、X 会员店、盒马超云、盒马邻里等多种业务模式,其中最核心的商业模式是线上线下一体化,最快 30 分钟到家的 O2O(即盒马鲜生)模式。2、盒马经营品类介绍盒马精选全球品质商品,追求极致新鲜;结合品类特点和消费者购物体验预期,为不同品类选择最为高效的经营模式。盒马生鲜的销售占比达 60%~70%,是最核心的品类,该品类的特点是用户预期时

人类反超 AI:DeepMind 用 AI 打破矩阵乘法计算速度 50 年记录一周后,数学家再次刷新Apr 11, 2023 pm 01:16 PM

人类反超 AI:DeepMind 用 AI 打破矩阵乘法计算速度 50 年记录一周后,数学家再次刷新Apr 11, 2023 pm 01:16 PM10 月 5 日,AlphaTensor 横空出世,DeepMind 宣布其解决了数学领域 50 年来一个悬而未决的数学算法问题,即矩阵乘法。AlphaTensor 成为首个用于为矩阵乘法等数学问题发现新颖、高效且可证明正确的算法的 AI 系统。论文《Discovering faster matrix multiplication algorithms with reinforcement learning》也登上了 Nature 封面。然而,AlphaTensor 的记录仅保持了一周,便被人类

研究表明强化学习模型容易受到成员推理攻击Apr 09, 2023 pm 08:01 PM

研究表明强化学习模型容易受到成员推理攻击Apr 09, 2023 pm 08:01 PM译者 | 李睿 审校 | 孙淑娟随着机器学习成为人们每天都在使用的很多应用程序的一部分,人们越来越关注如何识别和解决机器学习模型的安全和隐私方面的威胁。 然而,不同机器学习范式面临的安全威胁各不相同,机器学习安全的某些领域仍未得到充分研究。尤其是强化学习算法的安全性近年来并未受到太多关注。 加拿大的麦吉尔大学、机器学习实验室(MILA)和滑铁卢大学的研究人员开展了一项新研究,主要侧重于深度强化学习算法的隐私威胁。研究人员提出了一个框架,用于测试强化学习模型对成员推理攻击的脆弱性。 研究

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Safe Exam Browser

Safe Exam Browser is a secure browser environment for taking online exams securely. This software turns any computer into a secure workstation. It controls access to any utility and prevents students from using unauthorized resources.

Notepad++7.3.1

Easy-to-use and free code editor

MantisBT

Mantis is an easy-to-deploy web-based defect tracking tool designed to aid in product defect tracking. It requires PHP, MySQL and a web server. Check out our demo and hosting services.

Dreamweaver CS6

Visual web development tools

ZendStudio 13.5.1 Mac

Powerful PHP integrated development environment