SDXL Turbo and LCM usher in the era of real-time AI image generation: images appear as fast as you type


Stability AI launched a new-generation image synthesis model, Stable Diffusion XL Turbo (SDXL Turbo), on Tuesday, and the response has been enthusiastic. Many people say that text-to-image generation has never been easier than with this model.

Type your idea into the input box and SDXL Turbo responds quickly, generating the corresponding image without any other action on your part. How much or how little you type does not affect its speed.

You can also start from an existing image to create with more precision. Hold up a sheet of white paper, tell SDXL Turbo you want a white cat, and before you finish typing the little white cat is already in your hands.

The speed of SDXL Turbo has reached an almost "real-time" level, which makes people wonder whether image generation models can now be put to other uses.

Someone hooked the model up directly to a game and got style-transferred footage at 2 fps:

According to the official blog, on an A100, SDXL Turbo can generate a 512x512 image in 207 ms (prompt encoding, a single denoising step, and decoding, in fp16), of which a single UNet forward evaluation takes 67 ms.

On this basis, we can say that text-to-image generation has entered the "real-time" era.
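To put the quoted latency figures in perspective, the short sketch below converts them into throughput and into the share of time spent in the UNet. The only inputs are the two numbers from the official blog; the function names are illustrative, and the calculation assumes images are generated back to back with no batching or overlap.

```python
# Figures quoted from the official blog (A100, fp16, 512x512).
TOTAL_MS = 207.0  # prompt encoding + one denoising step + decoding
UNET_MS = 67.0    # a single UNet forward evaluation


def images_per_second(latency_ms: float) -> float:
    """Throughput if images are generated one after another with no overlap."""
    return 1000.0 / latency_ms


def unet_share(total_ms: float, unet_ms: float) -> float:
    """Fraction of the end-to-end latency spent in the UNet forward pass."""
    return unet_ms / total_ms


if __name__ == "__main__":
    print(f"Throughput: {images_per_second(TOTAL_MS):.2f} images/s")
    print(f"UNet share of latency: {unet_share(TOTAL_MS, UNET_MS):.1%}")
    print(f"Encoding + decoding overhead: {TOTAL_MS - UNET_MS:.0f} ms")
```

Under these assumptions the model produces just under 5 images per second, and roughly a third of each generation is spent in the UNet itself.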

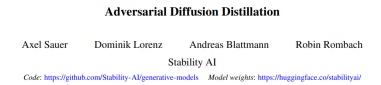

This "instant generation" efficiency looks somewhat similar to the LCM model from Tsinghua that became popular not long ago, but the underlying techniques are different. Stability AI detailed the inner workings of the model in a research paper released at the same time. The research centers on a technique called Adversarial Diffusion Distillation (ADD). One of the claimed advantages of SDXL Turbo is its similarity to generative adversarial networks (GANs), particularly in producing an image in a single step.

Paper details

Simply put, adversarial diffusion distillation is a general method that reduces the number of inference steps of a pre-trained diffusion model to 1 to 4 sampling steps while maintaining high sampling fidelity and potentially further improving the overall performance of the model.

To this end, the researchers introduced a combination of two training objectives: (i) an adversarial loss and (ii) a distillation loss corresponding to score distillation sampling (SDS). The adversarial loss forces the model to directly generate samples that lie on the real image manifold on each forward pass, avoiding the blurring and other artifacts common in other distillation methods. The distillation loss uses another pretrained (and frozen) diffusion model as the teacher, effectively leveraging its extensive knowledge and retaining the strong compositionality observed in large diffusion models. At inference time, the researchers do not use classifier-free guidance, which further reduces memory requirements. The model also retains the ability to improve results through iterative refinement, an advantage over previous single-step GAN-based approaches.
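The way the two objectives combine can be sketched as a weighted sum. This is a toy illustration on plain lists of numbers, not the paper's implementation: the hinge-style generator term, the squared-error distance, the weighting factor `lam`, and all function names are assumptions made here for clarity, since the article does not spell them out.

```python
from typing import Sequence


def adversarial_generator_loss(disc_scores: Sequence[float]) -> float:
    """Hinge-style generator term (assumed form): the loss drops as the
    discriminator scores the student's samples as more realistic."""
    return -sum(disc_scores) / len(disc_scores)


def distillation_loss(student: Sequence[float], teacher: Sequence[float]) -> float:
    """Mean squared error (assumed distance) between the student's output
    and the frozen teacher's denoised target."""
    squared = [(s - t) ** 2 for s, t in zip(student, teacher)]
    return sum(squared) / len(squared)


def add_objective(
    disc_scores: Sequence[float],
    student: Sequence[float],
    teacher: Sequence[float],
    lam: float,
) -> float:
    """Total student loss: adversarial term plus lam times distillation term."""
    return adversarial_generator_loss(disc_scores) + lam * distillation_loss(student, teacher)


if __name__ == "__main__":
    # Made-up values: two discriminator scores and two-"pixel" images.
    loss = add_objective(
        disc_scores=[0.5, -0.5],
        student=[1.0, 2.0],
        teacher=[1.0, 0.0],
        lam=0.5,  # hypothetical weighting, chosen only for this example
    )
    print(loss)
```

In a real training loop the discriminator scores and the teacher target would come from neural networks, and only the student would receive gradients from this loss; the teacher stays fixed, as the text describes.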

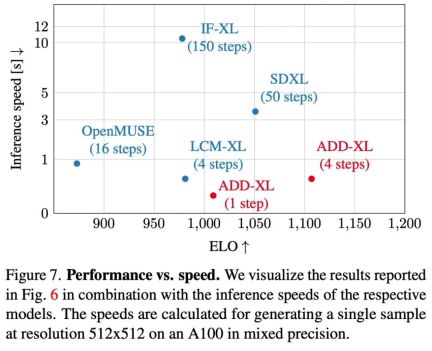

The training steps are shown in Figure 2:

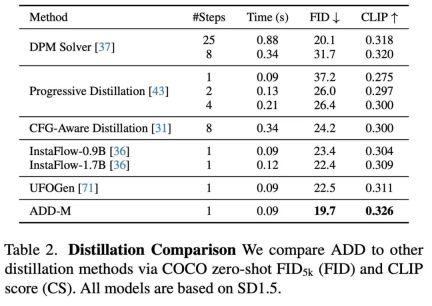

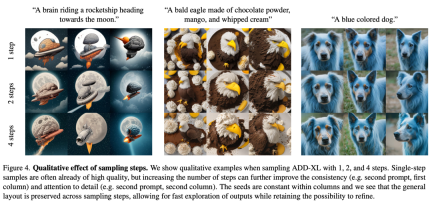

Next is a comparison with other SOTA models. Here the researchers did not use automated metrics, but chose the more reliable approach of user preference evaluation. The goal was to evaluate prompt adherence and overall image quality. To compare multiple model variants (StyleGAN-T, OpenMUSE, IF-XL, SDXL and LCM-XL), the experiment generates outputs from the same prompts.

In blind tests, SDXL Turbo beat the 4-step configuration of LCM-XL in a single step, and beat the 50-step configuration of SDXL in just 4 steps. These results show that SDXL Turbo outperforms state-of-the-art multi-step models while significantly reducing computational requirements, without sacrificing image quality.

The paper also presents a chart of ELO score against inference speed.

Table 2 compares different few-step sampling and distillation methods that use the same base model. The results show that ADD outperforms all other methods, including the standard DPM solver with 8 steps.

In addition to the quantitative results, the paper shows some qualitative results that demonstrate ADD-XL's ability to improve on an initial sample. Figure 3 compares ADD-XL (1 step) with the current best baselines among few-step schemes. Figure 4 illustrates the iterative sampling process of ADD-XL. Figure 8 provides a direct comparison of ADD-XL with its teacher model, SDXL-Base. As the user studies show, ADD-XL outperforms the teacher model in both quality and prompt alignment.

For more research details, please refer to the original paper.
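For readers unfamiliar with how pairwise user preferences turn into an ELO score, the following is a minimal sketch of the standard Elo update. The starting rating of 1000 and the K-factor of 32 are illustrative assumptions, not the settings used in the paper, and the function names are hypothetical.

```python
from typing import Tuple


def expected_score(rating_a: float, rating_b: float) -> float:
    """Probability that model A is preferred over model B under the Elo model."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


def update_ratings(
    rating_a: float, rating_b: float, a_preferred: bool, k: float = 32.0
) -> Tuple[float, float]:
    """Apply one pairwise comparison: the preferred model gains what the other loses."""
    actual = 1.0 if a_preferred else 0.0
    delta = k * (actual - expected_score(rating_a, rating_b))
    return rating_a + delta, rating_b - delta


if __name__ == "__main__":
    # Two models start level; a rater prefers model A's image for one prompt.
    a, b = update_ratings(1000.0, 1000.0, a_preferred=True)
    print(a, b)
```

Repeating this update over many rated image pairs yields one score per model, which is the kind of quantity that can then be plotted against inference speed.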
