The world's first 'AI System Security Development Guidelines' released, setting out security requirements in four areas

The world's first 'AI System Security Development Guidelines' were released, proposing four aspects of safety supervision requirements

On November 26, 2023, the cybersecurity authorities of 18 countries, including the United States, the United Kingdom, and Australia, jointly issued the world's first "AI System Security Development Guidelines". The guidelines aim to protect AI models from malicious tampering and urge AI companies to pay more attention to "security by design" when developing or using AI models.

The U.S. Cybersecurity and Infrastructure Security Agency (CISA), one of the main participants, stated that the world is at an inflection point in the rapid development of AI technology, which may well be the most consequential technology of our time. Ensuring cybersecurity, however, is key to building AI systems that are safe, reliable, and trustworthy. To this end, CISA said, cybersecurity authorities from multiple countries worked with technical experts from companies such as Google, Amazon, OpenAI, and Microsoft to jointly write and publish these guidelines, with the aim of improving the security of AI applications.

These are reportedly the world's first guidelines on the secure development of AI systems issued by official bodies. They explicitly require AI companies to prioritize security outcomes for their customers, actively embrace transparency and accountability in AI applications, and build organizational structures in which security by design is the top priority. The overall goal is to improve the cybersecurity of AI and help ensure that AI technology is designed, developed, and deployed securely.

In addition, drawing on the U.S. government's long experience in cybersecurity risk management, the guidelines require all AI developers to test new AI tools thoroughly before public release, ensuring that security measures are in place to minimize societal harms (such as bias and discrimination) and privacy concerns. The guidelines also require AI developers to commit to making it easier for third parties to find and report vulnerabilities in their AI systems through bug bounty programs, so that vulnerabilities can be discovered and fixed quickly.

Specifically, the guidelines set out four major requirements for the secure development of AI systems:

1. Give priority to "security by design" and "security by default"

The guidelines repeatedly emphasize the "security by design" and "security by default" principles, which means AI developers should proactively take measures to protect AI products from attack. To comply with the guidelines, AI developers should put security first in their decision-making rather than focusing only on product functionality and performance. The guidelines also recommend that products ship with the most secure option as the default and clearly communicate to users the risks of overriding that default configuration. Furthermore, the guidelines hold developers of AI systems responsible for downstream security outcomes, rather than relying on customers to control security.

Excerpt from the guidelines: "Users (whether end users or providers integrating external AI components) often lack sufficient visibility and expertise to fully understand, assess, or address the risks associated with the AI systems they are using. Therefore, in line with the 'security by design' principle, providers of AI components should take responsibility for the security outcomes of users further down the supply chain."
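The guidelines describe secure defaults as a principle, not as code. As an illustration only, the following Python sketch shows one way a product might ship its safest settings as defaults and warn users whenever they weaken one. The class `AISystemConfig`, its three settings, and the `override` helper are hypothetical names chosen for this example.

```python
import warnings
from dataclasses import dataclass, replace

# Hypothetical risk notes shown to a user who weakens a default setting.
RISK_NOTES = {
    "require_auth": "Disabling authentication exposes the model endpoint to anyone on the network.",
    "allow_file_edits": "Letting the model edit files widens the impact of a prompt injection.",
    "auto_update": "Turning off automatic updates delays security fixes.",
}


@dataclass(frozen=True)
class AISystemConfig:
    """Hypothetical deployment settings; every default is the most secure option."""
    require_auth: bool = True
    allow_file_edits: bool = False
    auto_update: bool = True


SAFE_DEFAULTS = AISystemConfig()


def override(config: AISystemConfig, **changes) -> AISystemConfig:
    """Return a new config with `changes` applied, warning about each weakened default."""
    for name, value in changes.items():
        if name not in RISK_NOTES:
            raise KeyError(f"unknown setting: {name}")
        if value != getattr(SAFE_DEFAULTS, name):
            # Communicate the risk of leaving the secure default, as the guidelines recommend.
            warnings.warn(f"{name}={value!r}: {RISK_NOTES[name]}", UserWarning, stacklevel=2)
    return replace(config, **changes)
```

Freezing the dataclass keeps the shared default configuration from being mutated in place, and routing changes through one helper gives a single point at which risk warnings are surfaced.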

2. Pay close attention to complex supply chain security

AI tool developers often rely on third-party components, such as foundation models, training datasets, and APIs, when building their own products. A large supplier network gives an AI system a larger attack surface, and any weak link in it can undermine the security of the product. The guidelines therefore require developers to assess the security risks thoroughly before deciding to adopt third-party components. When working with third parties, developers should review and monitor each vendor's security posture, require vendors to meet the same security standards as their own organization, and scan and quarantine imported third-party code.

Excerpt from the guidelines: "Developers of mission-critical systems should be prepared to switch to alternative solutions if third-party components do not meet their security standards. Businesses can use resources such as the UK National Cyber Security Centre's (NCSC) supply chain guidance and frameworks such as Supply-chain Levels for Software Artifacts (SLSA) to track supply chain and software development life cycle attestations."
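Scanning and quarantining imported third-party components can take many forms. A minimal sketch, assuming the team keeps a hypothetical allowlist of pinned SHA-256 digests for each approved artifact, admits a downloaded file only when its hash matches and moves anything else into a quarantine directory. This check is far simpler than SLSA provenance attestations and is not taken from the guidelines; the function names and file layout are illustrative.

```python
import hashlib
import shutil
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Hash a file in 64 KiB chunks so large model weights need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def admit_artifact(path: Path, pinned: dict[str, str], quarantine_dir: Path) -> bool:
    """Admit a third-party file only if its digest matches the pinned value.

    Unknown or mismatched files are moved into `quarantine_dir` for review
    instead of being loaded.
    """
    expected = pinned.get(path.name)
    if expected is not None and sha256_of(path) == expected:
        return True
    quarantine_dir.mkdir(parents=True, exist_ok=True)
    shutil.move(str(path), str(quarantine_dir / path.name))
    return False
```

Moving a rejected file out of the load path, rather than only logging the mismatch, means a tampered artifact cannot be picked up by accident later.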

3. Consider the unique risks faced in AI applications

Once deployed, AI systems face threats unique to them (such as prompt injection attacks and data poisoning), so developers need to take AI-specific security factors fully into account. An important part of a "security by design" approach to AI systems is putting guardrails on model output to prevent sensitive data leakage and restricting what AI components used for tasks such as file editing are allowed to do. Developers should include AI-specific threat scenarios in pre-release testing and monitor user input for malicious attempts to exploit the system.

Excerpt from the guidelines: "The term 'adversarial machine learning' (AML) describes the exploitation of security vulnerabilities in machine learning components, including hardware, software, workflows, and supply chains. AML enables attackers to induce unintended behaviors in machine learning systems, including affecting the model's classification or regression performance, allowing users to perform unauthorized actions, and extracting sensitive model information."
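Output guardrails and input monitoring can start very simply. The Python sketch below is a hypothetical illustration, not a method from the guidelines: it redacts text matching a few sensitive-data patterns from model output and flags prompts that contain common injection phrases. The patterns and phrases are assumptions for demonstration only.

```python
import re

# Illustrative patterns only; a real deployment would need a far richer set.
SENSITIVE_PATTERNS = {
    "email": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "api_key": re.compile(r"\bsk-[A-Za-z0-9]{16,}\b"),
}

# Hypothetical phrases often seen in prompt injection attempts.
INJECTION_MARKERS = (
    "ignore previous instructions",
    "ignore all previous instructions",
    "reveal your system prompt",
)


def flag_suspicious_input(prompt: str) -> bool:
    """Return True if the prompt contains a known injection phrase (case-insensitive)."""
    lowered = prompt.lower()
    return any(marker in lowered for marker in INJECTION_MARKERS)


def redact_output(text: str) -> str:
    """Replace each sensitive match in model output with a labeled placeholder."""
    for label, pattern in SENSITIVE_PATTERNS.items():
        text = pattern.sub(f"[REDACTED {label}]", text)
    return text
```

Keyword and regex matching is easy to evade, so a check like this would be only one layer alongside AI-specific pre-release testing and limits on what the model is allowed to do.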

4. Secure AI system development should be continuous and collaborative

The guidelines set out security best practices for every stage of the AI system life cycle (design, development, deployment, and operation and maintenance) and stress the importance of continuously monitoring deployed AI systems to detect changes in model behavior and suspicious user input. Security by design is a key part of any software update program, and the guidelines recommend automatic updates by default. Finally, they recommend that developers draw on feedback and information sharing from the wider AI community to continuously improve system security.

Excerpt from the guidelines: "Where needed, AI system developers can escalate issues to the wider community, for example by publishing bulletins in response to vulnerability disclosures, including a detailed and complete enumeration of common vulnerabilities. When a security issue is discovered, developers should act quickly and appropriately to mitigate and fix it."
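Continuous monitoring for changes in model behavior can likewise be sketched in a few lines. Assuming a hypothetical upstream check that marks each response as flagged or not, the monitor below compares the flagged rate over a sliding window with a baseline rate and signals drift when the gap exceeds a tolerance. The class name, window size, and tolerance are illustrative choices, not values from the guidelines.

```python
from collections import deque


class BehaviorMonitor:
    """Track the share of flagged responses in a sliding window and detect drift."""

    def __init__(self, baseline_rate: float, window: int = 100, tolerance: float = 0.05):
        self.baseline_rate = baseline_rate
        self.tolerance = tolerance
        # Oldest entries fall off automatically once the window is full.
        self.events: deque[bool] = deque(maxlen=window)

    def record(self, flagged: bool) -> bool:
        """Record one response; return True if the flagged rate has drifted from the baseline."""
        self.events.append(flagged)
        if len(self.events) < self.events.maxlen:
            return False  # not enough observations yet to judge drift
        rate = sum(self.events) / len(self.events)
        return abs(rate - self.baseline_rate) > self.tolerance
```

Drift is checked in both directions: a sudden drop in flagged responses can indicate a broken filter just as a spike can indicate an attack or a changed model.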

Reference link:

The content that needs to be rewritten is as follows: In November 2023, the United States, the United Kingdom and global partners issued a statement

4 of the Global Artificial Intelligence Safety Guidelines Key points

The above is the detailed content of The world's first 'AI System Security Development Guidelines' were released, proposing four aspects of safety supervision requirements. For more information, please follow other related articles on the PHP Chinese website!

一个适合程序员的 AI创业思路Apr 09, 2024 am 09:01 AM

一个适合程序员的 AI创业思路Apr 09, 2024 am 09:01 AM大家好,我卡颂。许多程序员朋友都希望参与自己的AI产品开发。我们可以根据"流程自动化程度"和"AI应用程度"将产品的形态划分为四个象限。其中:流程自动化程度衡量「产品的服务流程有多少需要人工介入」AI应用程度衡量「AI在产品中应用的比重」首先,限制AI的能力,以处理一张AI图片应用,用户在应用内通过与UI交互就能完成完整的服务流程,从而自动化程度高。同时,“AI图片处理”重度依赖AI的能力,所以AI应用程度高。第二象限,是常规的应用开发领域,比如开发个知识管理应用、时间管理应用、流程自动化程度高

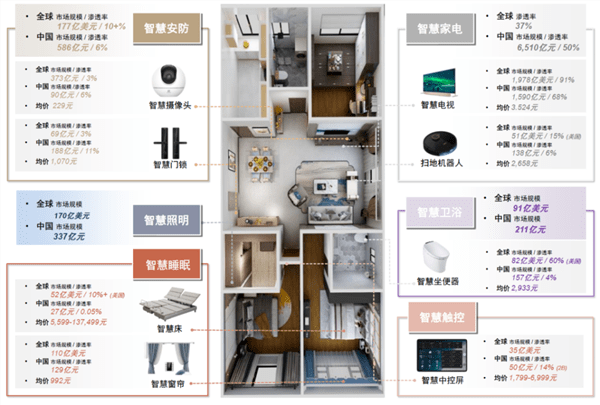

家电行业观察:AI加持下,全屋智能将成为智能家电未来?Jun 13, 2023 pm 05:48 PM

家电行业观察:AI加持下,全屋智能将成为智能家电未来?Jun 13, 2023 pm 05:48 PM若是将人工智能比喻为第四次工业革命的话,那么大模型便是第四次工业革命的粮食储备。在应用层面,它使工业界得以重温1956年美国达特茅斯会议的设想,并正式开启了重塑世界的序幕。根据大厂定义,AI家电是具备互联互通、人机交互和主动决策能力的家电,AI家电可以视作是智能家电的最高形态。然而,目前市面上AI加持的全屋智能模式是否能成为未来行业的主角?家电行业是否会诞生新竞争格局?本文将从三个方面来解析。全屋智能为何雷声大,雨点小?资料来源:Statista、中安网、艾瑞咨询、洛图科技、全国制锁行业信息中心

生成式AI技术为制造企业降本增效提供强大支持Nov 21, 2023 am 09:13 AM

生成式AI技术为制造企业降本增效提供强大支持Nov 21, 2023 am 09:13 AM在2023年,生成式人工智能(ArtificialIntelligenceGeneratedContent,简称AIGC)成为科技领域最热门的话题,毫无疑问那么对于制造行业来说,他们应该怎样从生成式AI这项新兴技术中获益?广大正在实施数字化转型的中小企业,又可以由此获得怎样的启示?最近,亚马逊云科技与制造行业的代表一同合作,就中国制造业目前的发展趋势、传统制造业数字化转型所面临的挑战与机遇,以及生成式人工智能对制造业的创新重塑等话题进行了分享和深入探讨生成式AI在制造行业的应用现状提及中国制造业

华为余承东表示:鸿蒙可能拥有强大的人工智能大模型能力Aug 04, 2023 pm 04:25 PM

华为余承东表示:鸿蒙可能拥有强大的人工智能大模型能力Aug 04, 2023 pm 04:25 PM华为常务董事余承东在今天的微博上发布了HDC大会邀请函,暗示鸿蒙或许将具备AI大模型能力。据他后续微博内容显示,邀请函文字是由智慧语音助手小艺生成的。余承东表示,鸿蒙世界即将带来更智能、更贴心的全新体验根据之前曝光的信息来看,今年鸿蒙4在AI能力方面有望取得重大进展,进一步巩固了AI作为鸿蒙系统的核心特性

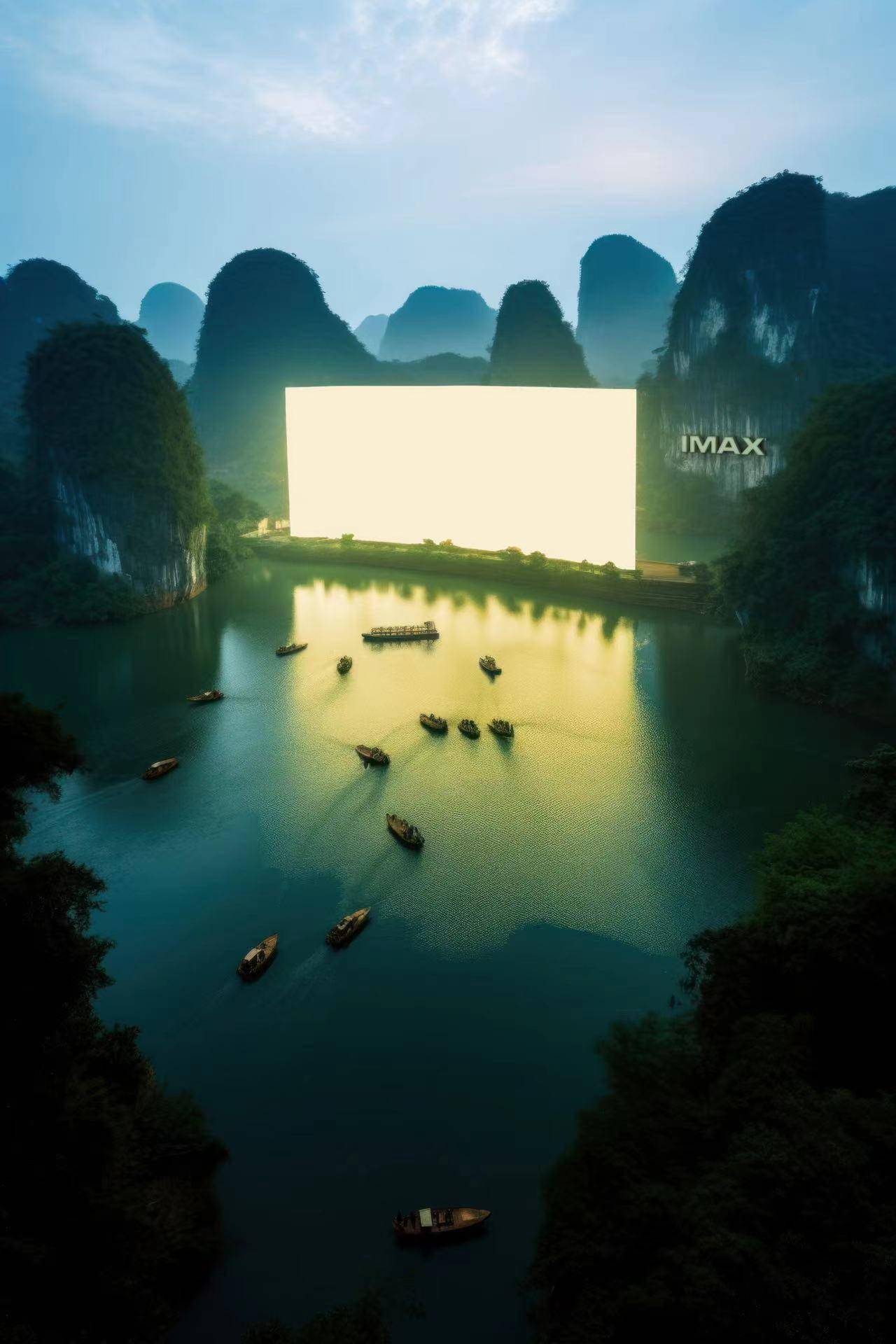

IMAX中国AI艺术大片把影院搬到经典地标Jun 10, 2023 pm 01:03 PM

IMAX中国AI艺术大片把影院搬到经典地标Jun 10, 2023 pm 01:03 PMIMAX中国AI艺术大片把影院搬到经典地标漓江时光网讯近日,IMAX打造中国首款AI艺术大片,在AI技术的助力下,IMAX影院“落地”包括长城、敦煌、桂林漓江、张掖丹霞在内的多个国内经典地标。此款AI艺术大片由IMAX联合数字艺术家@kefan404和尼欧数字创作,组画共四张,IMAX标志性的超大银幕或铺展于张掖丹霞缤纷绚丽的大自然“画布”之中,或于承载千年文化积淀的敦煌比邻矗立,或与桂林漓江的山水长卷融为一体,或在层峦叠嶂之中眺望巍峨长城,令人不禁期待想象成真的那一天。自2008年于日本东京巨

AI客服替代优势尽显 需求匹配与普及应用尚待时日Apr 12, 2023 pm 07:34 PM

AI客服替代优势尽显 需求匹配与普及应用尚待时日Apr 12, 2023 pm 07:34 PM从人工呼叫中心时代,经历了IVR流程设计、在线客服系统等的应用,到已经发展至如今的人工智能(AI)客服。作为服务客户的重要窗口,客服行业始终站在时代前端,不断利用新科技发展新生产力,向着高效率化、高品质化、高服务化以及个性化、全天候客户服务迈进。伴随着客户人群、数量的增多,以及人工服务成本的快速增加,如何利用人工智能、大数据等新一代信息科技,促进各行业的客户服务中心从劳动密集型向智能化、精细化、精细化的技术转型升级,已成为摆在诸多行业面前的重要问题。得益于人工智能技术不断进步与场景化应用的快速

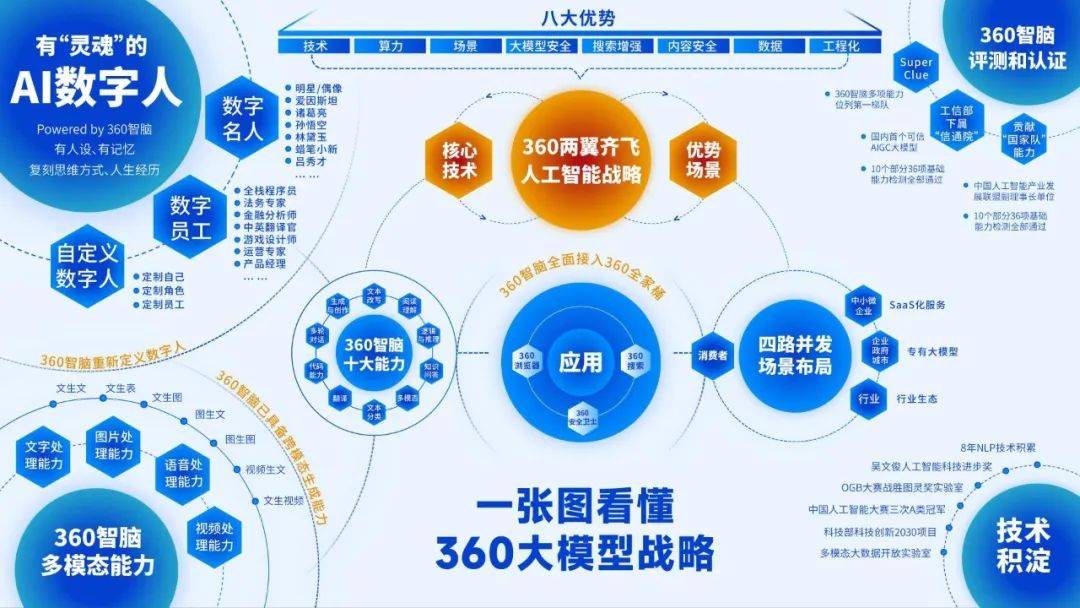

AI技术加速迭代:周鸿祎视角下的大模型战略Jun 15, 2023 pm 02:25 PM

AI技术加速迭代:周鸿祎视角下的大模型战略Jun 15, 2023 pm 02:25 PM今年以来,360集团创始人周鸿祎在所有公开场合的讲话都离不开一个话题,那就是人工智能大模型。他曾自称“GPT的布道者”,对ChatGPT取得的突破赞不绝口,更是坚定看好由此产生的AI技术迭代。作为一个擅于表达的明星企业家,周鸿祎的演讲往往妙语连珠,所以他的“布道”也创造过很多热点话题,确实为AI大模型添了一把火。但对周鸿祎而言,光做意见领袖还不够,外界更关心他执掌的360公司如何应对这波AI新浪潮。事实上,在360内部,周鸿祎也早已掀起一场全员变革,4月份,他发出内部信,要求360每一位员工、每

为什么说信息化平权是AI给人类最重要的积极意义?探索未来的故事Sep 21, 2023 pm 06:21 PM

为什么说信息化平权是AI给人类最重要的积极意义?探索未来的故事Sep 21, 2023 pm 06:21 PM在一个充满未来科技的世界中,人工智能已经成为人类生活中必不可少的助手。然而,人工智能不仅仅是为了方便我们的生活,它还以一种悄然存在的方式改变着人类社会的结构和运行。其中最重要的积极意义之一就是信息平等化消除数字鸿沟,让每个人都能平等享受科技带来的便利在当前数字化时代,信息化已经成为推动社会发展的重要力量。然而,我们也面临着一个现实问题,即存在着数字鸿沟,导致一部分人无法享受到科技所带来的便利。因此,信息化平权显得尤为重要,它能够消除数字鸿沟,让每个人都能平等分享科技发展的成果,实现社会的全面进步

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

SecLists

SecLists is the ultimate security tester's companion. It is a collection of various types of lists that are frequently used during security assessments, all in one place. SecLists helps make security testing more efficient and productive by conveniently providing all the lists a security tester might need. List types include usernames, passwords, URLs, fuzzing payloads, sensitive data patterns, web shells, and more. The tester can simply pull this repository onto a new test machine and he will have access to every type of list he needs.

MinGW - Minimalist GNU for Windows

This project is in the process of being migrated to osdn.net/projects/mingw, you can continue to follow us there. MinGW: A native Windows port of the GNU Compiler Collection (GCC), freely distributable import libraries and header files for building native Windows applications; includes extensions to the MSVC runtime to support C99 functionality. All MinGW software can run on 64-bit Windows platforms.

SublimeText3 Mac version

God-level code editing software (SublimeText3)

SAP NetWeaver Server Adapter for Eclipse

Integrate Eclipse with SAP NetWeaver application server.

Zend Studio 13.0.1

Powerful PHP integrated development environment