Technology peripherals

Technology peripherals AI

AI Ensemble methods for unsupervised learning: clustering of similarity matrices

Ensemble methods for unsupervised learning: clustering of similarity matricesEnsemble methods for unsupervised learning: clustering of similarity matrices

In machine learning, the term ensemble refers to combining multiple models in parallel. The idea is to use the wisdom of the crowd to form a better consensus on the final answer given.

In the field of supervised learning, this method has been widely studied and applied, especially in classification problems with very successful algorithms like RandomForest. A voting/weighting system is often employed to combine the output of each individual model into a more robust and consistent final output. In the world of unsupervised learning, this task becomes more difficult. First, because it encompasses the challenges of the field itself, we have no prior knowledge of the data to compare ourselves to any target. Second, because finding a suitable way to combine information from all models remains a problem, and there is no consensus on how to do this.

In this article, we discuss the best approach on this topic, namely clustering of similarity matrices.

The main idea of this method is: given a data set X, create a matrix S such that Si represents the similarity between xi and xj. This matrix is constructed based on the clustering results of several different models.

The main idea of this method is: given a data set X, create a matrix S such that Si represents the similarity between xi and xj. This matrix is constructed based on the clustering results of several different models.

Binary co-occurrence matrix

Creating a binary co-occurrence matrix between inputs is the first step in building a model

it Used to indicate whether two inputs i and j belong to the same cluster.

it Used to indicate whether two inputs i and j belong to the same cluster.

import numpy as np from scipy import sparse def build_binary_matrix( clabels ): data_len = len(clabels) matrix=np.zeros((data_len,data_len))for i in range(data_len):matrix[i,:] = clabels == clabels[i]return matrix labels = np.array( [1,1,1,2,3,3,2,4] ) build_binary_matrix(labels)

Use KMeans to construct a similarity matrix

Use KMeans to construct a similarity matrix

We have constructed a function to binarize our clustering, and now we can enter the stage of constructing the similarity matrix .

We introduce here a common method, which only involves calculating the average value between M co-occurrence matrices generated by M different models. We define it as:

When the entries fall in the same cluster, their similarity value will be close to 1, while when the entries fall in different groups, Their similarity value will be close to 0

When the entries fall in the same cluster, their similarity value will be close to 1, while when the entries fall in different groups, Their similarity value will be close to 0

We will build a similarity matrix based on the labels created by the K-Means model. Conducted using the MNIST dataset. For simplicity and efficiency, we will only use 10,000 PCA-reduced images.

from sklearn.datasets import fetch_openml from sklearn.decomposition import PCA from sklearn.cluster import MiniBatchKMeans, KMeans from sklearn.model_selection import train_test_split mnist = fetch_openml('mnist_784') X = mnist.data y = mnist.target X, _, y, _ = train_test_split(X,y, train_size=10000, stratify=y, random_state=42 ) pca = PCA(n_components=0.99) X_pca = pca.fit_transform(X)

To allow for diversity between models, each model is instantiated with a random number of clusters.

NUM_MODELS = 500 MIN_N_CLUSTERS = 2 MAX_N_CLUSTERS = 300 np.random.seed(214) model_sizes = np.random.randint(MIN_N_CLUSTERS, MAX_N_CLUSTERS+1, size=NUM_MODELS) clt_models = [KMeans(n_clusters=i, n_init=4, random_state=214) for i in model_sizes] for i, model in enumerate(clt_models):print( f"Fitting - {i+1}/{NUM_MODELS}" )model.fit(X_pca)

The following function is to create a similarity matrix

def build_similarity_matrix( models_labels ):n_runs, n_data = models_labels.shape[0], models_labels.shape[1] sim_matrix = np.zeros( (n_data, n_data) ) for i in range(n_runs):sim_matrix += build_binary_matrix( models_labels[i,:] ) sim_matrix = sim_matrix/n_runs return sim_matrix

Call this function:

models_labels = np.array([ model.labels_ for model in clt_models ]) sim_matrix = build_similarity_matrix(models_labels)

The final result is as follows:

The information from the similarity matrix can still be post-processed before the last step, such as applying logarithmic, polynomial, etc. transformations.

The information from the similarity matrix can still be post-processed before the last step, such as applying logarithmic, polynomial, etc. transformations.

In our case, we will keep the original intention unchanged and rewrite

Pos_sim_matrix = sim_matrix

Clustering the similarity matrix

The similarity matrix is a A way to represent the knowledge built by the collaboration of all clustering models.

We can use it to visually see which entries are more likely to belong to the same cluster and which ones do not. However, this information still needs to be converted into actual clusters. This is done by using a clustering algorithm that can receive a similarity matrix as a parameter. Here we use SpectralClustering.

from sklearn.cluster import SpectralClustering spec_clt = SpectralClustering(n_clusters=10, affinity='precomputed',n_init=5, random_state=214) final_labels = spec_clt.fit_predict(pos_sim_matrix)

Comparison with the standard KMeans model

Let’s compare it with KMeans to confirm whether our method is effective.

We will use NMI, ARI, cluster purity and class purity indicators to evaluate the standard KMeans model and compare with our ensemble model. Additionally, we will plot a contingency matrix to visualize which categories belong in each cluster

from seaborn import heatmap import matplotlib.pyplot as plt def data_contingency_matrix(true_labels, pred_labels): fig, (ax) = plt.subplots(1, 1, figsize=(8,8)) n_clusters = len(np.unique(pred_labels))n_classes = len(np.unique(true_labels))label_names = np.unique(true_labels)label_names.sort() contingency_matrix = np.zeros( (n_classes, n_clusters) ) for i, true_label in enumerate(label_names):for j in range(n_clusters):contingency_matrix[i, j] = np.sum(np.logical_and(pred_labels==j, true_labels==true_label)) heatmap(contingency_matrix.astype(int), ax=ax,annot=True, annot_kws={"fontsize":14}, fmt='d') ax.set_xlabel("Clusters", fontsize=18)ax.set_xticks( [i+0.5 for i in range(n_clusters)] )ax.set_xticklabels([i for i in range(n_clusters)], fontsize=14) ax.set_ylabel("Original classes", fontsize=18)ax.set_yticks( [i+0.5 for i in range(n_classes)] )ax.set_yticklabels(label_names, fontsize=14, va="center") ax.set_title("Contingency Matrix\n", ha='center', fontsize=20)

from sklearn.metrics import normalized_mutual_info_score, adjusted_rand_score def purity( true_labels, pred_labels ): n_clusters = len(np.unique(pred_labels))n_classes = len(np.unique(true_labels))label_names = np.unique(true_labels) purity_vector = np.zeros( (n_classes) )contingency_matrix = np.zeros( (n_classes, n_clusters) ) for i, true_label in enumerate(label_names):for j in range(n_clusters):contingency_matrix[i, j] = np.sum(np.logical_and(pred_labels==j, true_labels==true_label)) purity_vector = np.max(contingency_matrix, axis=1)/np.sum(contingency_matrix, axis=1) print( f"Mean Class Purity - {np.mean(purity_vector):.2f}" ) for i, true_label in enumerate(label_names):print( f" {true_label} - {purity_vector[i]:.2f}" ) cluster_purity_vector = np.zeros( (n_clusters) )cluster_purity_vector = np.max(contingency_matrix, axis=0)/np.sum(contingency_matrix, axis=0) print( f"Mean Cluster Purity - {np.mean(cluster_purity_vector):.2f}" ) for i in range(n_clusters):print( f" {i} - {cluster_purity_vector[i]:.2f}" ) kmeans_model = KMeans(10, n_init=50, random_state=214) km_labels = kmeans_model.fit_predict(X_pca) data_contingency_matrix(y, km_labels) print( "Single KMeans NMI - ", normalized_mutual_info_score(y, km_labels) ) print( "Single KMeans ARI - ", adjusted_rand_score(y, km_labels) ) purity(y, km_labels)

data_contingency_matrix(y, final_labels) print( "Ensamble NMI - ", normalized_mutual_info_score(y, final_labels) ) print( "Ensamble ARI - ", adjusted_rand_score(y, final_labels) ) purity(y, final_labels)

By observing the above values, it can be clearly seen that the Ensemble method can effectively improve the quality of clustering. At the same time, more consistent behavior can also be observed in the contingency matrix, with better distribution categories and less "noise"

The above is the detailed content of Ensemble methods for unsupervised learning: clustering of similarity matrices. For more information, please follow other related articles on the PHP Chinese website!

AI Game Development Enters Its Agentic Era With Upheaval's Dreamer PortalMay 02, 2025 am 11:17 AM

AI Game Development Enters Its Agentic Era With Upheaval's Dreamer PortalMay 02, 2025 am 11:17 AMUpheaval Games: Revolutionizing Game Development with AI Agents Upheaval, a game development studio comprised of veterans from industry giants like Blizzard and Obsidian, is poised to revolutionize game creation with its innovative AI-powered platfor

Uber Wants To Be Your Robotaxi Shop, Will Providers Let Them?May 02, 2025 am 11:16 AM

Uber Wants To Be Your Robotaxi Shop, Will Providers Let Them?May 02, 2025 am 11:16 AMUber's RoboTaxi Strategy: A Ride-Hail Ecosystem for Autonomous Vehicles At the recent Curbivore conference, Uber's Richard Willder unveiled their strategy to become the ride-hail platform for robotaxi providers. Leveraging their dominant position in

AI Agents Playing Video Games Will Transform Future RobotsMay 02, 2025 am 11:15 AM

AI Agents Playing Video Games Will Transform Future RobotsMay 02, 2025 am 11:15 AMVideo games are proving to be invaluable testing grounds for cutting-edge AI research, particularly in the development of autonomous agents and real-world robots, even potentially contributing to the quest for Artificial General Intelligence (AGI). A

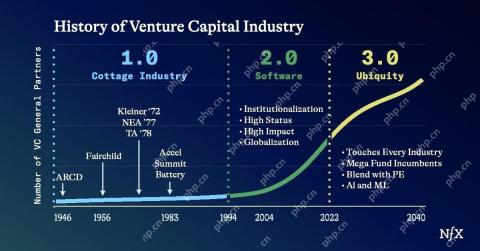

The Startup Industrial Complex, VC 3.0, And James Currier's ManifestoMay 02, 2025 am 11:14 AM

The Startup Industrial Complex, VC 3.0, And James Currier's ManifestoMay 02, 2025 am 11:14 AMThe impact of the evolving venture capital landscape is evident in the media, financial reports, and everyday conversations. However, the specific consequences for investors, startups, and funds are often overlooked. Venture Capital 3.0: A Paradigm

Adobe Updates Creative Cloud And Firefly At Adobe MAX London 2025May 02, 2025 am 11:13 AM

Adobe Updates Creative Cloud And Firefly At Adobe MAX London 2025May 02, 2025 am 11:13 AMAdobe MAX London 2025 delivered significant updates to Creative Cloud and Firefly, reflecting a strategic shift towards accessibility and generative AI. This analysis incorporates insights from pre-event briefings with Adobe leadership. (Note: Adob

Everything Meta Announced At LlamaConMay 02, 2025 am 11:12 AM

Everything Meta Announced At LlamaConMay 02, 2025 am 11:12 AMMeta's LlamaCon announcements showcase a comprehensive AI strategy designed to compete directly with closed AI systems like OpenAI's, while simultaneously creating new revenue streams for its open-source models. This multifaceted approach targets bo

The Brewing Controversy Over The Proposition That AI Is Nothing More Than Just Normal TechnologyMay 02, 2025 am 11:10 AM

The Brewing Controversy Over The Proposition That AI Is Nothing More Than Just Normal TechnologyMay 02, 2025 am 11:10 AMThere are serious differences in the field of artificial intelligence on this conclusion. Some insist that it is time to expose the "emperor's new clothes", while others strongly oppose the idea that artificial intelligence is just ordinary technology. Let's discuss it. An analysis of this innovative AI breakthrough is part of my ongoing Forbes column that covers the latest advancements in the field of AI, including identifying and explaining a variety of influential AI complexities (click here to view the link). Artificial intelligence as a common technology First, some basic knowledge is needed to lay the foundation for this important discussion. There is currently a large amount of research dedicated to further developing artificial intelligence. The overall goal is to achieve artificial general intelligence (AGI) and even possible artificial super intelligence (AS)

Model Citizens, Why AI Value Is The Next Business YardstickMay 02, 2025 am 11:09 AM

Model Citizens, Why AI Value Is The Next Business YardstickMay 02, 2025 am 11:09 AMThe effectiveness of a company's AI model is now a key performance indicator. Since the AI boom, generative AI has been used for everything from composing birthday invitations to writing software code. This has led to a proliferation of language mod

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Atom editor mac version download

The most popular open source editor

MinGW - Minimalist GNU for Windows

This project is in the process of being migrated to osdn.net/projects/mingw, you can continue to follow us there. MinGW: A native Windows port of the GNU Compiler Collection (GCC), freely distributable import libraries and header files for building native Windows applications; includes extensions to the MSVC runtime to support C99 functionality. All MinGW software can run on 64-bit Windows platforms.

Zend Studio 13.0.1

Powerful PHP integrated development environment

SublimeText3 Chinese version

Chinese version, very easy to use

Safe Exam Browser

Safe Exam Browser is a secure browser environment for taking online exams securely. This software turns any computer into a secure workstation. It controls access to any utility and prevents students from using unauthorized resources.