With the advent of the big data era, data processing and analysis are becoming more and more important. In the field of data processing and analysis, MongoDB, as a popular NoSQL database, is widely used in real-time data processing and analysis. This article will start from actual experience and summarize some experiences in real-time data processing and analysis based on MongoDB.

1. Data model design

When using MongoDB for real-time data processing and analysis, reasonable data model design is crucial. First, you need to analyze the business requirements and understand the data types and structures that need to be processed and analyzed. Then, design an appropriate data model based on the characteristics of the data and query requirements. When designing a data model, you need to consider the relationship and hierarchical structure of the data, and choose appropriate data nesting and data indexing methods.

2. Data import and synchronization

Real-time data processing and analysis require real-time acquisition and import of data. When using MongoDB for data import and synchronization, you can consider the following methods:

- Use MongoDB's own import tool: MongoDB provides mongodump and mongorestore commands to easily import and back up data.

- Use ETL tools: ETL (Extract-Transform-Load) tools can be used to extract data from other data sources, convert the data into MongoDB format, and then import it into MongoDB.

- Use real-time data synchronization tools: Real-time data synchronization tools can synchronize data to MongoDB in real time to ensure the accuracy and timeliness of data.

3. Establishing indexes

When using MongoDB for real-time data processing and analysis, it is very important to establish appropriate indexes. Indexes can improve query efficiency and speed up data reading and analysis. When building an index, it is necessary to select appropriate index types and index fields based on query requirements and data models to avoid excessive indexing and unnecessary indexing to improve system performance.

4. Utilizing replication and sharding

When the amount of data increases, a single MongoDB may not be able to meet the needs of real-time data processing and analysis. At this time, you can consider using MongoDB's replication and sharding mechanism to expand the performance and capacity of the database.

- Replication: MongoDB’s replication mechanism can achieve redundant backup and high availability of data. By configuring multiple replica sets, data can be automatically copied to multiple nodes, and data reading and writing can be separated to improve system availability and performance.

- Sharding: MongoDB’s sharding mechanism can achieve horizontal expansion of data. By spreading data across multiple shards, the system's concurrent processing capabilities and storage capacity can be improved. When sharding, it is necessary to reasonably divide the sharding keys and intervals of the data to avoid data skew and over-sharding.

5. Optimizing query and aggregation

When using MongoDB for real-time data processing and analysis, it is necessary to optimize query and aggregation operations to improve the response speed and performance of the system.

- Use the appropriate query method: Choose the appropriate query method according to the data model and query requirements. You can use basic CRUD operations or more complex query operations, such as querying nested hierarchical data or using geographical location queries.

- Use the aggregation framework: MongoDB provides a powerful aggregation framework that can perform complex data aggregation and analysis operations. Proper use of the aggregation framework can reduce the amount of data transmission and calculation, and improve query efficiency and performance.

6. Monitoring and Optimization

Real-time data processing and analysis systems require regular monitoring and optimization to maintain system stability and performance.

- Monitor system performance: By monitoring the system's CPU, memory, network and other indicators, you can understand the system's load and performance bottlenecks, and adjust system configurations and parameters in a timely manner to improve system stability and performance.

- Optimize query plan: Regularly analyze the execution plan of query and aggregation operations to find out performance bottlenecks and optimization space, and adjust indexes, rewrite query statements, etc. to improve query efficiency and response speed.

- Data compression and archiving: For historical data and cold data, data compression and archiving can be performed to save storage space and improve system performance.

Summary:

Real-time data processing and analysis based on MongoDB requires reasonable data model design, data import and synchronization, index establishment, replication and sharding, query and aggregation optimization, and regular monitoring and optimization. By summarizing these experiences, MongoDB can be better applied for real-time data processing and analysis, and the efficiency and accuracy of data processing and analysis can be improved.

The above is the detailed content of Summary of experience in real-time data processing and analysis based on MongoDB. For more information, please follow other related articles on the PHP Chinese website!

一文详解Python数据分析模块Numpy切片、索引和广播Apr 10, 2023 pm 02:56 PM

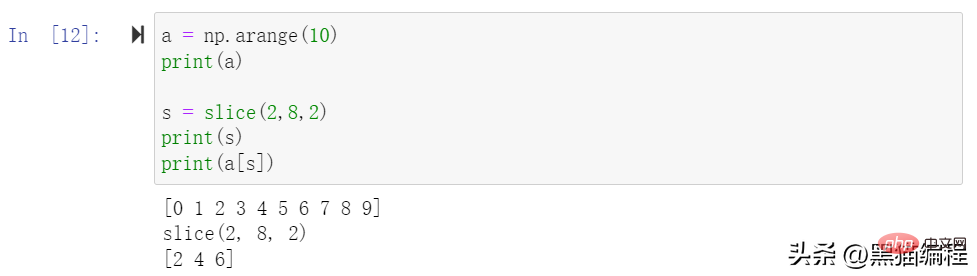

一文详解Python数据分析模块Numpy切片、索引和广播Apr 10, 2023 pm 02:56 PMNumpy切片和索引ndarray对象的内容可以通过索引或切片来访问和修改,与 Python 中 list 的切片操作一样。ndarray 数组可以基于 0 ~ n-1 的下标进行索引,切片对象可以通过内置的 slice 函数,并设置 start, stop 及 step 参数进行,从原数组中切割出一个新数组。切片还可以包括省略号 …,来使选择元组的长度与数组的维度相同。 如果在行位置使用省略号,它将返回包含行中元素的 ndarray。高级索引整数数组索引以下实例获取数组中 (0,0),(1,1

Python中的机器学习是什么?Jun 04, 2023 am 08:52 AM

Python中的机器学习是什么?Jun 04, 2023 am 08:52 AM近年来,机器学习(MachineLearning)成为了IT行业中最热门的话题之一,Python作为一种高效的编程语言,已经成为了许多机器学习实践者的首选。本文将会介绍Python中机器学习的概念、应用和实现。一、机器学习概念机器学习是一种让机器通过对数据的分析、学习和优化,自动改进性能的技术。其主要目的是让机器能够在数据中发现存在的规律,从而获得对未来

如何利用 Go 语言进行数据分析和机器学习?Jun 10, 2023 am 09:21 AM

如何利用 Go 语言进行数据分析和机器学习?Jun 10, 2023 am 09:21 AM随着互联网技术的发展和大数据的普及,越来越多的公司和机构开始关注数据分析和机器学习。现在,有许多编程语言可以用于数据科学,其中Go语言也逐渐成为了一种不错的选择。虽然Go语言在数据科学上的应用不如Python和R那么广泛,但是它具有高效、并发和易于部署等特点,因此在某些场景中表现得非常出色。本文将介绍如何利用Go语言进行数据分析和机器学习

数据挖掘和数据分析的区别是什么?Dec 07, 2020 pm 03:16 PM

数据挖掘和数据分析的区别是什么?Dec 07, 2020 pm 03:16 PM区别:1、“数据分析”得出的结论是人的智力活动结果,而“数据挖掘”得出的结论是机器从学习集【或训练集、样本集】发现的知识规则;2、“数据分析”不能建立数学模型,需要人工建模,而“数据挖掘”直接完成了数学建模。

Python量化交易实战:获取股票数据并做分析处理Apr 15, 2023 pm 09:13 PM

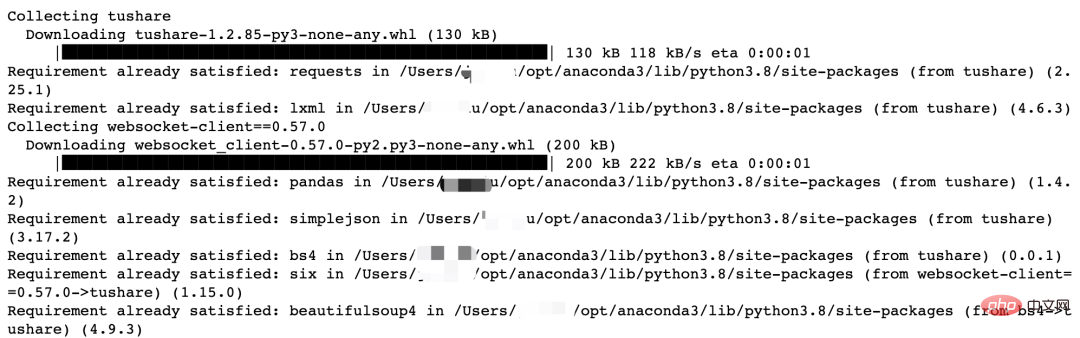

Python量化交易实战:获取股票数据并做分析处理Apr 15, 2023 pm 09:13 PM量化交易(也称自动化交易)是一种应用数学模型帮助投资者进行判断,并且根据计算机程序发送的指令进行交易的投资方式,它极大地减少了投资者情绪波动的影响。量化交易的主要优势如下:快速检测客观、理性自动化量化交易的核心是筛选策略,策略也是依靠数学或物理模型来创造,把数学语言变成计算机语言。量化交易的流程是从数据的获取到数据的分析、处理。数据获取数据分析工作的第一步就是获取数据,也就是数据采集。获取数据的方式有很多,一般来讲,数据来源主要分为两大类:外部来源(外部购买、网络爬取、免费开源数据等)和内部来源

MySQL中的大数据分析技巧Jun 14, 2023 pm 09:53 PM

MySQL中的大数据分析技巧Jun 14, 2023 pm 09:53 PM随着大数据时代的到来,越来越多的企业和组织开始利用大数据分析来帮助自己更好地了解其所面对的市场和客户,以便更好地制定商业策略和决策。而在大数据分析中,MySQL数据库也是经常被使用的一种工具。本文将介绍MySQL中的大数据分析技巧,为大家提供参考。一、使用索引进行查询优化索引是MySQL中进行查询优化的重要手段之一。当我们对某个列创建了索引后,MySQL就可

为何军事人工智能初创公司近年来备受追捧Apr 13, 2023 pm 01:34 PM

为何军事人工智能初创公司近年来备受追捧Apr 13, 2023 pm 01:34 PM俄乌冲突爆发 2 周后,数据分析公司 Palantir 的首席执行官亚历山大·卡普 (Alexander Karp) 向欧洲领导人提出了一项建议。在公开信中,他表示欧洲人应该在硅谷的帮助下实现武器现代化。Karp 写道,为了让欧洲“保持足够强大以战胜外国占领的威胁”,各国需要拥抱“技术与国家之间的关系,以及寻求摆脱根深蒂固的承包商控制的破坏性公司与联邦政府部门之间的资金关系”。而军队已经开始响应这项号召。北约于 6 月 30 日宣布,它正在创建一个 10 亿美元的创新基金,将投资于早期创业公司和

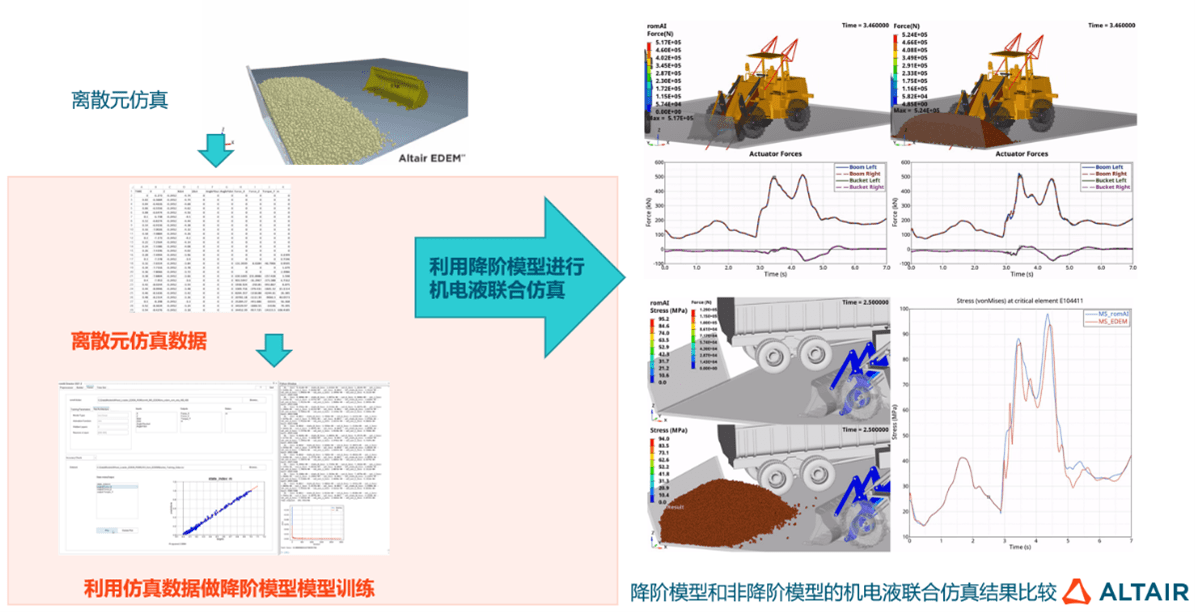

AI牵引工业软件新升级,数据分析与人工智能在探索中进化Jun 05, 2023 pm 04:04 PM

AI牵引工业软件新升级,数据分析与人工智能在探索中进化Jun 05, 2023 pm 04:04 PMCAE和AI技术双融合已成为企业研发设计环节数字化转型的重要应用趋势,但企业数字化转型绝不仅是单个环节的优化,而是全流程、全生命周期的转型升级,数据驱动只有作用于各业务环节,才能真正助力企业持续发展。数字化浪潮席卷全球,作为数字经济核心驱动,数字技术逐步成为企业发展新动能,助推企业核心竞争力进化,在此背景下,数字化转型已成为所有企业的必选项和持续发展的前提,拥抱数字经济成为企业的共同选择。但从实际情况来看,面向C端的产业如零售电商、金融等领域在数字化方面走在前列,而以制造业、能源重工等为代表的传

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

SAP NetWeaver Server Adapter for Eclipse

Integrate Eclipse with SAP NetWeaver application server.

SublimeText3 Linux new version

SublimeText3 Linux latest version

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Zend Studio 13.0.1

Powerful PHP integrated development environment

SublimeText3 Chinese version

Chinese version, very easy to use