Deep Q-learning reinforcement learning using Panda-Gym's robotic arm simulation

Reinforcement learning (RL) is a machine learning method in which an agent learns how to behave in its environment through trial and error. The agent is rewarded for actions that lead to desired outcomes and penalized for actions that do not. Over time, the agent learns to take actions that maximize its expected reward.

RL problems are typically modeled as a Markov decision process (MDP), a mathematical framework for sequential decision problems. An MDP consists of four parts:

- State: A set of possible states of the environment.

- Action: A set of actions that an agent can take.

- Transition function: A function that predicts the probability of transitioning to a new state given the current state and action.

- Reward function: A function that assigns a reward to the agent for each transition.

The goal of the agent is to learn a policy function that maps states to actions and maximizes the agent's expected return over time.
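To make "expected return over time" concrete, here is a minimal sketch that computes the discounted return of a single reward sequence. The discount factor `gamma` and the function name are assumptions introduced for illustration; the text above does not fix a particular discounting scheme.

```python
def discounted_return(rewards, gamma=0.99):
    """Sum of rewards, each discounted by gamma per elapsed time step."""
    total = 0.0
    # Work backwards so each step adds its reward to the discounted future.
    for reward in reversed(rewards):
        total = reward + gamma * total
    return total


# Three steps with reward 1 each and gamma = 0.5: 1 + 0.5 + 0.25
print(discounted_return([1.0, 1.0, 1.0], gamma=0.5))  # 1.75
```

A policy that maximizes expected return is one that maximizes the average of this quantity over the trajectories it produces.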

Deep Q-learning is a reinforcement learning algorithm that uses a deep neural network to approximate the action-value function, from which the policy is derived. The network takes the current state as input and outputs a vector of values, one for each possible action. The agent then takes the action with the highest value.

Deep Q-learning is a value-based reinforcement learning algorithm, which means it learns the value of each state-action pair. The value of a state-action pair is the expected cumulative reward the agent receives when it takes that action in that state.
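The idea of learning a value per state-action pair can be sketched with the tabular form of Q-learning. This is a simplified illustration, not the deep variant: deep Q-learning replaces the table below with a neural network. The state names, the learning rate `alpha`, and the discount `gamma` are assumptions chosen for the example.

```python
from collections import defaultdict


def greedy_action(q_values, state, actions):
    """Pick the action with the highest estimated value in this state."""
    return max(actions, key=lambda action: q_values[(state, action)])


def q_learning_update(q_values, state, action, reward, next_state, actions,
                      alpha=0.5, gamma=0.9):
    """Move Q(state, action) toward reward + gamma * max_a Q(next_state, a)."""
    best_next = max(q_values[(next_state, a)] for a in actions)
    td_target = reward + gamma * best_next
    q_values[(state, action)] += alpha * (td_target - q_values[(state, action)])
    return q_values[(state, action)]


actions = ["left", "right"]
q = defaultdict(float)  # every unseen state-action pair starts at 0.0

# One observed transition: in s0, taking "right" gave reward 1.0 and led to s1.
q_learning_update(q, "s0", "right", 1.0, "s1", actions)
print(q[("s0", "right")])               # 0.5
print(greedy_action(q, "s0", actions))  # right
```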

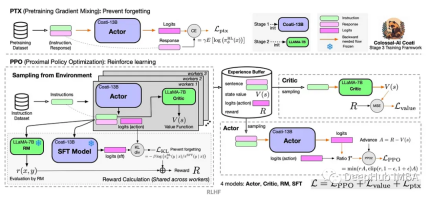

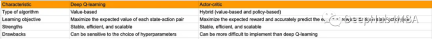

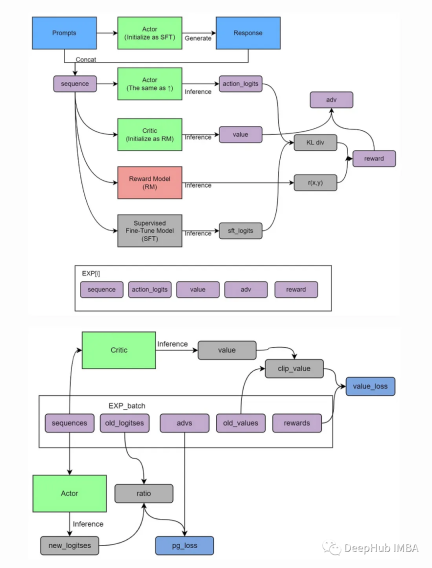

Actor-Critic is an RL algorithm that combines value-based and policy-based methods. It has two components:

- Actor: Responsible for selecting actions.

- Critic: Responsible for evaluating the actor's behavior.

The actor and the critic are trained at the same time. The actor is trained to maximize expected reward, and the critic is trained to accurately predict the expected return for each state (or state-action pair).
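One common way to couple the two components is to let the critic's one-step temporal-difference error act as the actor's learning signal. The sketch below shows only the scalar quantities involved, under the assumption of a state-value critic and a discount `gamma`; the function names are hypothetical, and a real implementation would compute these losses over batches with an autodiff library.

```python
import math


def td_advantage(reward, value_s, value_next, done, gamma=0.99):
    """One-step advantage estimate: r + gamma * V(s') - V(s)."""
    target = reward + (0.0 if done else gamma * value_next)
    return target - value_s


def critic_loss(advantage):
    """The critic is trained to shrink the squared TD error."""
    return advantage ** 2


def actor_loss(log_prob, advantage):
    """The actor raises the log-probability of actions with positive advantage."""
    return -log_prob * advantage


adv = td_advantage(reward=1.0, value_s=0.5, value_next=2.0, done=False, gamma=0.5)
print(adv)                             # 1.5
print(critic_loss(adv))                # 2.25
print(actor_loss(math.log(0.5), adv))  # positive: minimizing it raises the action's probability
```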

The Actor-Critic algorithm has several advantages over other reinforcement learning algorithms. First, it tends to be more stable, which means training is less likely to diverge. Second, it tends to be more efficient, which means it can learn faster. Third, it scales better and can be applied to problems with large state and action spaces.

The table below summarizes the main differences between Deep Q-learning and Actor-Critic:

Advantages of Actor-Critic (A2C)

Actor-Critic is a popular reinforcement learning architecture that combines policy-based and value-based approaches. It has several advantages that make it a strong choice for a variety of reinforcement learning tasks:

1. Low variance

Compared to traditional policy gradient methods, A2C usually has lower variance during training. This is because A2C uses both the policy gradient and the value function, and uses the value function to reduce the variance of the gradient estimate. Low variance means that the training process is more stable and can converge to a better policy faster.
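The variance reduction can be checked exactly on a toy problem. The sketch below is an illustration under stated assumptions, not A2C itself: a two-action bandit with made-up rewards, a sigmoid policy with a single parameter, and the expected reward used as the baseline that a value function would supply. It enumerates both actions instead of sampling, so the numbers are exact.

```python
def gradient_stats(probs, rewards, scores, baseline=0.0):
    """Exact mean and variance of the estimator (reward - baseline) * score."""
    samples = [(r - baseline) * s for r, s in zip(rewards, scores)]
    mean = sum(p * x for p, x in zip(probs, samples))
    variance = sum(p * (x - mean) ** 2 for p, x in zip(probs, samples))
    return mean, variance


p = 0.5                  # probability of action 0 under the sigmoid policy
probs = [p, 1 - p]
rewards = [10.0, 11.0]   # hypothetical rewards for action 0 and action 1
scores = [1 - p, -p]     # d/d(theta) of log pi(a) for each action

plain_mean, plain_var = gradient_stats(probs, rewards, scores)
value_baseline = sum(pr * r for pr, r in zip(probs, rewards))  # 10.5
base_mean, base_var = gradient_stats(probs, rewards, scores, value_baseline)

print(plain_mean, plain_var)  # -0.25 27.5625
print(base_mean, base_var)    # -0.25 0.0
```

Both estimators have the same mean, so the baseline does not change the direction of the update; only the spread around that mean shrinks.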

2. Faster learning speed

Because of its low variance, A2C can usually learn a good policy faster. This is especially important for tasks that require extensive simulation, as faster learning saves valuable time and computing resources.

3. Combining policy and value function

One of the distinctive features of A2C is that it learns policy and value function simultaneously. This combination enables the agent to better understand the correlation between environment and actions, thereby better guiding policy improvements. The existence of the value function also helps reduce errors in policy optimization and improve training efficiency.

4. Supports continuous and discrete action spaces

A2C can adapt to different types of action spaces, including continuous and discrete actions, which makes it very versatile. It can be applied to a variety of tasks, from robot control to gameplay optimization.

5. Parallel training

A2C can be easily parallelized to take full advantage of multi-core processors and distributed computing resources. This means more empirical data can be collected in less time, thus improving training efficiency.

Although Actor-Critic methods have these advantages, they also face some challenges, such as hyperparameter tuning and potential instability in training. However, with appropriate tuning and, in off-policy variants, techniques such as experience replay and target networks, these challenges can be mitigated to a large extent, making Actor-Critic a valuable approach in reinforcement learning.

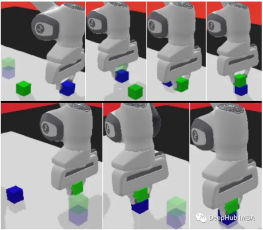

panda-gym

panda-gym is built on the PyBullet engine and wraps six tasks around the Panda robotic arm: reach, push, slide, pick and place, stack, and flip. The tasks are mainly inspired by OpenAI Fetch.

The following code uses panda-gym as the example environment.

1. Install the libraries

First, install the system packages and Python libraries that the reinforcement learning environment needs:

    !apt-get install -y \
        libgl1-mesa-dev \
        libgl1-mesa-glx \
        libglew-dev \
        xvfb \
        libosmesa6-dev \
        software-properties-common \
        patchelf

    !pip install \
        free-mujoco-py \
        pytorch-lightning \
        optuna \
        pyvirtualdisplay \
        PyOpenGL \
        PyOpenGL-accelerate \
        stable-baselines3[extra] \
        gymnasium \
        huggingface_sb3 \
        huggingface_hub \
        panda_gym

2. Import the libraries

Import the packages used in the rest of the walkthrough:

    import os

    import gymnasium as gym
    import panda_gym

    from huggingface_sb3 import load_from_hub, package_to_hub

    from stable_baselines3 import A2C
    from stable_baselines3.common.evaluation import evaluate_policy
    from stable_baselines3.common.vec_env import DummyVecEnv, VecNormalize
    from stable_baselines3.common.env_util import make_vec_env

3. Create the environment

Create the environment and inspect its action space:

    env_id = "PandaReachDense-v3"

    # Create the env
    env = gym.make(env_id)

    # Get the state space and action space
    # (for this Dict observation space, .shape is None)
    s_size = env.observation_space.shape
    a_size = env.action_space

    print("\n _____ACTION SPACE_____ \n")
    print("The Action Space is: ", a_size)
    print("Action Space Sample", env.action_space.sample())  # Take a random action

4. Normalize observations and rewards

A good practice in reinforcement learning is to normalize the input features. The VecNormalize wrapper computes a running mean and standard deviation of the input features. Rewards are normalized as well by setting norm_reward=True.

    env = make_vec_env(env_id, n_envs=4)

    env = VecNormalize(env, norm_obs=True, norm_reward=True, clip_obs=10.)
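To show what "running mean and standard deviation" means in practice, here is a minimal scalar sketch using Welford's online algorithm. It is not the Stable-Baselines3 implementation: the class name, the `epsilon` term, and the one-value-at-a-time update are assumptions for illustration. Only the clipping to 10 mirrors the `clip_obs=10.` argument above.

```python
import math


class RunningNormalizer:
    """Tracks a running mean and variance, then standardizes and clips values."""

    def __init__(self, clip=10.0, epsilon=1e-8):
        self.count = 0
        self.mean = 0.0
        self.m2 = 0.0        # running sum of squared deviations from the mean
        self.clip = clip
        self.epsilon = epsilon

    def update(self, x):
        # Welford's update: numerically stable and needs no stored history.
        self.count += 1
        delta = x - self.mean
        self.mean += delta / self.count
        self.m2 += delta * (x - self.mean)

    @property
    def variance(self):
        return self.m2 / self.count if self.count else 1.0

    def normalize(self, x):
        z = (x - self.mean) / math.sqrt(self.variance + self.epsilon)
        return max(-self.clip, min(self.clip, z))


normalizer = RunningNormalizer()
for value in [2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0]:
    normalizer.update(value)

print(normalizer.mean, normalizer.variance)  # close to 5.0 and 4.0
print(normalizer.normalize(9.0))             # close to 2.0
print(normalizer.normalize(1000.0))          # clipped to 10.0
```

Because the statistics keep changing during training, they have to be saved with the model and frozen at evaluation time, which is what the later steps do.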

5. Create the A2C model

We use the A2C agent implemented by the Stable-Baselines3 team. The MultiInputPolicy is used because panda-gym observations are dictionaries.

    model = A2C(policy="MultiInputPolicy", env=env, verbose=1)

6. Train the A2C model

Train for one million timesteps, then save both the model and the VecNormalize statistics:

    model.learn(1_000_000)

    # Save the model and VecNormalize statistics when saving the agent
    model.save("a2c-PandaReachDense-v3")
    env.save("vec_normalize.pkl")

7. Evaluate the agent

Load the saved statistics and the agent, then evaluate the policy:

    from stable_baselines3.common.vec_env import DummyVecEnv, VecNormalize

    # Load the saved statistics
    eval_env = DummyVecEnv([lambda: gym.make("PandaReachDense-v3")])
    eval_env = VecNormalize.load("vec_normalize.pkl", eval_env)

    # We need to override the render_mode
    eval_env.render_mode = "rgb_array"

    # Do not update the statistics at test time
    eval_env.training = False
    # Reward normalization is not needed at test time
    eval_env.norm_reward = False

    # Load the agent
    model = A2C.load("a2c-PandaReachDense-v3")

    mean_reward, std_reward = evaluate_policy(model, eval_env)

    print(f"Mean reward = {mean_reward:.2f} +/- {std_reward:.2f}")

Summary

panda-gym combines the Panda robotic arm with the Gym interface, which makes it easy to run reinforcement learning experiments on a robotic arm locally.

In the Actor-Critic architecture, the agent learns to make incremental improvements at each time step. This fits the dense reward used here, which gives feedback at every step, in contrast to a sparse reward function, in which the result is binary. That makes the Actor-Critic method particularly suitable for such tasks.
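The difference between the two reward styles can be sketched for a reach task as follows. This is an illustration rather than panda-gym's own code: the function name is hypothetical and the 0.05 distance threshold is an assumed value.

```python
import math


def reach_reward(achieved, desired, reward_type="dense", distance_threshold=0.05):
    """Reward for moving the end effector from `achieved` toward `desired`."""
    distance = math.dist(achieved, desired)
    if reward_type == "sparse":
        # Binary outcome: 0 when close enough to the target, -1 otherwise.
        return 0.0 if distance <= distance_threshold else -1.0
    # Dense: the penalty shrinks smoothly as the arm approaches the target.
    return -distance


target = (0.3, 0.4, 0.0)
print(reach_reward((0.0, 0.0, 0.0), target))                        # about -0.5
print(reach_reward((0.0, 0.0, 0.0), target, reward_type="sparse"))  # -1.0
print(reach_reward((0.3, 0.4, 0.01), target, reward_type="sparse")) # 0.0
```

With the dense form, every small move toward the target changes the reward, so each step carries a learning signal.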

By combining policy learning and value estimation, the robot agent is able to manipulate the robotic arm's end effector to accurately reach the designated target position. This not only provides practical solutions for tasks such as robot control, but also has the potential to transform a variety of fields that require agile and informed decision-making.

#

The above is the detailed content of Deep Q-learning reinforcement learning using Panda-Gym's robotic arm simulation. For more information, please follow other related articles on the PHP Chinese website!

解读CRISP-ML(Q):机器学习生命周期流程Apr 08, 2023 pm 01:21 PM

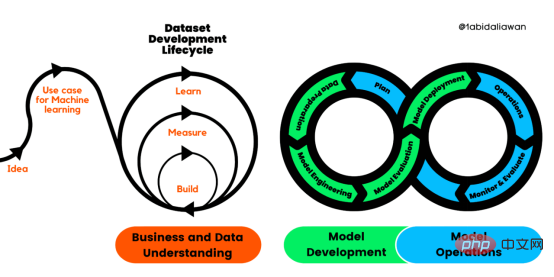

解读CRISP-ML(Q):机器学习生命周期流程Apr 08, 2023 pm 01:21 PM译者 | 布加迪审校 | 孙淑娟目前,没有用于构建和管理机器学习(ML)应用程序的标准实践。机器学习项目组织得不好,缺乏可重复性,而且从长远来看容易彻底失败。因此,我们需要一套流程来帮助自己在整个机器学习生命周期中保持质量、可持续性、稳健性和成本管理。图1. 机器学习开发生命周期流程使用质量保证方法开发机器学习应用程序的跨行业标准流程(CRISP-ML(Q))是CRISP-DM的升级版,以确保机器学习产品的质量。CRISP-ML(Q)有六个单独的阶段:1. 业务和数据理解2. 数据准备3. 模型

2023年机器学习的十大概念和技术Apr 04, 2023 pm 12:30 PM

2023年机器学习的十大概念和技术Apr 04, 2023 pm 12:30 PM机器学习是一个不断发展的学科,一直在创造新的想法和技术。本文罗列了2023年机器学习的十大概念和技术。 本文罗列了2023年机器学习的十大概念和技术。2023年机器学习的十大概念和技术是一个教计算机从数据中学习的过程,无需明确的编程。机器学习是一个不断发展的学科,一直在创造新的想法和技术。为了保持领先,数据科学家应该关注其中一些网站,以跟上最新的发展。这将有助于了解机器学习中的技术如何在实践中使用,并为自己的业务或工作领域中的可能应用提供想法。2023年机器学习的十大概念和技术:1. 深度神经网

基于因果森林算法的决策定位应用Apr 08, 2023 am 11:21 AM

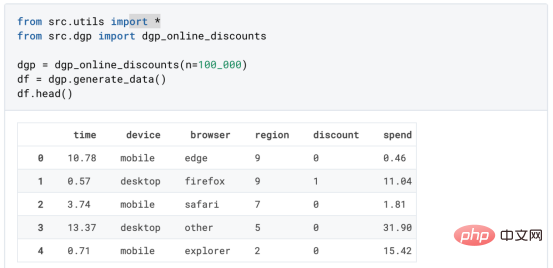

基于因果森林算法的决策定位应用Apr 08, 2023 am 11:21 AM译者 | 朱先忠审校 | 孙淑娟在我之前的博客中,我们已经了解了如何使用因果树来评估政策的异质处理效应。如果你还没有阅读过,我建议你在阅读本文前先读一遍,因为我们在本文中认为你已经了解了此文中的部分与本文相关的内容。为什么是异质处理效应(HTE:heterogenous treatment effects)呢?首先,对异质处理效应的估计允许我们根据它们的预期结果(疾病、公司收入、客户满意度等)选择提供处理(药物、广告、产品等)的用户(患者、用户、客户等)。换句话说,估计HTE有助于我

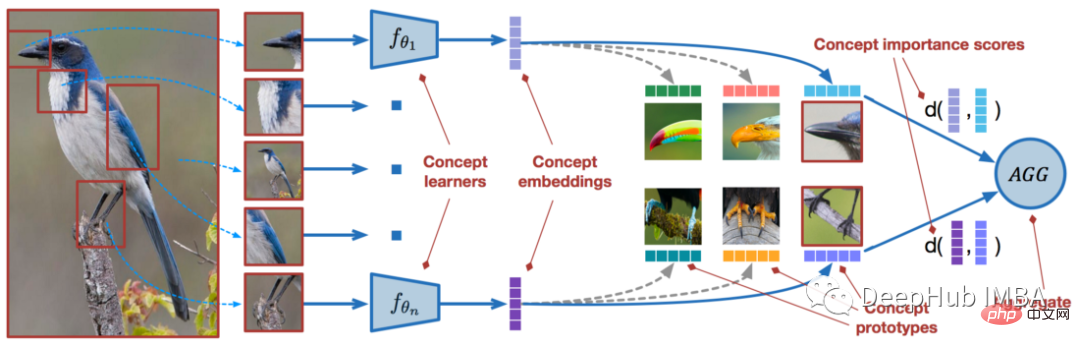

使用PyTorch进行小样本学习的图像分类Apr 09, 2023 am 10:51 AM

使用PyTorch进行小样本学习的图像分类Apr 09, 2023 am 10:51 AM近年来,基于深度学习的模型在目标检测和图像识别等任务中表现出色。像ImageNet这样具有挑战性的图像分类数据集,包含1000种不同的对象分类,现在一些模型已经超过了人类水平上。但是这些模型依赖于监督训练流程,标记训练数据的可用性对它们有重大影响,并且模型能够检测到的类别也仅限于它们接受训练的类。由于在训练过程中没有足够的标记图像用于所有类,这些模型在现实环境中可能不太有用。并且我们希望的模型能够识别它在训练期间没有见到过的类,因为几乎不可能在所有潜在对象的图像上进行训练。我们将从几个样本中学习

LazyPredict:为你选择最佳ML模型!Apr 06, 2023 pm 08:45 PM

LazyPredict:为你选择最佳ML模型!Apr 06, 2023 pm 08:45 PM本文讨论使用LazyPredict来创建简单的ML模型。LazyPredict创建机器学习模型的特点是不需要大量的代码,同时在不修改参数的情况下进行多模型拟合,从而在众多模型中选出性能最佳的一个。 摘要本文讨论使用LazyPredict来创建简单的ML模型。LazyPredict创建机器学习模型的特点是不需要大量的代码,同时在不修改参数的情况下进行多模型拟合,从而在众多模型中选出性能最佳的一个。本文包括的内容如下:简介LazyPredict模块的安装在分类模型中实施LazyPredict

Mango:基于Python环境的贝叶斯优化新方法Apr 08, 2023 pm 12:44 PM

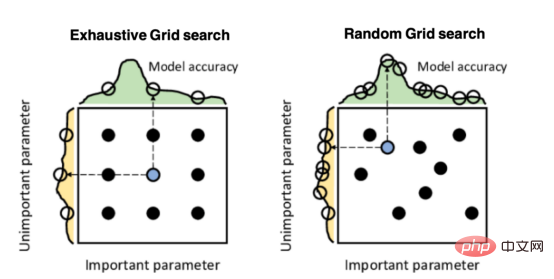

Mango:基于Python环境的贝叶斯优化新方法Apr 08, 2023 pm 12:44 PM译者 | 朱先忠审校 | 孙淑娟引言模型超参数(或模型设置)的优化可能是训练机器学习算法中最重要的一步,因为它可以找到最小化模型损失函数的最佳参数。这一步对于构建不易过拟合的泛化模型也是必不可少的。优化模型超参数的最著名技术是穷举网格搜索和随机网格搜索。在第一种方法中,搜索空间被定义为跨越每个模型超参数的域的网格。通过在网格的每个点上训练模型来获得最优超参数。尽管网格搜索非常容易实现,但它在计算上变得昂贵,尤其是当要优化的变量数量很大时。另一方面,随机网格搜索是一种更快的优化方法,可以提供更好的

超参数优化比较之网格搜索、随机搜索和贝叶斯优化Apr 04, 2023 pm 12:05 PM

超参数优化比较之网格搜索、随机搜索和贝叶斯优化Apr 04, 2023 pm 12:05 PM本文将详细介绍用来提高机器学习效果的最常见的超参数优化方法。 译者 | 朱先忠审校 | 孙淑娟简介通常,在尝试改进机器学习模型时,人们首先想到的解决方案是添加更多的训练数据。额外的数据通常是有帮助(在某些情况下除外)的,但生成高质量的数据可能非常昂贵。通过使用现有数据获得最佳模型性能,超参数优化可以节省我们的时间和资源。顾名思义,超参数优化是为机器学习模型确定最佳超参数组合以满足优化函数(即,给定研究中的数据集,最大化模型的性能)的过程。换句话说,每个模型都会提供多个有关选项的调整“按钮

人工智能自动获取知识和技能,实现自我完善的过程是什么Aug 24, 2022 am 11:57 AM

人工智能自动获取知识和技能,实现自我完善的过程是什么Aug 24, 2022 am 11:57 AM实现自我完善的过程是“机器学习”。机器学习是人工智能核心,是使计算机具有智能的根本途径;它使计算机能模拟人的学习行为,自动地通过学习来获取知识和技能,不断改善性能,实现自我完善。机器学习主要研究三方面问题:1、学习机理,人类获取知识、技能和抽象概念的天赋能力;2、学习方法,对生物学习机理进行简化的基础上,用计算的方法进行再现;3、学习系统,能够在一定程度上实现机器学习的系统。

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Safe Exam Browser

Safe Exam Browser is a secure browser environment for taking online exams securely. This software turns any computer into a secure workstation. It controls access to any utility and prevents students from using unauthorized resources.

Notepad++7.3.1

Easy-to-use and free code editor

MantisBT

Mantis is an easy-to-deploy web-based defect tracking tool designed to aid in product defect tracking. It requires PHP, MySQL and a web server. Check out our demo and hosting services.

Dreamweaver CS6

Visual web development tools

ZendStudio 13.5.1 Mac

Powerful PHP integrated development environment